Posted on 2020-7-7 13:26:29

This post was last edited by Twilight6 on 2020-7-7 13:27

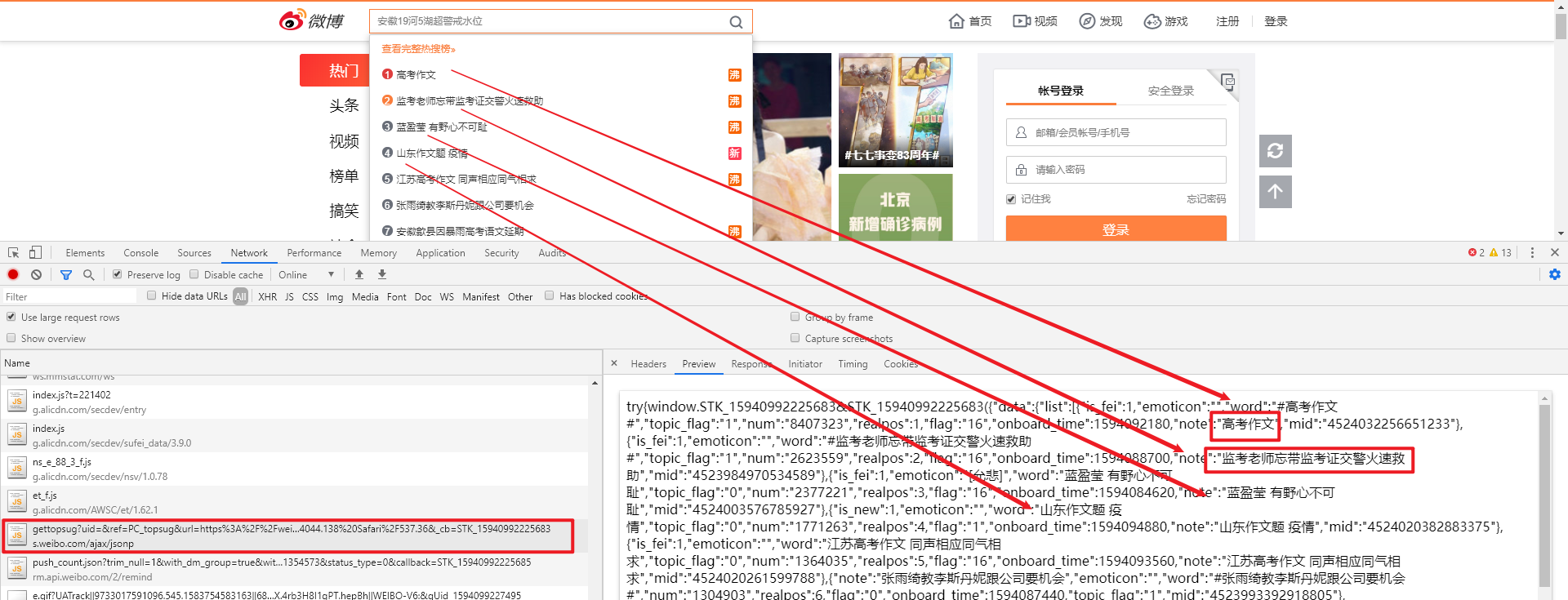

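The hot-search entries are not in the page's HTML; they are loaded from a JavaScript (JSONP) endpoint, which is the URL used in the code below. I'd advise newcomers not to scrape Weibo: well-known sites have fairly strong anti-scraping measures and aren't suitable for beginners to practice on. Try scraping some small sites instead~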
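Because the data comes back as JSONP (JSON wrapped in a JavaScript callback) rather than HTML, one alternative to a regex is to strip the callback wrapper and parse the JSON. This is a minimal sketch, assuming the body looks like `callback({...})`, possibly inside `try{...}catch(e){}`; the post doesn't show the actual response layout, so the hypothetical helpers `unwrap_jsonp` and `iter_key` look for `"note"` at any depth instead of assuming a fixed path. Unlike a raw-text regex, `json.loads` also decodes `\uXXXX` escapes and escaped quotes.

```python
import json
import re
from typing import Any, Iterator

# "(" + a JSON object + ")"; greedy, so nested braces stay inside the capture.
_JSONP_BODY = re.compile(r"\((\{.*\})\)", re.S)


def unwrap_jsonp(text: str) -> Any:
    """Strip a JSONP callback wrapper such as cb({...}) and parse the JSON inside."""
    match = _JSONP_BODY.search(text)
    if match is None:
        raise ValueError("no JSONP payload found")
    return json.loads(match.group(1))


def iter_key(obj: Any, key: str) -> Iterator[Any]:
    """Yield every value stored under `key`, at any depth of nested dicts/lists."""
    if isinstance(obj, dict):
        for k, v in obj.items():
            if k == key:
                yield v
            yield from iter_key(v, key)
    elif isinstance(obj, list):
        for item in obj:
            yield from iter_key(item, key)


if __name__ == "__main__":
    # Made-up sample in the assumed shape; NOT a real Weibo response.
    sample = 'try{window.STK_1&&STK_1({"data":{"list":[{"note":"\\u4f60\\u597d"},{"note":"hello"}]}});}catch(e){}'
    print(list(iter_key(unwrap_jsonp(sample), "note")))
```

With a real response you would call `iter_key(unwrap_jsonp(res.text), "note")`, provided the endpoint really returns the shape assumed above.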

```python
import re

import requests


def open_url(url):
    # Browser User-Agent plus a cookie copied from a logged-in browser session.
    # This cookie is the author's from 2020 and has probably expired; use your own.
    head = {
        'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.116 Safari/537.36',
        'cookie': 'SUHB=0P1cj8UzrPZieU; ALF=1612759724; UOR=login.sina.com.cn,widget.weibo.com,hs.blizzard.cn; SINAGLOBAL=368489229629.54724.1584496033619; SUB=_2AkMpoQ7pf8NxqwJRmfgXym_lbI13yw_EieKf_f8yJRMxHRl-yT9jqkIrtRB6AiEgBqMePr49zcjIOmMqSFuMxuXBHrjG; SUBP=0033WrSXqPxfM72-Ws9jqgMF55529P9D9WhTNUK1iYkr2EuR8lSXbYID; login_sid_t=804e3dc45618b7bcbf86163d280ec29f; cross_origin_proto=SSL; Ugrow-G0=1ac418838b431e81ff2d99457147068c; YF-V5-G0=b1b8bc404aec69668ba2d36ae39dd980; WBStorage=42212210b087ca50|undefined; _s_tentry=-; wb_view_log=1536*8641.25; Apache=5618895476598.254.1594094991152; ULV=1594094991156:4:2:1:5618895476598.254.1594094991152:1593672161009',
    }
    res = requests.get(url, headers=head)
    return res


def get_some(res):
    # The response is JSONP (JavaScript), not HTML, so BeautifulSoup finds nothing
    # useful here; a regex pulls out every "note" field (the hot-search title) instead.
    hot_list = re.findall(r'"note":"(.+?)"', res.text)
    for i in hot_list:
        print(i)


def main():
    url = 'https://s.weibo.com/ajax/jsonp/gettopsug?uid=&ref=PC_topsug&url=https%3A%2F%2Fweibo.com%2F&Mozilla=Mozilla%2F5.0%20(Windows%20NT%2010.0%3B%20Win64%3B%20x64)%20AppleWebKit%2F537.36%20(KHTML%2C%20like%20Gecko)%20Chrome%2F81.0.4044.138%20Safari%2F537.36&_cb=STK_15940991135653'
    res = open_url(url)
    get_some(res)


if __name__ == "__main__":
    main()
```
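The long URL in `main()` is a base endpoint plus five query parameters. A sketch that rebuilds it with the standard library's `urllib.parse.urlencode` makes those parameters readable and easy to change. Passing `quote_via=quote` with `safe="()"` reproduces the original's exact encoding (spaces as `%20`, parentheses left as-is). The names `BASE`, `PARAMS` and `build_url` are hypothetical, and what each parameter means (for example, that `_cb` names the JSONP callback) is an assumption, not something the post documents.

```python
from urllib.parse import quote, urlencode

BASE = "https://s.weibo.com/ajax/jsonp/gettopsug"

# Query parameters decoded from the hand-encoded URL in main().
PARAMS = {
    "uid": "",
    "ref": "PC_topsug",
    "url": "https://weibo.com/",
    "Mozilla": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36"
    ),
    "_cb": "STK_15940991135653",  # assumed to be the JSONP callback name
}


def build_url(base: str, params: dict) -> str:
    # quote (instead of the default quote_plus) encodes spaces as %20;
    # safe="()" leaves parentheses unescaped, matching the original URL.
    return base + "?" + urlencode(params, quote_via=quote, safe="()")


if __name__ == "__main__":
    print(build_url(BASE, PARAMS))
```

The printed URL is character-for-character identical to the one hard-coded in `main()`.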

Output:

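- 高考作文 (gaokao essay)
- 监考老师忘带监考证交警火速救助 (proctor forgets invigilator pass, traffic police rush to help)
- 蓝盈莹 有野心不可耻 (Lan Yingying: ambition is nothing to be ashamed of)
- 山东作文题 疫情 (Shandong essay prompt: the pandemic)
- 江苏高考作文 同声相应同气相求 (Jiangsu gaokao essay: "like voices answer each other, kindred spirits seek each other")
- 张雨绮教李斯丹妮跟公司要机会 (Zhang Yuqi teaches Li Sidanni to ask her company for opportunities)
- 关锦鹏批评伊能静 (Guan Jinpeng criticizes Yi Nengjing)
- 全国二卷作文演讲稿 (National Paper II essay: speech draft)
- 安徽歙县因暴雨高考语文延期 (She County, Anhui postpones the gaokao Chinese exam due to heavy rain)
- 高考语文 (gaokao Chinese exam)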

(Guangdong ICP filing No. 18085999-1 | Guangdong Public Security network filing No. 44051102000585)