
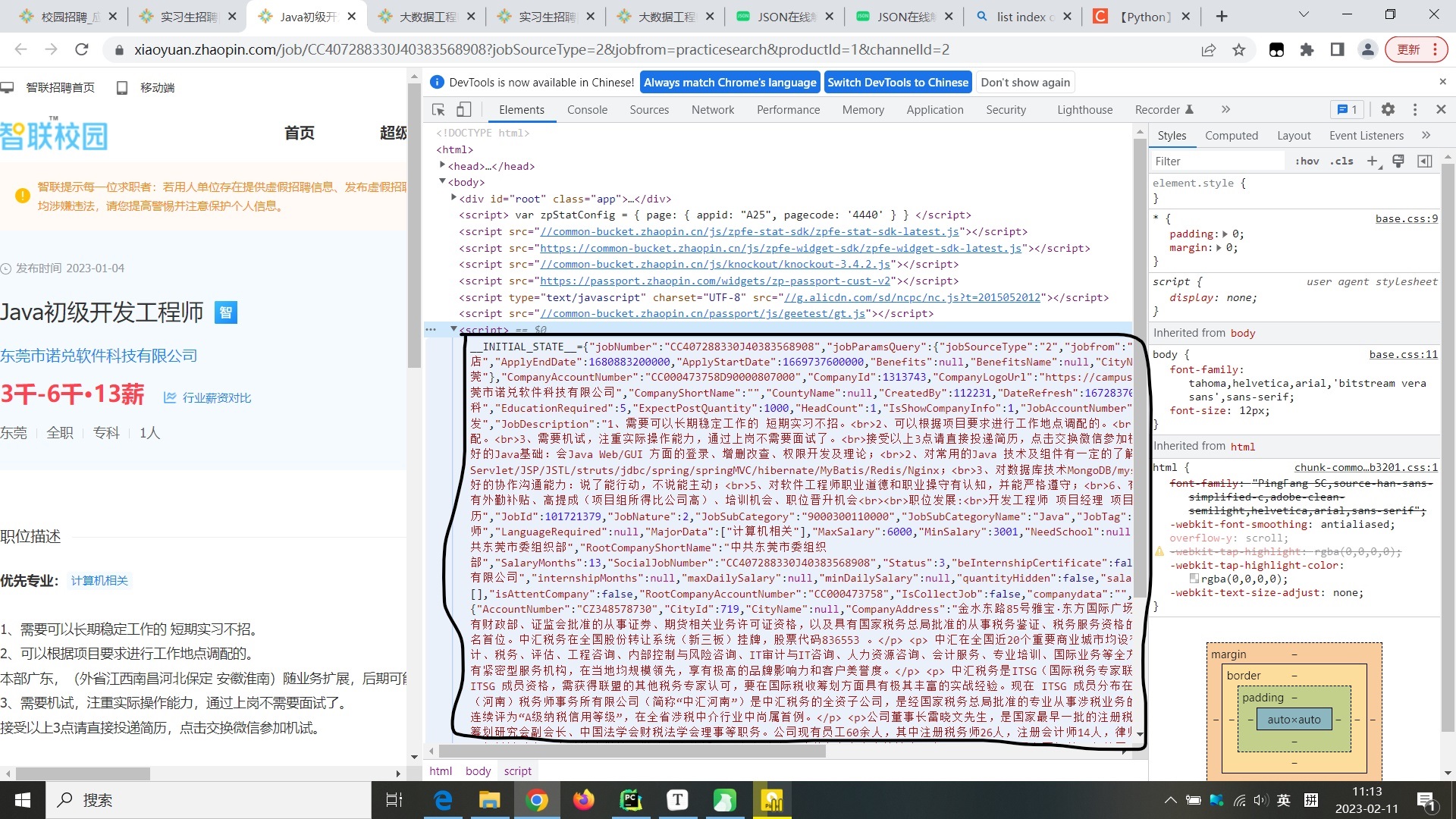

How should I write the XPath to extract the JSON string that follows `__INITIAL_STATE__`?

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from time import sleep
import requests
from lxml import html
etree = html.etree
import json
from selenium.webdriver.common.by import By

# Selenium 4.10+ no longer accepts the driver path positionally; pass it via Service
bro = webdriver.Chrome(service=Service('D:/技能/chromedriver.exe'))
bro.get('https://xiaoyuan.zhaopin.com/job/CC407288330J40383568908')
sleep(2)
cookies = bro.get_cookies()
print(cookies)
bro.quit()

# Parse the cookies into a dict
dic = {}
for cookie in cookies:
    dic[cookie['name']] = cookie['value']

# Fetch and parse the page source
url = 'https://xiaoyuan.zhaopin.com/api/sou?S_SOU_FULL_INDEX=java&S_SOU_POSITION_SOURCE_TYPE=&pageIndex=1&S_SOU_POSITION_TYPE=2&S_SOU_WORK_CITY=&S_SOU_JD_INDUSTRY_LEVEL=&S_SOU_COMPANY_TYPE=&S_SOU_REFRESH_DATE=&order=12&pageSize=30&_v=0.43957010&at=8d9987f50aed40bc8d1362e9c44a7fba&rt=ed9c026545294384a20a4473e1e2ecd3&x-zp-page-request-id=0933f66d64684fd6b0bc0756ed6791b6-1675650906506-860845&x-zp-client-id=b242c663-f23a-4571-aca7-de919a057afe'
header = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
}
url1 = 'https://xiaoyuan.zhaopin.com/api/sou?S_SOU_FULL_INDEX=java&S_SOU_POSITION_SOURCE_TYPE=&pageIndex=1&S_SOU_POSITION_TYPE=2&S_SOU_WORK_CITY=&S_SOU_JD_INDUSTRY_LEVEL=&S_SOU_COMPANY_TYPE=&S_SOU_REFRESH_DATE=&order=&pageSize=30&_v=0.06047107&at=9790578095794e1b9cc693485ef05237&rt=6448b0d50c2d460eb823575593f5a909&cityId=&jobTypeId=&jobSource=&industryId=&companyTypeId=&dateSearchTypeId=&x-zp-page-request-id=fcf1dcda72444dc6b8a17609bdb3a02f-1676083831807-687308&x-zp-client-id=b242c663-f23a-4571-aca7-de919a057afe'
response = requests.get(url=url1, headers=header, cookies=dic).json()  # .json() needs the parentheses
list = response['data']['data']['list']
for i in list:
    name = i['name']
    number = i['number']
    job_url = 'https://xiaoyuan.zhaopin.com/job/' + number
    page = requests.get(url=job_url, headers=header, cookies=dic).text
    tree = etree.HTML(page)
    # Parse data from the detail page
    imformation = tree.xpath('/html/body/script[8]/text()')
    print(imformation)
    break
```
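A positional path such as `/html/body/script[8]` breaks as soon as the page adds, removes, or reorders a `<script>` tag. A minimal sketch, assuming the target `<script>` is the only one whose text contains `__INITIAL_STATE__`: select the element by its content instead of its position. The HTML string is a made-up stand-in for a job detail page, and `initial_state_text` is a hypothetical helper name.

```python
from typing import Optional

from lxml import etree

# Hypothetical stand-in for a job detail page; the script order is invented.
SAMPLE_PAGE = """
<html><body>
<script>var a = 1;</script>
<script>__INITIAL_STATE__={"jobInfo": {"name": "Java Developer"}}</script>
<script>console.log("done");</script>
</body></html>
"""


def initial_state_text(page_source: str) -> Optional[str]:
    """Return the text of the first <script> whose content mentions __INITIAL_STATE__."""
    tree = etree.HTML(page_source)
    # Match on the script's text rather than its index, so extra or
    # reordered <script> tags elsewhere on the page do not matter.
    matches = tree.xpath('//script[contains(text(), "__INITIAL_STATE__")]/text()')
    return str(matches[0]) if matches else None


raw = initial_state_text(SAMPLE_PAGE)
print(raw)
```

In the scraper, the same expression can be passed to `tree.xpath(...)` in place of the positional path; the result still carries the `__INITIAL_STATE__=` prefix.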

Modified code (the changed line is marked):

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from time import sleep
import requests
from lxml import html
etree = html.etree
import json
from selenium.webdriver.common.by import By

# Selenium 4.10+ no longer accepts the driver path positionally; pass it via Service
bro = webdriver.Chrome(service=Service('D:/技能/chromedriver.exe'))
bro.get('https://xiaoyuan.zhaopin.com/job/CC407288330J40383568908')
sleep(2)
cookies = bro.get_cookies()
print(cookies)
bro.quit()

# Parse the cookies into a dict
dic = {}
for cookie in cookies:
    dic[cookie['name']] = cookie['value']

# Fetch and parse the page source
url = 'https://xiaoyuan.zhaopin.com/api/sou?S_SOU_FULL_INDEX=java&S_SOU_POSITION_SOURCE_TYPE=&pageIndex=1&S_SOU_POSITION_TYPE=2&S_SOU_WORK_CITY=&S_SOU_JD_INDUSTRY_LEVEL=&S_SOU_COMPANY_TYPE=&S_SOU_REFRESH_DATE=&order=12&pageSize=30&_v=0.43957010&at=8d9987f50aed40bc8d1362e9c44a7fba&rt=ed9c026545294384a20a4473e1e2ecd3&x-zp-page-request-id=0933f66d64684fd6b0bc0756ed6791b6-1675650906506-860845&x-zp-client-id=b242c663-f23a-4571-aca7-de919a057afe'
header = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36",
}
url1 = 'https://xiaoyuan.zhaopin.com/api/sou?S_SOU_FULL_INDEX=java&S_SOU_POSITION_SOURCE_TYPE=&pageIndex=1&S_SOU_POSITION_TYPE=2&S_SOU_WORK_CITY=&S_SOU_JD_INDUSTRY_LEVEL=&S_SOU_COMPANY_TYPE=&S_SOU_REFRESH_DATE=&order=&pageSize=30&_v=0.06047107&at=9790578095794e1b9cc693485ef05237&rt=6448b0d50c2d460eb823575593f5a909&cityId=&jobTypeId=&jobSource=&industryId=&companyTypeId=&dateSearchTypeId=&x-zp-page-request-id=fcf1dcda72444dc6b8a17609bdb3a02f-1676083831807-687308&x-zp-client-id=b242c663-f23a-4571-aca7-de919a057afe'
response = requests.get(url=url1, headers=header, cookies=dic).json()  # .json() needs the parentheses
list = response['data']['data']['list']
for i in list:
    name = i['name']
    number = i['number']
    job_url = 'https://xiaoyuan.zhaopin.com/job/' + number
    page = requests.get(url=job_url, headers=header, cookies=dic).text
    tree = etree.HTML(page)
    # Parse data from the detail page
    # changed here (str.removeprefix requires Python 3.9+)
    information = tree.xpath('/html/body/script[6]/text()')[0].removeprefix('__INITIAL_STATE__=')
    print(information)
```
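The modified line yields the script body as a plain string. If that string is exactly one JSON object, `json.loads(information)` turns it into a dict; if the script also ends with `;` or further JavaScript, `json.loads` raises `json.JSONDecodeError` ("Extra data"). One tolerant alternative, sketched under the assumption that the value is a JSON object literal (JavaScript-only tokens such as `undefined` would still be rejected): locate the first `{` after the marker and let `json.JSONDecoder.raw_decode` parse exactly one JSON value from there. `parse_initial_state` and the sample string are hypothetical.

```python
import json
from typing import Any


def parse_initial_state(script_text: str) -> Any:
    """Decode the JSON object assigned to __INITIAL_STATE__ in a script body.

    Assumes the assigned value is a JSON object literal.
    """
    marker = script_text.index("__INITIAL_STATE__")  # raises ValueError if absent
    start = script_text.index("{", marker)           # first brace after the marker
    # raw_decode parses one JSON value starting at `start` and ignores
    # whatever follows it, such as a trailing ";".
    value, _end = json.JSONDecoder().raw_decode(script_text, start)
    return value


state = parse_initial_state('window.__INITIAL_STATE__ = {"jobInfo": {"name": "Java Developer"}};')
print(state["jobInfo"]["name"])
```

Because it searches for the marker itself, this also works on the raw XPath result without the `removeprefix` step.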


(Guangdong ICP filing No. 18085999-1 | Guangdong public security filing No. 44051102000585)
