
import urllib.request
import re

def open_url(url):
    req = urllib.request.Request(url)
    req.add_header('User-Agent','Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.150 Safari/537.36')
    page = urllib.request.urlopen(req)
    html = page.read().decode('UTF-8')
    return html

def get_img(html):
    p = r'<img src="([^"]+\.jpg)"'
    imglist = re.findall(p,html)
    for each in imglist:
        print(each)
    for each in imglist:
        filename = each.split('/')[-1]
        urllib.request.urlretrieve('http://' + each,filename,None)

if __name__ == '__main__':
    url = 'http://jandan.net/ooxx/MjAyMTAyMDgtOTk=#comments'
    get_img(open_url(url))

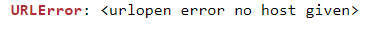

报错截图如下:

网址是最近煎蛋网上的随手拍那一栏的网址,这里小白先谢过各位鱼油们!谢谢~
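One possible cause, assuming the matched `src` values are protocol-relative (they start with `//`, which the `'http:'` prefix used in the reply suggests): prepending `'http://'` then yields a URL with four slashes and no host, which `urlretrieve` cannot open. The sketch below shows the difference and uses `urllib.parse.urljoin` to resolve the `src` against the page URL instead. The `src` value and the helper name `absolute_img_url` are made up for illustration; the real page content may differ.

```python
from urllib.parse import urljoin

# Page URL taken from the question.
PAGE_URL = 'http://jandan.net/ooxx/MjAyMTAyMDgtOTk=#comments'


def absolute_img_url(page_url, src):
    """Resolve an <img src> value against the page it was found on.

    Handles protocol-relative ('//host/a.jpg'), root-relative ('/a.jpg')
    and already absolute ('http://host/a.jpg') values.
    """
    return urljoin(page_url, src)


# Hypothetical src value, only for demonstration.
src = '//example.com/large/abc123.jpg'

# What the question's code builds: scheme plus '//' plus a src that
# already starts with '//'.
print('http://' + src)

# What urljoin builds: the page's scheme is reused and the host is kept.
print(absolute_img_url(PAGE_URL, src))
```

With a helper like this, the download loop could pass `absolute_img_url(url, each)` to `urlretrieve` rather than concatenating strings, at the cost of having to pass the page URL into `get_img`.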

import urllib.request as u_request
import os, re, base64, requests

header = {}
header['User-Agent'] = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 11_1_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36'

def url_open(url):
    # Fetch the page HTML with a browser-like User-Agent
    html = requests.get(url, headers=header).text
    return html

def find_images(url):
    html = url_open(url)
    m = r'<img src="([^"]+\.jpg)"'
    match = re.findall(m, html)
    for each in range(len(match)):
        # The src values are expected to start with '//', so only the scheme is prepended
        match[each] = 'http:' + match[each]
        print(match[each])
    return match

def save_images(folder, img_addrs):
    # Files are written to the current directory (download_the_graph has already chdir'ed into folder)
    for each in img_addrs:
        try:
            req = u_request.Request(each, headers=header)
            response = u_request.urlopen(req)
            cat_image = response.read()
            filename = each.split('/')[-1]
            with open(filename, 'wb') as f:
                f.write(cat_image)
            #print(each)
        except OSError as error:
            # Network and file errors: report and move on to the next image
            print(error)
            continue
        except ValueError as error:
            # Malformed URL: report and move on to the next image
            print(error)
            continue

def web_link_encode(url, folder):
    for i in range(180, 200):
        # The page token is the Base64 encoding of '<date>-<page number>'
        string_date = '20201216-'
        string_date += str(i)
        string_date = string_date.encode('utf-8')
        str_base64 = base64.b64encode(string_date)
        # b64encode already emits any '=' padding the token needs, so none is appended here
        page_url = url + str_base64.decode() + '#comments'
        print(page_url)
        img_addrs = find_images(page_url)
        save_images(folder, img_addrs)

def download_the_graph(url):
    folder = 'graph'
    # exist_ok avoids a FileExistsError when the script is run a second time
    os.makedirs(folder, exist_ok=True)
    os.chdir(folder)
    web_link_encode(url, folder)

if __name__ == '__main__':
    url = 'http://jandan.net/pic/'
    download_the_graph(url)
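To make the page-token step in the reply easier to follow, here is a small sketch of the encoding on its own. Decoding the token from the question's URL gives a `date-page` string, which is what the reply rebuilds with `base64.b64encode`. The helper names `encode_page_token` and `decode_page_token` are made up for this illustration, and the token format is inferred only from the URLs in this thread, so the site may change it.

```python
import base64


def encode_page_token(date, page):
    """Build the Base64 token for a 'YYYYMMDD-N' page identifier."""
    raw = f'{date}-{page}'.encode('utf-8')
    return base64.b64encode(raw).decode('ascii')


def decode_page_token(token):
    """Recover the 'YYYYMMDD-N' string from a Base64 page token."""
    return base64.b64decode(token).decode('utf-8')


# Token taken from the URL in the question.
print(decode_page_token('MjAyMTAyMDgtOTk='))   # 20210208-99

# First page visited by the loop in the reply (date 20201216, page 180).
print(encode_page_token('20201216', 180))      # MjAyMDEyMTYtMTgw
```

Note that `b64encode` adds `=` padding only when the input length is not a multiple of three bytes: the 11-byte string `20210208-99` gets one `=`, while the 12-byte string `20201216-180` gets none.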


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
