
This post was last edited by chenxz186 on 2018-6-29 16:55

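Have you ever watched a novel you were following suddenly go behind a paywall, then searched the web frantically for a free download? Most of the time there is nothing to find, and when there is, it is usually a web version you can only read in a browser. And if you have no internet connection? Keep fuming, I suppose!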

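I like listening to novels: I download the book, load it into a reader app, switch on its read-aloud mode and just listen. Staring at a computer all day tires the eyes, so listening is a welcome break. If you enjoy this too, load the downloaded novel into any reader app that has a read-aloud mode and start listening. Enough chatter; on to today's main topic.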

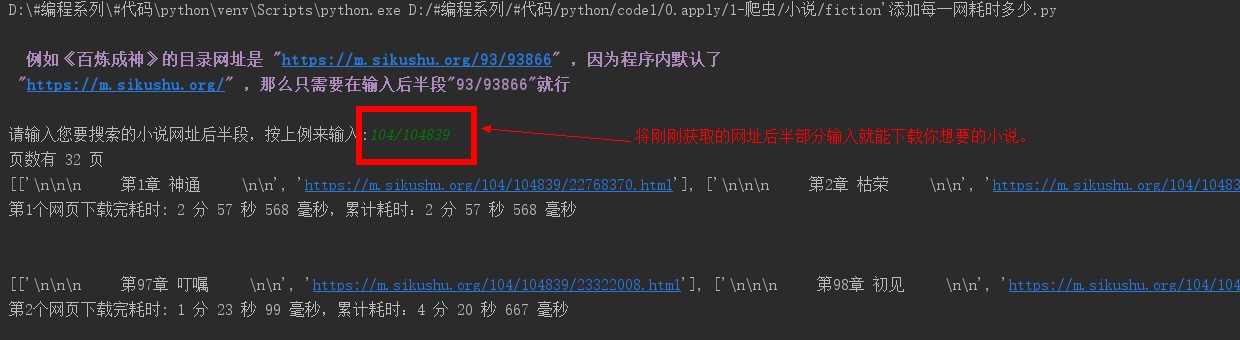

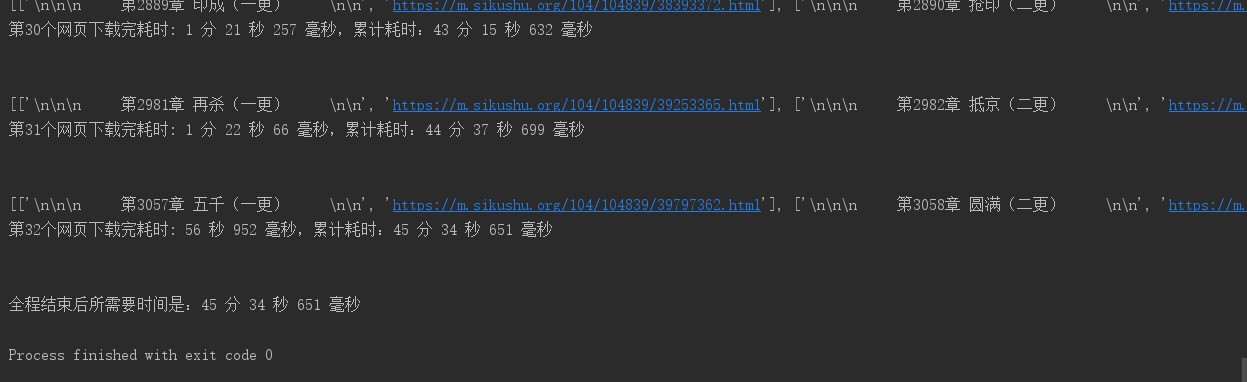

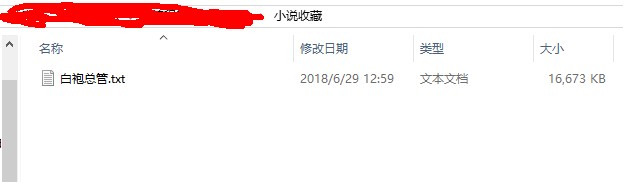

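Lately I have been hooked on the novel 《百炼成神》, which can be found at https://m.sikushu.org/. Today's small crawler can download any novel on that site using just four modules: requests, bs4, os and time. The time module only measures how long the whole run takes and is not essential to the program, so keep it or drop it as you see fit. requests and bs4 are third-party packages (install them with `pip install requests beautifulsoup4`), while os and time ship with Python. Learning material for all of them is easy to find online, so I won't go into detail; here is the code.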
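Since `time` only reports elapsed time, here is a minimal sketch of that bookkeeping on its own. It assumes `time.perf_counter()`, a monotonic clock better suited to measuring intervals than the `time.time()` calls used in the post; the helper names `format_duration` and `timed` are hypothetical. `format_duration` gives roughly the same hour/minute/second/millisecond breakdown as the post's `timer()` function, except that it rounds to the nearest millisecond instead of truncating.

```python
import time


def format_duration(seconds_elapsed: float) -> str:
    """Hypothetical helper: render a duration as h/min/s/ms, dropping leading zero units."""
    total_ms = int(round(seconds_elapsed * 1000))
    hours, rest_ms = divmod(total_ms, 3_600_000)
    minutes, rest_ms = divmod(rest_ms, 60_000)
    seconds, ms = divmod(rest_ms, 1000)
    if hours:
        return f"{hours} h {minutes} min {seconds} s {ms} ms"
    if minutes:
        return f"{minutes} min {seconds} s {ms} ms"
    if seconds:
        return f"{seconds} s {ms} ms"
    return f"{ms} ms"


def timed(func, *args, **kwargs):
    """Hypothetical helper: run func and return (result, elapsed seconds) on a monotonic clock."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    _, elapsed = timed(sum, range(1_000_000))
    print("Elapsed:", format_duration(elapsed))
```

Because `perf_counter()` is monotonic, the measurement is not thrown off if the system clock is adjusted during a long download.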

Code:

```python
import os
import time
from urllib.parse import urljoin

import bs4       # third-party: pip install beautifulsoup4
import requests  # third-party: pip install requests

BASE_URL = 'https://m.sikushu.org/'


def folder_func(folder_name='novel_collection'):
    """Create the download folder if it does not exist, switch into it and return its path."""
    os.makedirs(folder_name, exist_ok=True)
    os.chdir(folder_name)
    return os.getcwd()


def download_per_chapter_content(name, target, fiction_name, folder):
    """Append one chapter (title + body text) to <folder>/<fiction_name>.txt."""
    body = target.find('div', id='nr1')  # the chapter text sits in <div id="nr1">
    if body is None:
        print('No chapter text found for:', name.strip())
        return
    filename = os.path.join(folder, fiction_name + '.txt')
    with open(filename, 'a', encoding='utf-8') as file:
        file.write(name + body.text.strip())


def find_per_chapter(soup):
    """Return [[chapter title, absolute chapter URL], ...] for every chapter link on a catalog page."""
    a_list = []
    for each in soup.find_all('a', href=True):  # skip <a> tags without an href
        if '.html' in each['href']:  # chapter links point at .html pages
            chapter_name = each.text
            chapter_url = urljoin(BASE_URL, each['href'])
            # Surround the title with blank lines so chapters are separated in the .txt file
            a_list.append(['\n\n\n ' + chapter_name + ' \n\n', chapter_url])
    return a_list


def find_page(soup):
    """Read the total number of catalog pages from the pager (the number between '/' and '页)')."""
    page = 0
    for each in soup.find_all('div', class_='page'):
        # '输入页数' ("enter page number") is the site's own pager text, so it stays in Chinese
        if '输入页数' in each.text:
            page = int(each.text.split('/', 1)[1].split('页)', 1)[0])
    return page


def find_fiction_name(soup):
    """The novel's name is the part of the <title> before the first '_'."""
    return soup.find('title').text.split('_', 1)[0]


def cooking_soup(res):
    """Parse a response into a BeautifulSoup tree."""
    return bs4.BeautifulSoup(res.text, 'html.parser')


def open_url(url):
    """Fetch a page with a desktop-browser User-Agent and decode it as GBK."""
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36'}
    res = requests.get(url, headers=headers, timeout=10)  # 10 s timeout is an arbitrary choice
    res.raise_for_status()  # stop on 4xx/5xx instead of parsing an error page
    res.encoding = 'gbk'  # the site's pages are decoded as GBK
    return res


def timer(param_time):
    """Format a duration in seconds as hours/minutes/seconds/milliseconds."""
    hours = int(param_time // 3600)
    minutes = int(param_time % 3600 // 60)
    seconds = int(param_time % 60)
    mses = int(param_time % 1 * 1000)
    if param_time < 1:
        return '%d ms' % mses
    elif param_time < 60:
        return '%d s %d ms' % (seconds, mses)
    elif param_time < 3600:
        return '%d min %d s %d ms' % (minutes, seconds, mses)
    return '%d h %d min %d s %d ms' % (hours, minutes, seconds, mses)


def main(start_time):
    folder = folder_func()
    print('\n \033[1;35m For example, the catalog URL of 《百炼成神》 is "https://m.sikushu.org/93/93866". Since the program\n'
          ' already supplies "https://m.sikushu.org/", you only need to enter the second half, "93/93866".\033[0m \n')
    catalog_url = BASE_URL + input("Enter the second half of the novel's catalog URL, as in the example above: ").strip().strip('/')
    catalog_soup = cooking_soup(open_url(catalog_url))
    fiction_name = find_fiction_name(catalog_soup)
    page = find_page(catalog_soup)
    print('The catalog has %d page(s)' % page)
    start = start_time
    for count in range(1, page + 1):
        # Catalog page N lives at <catalog URL>_N/
        soup = cooking_soup(open_url('%s_%d/' % (catalog_url, count)))
        a_list = find_per_chapter(soup)
        print(a_list)
        for chapter_name, chapter_url in a_list:
            chapter_soup = cooking_soup(open_url(chapter_url))
            download_per_chapter_content(chapter_name, chapter_soup, fiction_name, folder)
        end_time = time.time()
        print('Catalog page %d done in %s, cumulative time: %s \n\n'
              % (count, timer(end_time - start), timer(end_time - start_time)))
        start = end_time


if __name__ == '__main__':
    begin_time = time.time()
    main(begin_time)
    total_time = time.time() - begin_time
    print('Total time for the whole run: %s' % timer(total_time))
```
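To see what `find_page()` and `find_per_chapter()` extract without contacting the site, here is a dependency-free sketch that runs the same two steps over a hard-coded page. The HTML snippet and its `(第1/3页)` pager text are hypothetical stand-ins, not the real markup of m.sikushu.org. The sketch swaps BeautifulSoup for the standard library's `html.parser.HTMLParser`, reads the page count with a regular expression instead of chained `split()` calls, and resolves links with `urllib.parse.urljoin`; `CatalogParser` and `parse_catalog` are hypothetical names.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urljoin

BASE_URL = "https://m.sikushu.org/"

# Hypothetical catalog-page fragment; the real site's markup may differ.
SAMPLE_HTML = """
<div class="page">输入页数 (第1/3页)</div>
<a href="/93/93866/1001.html">第一章 开端</a>
<a href="/93/93866/1002.html">第二章 修炼</a>
<a href="/user/login">登录</a>
<a>no href</a>
"""


class CatalogParser(HTMLParser):
    """Collect pager text and [href, link text] pairs for links containing '.html'."""

    def __init__(self):
        super().__init__()
        self.links = []        # [href, text] pairs for chapter links
        self.page_texts = []   # text found inside <div class="page">
        self._current_link = None
        self._in_page_div = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a":
            # Mirror the post's filter: keep only hrefs that contain '.html'
            href = attrs.get("href") or ""
            self._current_link = [href, ""] if ".html" in href else None
        elif tag == "div" and attrs.get("class") == "page":
            self._in_page_div = True

    def handle_endtag(self, tag):
        if tag == "a" and self._current_link is not None:
            self.links.append(self._current_link)
            self._current_link = None
        elif tag == "div":
            self._in_page_div = False

    def handle_data(self, data):
        if self._current_link is not None:
            self._current_link[1] += data
        if self._in_page_div:
            self.page_texts.append(data)


def parse_catalog(html, base_url=BASE_URL):
    """Return (page_count, [(chapter_title, absolute_url), ...])."""
    parser = CatalogParser()
    parser.feed(html)
    page_count = 1  # assumption: treat a page without a pager as a single page
    for text in parser.page_texts:
        # '输入页数' marks the pager; the total follows the '/' and precedes '页'
        match = re.search(r"/\s*(\d+)\s*页", text)
        if "输入页数" in text and match:
            page_count = int(match.group(1))
    chapters = [(text.strip(), urljoin(base_url, href)) for href, text in parser.links]
    return page_count, chapters


if __name__ == "__main__":
    pages, chapters = parse_catalog(SAMPLE_HTML)
    print(pages)
    for title, url in chapters:
        print(title, url)
```

Running it against the sample prints a page count of 3 followed by the two chapter titles with their absolute URLs; the login link and the `<a>` without an `href` are skipped, just as the `.html` filter in `find_per_chapter()` intends.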

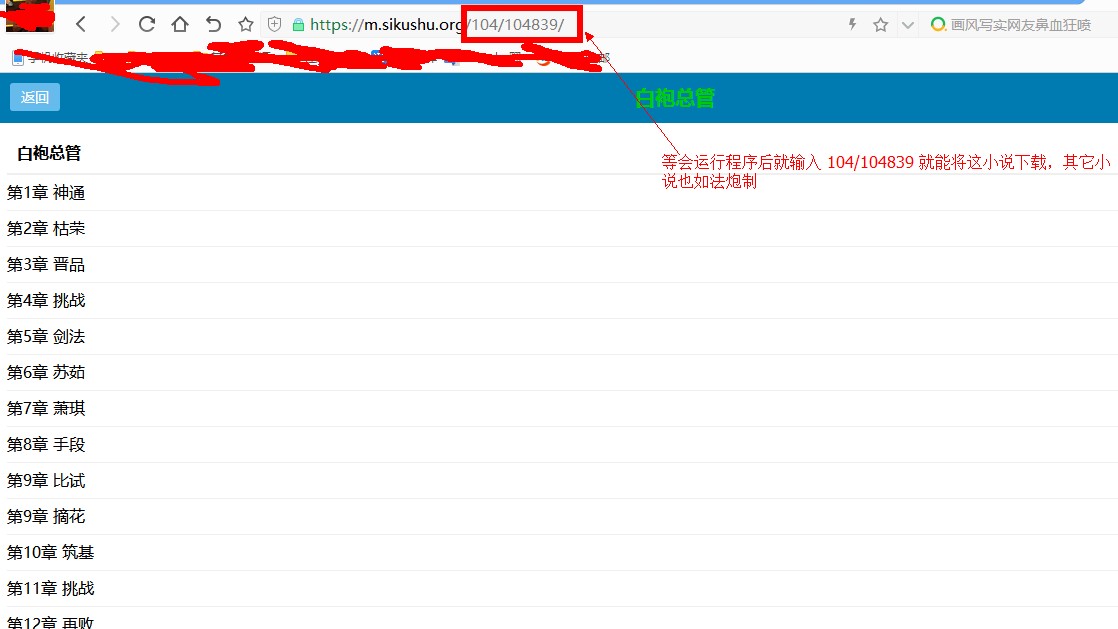

For example, to download 《白袍总管》, search for 白袍总管 at https://m.sikushu.org/; after finding the book


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
