
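This is the project I am most satisfied with so far, so please go easy on me.

Some of the code may look verbose; that is as far as my current skill goes.

Please point out any shortcomings so that my next project can be better.

The comments are written for beginners; I hope they help.

Further down there is a version without comments for experienced readers.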

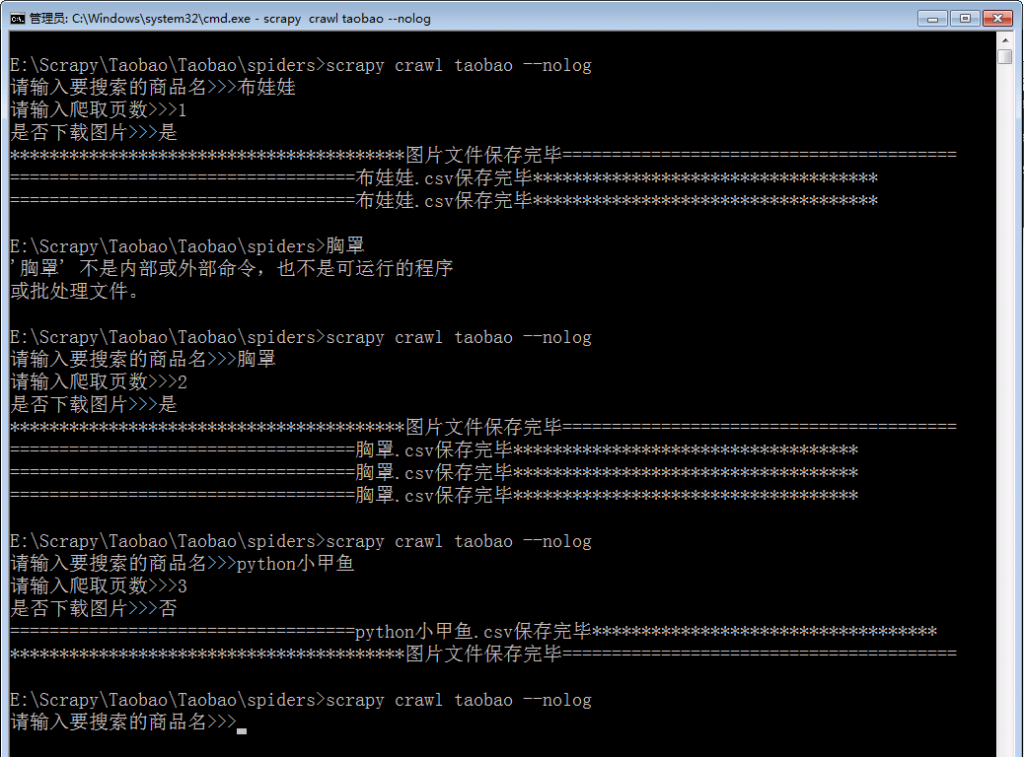

cmd

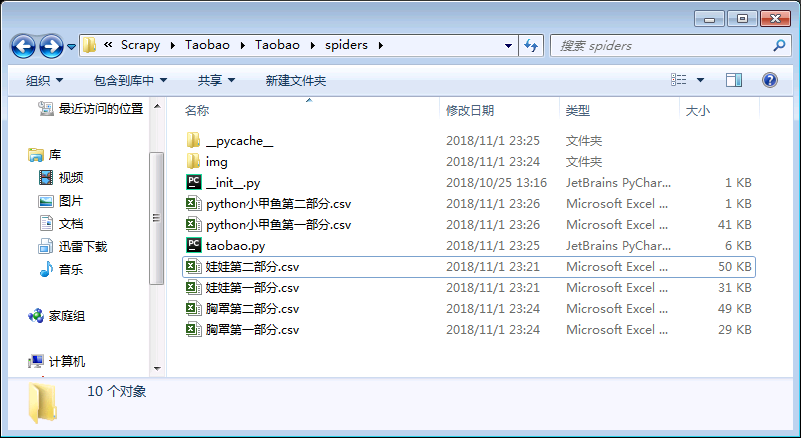

csv

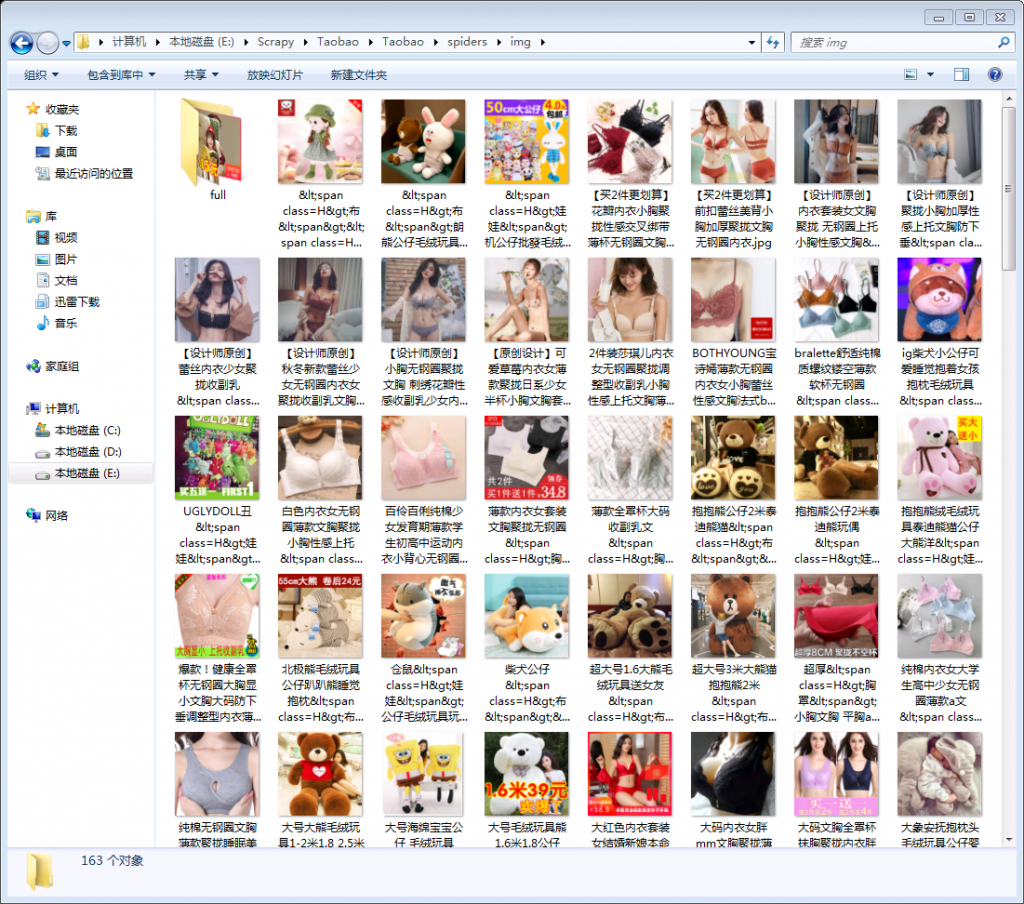

Images

The commented and uncommented versions of the code are posted separately.

Spider file

- # -*- coding: utf-8 -*-

- import scrapy

- import json

- from Taobao.items import TaobaoItem

- # URL encoding

- from urllib.parse import quote

- # URL decoding

- from urllib.parse import unquote

- class TaobaoSpider(scrapy.Spider):

- name = 'taobao'

- # allowed_domains = ['taobao.com/']

- Quote = input('Enter the product name to search for >>>')

- pages = input('Enter the number of pages to crawl >>>')

- start_urls = []

- page = 0

- ppage = 0

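- while page <= int(pages): # input() returns a str, so convert it to int for the comparison

- page += 1

- if page%2 != 0: # odd request number

- ppage += 1

- # Append the matching URL to 'start_urls'. Pagination pattern: on the first results page, the first async load uses page=1, ppage=1, pageNav=true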

- start_urls.append('https://ai.taobao.com/search/getItem.htm?_tb_token_=353a8b5a1b773&__ajax__=1&pid=mm_10011550_0_0&unid=&clk1=&page={}&pageSize=60&ppage={}&squareFlag=&sourceId=search&ppathName=&supportCod=&city=&ppath=&dc12=&pageNav=true&itemAssurance=&fcatName=&price=&cat=&from=&tmall=&key={}&fcat=&debug=false&maxPageSize=200&sort=&exchange7=&custAssurance=&postFree=&npx=50&location='.format(page,ppage,quote(Quote,'utf-8')))

- if page%2 == 0: # even request number

- # As above, append the matching URL to the list. On the first results page, the second async load uses page=2, ppage=0, pageNav=false

- start_urls.append('https://ai.taobao.com/search/getItem.htm?_tb_token_=353a8b5a1b773&__ajax__=1&pid=mm_10011550_0_0&unid=&clk1=&page={}&pageSize=60&squareFlag=&sourceId=search&ppathName=&supportCod=&city=&ppath=&dc12=&pageNav=false&itemAssurance=&fcatName=&price=&cat=&from=&tmall=&key={}&fcat=&ppage=0&debug=false&maxPageSize=200&sort=&exchange7=&custAssurance=&postFree=&npx=50&location='.format(page,quote(Quote,'utf-8')))

- print(start_urls)

- def parse(self, response):

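- # The data is fetched from two JS links (with my limited skill I don't know how to put it all in one file)

- # Create the first CSV file; pass encoding='utf-8', otherwise the written data will be garbled

- f1 = open('{}_part1.csv'.format(self.Quote), 'w', encoding='utf-8')

- f1.write("name,price,shop URL,image URL\n")

- # Create the second CSV file

- f2 = open('{}_part2.csv'.format(self.Quote), 'w', encoding='utf-8')

- f2.write("name,price,shop URL,image URL\n")

- a = 0

- # Responses for URLs with "pageNav=false" contain no 'p4ptop' data; where it exists, 'p4ptop' is also data to scrape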

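- try:

- # Parse the JSON response and take everything under 'result' -> 'auction'

- auction = json.loads(response.body)['result']['auction']

- # Iterate over 'auction' (a list whose elements are dicts, one per product)

- for x in auction:

- a += 1

- # Put the data to be written to the CSV file into a dict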

- dict2 = {

- 'name': x['description'], # product name

- 'clickUrl': x['clickUrl'], # product URL

- 'realPrice': x['realPrice'], # product price

- 'origPicUrl': x['origPicUrl'], # product image URL

- }

- print('*'*100)

- print(a)

- # Write the row to the CSV file

- f1.write("{name},{realPrice},{clickUrl},{origPicUrl}\n".format(**dict2))

- item = TaobaoItem()

- item['name'] = x['description']

- item['clickUrl'] = x['clickUrl']

- item['realPrice'] = x['realPrice']

- item['origPicUrl'] = x['origPicUrl']

- yield item

- # Take everything under 'result' -> 'p4ptop' in the response

- p4ptop = json.loads(response.body)['result']['p4ptop']

- for y in p4ptop:

- a += 1

- # As above, build the row for the CSV file ('row' avoids shadowing the built-in dict)

- row = {

- 'name': y['title'],

- 'realPrice': y['salePrice'],

- 'clickUrl' : y['eurl'],

- 'origPicUrl' : y['tbGoodSLink'],

- }

- print('*' * 100)

- print(a)

- # As above, write the row to the CSV file

- f2.write("{name},{realPrice},{clickUrl},{origPicUrl}\n".format(**row))

- item = TaobaoItem()

- item['name'] = y['title']

- item['clickUrl'] = y['eurl']

- item['realPrice'] = y['salePrice']

- item['origPicUrl'] = y['tbGoodSLink']

- # print('=' * 100)

- # print(a)

- yield item

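- # If the 'p4ptop' key is missing, skip it and carry on with the code below (note: the code that reads 'auction' must come before the lookup that can fail, otherwise the error is not skipped cleanly)

- # Some JSON responses have no 'p4ptop', which raises KeyError; skip and continue

- except KeyError:

- pass

- # Close the files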

- f1.close()

- f2.close()
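The odd/even pagination rule described in the comments above (odd requests advance `ppage` and send `pageNav=true`; even requests send `ppage=0` and `pageNav=false`) can be factored into a small helper. This is only a minimal sketch: `build_search_urls` is a hypothetical name, and it includes only the parameters that change between requests, on the assumption that the fixed ones (including `_tb_token_`) would be added back for real use. Note that `urlencode` encodes spaces as `+`, whereas `quote` in the spider produces `%20`.

```python
from urllib.parse import urlencode

BASE_URL = 'https://ai.taobao.com/search/getItem.htm'


def build_search_urls(keyword, request_count):
    """Build the async-load URLs following the odd/even pattern above.

    Hypothetical helper: only the parameters that vary between requests
    are included; the original URLs carry many more fixed parameters.
    """
    urls = []
    ppage = 0
    for page in range(1, request_count + 1):
        odd = page % 2 != 0
        if odd:
            ppage += 1  # ppage advances once per displayed results page
        params = {
            '__ajax__': 1,
            'page': page,
            'pageSize': 60,
            'ppage': ppage if odd else 0,
            'pageNav': 'true' if odd else 'false',
            'key': keyword,  # urlencode percent-encodes the keyword as UTF-8
        }
        urls.append(BASE_URL + '?' + urlencode(params))
    return urls
```

Calling `build_search_urls(Quote, 4)` would return four URLs covering two displayed results pages, which could then be assigned to `start_urls`.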

items

- # -*- coding: utf-8 -*-

- # Define here the models for your scraped items

- #

- # See documentation in:

- # https://doc.scrapy.org/en/latest/topics/items.html

- import scrapy

- class TaobaoItem(scrapy.Item):

- # Product name

- name = scrapy.Field()

- # Price

- realPrice = scrapy.Field()

- # Product URL

- clickUrl = scrapy.Field()

- # Image URL

- origPicUrl = scrapy.Field()
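The spider fills these four fields from two JSON sections that use different key names (`auction` and `p4ptop`). One way to avoid repeating the assignments, and to tolerate a missing `p4ptop` section without relying on `KeyError`, is to describe the mapping as data. This is a sketch only: `FIELD_MAPS`, `normalize` and `iter_items` are hypothetical names, and plain dicts stand in for `TaobaoItem` so the example runs without Scrapy.

```python
# Maps each item field to the source key used by each JSON section,
# taken from the spider code above.
FIELD_MAPS = {
    'auction': {
        'name': 'description',
        'realPrice': 'realPrice',
        'clickUrl': 'clickUrl',
        'origPicUrl': 'origPicUrl',
    },
    'p4ptop': {
        'name': 'title',
        'realPrice': 'salePrice',
        'clickUrl': 'eurl',
        'origPicUrl': 'tbGoodSLink',
    },
}


def normalize(section, record):
    """Return a dict with the four item fields for one raw record."""
    return {field: record[key] for field, key in FIELD_MAPS[section].items()}


def iter_items(result):
    """Yield normalized dicts for every section present in `result`."""
    for section in FIELD_MAPS:
        # .get() returns an empty list when a section such as 'p4ptop' is absent
        for record in result.get(section, []):
            yield normalize(section, record)
```

In the spider, `iter_items(json.loads(response.body)['result'])` could replace the two hand-written loops, with each yielded dict passed to `TaobaoItem(**fields)`.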

Pipeline file

- # -*- coding: utf-8 -*-

- # Define your item pipelines here

- #

- # Don't forget to add your pipeline to the ITEM_PIPELINES setting

- # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

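- # Import the media pipeline for images

- from scrapy.pipelines.images import ImagesPipeline

- import scrapy

- # Import the download directory configured in settings.py of the same package

- from Taobao.settings import IMAGES_STORE

- # Module for file system operations

- import os

- # Image-handling pipeline class

- class TaobaoPipeline(ImagesPipeline):

- # Issues the image download requests; the 'IMAGES_STORE' directory must be set in settings.py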

- def get_media_requests(self, item, info):

- image_link = item['origPicUrl']

- # Prepend 'https:' to the image link and request it

- yield scrapy.Request('https:'+image_link)

- # Override item_completed to rename the downloaded image to the product name

- def item_completed(self, results, item, info):

- # ==========================================================

- # Contents of results

- # print(results)

- # [(True,

- # {'url': 'https://gaitaobao4.alicdn.com/tfscom/i3/1607379596/O1CN01vv4Zlp2Kl17e4jX7T_!!0-item_pic.jpg',

- # 'path': 'full/321e6d31eed0d9fd036968547943219104c6ab4c.jpg',

- # 'checksum': 'ca4e396b135ea49bb2a66c4d8806f6fd'}

- # )]

- # ==========================================================

- # List comprehension: collect the 'path' value of every result whose 'ok' flag is True

- path = [x['path'] for ok, x in results if ok]

- # split() on '.' to extract the file extension

- jpg = path[0].split('.')[-1]

- # Product names may contain '/', which must be removed before saving, otherwise it is treated as a subdirectory

- names = item['name'].replace('/','')

- # os.rename() renames the file;

- # some image links are dead, which shows up in 'results' with status False; in that case the run reports a failed save or an index out of range

- # path[0] is the relative file path, e.g. 'path': 'full/321e6d31eed0d9fd036968547943219104c6ab4c.jpg'

- # jpg is the extracted file extension

- os.rename(IMAGES_STORE + path[0],IMAGES_STORE + names + '.' + jpg)

- # Return the item

- return item
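As the comments note, a dead image link leaves `results` with no successful entry, and `path[0]` then raises an index error. A guard for that case could look like the sketch below. `renamed_path` is a hypothetical helper, not part of Scrapy; it only computes the old and new paths from a `results` list of the shape shown in the comment above, so it can be tried without running a crawl.

```python
import os


def renamed_path(results, item_name, store_dir):
    """Return (old_path, new_path) for the first successful download, or None.

    `results` is a list of (ok, info) tuples where, for successful
    downloads, info['path'] is relative to the store directory.
    """
    paths = [info['path'] for ok, info in results if ok]
    if not paths:
        return None  # every download failed, so there is nothing to rename
    extension = os.path.splitext(paths[0])[1]  # includes the leading dot
    # Drop characters that would be read as directory separators
    safe_name = item_name.replace('/', '').replace('\\', '')
    return (os.path.join(store_dir, paths[0]),
            os.path.join(store_dir, safe_name + extension))
```

Inside `item_completed`, the result could be checked before renaming, for example `paths = renamed_path(results, item['name'], IMAGES_STORE)` followed by `os.rename(*paths)` only when `paths` is not `None`.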

settings.py

- BOT_NAME = 'Taobao'

- SPIDER_MODULES = ['Taobao.spiders']

- NEWSPIDER_MODULE = 'Taobao.spiders'

- # Image download directory

- IMAGES_STORE = 'E:/Scrapy/Taobao/Taobao/spiders/img/'

- # Set the User-Agent

- USER_AGENT = 'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E)'

- # Pipeline configuration

- ITEM_PIPELINES = {

- 'Taobao.pipelines.TaobaoPipeline': 300,

- }

Code without comments; for the items and settings files, see above.

Spider file

- # -*- coding: utf-8 -*-

- import scrapy

- import json

- from Taobao.items import TaobaoItem

- from urllib.parse import quote

- class TaobaoSpider(scrapy.Spider):

- name = 'taobao'

- Quote = input('Enter the product name to search for >>>')

- pages = input('Enter the number of pages to crawl >>>')

- start_urls = []

- page = 0

- ppage = 0

- while page <= int(pages):

- page += 1

- if page%2 != 0:

- ppage += 1

- start_urls.append('https://ai.taobao.com/search/getItem.htm?_tb_token_=353a8b5a1b773&__ajax__=1&pid=mm_10011550_0_0&unid=&clk1=&page={}&pageSize=60&ppage={}&squareFlag=&sourceId=search&ppathName=&supportCod=&city=&ppath=&dc12=&pageNav=true&itemAssurance=&fcatName=&price=&cat=&from=&tmall=&key={}&fcat=&debug=false&maxPageSize=200&sort=&exchange7=&custAssurance=&postFree=&npx=50&location='.format(page,ppage,quote(Quote,'utf-8')))

- if page%2 == 0:

- start_urls.append('https://ai.taobao.com/search/getItem.htm?_tb_token_=353a8b5a1b773&__ajax__=1&pid=mm_10011550_0_0&unid=&clk1=&page={}&pageSize=60&squareFlag=&sourceId=search&ppathName=&supportCod=&city=&ppath=&dc12=&pageNav=false&itemAssurance=&fcatName=&price=&cat=&from=&tmall=&key={}&fcat=&ppage=0&debug=false&maxPageSize=200&sort=&exchange7=&custAssurance=&postFree=&npx=50&location='.format(page,quote(Quote,'utf-8')))

- print(start_urls)

- def parse(self, response):

- f1 = open('{}_part1.csv'.format(self.Quote), 'w', encoding='utf-8')

- f1.write("name,price,shop URL,image URL\n")

-

- f2 = open('{}_part2.csv'.format(self.Quote), 'w', encoding='utf-8')

- f2.write("name,price,shop URL,image URL\n")

-

- a = 0

- try:

- auction = json.loads(response.body)['result']['auction']

- for x in auction:

- a += 1

- dict2 = {

- 'name': x['description'], # product name

- 'clickUrl': x['clickUrl'], # product URL

- 'realPrice': x['realPrice'], # product price

- 'origPicUrl': x['origPicUrl'], # product image URL

- }

- f1.write("{name},{realPrice},{clickUrl},{origPicUrl}\n".format(**dict2))

- item = TaobaoItem()

- item['name'] = x['description']

- item['clickUrl'] = x['clickUrl']

- item['realPrice'] = x['realPrice']

- item['origPicUrl'] = x['origPicUrl']

- yield item

- p4ptop = json.loads(response.body)['result']['p4ptop']

- for y in p4ptop:

- a += 1

- row = {

- 'name': y['title'],

- 'realPrice': y['salePrice'],

- 'clickUrl' : y['eurl'],

- 'origPicUrl' : y['tbGoodSLink'],

- }

- f2.write("{name},{realPrice},{clickUrl},{origPicUrl}\n".format(**row))

- item = TaobaoItem()

- item['name'] = y['title']

- item['clickUrl'] = y['eurl']

- item['realPrice'] = y['salePrice']

- item['origPicUrl'] = y['tbGoodSLink']

- yield item

- except KeyError:

- pass

- f1.close()

- f2.close()
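Two things about the hand-written CSV output are worth noting: joining fields with commas breaks a row whenever a product name itself contains a comma, and because `parse()` runs once per response, opening the files there with `'w'` truncates them on every call. A minimal sketch of the writing step using the standard `csv` module is shown below; `write_rows` and `HEADER` are hypothetical names, and the function takes any open text stream so the file can be opened once elsewhere (with `newline=''`, as the `csv` documentation recommends).

```python
import csv
import io

HEADER = ['name', 'realPrice', 'clickUrl', 'origPicUrl']


def write_rows(stream, rows, write_header=True):
    """Write dict rows to an open text stream as CSV."""
    writer = csv.DictWriter(stream, fieldnames=HEADER, extrasaction='ignore')
    if write_header:
        writer.writeheader()
    for row in rows:
        writer.writerow(row)  # fields containing commas or quotes get quoted


if __name__ == '__main__':
    buffer = io.StringIO()
    write_rows(buffer, [{'name': 'case, black', 'realPrice': '9.90',
                         'clickUrl': '//example.com/item',
                         'origPicUrl': '//example.com/pic.jpg'}])
    print(buffer.getvalue())
```

The same function works with a real file, for example `open(name, 'a', encoding='utf-8', newline='')`, with `write_header` set only for the first batch.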

Pipeline file

- # Import the media pipeline for images

- from scrapy.pipelines.images import ImagesPipeline

- import scrapy

- from Taobao.settings import IMAGES_STORE

- import os

- class TaobaoPipeline(ImagesPipeline):

- def get_media_requests(self, item, info):

- image_link = item['origPicUrl']

- yield scrapy.Request('https:'+image_link)

- def item_completed(self, results, item, info):

- path = [x['path'] for ok, x in results if ok]

- jpg = path[0].split('.')[-1]

- names = item['name'].replace('/','')

- os.rename(IMAGES_STORE + path[0],IMAGES_STORE + names + '.' + jpg)

- return item


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
