
This post was last edited by 金刚 on 2019-6-2 23:00.

```python
from urllib.request import Request, urlopen
from lxml import etree
import os
import random  # only used by the commented-out delay in save_msg
import time    # only used by the commented-out delay in save_msg


def get_html(url):
    # Send a browser-like User-Agent instead of urllib's default one
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.157 Safari/537.36'}
    req = Request(url, headers=headers)
    response = urlopen(req)
    # The search result pages are GBK-encoded
    html = response.read().decode("gbk")
    return html
```
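Hardcoding `decode("gbk")` works only as long as the site keeps serving GBK. A slightly more defensive variant, sketched here, prefers the charset declared in the response's `Content-Type` header, falls back to GBK as the code above does, and sets a timeout so a stalled request cannot hang the crawl. The helper name `decode_page` and the 10-second timeout are illustrative assumptions, not anything the site requires.

```python
from urllib.request import Request, urlopen

USER_AGENT = ("Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/74.0.3729.157 Safari/537.36")


def decode_page(raw, declared_charset=None, fallback="gbk"):
    """Decode response bytes, preferring the server-declared charset (hypothetical helper)."""
    try:
        return raw.decode(declared_charset or fallback)
    except (LookupError, UnicodeDecodeError):
        # Unknown codec name, or bytes that don't match it: decode with the
        # fallback and replace undecodable bytes instead of crashing
        return raw.decode(fallback, errors="replace")


def get_html(url, timeout=10):
    req = Request(url, headers={"User-Agent": USER_AGENT})
    # timeout is in seconds (assumed value); it stops a stalled request from hanging forever
    with urlopen(req, timeout=timeout) as response:
        # None when the Content-Type header carries no charset parameter
        charset = response.headers.get_content_charset()
        return decode_page(response.read(), charset)
```

`response.headers` is an `http.client.HTTPMessage`, which inherits `get_content_charset()` from `email.message.Message`, so no extra parsing of the header is needed.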

```python
def html_parse(html):
    tree = etree.HTML(html)
    # Every job posting is a <div class="el"> row; each query collects one column
    job = tree.xpath('//div[@class="el"]/p//a/@title')                    # job title
    name = tree.xpath('//div[@class="el"]/span//a/@title')                # company name
    address = tree.xpath('//div[@class="el"]/span[@class="t3"]/text()')  # work location
    salary = tree.xpath('//div[@class="el"]/span[@class="t4"]/text()')   # salary
    date = tree.xpath('//div[@class="el"]/span[@class="t5"]/text()')     # posting date
    return [job, name, address, salary, date]
```
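Because each XPath query in `html_parse` returns a separate column, a row that is missing one value (for example an empty salary `<span class="t4">`, which yields no `text()` node) makes that column shorter, and every later posting gets paired with the wrong salary. One way around this is to collect the fields row by row. The sketch below swaps lxml for the standard library's `html.parser` so it has no third-party dependency; the class `JobRowParser`, the function `parse_rows`, and the dictionary keys are hypothetical, and it assumes the same `div.el` row markup that the XPath expressions target.

```python
from html.parser import HTMLParser

# Map the column <span> classes used by the XPath queries to field names
SPAN_FIELDS = {"t3": "address", "t4": "salary", "t5": "date"}


class JobRowParser(HTMLParser):
    """Collect one dict per <div class="el"> result row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._depth = 0      # <div> nesting depth inside the current row; 0 = outside a row
        self._in_p = False   # inside the <p> that holds the job-title link
        self._span = None    # class of the enclosing <span> ("" if it has none); None outside spans

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div":
            if self._depth:
                self._depth += 1
            elif attrs.get("class") == "el":  # exact match, like @class="el"
                self._depth = 1
                self.rows.append(dict.fromkeys(("job", "company", "address", "salary", "date"), ""))
            return
        if not self._depth:
            return
        if tag == "p":
            self._in_p = True
        elif tag == "span":
            self._span = attrs.get("class") or ""
        elif tag == "a" and attrs.get("title"):
            # A titled link inside <p> is the job; one inside a <span> is the company
            if self._in_p:
                self.rows[-1]["job"] = attrs["title"]
            elif self._span is not None:
                self.rows[-1]["company"] = attrs["title"]

    def handle_endtag(self, tag):
        if not self._depth:
            return
        if tag == "div":
            self._depth -= 1
        elif tag == "p":
            self._in_p = False
        elif tag == "span":
            self._span = None

    def handle_data(self, data):
        field = SPAN_FIELDS.get(self._span) if self._depth else None
        if field:
            self.rows[-1][field] += data.strip()


def parse_rows(html):
    parser = JobRowParser()
    parser.feed(html)
    parser.close()
    return parser.rows
```

An empty salary cell now simply leaves `"salary": ""` in that row's dict, and the other rows are unaffected.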

```python
def optimize_result(all_msg_list, page):
    """Format the parsed columns as one numbered text entry per job posting."""
    print("Started fetching page %d!" % page)
    all_l_optimized = []
    # zip() stops at the shortest column, so a page with fewer than 50
    # postings (e.g. the last page) no longer raises IndexError
    for i, (job, name, address, salary, date) in enumerate(zip(*all_msg_list), start=1):
        line_msg = ("%d : Job title: %s.....Company: %s.....Location: %s.....Salary: %s.....Posted: %s\n\n"
                    % (i, job, name, address, salary, date))
        all_l_optimized.append(line_msg)
    return all_l_optimized
```

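```python
def save_msg(all_l_optimized, page):
    # `files` is the folder name entered at the prompt in the main script
    with open(os.path.join(files, str(page) + ".txt"), "w", encoding="utf-8") as f:
        for each_job_msg in all_l_optimized:
            f.write(each_job_msg)
    # Uncomment to pause 3-6 seconds between pages and go easier on the server:
    # timer = random.choice([3, 4, 5, 6])
    # time.sleep(timer)


def get_job_msg(page, url):
    # Fetch, parse, format and save one page of search results
    html = get_html(url)
    all_msg_list = html_parse(html)
    all_l_optimized = optimize_result(all_msg_list, page)
    save_msg(all_l_optimized, page)
```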
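The commented-out lines in `save_msg` point toward pausing between pages. A minimal sketch of a page loop that does this, and that records a page that fails with a network or HTTP error instead of aborting the whole run, could look like the following. `crawl_pages` is a hypothetical helper; `handle_page` stands for whatever processes one page (for instance a small wrapper that builds the page URL and calls `get_job_msg`), and `sleep` is a parameter only so the loop can be exercised without actually waiting.

```python
import random
import time
from urllib.error import URLError


def crawl_pages(start_page, end_page, handle_page, delays=(3, 4, 5, 6), sleep=time.sleep):
    """Run handle_page(page) for each page, pausing a random few seconds between pages.

    Returns the list of pages whose handler raised URLError.
    """
    failed = []
    for page in range(start_page, end_page + 1):
        try:
            handle_page(page)
        except URLError as exc:  # HTTPError is a subclass, so HTTP errors land here too
            print("Page %d failed: %s" % (page, exc))
            failed.append(page)
        if page < end_page:
            # Same 3-6 second choice as the commented-out lines in save_msg
            sleep(random.choice(delays))
    return failed
```

Returning the failed page numbers makes it easy to rerun just those pages later.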

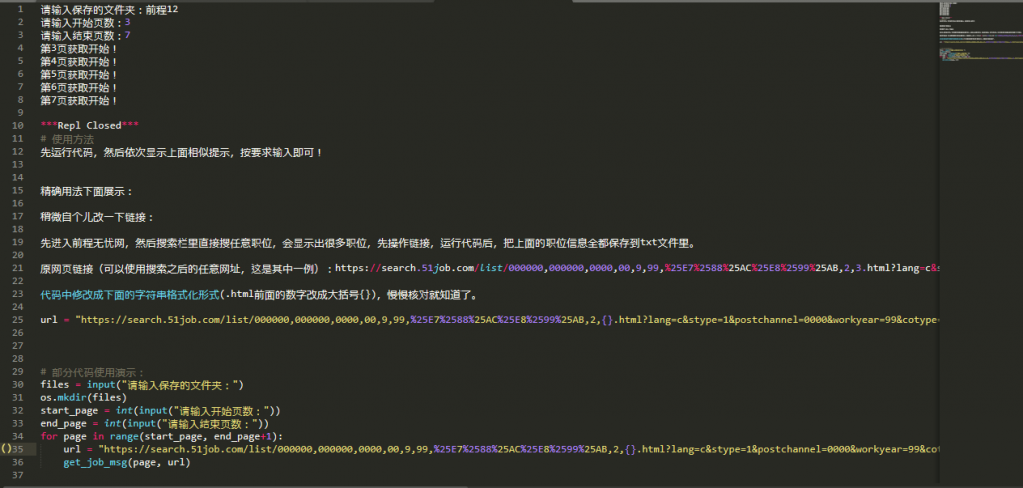

```python
files = input("Enter the folder to save results in: ")
os.makedirs(files, exist_ok=True)  # don't fail if the folder already exists
start_page = int(input("Enter the start page: "))
end_page = int(input("Enter the end page: "))

for page in range(start_page, end_page + 1):
    url = "https://search.51job.com/list/000000,000000,0000,00,9,99,python,2,{}.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99&degreefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=".format(page)
    get_job_msg(page, url)
```
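Building the query string from a dictionary with `urllib.parse.urlencode`, rather than pasting one long literal, makes it harder for a parameter such as `&degreefrom` to get corrupted (a bare `&deg` is easily turned into a ° sign by HTML entity decoding). This sketch reproduces the search URL used in the loop; the names `BASE`, `PARAMS` and `build_search_url` are hypothetical, and the parameter values are simply the ones from that URL.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Path of the search-results URL; only the page number varies
BASE = "https://search.51job.com/list/000000,000000,0000,00,9,99,python,2,{}.html"

# Query parameters from the search URL used in the main loop
PARAMS = {
    "lang": "c", "stype": "", "postchannel": "0000", "workyear": "99",
    "cotype": "99", "degreefrom": "99", "jobterm": "99", "companysize": "99",
    "providesalary": "99", "lonlat": "0,0", "radius": "-1", "ord_field": "0",
    "confirmdate": "9", "fromType": "", "dibiaoid": "0", "address": "",
    "line": "", "specialarea": "00", "from": "", "welfare": "",
}


def build_search_url(page):
    # urlencode percent-encodes each value, so "0,0" becomes "0%2C0"
    return BASE.format(page) + "?" + urlencode(PARAMS)


if __name__ == "__main__":
    url = build_search_url(1)
    # Round-trip check: the degreefrom parameter survives intact
    print(parse_qs(urlsplit(url).query, keep_blank_values=True)["degreefrom"])
```

Inside the loop, `url = build_search_url(page)` would then replace the literal.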


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
