
30 fish coins

- import requests

- import bs4

- import re

- def open_url(url):

- # 使用代理

- # proxies = {"http": "127.0.0.1:1080", "https": "127.0.0.1:1080"}

- headers = {

- 'user-agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36'}

- # res = requests.get(url, headers=headers, proxies=proxies)

- res = requests.get(url, headers=headers)

- return res

- def main():

- ff=open('name.txt','r')

- allurl=[]

- for i in ff.readlines():

- host = "https://trace.ncbi.nlm.nih.gov/Traces/sra/?run="+i

- res = open_url(host)

- soup = bs4.BeautifulSoup(res.text, 'html.parser')

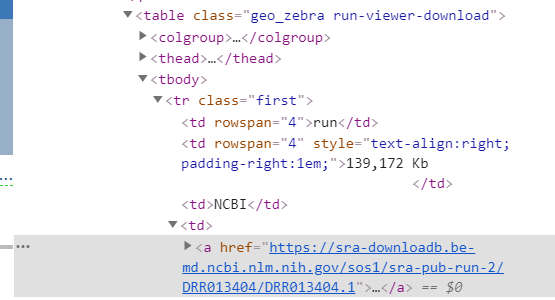

- targets = soup.find_all("table", class_="geo_zebra run-viewer-download")

- for each in targets:

- if each.tbody.tr.td[4].a['href'] is not None:

- g=each.tbody.tr.td[4].a.get('href')

- allurl.append(g)

- with open("allurl.txt", "w", encoding="utf-8") as f:

- f.writelines([j+'\n' for j in allurl])

- if __name__ == "__main__":

- main()

I want to extract the link under the fourth td shown in the image, but I don't know how to do it.

```python
urls = soup.find_all("a", href=re.compile("https://sra-downloadb.st-va.ncbi.nlm.nih.gov"))
```

Try it directly like this and see.
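For reference, here is a minimal sketch of both approaches on a small inline table. The `SAMPLE_HTML` markup, the `/example/SRR0000001` path, and the helper names `fourth_td_links` and `links_by_host` are all made up for illustration; the real page's structure may differ, so treat this as a starting point. Two things worth noting: in Beautiful Soup, `tag[...]` looks up an HTML attribute rather than a child by position, so `td[4]` does not select a cell, and the fourth cell in a list of cells is index 3.

```python
import re

import bs4

# Hypothetical markup for illustration only; the real page may differ.
SAMPLE_HTML = """
<table class="geo_zebra run-viewer-download">
  <tbody>
    <tr>
      <td>SRR0000001</td>
      <td>1.2G</td>
      <td>2020-01-01</td>
      <td><a href="https://sra-downloadb.st-va.ncbi.nlm.nih.gov/example/SRR0000001">SRR0000001</a></td>
    </tr>
  </tbody>
</table>
"""


def fourth_td_links(html):
    """Collect the href of the link inside the fourth <td> of every row."""
    soup = bs4.BeautifulSoup(html, "html.parser")
    links = []
    for table in soup.find_all("table", class_="geo_zebra run-viewer-download"):
        for row in table.find_all("tr"):
            # Only the row's own cells, in document order.
            cells = row.find_all("td", recursive=False)
            if len(cells) < 4:
                continue  # row has no fourth cell
            a = cells[3].find("a", href=True)  # index 3 is the fourth cell
            if a is not None:
                links.append(a["href"])
    return links


def links_by_host(html, host="https://sra-downloadb.st-va.ncbi.nlm.nih.gov"):
    """Collect every <a> whose href contains the given host (the regex approach)."""
    soup = bs4.BeautifulSoup(html, "html.parser")
    pattern = re.compile(re.escape(host))  # escape so "." matches literally
    return [a["href"] for a in soup.find_all("a", href=pattern)]


if __name__ == "__main__":
    print(fourth_td_links(SAMPLE_HTML))
    print(links_by_host(SAMPLE_HTML))
```

The positional version depends on the column order staying the same, while the regex version depends only on the download host, so the second is probably more robust if the table layout changes. Separately, lines returned by `readlines()` keep their trailing newline, so it may be worth calling `i.strip()` before appending the run ID to the URL.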


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
