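Summer break has started and I've been bored out of my mind, so I wrote a crawler. It turned out to be full of bugs, and when I tried to speed it up with multithreading I got even more bugs.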

Single-threaded version:

    # Imports
    import requests
    import parsel
    import os
    import time

    # Setup: make sure the output folder exists
    if not os.path.exists('image'):
        os.mkdir('image')
    headers = {
        'User-Agent':
            'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36'
    }

    # Crawler
    for page in range(0,6500):
        # Prepare the URL and headers
        print("=================/正在保存第{}页数据=================".format(page))  # "Saving page {}"
        base_url = 'https://anime-pictures.net/pictures/view_posts/{}?lang=en'.format(page)
        if page>0:
            time.sleep(10)
            print('太累了,休息下|・ω・` )')  # "Too tired, taking a break"
        # Request the page
        response = requests.get(url = base_url,headers = headers)
        html_data = response.text
        # Extract the image entries
        selector = parsel.Selector(html_data)
        result_list = selector.xpath('//span[@class="img_block_big"]')
        for result in result_list:
            image_url = result.xpath('./a/picture/source/img/@src').extract_first()
            image_id = result.xpath('./a/picture/source/img/@id').extract_first()
            img_url = 'https:' + image_url # build the full URL by hand
            all_title = image_id + '.' + img_url.split('.')[-1]
            img_data = requests.get(url = img_url,headers = headers).content
            # Save the image
            try:
                with open('image\\' + all_title, mode='wb') as f:
                    print('保存成功:', image_id)  # "Saved:"
                    f.write(img_data)
            except:
                pass
                print('保存失败:', image_id,'(•́へ•́╬)')  # "Save failed:"

Error output:

    C:\Users\Administrator\AppData\Local\Programs\Python\Python36\python.exe E:/python_fruit/滑稽图/爬虫.py
    =================/正在保存第0页数据=================
    保存成功: common_preview_img_655082
    保存成功: common_preview_img_655084
    保存成功: common_preview_img_655085
    保存成功: common_preview_img_654687
    保存成功: common_preview_img_655061
    保存成功: common_preview_img_655062
    保存成功: common_preview_img_655063
    保存成功: common_preview_img_655064
    保存成功: common_preview_img_654930
    保存成功: common_preview_img_654929
    保存成功: common_preview_img_654927
    保存成功: common_preview_img_654850
    保存成功: common_preview_img_654926
    Traceback (most recent call last):
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\urllib3\contrib\pyopenssl.py", line 488, in wrap_socket
        cnx.do_handshake()
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\OpenSSL\SSL.py", line 1934, in do_handshake
        self._raise_ssl_error(self._ssl, result)
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\OpenSSL\SSL.py", line 1663, in _raise_ssl_error
        raise SysCallError(errno, errorcode.get(errno))
    OpenSSL.SSL.SysCallError: (10054, 'WSAECONNRESET')

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\urllib3\connectionpool.py", line 677, in urlopen
        chunked=chunked,
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\urllib3\connectionpool.py", line 381, in _make_request
        self._validate_conn(conn)
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\urllib3\connectionpool.py", line 976, in _validate_conn
        conn.connect()
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\urllib3\connection.py", line 370, in connect
        ssl_context=context,
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\urllib3\util\ssl_.py", line 377, in ssl_wrap_socket
        return context.wrap_socket(sock, server_hostname=server_hostname)
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\urllib3\contrib\pyopenssl.py", line 494, in wrap_socket
        raise ssl.SSLError("bad handshake: %r" % e)
    ssl.SSLError: ("bad handshake: SysCallError(10054, 'WSAECONNRESET')",)

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\requests\adapters.py", line 449, in send
        timeout=timeout
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\urllib3\connectionpool.py", line 725, in urlopen
        method, url, error=e, _pool=self, _stacktrace=sys.exc_info()[2]
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\urllib3\util\retry.py", line 439, in increment
        raise MaxRetryError(_pool, url, error or ResponseError(cause))
    urllib3.exceptions.MaxRetryError: HTTPSConnectionPool(host='cdn.anime-pictures.net', port=443): Max retries exceeded with url: /jvwall_images/956/9566c4f4db6d8c928273bc192fae2a40_cp.jpg (Caused by SSLError(SSLError("bad handshake: SysCallError(10054, 'WSAECONNRESET')",),))

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "E:/python_fruit/滑稽图/爬虫.py", line 44, in <module>
        img_data = requests.get(url = img_url,headers = headers).content
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\requests\api.py", line 76, in get
        return request('get', url, params=params, **kwargs)
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\requests\api.py", line 61, in request
        return session.request(method=method, url=url, **kwargs)
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\requests\sessions.py", line 530, in request
        resp = self.send(prep, **send_kwargs)
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\requests\sessions.py", line 643, in send
        r = adapter.send(request, **kwargs)
      File "C:\Users\Administrator\AppData\Local\Programs\Python\Python36\lib\site-packages\requests\adapters.py", line 514, in send
        raise SSLError(e, request=request)
    requests.exceptions.SSLError: HTTPSConnectionPool(host='cdn.anime-pictures.net', port=443): Max retries exceeded with url: /jvwall_images/956/9566c4f4db6d8c928273bc192fae2a40_cp.jpg (Caused by SSLError(SSLError("bad handshake: SysCallError(10054, 'WSAECONNRESET')",),))

    Process finished with exit code 1

Multithreaded version (the threading part isn't written yet, so for now I'm testing the function-based version in a single thread):

    # Imports
    import requests
    import time
    import os
    import threading
    import parsel

    if not os.path.exists('image'):
        os.mkdir('image')
    base_url = 'https://anime-pictures.net/pictures/view_posts/0?lang=en'
    headers = {
        'User-Agent':
            'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36'
    }
    cookie = {
        'GA1.2.1560346118.1594984735'
    }

    def get(url,headers):
        '''Request the page'''
        response = requests.get(url,headers)
        html_data = response.text
        return html_data

    def parsel_data(html_data):
        '''Extract the image entries'''
        selector = parsel.Selector(html_data)
        result_list = selector.xpath('//span[@class="img_block_big"]')
        for result in result_list:
            image_url = result.xpath('./a/picture/source/img/@src').extract_first()
            image_id = result.xpath('./a/picture/source/img/@id').extract_first()
            img_url = 'https:' + image_url # build the full URL by hand
            all_title = img_url
            img_data = requests.get(url = all_title,headers = headers).content
            yield all_title,image_id,img_data

    def save(all_title,image_id,img_data):
        '''Save the data'''
        with open('image\\' + all_title, mode='wb') as f:
            print('保存成功:', image_id)  # "Saved:"
            #f.write(img_data)
        #except:
            #pass
            #print('保存失败:', img_id,'(•́へ•́╬)')

    def sleep(time):
        '''Sleep'''
        time.sleep(time)

    if __name__ == '__main__':
        html_data = get(url=base_url, headers=headers)
        for image_data in parsel_data(html_data):
            all_title = image_data[0] # url https://xxxxxxx...
            img_id = image_data[1] # ID
            img_data = image_data[2] # image bytes
            #print(all_title,img_id,img_data)
            save(all_title = all_title, image_id = img_id, img_data = img_data)

Error output:

(I commented out the exception handling so the actual error would show up. With it uncommented, every image just prints 保存失败, "save failed".)

    C:\Users\Administrator\AppData\Local\Programs\Python\Python36\python.exe E:/python_fruit/滑稽图/爬虫_多线程.py
    Traceback (most recent call last):
      File "E:/python_fruit/滑稽图/爬虫_多线程.py", line 82, in <module>
        save(all_title = all_title, image_id = img_id, img_data = img_data)
      File "E:/python_fruit/滑稽图/爬虫_多线程.py", line 57, in save
        with open('image\\' + all_title, mode='wb') as f:
    OSError: [Errno 22] Invalid argument: 'image\\https://cdn.anime-pictures.net/jvwall_images/000/00064ebef030944d326648e00ba8aa07_cp.png'

    Process finished with exit code 1

Also, when you help me fix these bugs, please keep some tissues handy so you don't lose too much blood.

Finally, thanks to everyone on the forum for the help.
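On the `OSError` from the function-based version: the path in the message starts with `image\\https://`, so the whole URL is being used as the file name, and Windows does not allow `:` in a file name. The single-threaded version avoids this by naming the file from the image id plus the URL's extension. Below is a minimal sketch of that naming step, assuming the same id-plus-extension scheme is wanted. `build_filename` and `build_save_path` are hypothetical helper names, and the example id and URL are borrowed from the logs above only for illustration, so they are not necessarily a real pair.

```python
import os
from urllib.parse import urlsplit


def build_filename(image_id, img_url):
    """Return '<image_id><extension>', taking the extension from the URL path."""
    # urlsplit().path drops the scheme, host and any query string;
    # os.path.splitext keeps the leading dot of the extension.
    extension = os.path.splitext(urlsplit(img_url).path)[1]
    return image_id + extension


def build_save_path(image_id, img_url, folder='image'):
    """Join the output folder and the generated file name."""
    return os.path.join(folder, build_filename(image_id, img_url))


# Illustrative values only (taken from the logs in this thread).
example_id = 'common_preview_img_655082'
example_url = 'https://cdn.anime-pictures.net/jvwall_images/000/00064ebef030944d326648e00ba8aa07_cp.png'
print(build_filename(example_id, example_url))  # common_preview_img_655082.png
```

With something like this, `save` would open `build_save_path(image_id, all_title)` instead of `'image\\' + all_title`.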

This post was last edited by 1q23w31 on 2020-8-7 07:11

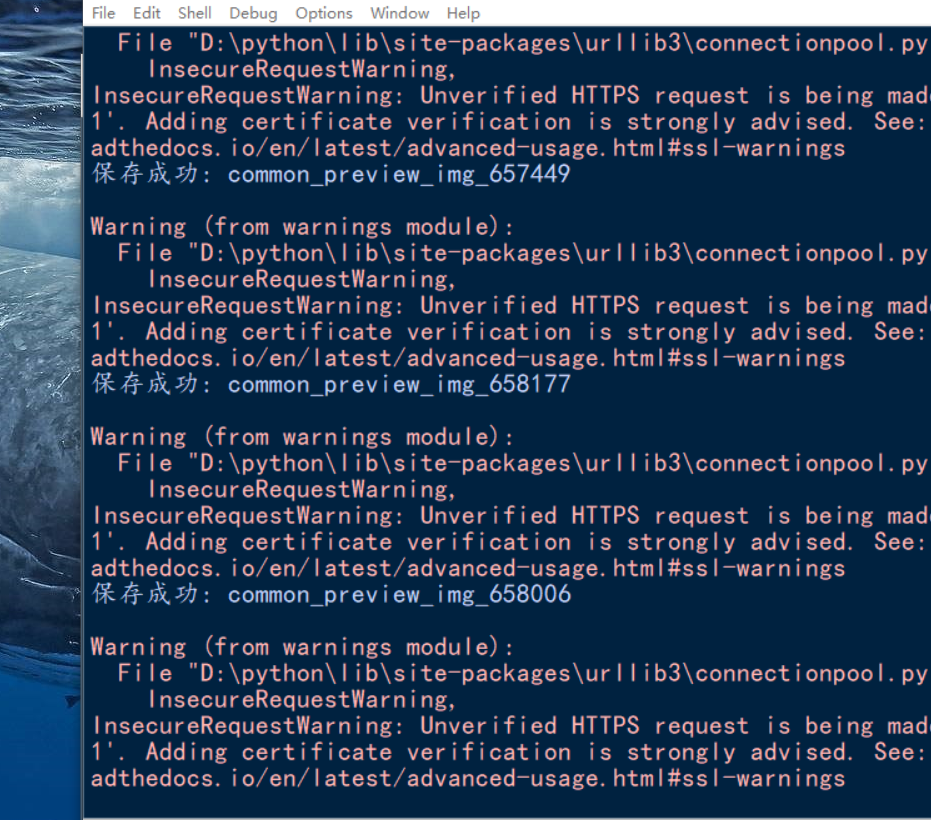

    # Imports
    import requests
    import parsel
    import os
    import time

    # Setup: make sure the output folder exists
    if not os.path.exists('image'):
        os.mkdir('image')
    headers = {
        'User-Agent':
            'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36'
    }

    # Crawler
    for page in range(0,6500):
        # Prepare the URL and headers
        print("=================/正在保存第{}页数据=================".format(page))  # "Saving page {}"
        base_url = 'https://anime-pictures.net/pictures/view_posts/{}?lang=en'.format(page)
        if page>0:
            time.sleep(10)
            print('太累了,休息下|・ω・` )')  # "Too tired, taking a break"
        # Request the page (certificate verification turned off)
        response = requests.get(url = base_url,headers = headers,verify = False)
        html_data = response.text
        # Extract the image entries
        selector = parsel.Selector(html_data)
        result_list = selector.xpath('//span[@class="img_block_big"]')
        for result in result_list:
            image_url = result.xpath('./a/picture/source/img/@src').extract_first()
            image_id = result.xpath('./a/picture/source/img/@id').extract_first()
            img_url = 'https:' + image_url # build the full URL by hand
            all_title = image_id + '.' + img_url.split('.')[-1]
            img_data = requests.get(url = img_url,headers = headers,verify = False).content
            # Save the image
            try:
                with open('image\\' + all_title, mode='wb') as f:
                    print('保存成功:', image_id)  # "Saved:"
                    f.write(img_data)
            except:
                pass
                print('保存失败:', image_id,'(•́へ•́╬)')  # "Save failed:"
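One thing the code above leaves unchanged: the image download call sits outside the `try` block, so if the connection is reset on any single image, the exception still ends the whole run. A possible complement is to retry the download a few times before giving up. This is only a sketch under assumptions: `with_retries` is a hypothetical helper, not part of `requests`, and `flaky_download` is a stand-in that simulates a failing request so the example runs without network access.

```python
import time


def with_retries(action, attempts=3, delay=2.0, exceptions=(Exception,)):
    """Call action() until it succeeds or attempts run out; re-raise the last error."""
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except exceptions:
            if attempt == attempts:
                raise  # out of attempts: let the caller see the error
            time.sleep(delay * attempt)  # wait a little longer after each failure


# Stand-in for a flaky download: fails twice, then returns some bytes.
calls = {'count': 0}


def flaky_download():
    calls['count'] += 1
    if calls['count'] < 3:
        raise ConnectionError('simulated connection reset')
    return b'image bytes'


data = with_retries(flaky_download, attempts=3, delay=0, exceptions=(ConnectionError,))
print(data, calls['count'])  # b'image bytes' 3
```

In the crawler, the call might look like `with_retries(lambda: requests.get(img_url, headers=headers, timeout=10).content, exceptions=(requests.exceptions.RequestException,))`, placed inside a `try` so that one image failing for good only skips that image.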


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
