
Last edited by fytfytf on 2020-7-27 16:02

    import requests
    import bs4
    import pickle
    from openpyxl import Workbook

    def open_url(url,keyword,page):
        # Sort orders requested for every results page
        order=['click','pubdate','dm','stow']
        res=[]
        res_all=[]
        head={'user-agent':'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36 LBBROWSER'}
        for j in range(page):
            page_now=j+1
            for i in order:
                params={'keyword':keyword,'order':i,'page':page_now}
                res.append(requests.get(url,params=params,headers=head))
            res_all.append(res)
        return res_all

    def find_data(res_all):
        wb=Workbook()
        wb.guess_type=True
        wa=wb.active
        num=0
        for i in res_all:
            num+=1
            # Header row: title, views, danmaku (bullet comments), upload time, page number
            wa.append(['标题','观看人数','弹幕','上传时间','页数'])
            for k in i:
                soup=bs4.BeautifulSoup(k.text,'lxml')
                data_list=soup.find_all('li',class_='video-item matrix')
                for each in data_list:
                    title=each.a['title']
                    guankan=each.find('span',title='观看').text    # view count
                    danmu=each.find('span',title='弹幕').text      # danmaku count
                    time=each.find('span',title='上传时间').text   # upload time
                    wa.append([title,guankan,danmu,time,num])
        wb.save('bilibili爬取.xlsx')

    def main():
        keyword=input('请输入搜索词:')    # prompt: enter the search keyword
        page=int(input('请输入页数:'))   # prompt: enter the number of pages
        url='https://search.bilibili.com/all?'
        res_all=open_url(url,keyword,page)
        data_list=find_data(res_all)

    if __name__=='__main__':
        main()

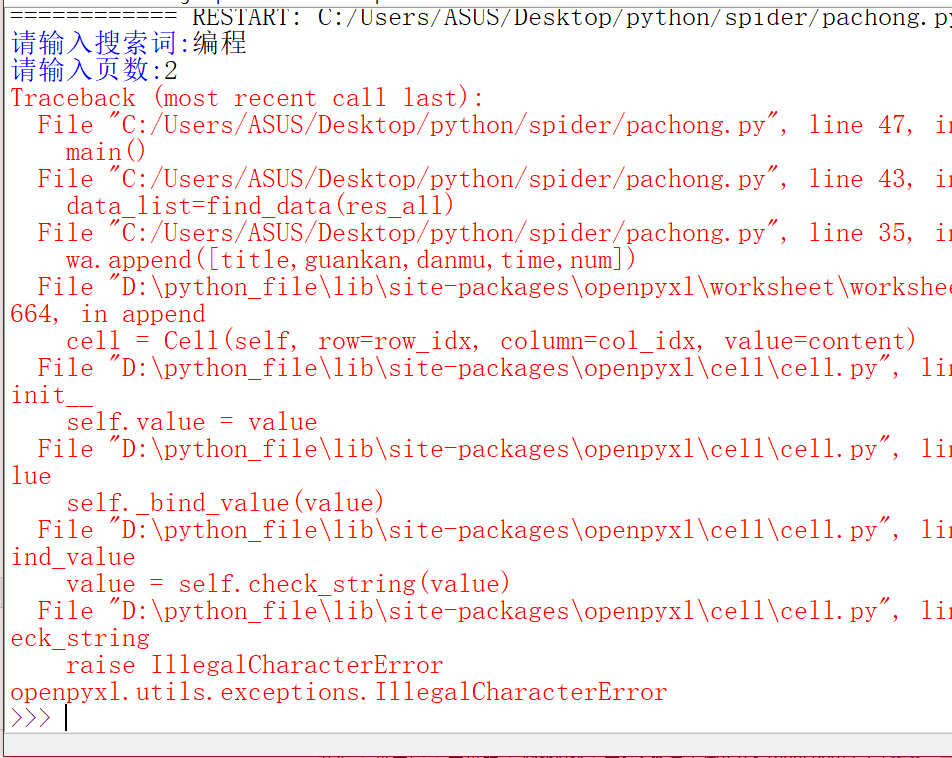

I want to save data from bilibili to an xlsx file, but the script raises an error. How can I fix this error? Any help is appreciated.
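The traceback is only visible in the screenshot, so the following is not a diagnosis of that error. One thing that can be read from the code itself: assuming `res_all.append(res)` runs once per page (the page-number column written in `find_data` suggests so), `res` is created only once, before the page loop, so every entry of `res_all` is the same growing list and each page's rows would be written repeatedly. Below is a minimal sketch of per-page grouping. `collect_pages` and its `fetch` parameter are hypothetical names introduced here so the loop can be tried without network access; they are not part of the original post.

```python
def collect_pages(fetch, keyword, page_count,
                  orders=('click', 'pubdate', 'dm', 'stow')):
    """Return one list of results per page.

    `fetch` is a hypothetical stand-in for the HTTP call: it receives the
    query parameters as a dict and returns whatever the request returns.
    """
    res_all = []
    for page_now in range(1, page_count + 1):
        res = []  # fresh list for every page, so pages do not share results
        for order in orders:
            params = {'keyword': keyword, 'order': order, 'page': page_now}
            res.append(fetch(params))
        res_all.append(res)
    return res_all


if __name__ == '__main__':
    # Fake fetch that just echoes the sort order and page number.
    pages = collect_pages(lambda p: (p['order'], p['page']), 'python', 2)
    for number, page in enumerate(pages, start=1):
        print(number, page)
```

In the original script, `fetch` would correspond to something like `lambda params: requests.get(url, params=params, headers=head)`.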


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
