
Last edited by L嘉 on 2020-8-4 at 11:20.

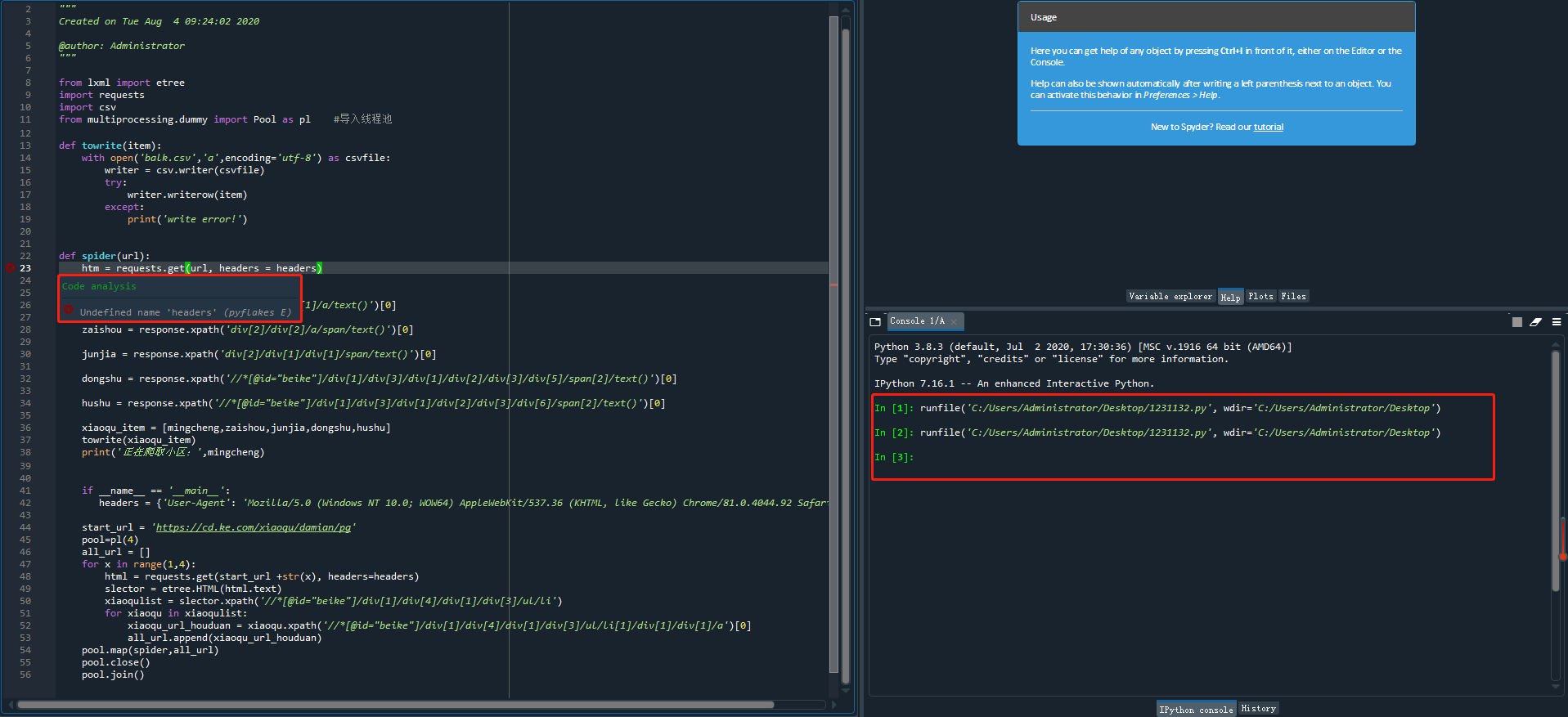

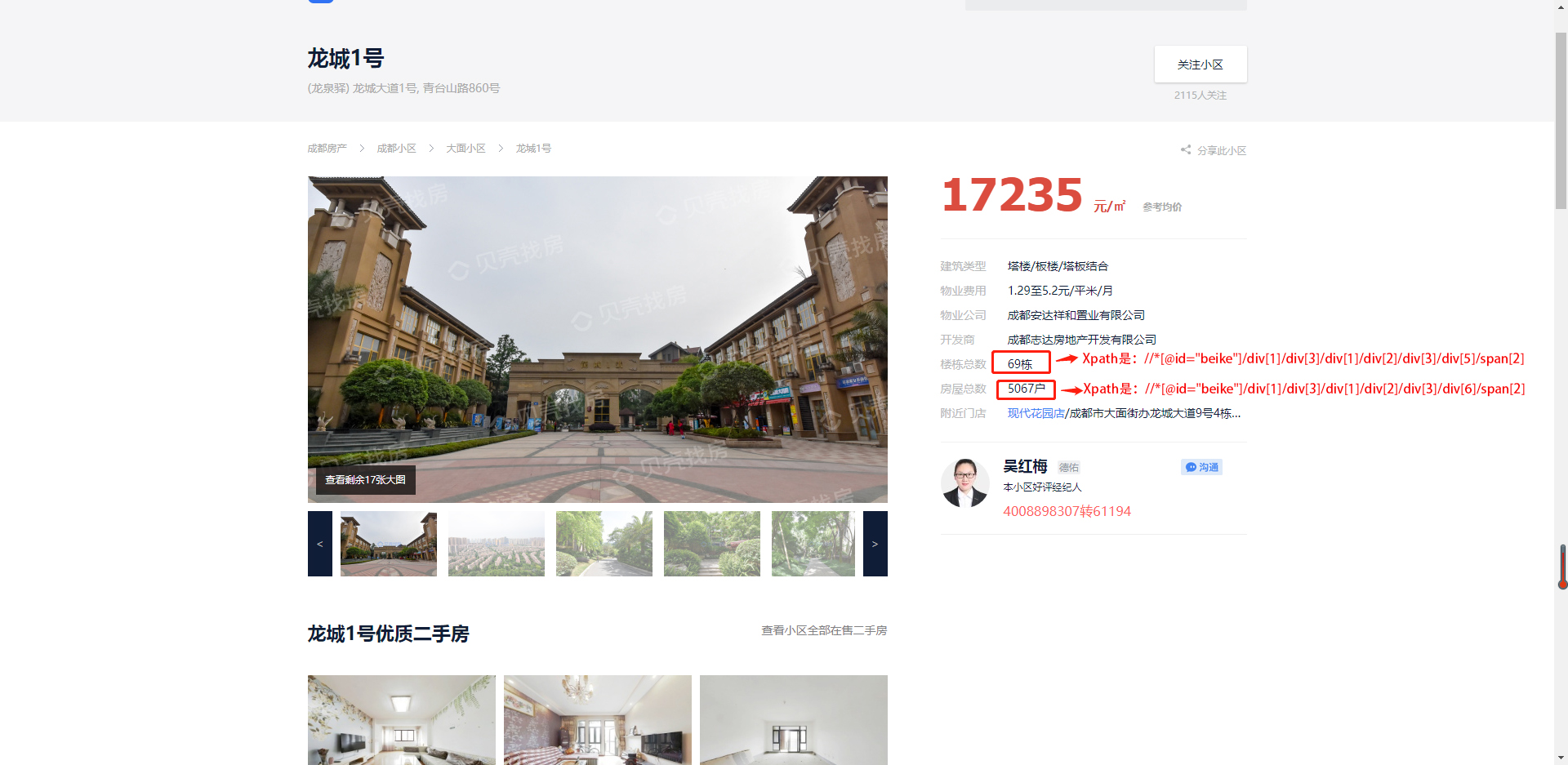

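Right now I can only scrape the titles and similar fields from the listing page. How should I write the code to scrape the content of each sub-page (the estate's detail page)? As the screenshots show, I want the number of buildings (栋数) and the number of households (户数) from each sub-page, but when I run the code I wrote, nothing happens. What is going wrong?

The code is at the end: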

```python
# -*- coding: utf-8 -*-
"""
Created on Tue Aug 4 09:24:02 2020

@author: Administrator
"""
from lxml import etree
import requests
import csv
from multiprocessing.dummy import Pool as pl  # import the thread pool


def towrite(item):
    with open('balk.csv', 'a', encoding='utf-8') as csvfile:
        writer = csv.writer(csvfile)
        try:
            writer.writerow(item)
        except:
            print('write error!')


def spider(url):
    htm = requests.get(url, headers=headers)
    response = etree.HTML(htm.text)

    mingcheng = response.xpath('div[1]/div[1]/a/text()')[0]

    zaishou = response.xpath('div[2]/div[2]/a/span/text()')[0]

    junjia = response.xpath('div[2]/div[1]/div[1]/span/text()')[0]

    dongshu = response.xpath('//*[@id="beike"]/div[1]/div[3]/div[1]/div[2]/div[3]/div[5]/span[2]/text()')[0]

    hushu = response.xpath('//*[@id="beike"]/div[1]/div[3]/div[1]/div[2]/div[3]/div[6]/span[2]/text()')[0]

    xiaoqu_item = [mingcheng, zaishou, junjia, dongshu, hushu]
    towrite(xiaoqu_item)
    print('Scraping estate:', mingcheng)


if __name__ == '__main__':
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.92 Safari/537.3'}

    start_url = 'https://cd.ke.com/xiaoqu/damian/pg'
    pool = pl(4)
    all_url = []
    for x in range(1, 4):
        html = requests.get(start_url + str(x), headers=headers)
        slector = etree.HTML(html.text)
        xiaoqulist = slector.xpath('//*[@id="beike"]/div[1]/div[4]/div[1]/div[3]/ul/li')
        for xiaoqu in xiaoqulist:
            xiaoqu_url_houduan = xiaoqu.xpath('//*[@id="beike"]/div[1]/div[4]/div[1]/div[3]/ul/li[1]/div[1]/div[1]/a')[0]
            all_url.append(xiaoqu_url_houduan)
    pool.map(spider, all_url)
    pool.close()
    pool.join()
```
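One likely problem in the code above is the XPath inside the `for xiaoqu in xiaoqulist:` loop. An expression that starts with `//` is evaluated from the document root even when it is called on an element, so `xiaoqu.xpath('//...li[1]/...a')[0]` returns the same first `<a>` element on every iteration, and that is an element object rather than a URL string that `requests.get` can fetch. The minimal sketch below shows the difference on a small, made-up list page; the markup and `example.com` URLs are hypothetical (not Beike's real HTML), and it needs only `lxml`, which the question already uses.

```python
from lxml import etree

# Hypothetical, simplified list-page markup with two listings.
LIST_HTML = """
<ul class="listContent">
  <li><div class="title"><a href="https://example.com/xiaoqu/1/">Estate A</a></div></li>
  <li><div class="title"><a href="https://example.com/xiaoqu/2/">Estate B</a></div></li>
</ul>
"""

tree = etree.HTML(LIST_HTML)

wrong, right = [], []
for li in tree.xpath('//ul/li'):
    # Leading '//' searches from the document root, not from this <li>,
    # so every iteration gets the first listing's <a> element (an Element, not a URL).
    wrong.append(li.xpath('//li[1]/div/a')[0])
    # Leading './' is relative to this <li>; '@href' returns the attribute value as a string.
    right.append(li.xpath('./div[@class="title"]/a/@href')[0])

print([w.get('href') for w in wrong])  # the same URL twice
print(right)                           # one URL per listing
```

Selecting `.../a/@href` directly, as the answer below does, gives a list of URL strings that can be passed straight to `pool.map`.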

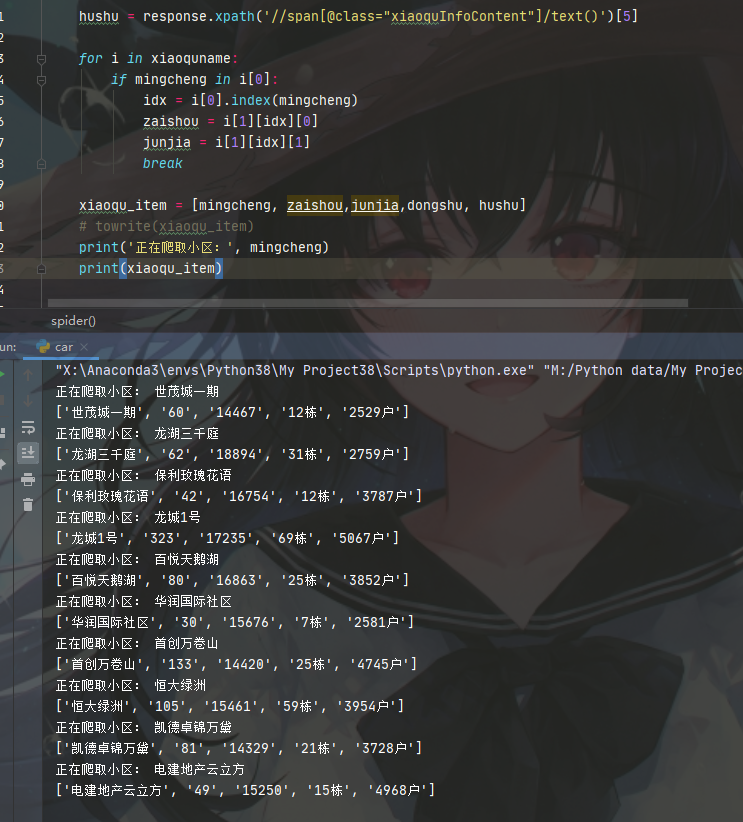

I've fixed it for you; this should do what you want:

```python
# -*- coding: utf-8 -*-
"""
Created on Tue Aug 4 09:24:02 2020

@author: Administrator
"""
from lxml import etree
import requests
import csv
import threading
from multiprocessing.dummy import Pool as pl  # thread pool (threads, not processes)

# All 4 worker threads append to the same file, so serialize the writes.
write_lock = threading.Lock()


def towrite(item):
    # The csv module expects files opened with newline=''; without it,
    # extra blank rows appear on Windows.
    with write_lock:
        with open('balk.csv', 'a', encoding='utf-8', newline='') as csvfile:
            writer = csv.writer(csvfile)
            try:
                writer.writerow(item)
            except Exception:
                print('write error!')


def spider(url):
    htm = requests.get(url, headers=headers)
    response = etree.HTML(htm.text)
    # Detail page: estate name, then building count and household count,
    # taken from the 5th and 6th "xiaoquInfoContent" spans (layout dependent).
    mingcheng = response.xpath('//div[@class="title"]/h1/text()')[0].strip()
    info = response.xpath('//span[@class="xiaoquInfoContent"]/text()')
    dongshu = info[4]
    hushu = info[5]
    # On-sale count and average price come from the list pages; match by name.
    zaishou, junjia = '', ''  # defaults, so a missing name cannot raise NameError
    for i in xiaoquname:
        if mingcheng in i[0]:
            idx = i[0].index(mingcheng)
            zaishou = i[1][idx][0]
            junjia = i[1][idx][1]
            break
    xiaoqu_item = [mingcheng, zaishou, junjia, dongshu, hushu]
    towrite(xiaoqu_item)
    print('Scraping estate:', mingcheng)


if __name__ == '__main__':
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.92 Safari/537.3'}
    start_url = 'https://cd.ke.com/xiaoqu/damian/pg'
    pool = pl(4)
    all_url = []
    xiaoquname = []  # one [names, [(on-sale count, avg price), ...]] entry per list page
    for x in range(1, 4):
        html = requests.get(start_url + str(x), headers=headers)
        slector = etree.HTML(html.text)
        # Collect the detail-page URLs (the href values), not the <a> elements.
        xiaoqulist = slector.xpath('//div[@class="info"]/div[@class="title"]/a/@href')
        name = slector.xpath("//a[@class='maidian-detail']/text()")
        jiage = slector.xpath("//div[@class='totalPrice']/span/text()")
        zaishous = slector.xpath("//a[@class='totalSellCount']/span/text()")
        xiaoquname.append([name, list(zip(zaishous, jiage))])
        for xiaoqu in xiaoqulist:
            all_url.append(xiaoqu)
    # Visit every detail page using 4 threads.
    pool.map(spider, all_url)
    pool.close()
    pool.join()
```
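The answer joins list-page data (on-sale count, average price) to each detail page by estate name, scanning `xiaoquname` for every page. A dictionary keyed by name does the same match in one lookup and makes the "not found" case explicit. This is a minimal standard-library sketch; `build_lookup` is a hypothetical helper name and the sample data is made up. Like the answer's `zip`, it assumes the name, count and price lists from one page line up item for item.

```python
def build_lookup(pages):
    """Map estate name -> (on-sale count, average price).

    `pages` holds one (names, on_sale_counts, avg_prices) tuple per list
    page, i.e. the three lists the answer extracts with XPath.
    """
    lookup = {}
    for names, counts, prices in pages:
        # zip pairs items by position and stops at the shortest list,
        # so it assumes the three lists are aligned item for item.
        for name, count, price in zip(names, counts, prices):
            lookup[name.strip()] = (count, price)
    return lookup


# Made-up sample data standing in for two scraped list pages.
pages = [
    (['Estate A', 'Estate B'], ['12', '3'], ['21000', '18500']),
    (['Estate C'], ['7'], ['16800']),
]
lookup = build_lookup(pages)

# Inside spider(): fall back to empty strings if the name is missing.
zaishou, junjia = lookup.get('Estate B', ('', ''))
print(zaishou, junjia)
```

Building `lookup` once in the main block and replacing the loop in `spider` with `zaishou, junjia = lookup.get(mingcheng, ('', ''))` would leave the rest of the answer's code unchanged.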


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
