
Bounty: 50 鱼币 (FishC coins)

This post was last edited by 景暄 on 2020-10-25 17:39.

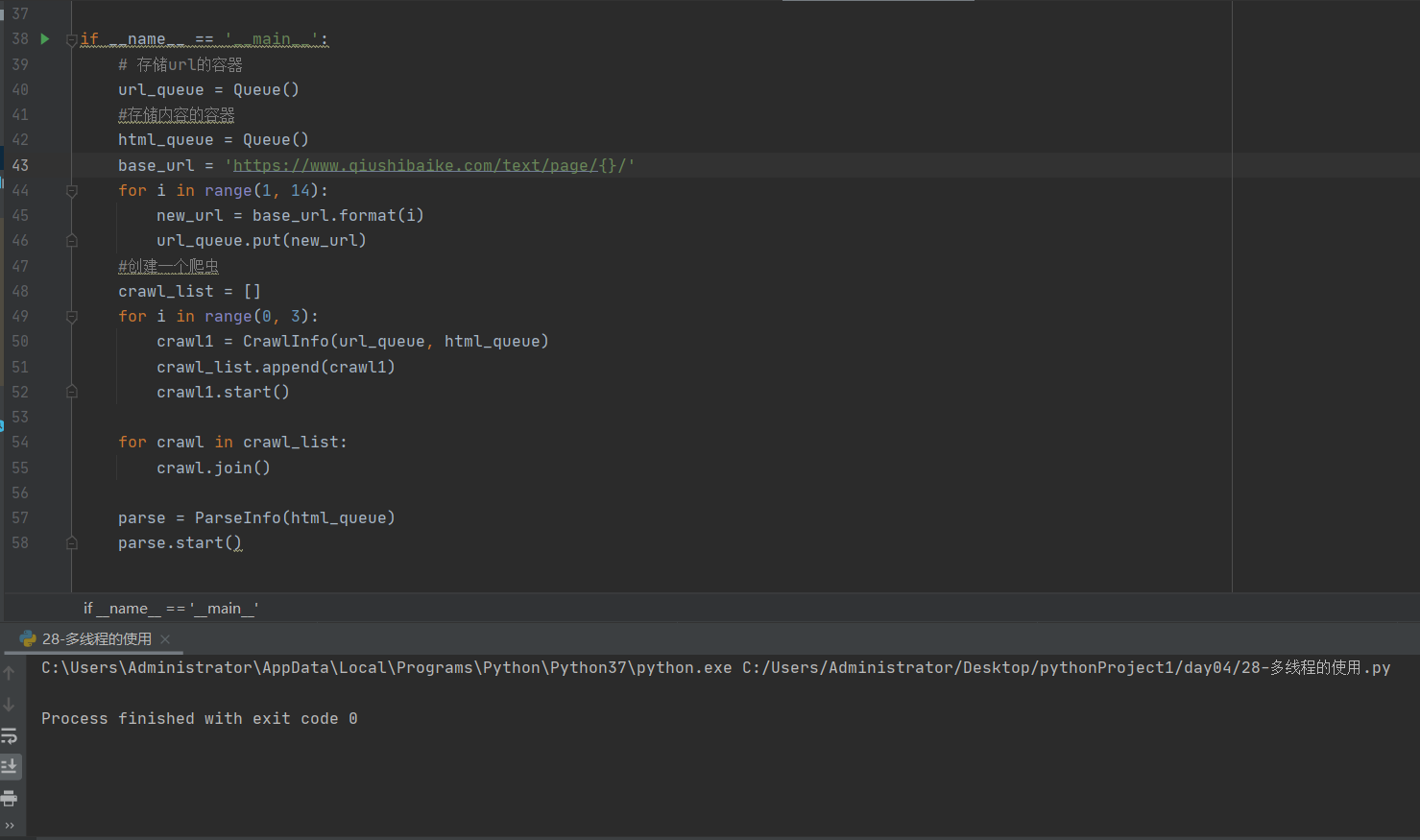

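I'm scraping jokes from Qiushibaike (糗事百科). I suspect my join() calls are set up wrong: every run finishes without an error, but nothing is printed.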

```python
from threading import Thread
from queue import Queue, Empty

from fake_useragent import UserAgent
import requests
from lxml import etree


# Crawler thread: downloads the pages whose URLs are in url_queue
class CrawlInfo(Thread):
    def __init__(self, url_queue, html_queue):
        Thread.__init__(self)
        self.url_queue = url_queue
        self.html_queue = html_queue

    def run(self):
        headers = {
            'User-Agent': UserAgent().random
        }
        while True:
            # get_nowait() raises Empty instead of blocking, so a thread cannot
            # hang if another crawler takes the last URL after an empty() check
            try:
                url = self.url_queue.get_nowait()
            except Empty:
                break
            response = requests.get(url, headers=headers)
            if response.status_code == 200:
                self.html_queue.put(response.text)
            else:
                # report failures instead of dropping them silently
                print('request failed:', url, response.status_code)


# Parser thread: extracts the joke text from each downloaded page
class ParseInfo(Thread):
    def __init__(self, html_queue):
        Thread.__init__(self)
        self.html_queue = html_queue

    def run(self):
        # safe here: there is one parser, started after the queue is filled
        while not self.html_queue.empty():
            e = etree.HTML(self.html_queue.get())
            # the tag must be spelled "span": a misspelled tag name makes
            # xpath() silently return an empty list, so nothing is printed
            span_contents = e.xpath('//div[@class="content"]/span[1]')
            for span in span_contents:
                info = span.xpath('string(.)')
                print(info)


if __name__ == '__main__':
    # queue holding the URLs to crawl
    url_queue = Queue()
    # queue holding the downloaded HTML
    html_queue = Queue()
    base_url = 'https://www.qiushibaike.com/text/page/{}/'
    for i in range(1, 14):
        new_url = base_url.format(i)
        url_queue.put(new_url)

    # create three crawler threads
    crawl_list = []
    for i in range(0, 3):
        crawl1 = CrawlInfo(url_queue, html_queue)
        crawl_list.append(crawl1)
        crawl1.start()
    # wait until every page has been downloaded
    for crawl in crawl_list:
        crawl.join()

    parse = ParseInfo(html_queue)
    parse.start()
    parse.join()
```
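The join() calls are not what hides the output: the parser only starts after every crawler has finished, and because it is a non-daemon thread it keeps running after the main thread reaches the end of the script. If you also want parsing to overlap with crawling, one common pattern is a sentinel value that tells the consumer to stop. Below is a minimal standard-library sketch of that pattern; `fake_fetch` is a hypothetical offline stand-in for `requests.get(url).text`, and `crawl_worker`, `parse_worker` and `run_pipeline` are illustrative names, not part of the original code.

```python
import queue
import threading

SENTINEL = None  # marks "no more pages" for the parser


def fake_fetch(url):
    # Hypothetical stand-in for requests.get(url).text so the sketch runs offline
    return f"<html>{url}</html>"


def crawl_worker(url_q, html_q):
    # Take URLs without blocking; stop as soon as the queue is drained
    while True:
        try:
            url = url_q.get_nowait()
        except queue.Empty:
            return
        html_q.put(fake_fetch(url))


def parse_worker(html_q, results):
    # Block on get() until the sentinel arrives, instead of polling empty()
    while True:
        html = html_q.get()
        if html is SENTINEL:
            return
        results.append(html)


def run_pipeline(urls, n_crawlers=3):
    url_q, html_q = queue.Queue(), queue.Queue()
    for u in urls:
        url_q.put(u)
    results = []
    parser = threading.Thread(target=parse_worker, args=(html_q, results))
    parser.start()  # parsing runs while pages are still being fetched
    crawlers = [threading.Thread(target=crawl_worker, args=(url_q, html_q))
                for _ in range(n_crawlers)]
    for t in crawlers:
        t.start()
    for t in crawlers:
        t.join()
    html_q.put(SENTINEL)  # every crawler is done: tell the parser to stop
    parser.join()
    return results


if __name__ == "__main__":
    pages = run_pipeline([f"https://example.com/page/{i}/" for i in range(1, 14)])
    print(len(pages))  # 13
```

The sentinel is only enqueued after all crawlers have joined, so it is guaranteed to sit behind every page in the FIFO queue.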

Multithreaded scraping of an "unmentionable" website

```python
import requests
import bs4
import time
import os
import random
from threading import Thread
import re


def down_jpg(url1, numm):
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36',
               'referer': newurl,  # global set in open_new()
               'cookie': 'add your own',
               "Connection": "close"
               }
    # named resp so the local variable does not shadow the re module
    resp = requests.get(url1, headers=headers)
    img = resp.content
    print('download jpg', url1)
    # relative paths resolve against the cwd that find_data() set via os.chdir()
    name = os.listdir()
    for i in name:
        if i != 'http.txt' and i[-3:-1] == 'tx':
            name1 = name[0] + str(numm) + '1'
            with open(name1 + '.jpg', 'wb') as f:
                f.write(img)


def jpg_list(listy):
    plist = []
    num = len(listy)
    for i in range(num):
        # one thread per image URL
        t = Thread(target=down_jpg, args=(listy[i], i))
        t.start()
        plist.append(t)
    for n, t in enumerate(plist):
        t.join()
        print('image', n, 'done')
    print('------over------')
    time.sleep(10)


def find_new(res2, newurl):
    soup = bs4.BeautifulSoup(res2.text, 'html.parser')
    aaa = soup.find_all(onload='thumbImg(this)')
    print('jpg...')
    with open('http.txt', 'w', encoding='utf-8') as f:
        f.write(soup.text)
    listy = []
    for i in aaa:
        x = i.get('src')
        listy.append(x)
    jpg_list(listy)


def open_new(ilist):
    global newurl
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36',
               'referer': url,  # global index-page URL set in __main__
               'cookie': 'add your own'
               }
    newurl = 'https://www.busfan.cloud/forum/' + ilist
    res2 = requests.get(newurl, headers=headers)
    code = res2.status_code
    if code == 200:
        print('sub-page connected', newurl)
        time.sleep(random.randint(1, 3))
        print('txt...')
        find_new(res2, newurl)
    else:
        print('could not open')


def find_data(res):
    print('parsing the index page for sub-page URLs and titles')
    zlist = []
    pathlist = []
    namelist = []
    soup = bs4.BeautifulSoup(res.text, 'html.parser')
    aaa = soup.find_all(onclick='atarget(this)')
    for new in aaa:
        ilist = new.get('href')  # sub-page URL
        name = new.text  # sub-page title
        print('found:', name)
        path = 'M:\\3\\' + name
        os.chdir('M:\\3')
        lj = os.listdir()
        for xi in lj:
            if name == xi:
                print('removing duplicate folder', xi)
                os.chdir(path)
                file = os.listdir()
                for zi in file:
                    os.remove(zi)
                os.chdir('M:\\3')
                os.rmdir(path)
        zlist.append(ilist)
        pathlist.append(path)
        namelist.append(name)  # keep each title, not just the last one
    num = len(zlist)
    for i in range(num):
        t = Thread(target=open_new, args=(zlist[i],))
        os.mkdir(pathlist[i])
        # os.chdir() is process-wide: if a download thread outlives the
        # 15 s join timeout below, its files land in the next folder
        os.chdir(pathlist[i])
        with open(namelist[i] + '.txt', 'w') as f:
            f.write(namelist[i])
        t.start()
        print(zlist[i])
        t.join(15)
    print('next page')


def open_url(url):
    time.sleep(1)
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36',
               'referer': 'https://www.busfan.cloud/',
               'cookie': 'add your own',
               "Connection": "close"
               }
    res = requests.get(url, headers=headers)
    code = res.status_code
    if code == 200:
        print('index page opened:', res)
        time.sleep(random.randint(1, 3))
        return res
    time.sleep(3)
    return None  # the caller must handle a failed request


def main():
    res = open_url(url)
    if res is not None:
        find_data(res)


if __name__ == '__main__':
    numx = 299
    while numx > 0:
        url = 'https://www.busfan.cloud/forum/forum.php?mod=forumdisplay&fid=36&typeid=5&typeid=5&filter=typeid&page=' + str(numx)
        numx = numx - 1
        print('---------------start--------------------', '\n', numx, '@@##')
        main()
```
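The script above starts one thread per image and relies on the process-wide working directory. A different technique that avoids both, sketched here under stated assumptions, is a bounded pool (`concurrent.futures.ThreadPoolExecutor`) whose workers write to explicit paths built with `os.path.join`. `fake_download` is a hypothetical offline stand-in for `requests.get(url).content`, and `save_image` and `download_all` are illustrative names, not functions from the original post.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor


def fake_download(url):
    # Hypothetical stand-in for requests.get(url).content so the sketch runs offline
    return url.encode("utf-8")


def save_image(url, index, folder, title):
    # Build an explicit path instead of depending on os.chdir()
    path = os.path.join(folder, f"{title}{index}.jpg")
    with open(path, "wb") as f:
        f.write(fake_download(url))
    return path


def download_all(urls, folder, title, max_workers=4):
    os.makedirs(folder, exist_ok=True)
    # At most max_workers downloads run at once, however many URLs there are
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(save_image, u, i, folder, title)
                   for i, u in enumerate(urls)]
        # result() waits for each task and re-raises any worker exception
        return [fut.result() for fut in futures]


if __name__ == "__main__":
    saved = download_all(["https://example.com/a.jpg", "https://example.com/b.jpg"],
                         tempfile.mkdtemp(), "post")
    print([os.path.basename(p) for p in saved])  # ['post0.jpg', 'post1.jpg']
```

Because the folder is passed in, several posts can be downloaded concurrently without one thread's os.chdir() redirecting another thread's files.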


As the screenshot shows, no results are printed.
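Empty output with no error is what a misspelled tag in an XPath expression produces: the query is valid, it just matches nothing, so the `for span in ...` loop never runs. The sketch below reproduces this with the standard library's limited XPath support in `xml.etree.ElementTree` rather than lxml; the `PAGE` markup is a hypothetical fragment shaped like the `div class="content"` structure the question targets, not the real page.

```python
import xml.etree.ElementTree as ET

# Hypothetical fragment mimicking the structure the question's XPath targets
PAGE = """<root>
  <div class="content"><span>first joke</span><span>extra</span></div>
  <div class="content"><span>second joke</span></div>
</root>"""

root = ET.fromstring(PAGE)

# Misspelled tag: no exception, just an empty list
typo = root.findall(".//div[@class='content']/sapn[1]")
# Correct tag: [1] picks the first <span> child of each matching div
fixed = root.findall(".//div[@class='content']/span[1]")

print(len(typo))                # 0
print([s.text for s in fixed])  # ['first joke', 'second joke']
```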


(Guangdong ICP filing No. 18085999-1 | Guangdong Public Security filing No. 44051102000585)
