```python
# coding:utf-8
from selenium import webdriver
from bs4 import BeautifulSoup

# Selenium 3 style; newer Selenium 4 releases removed executable_path in favour of service=Service(...)
driver = webdriver.Chrome(executable_path=r'E:\python\chromedriver.exe')
driver.get('http://www.toutiao.com')
wbdata = driver.page_source  # HTML of the page as rendered at this moment

soup = BeautifulSoup(wbdata, 'lxml')
news_list = soup.find_all('a', attrs={'target': '_blank', 'rel': 'noopener'})
for new in news_list:
    title = new.get('title')
    link = new.get('href')
    data = {'标题': title,  # "title"
            '链接': link}   # "link"
    print(data)
```

Here is the output:

```
E:\python\python.exe G:/Python/spider/sele.py

Process finished with exit code 0
```

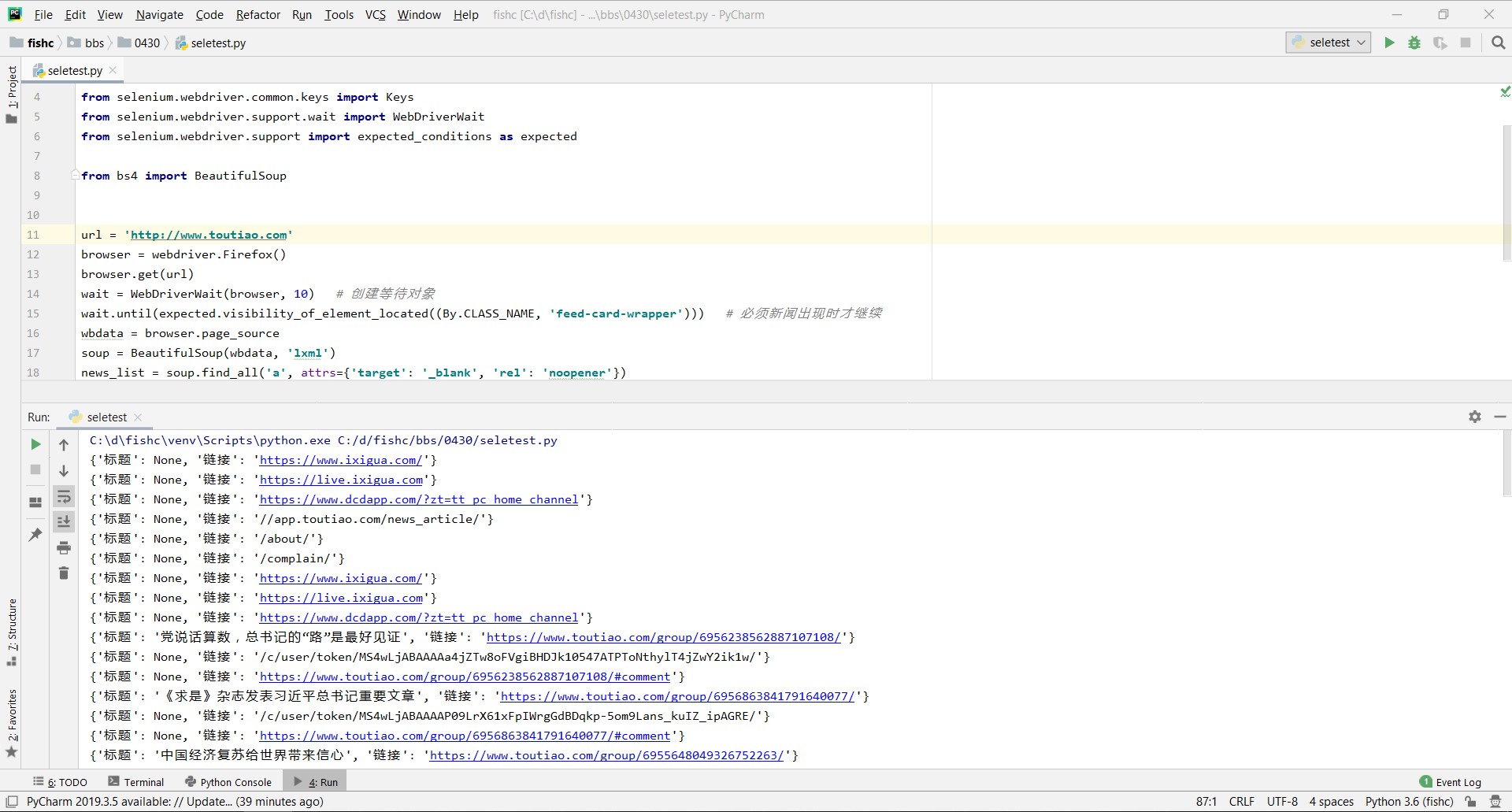

Does anyone know what is wrong with the code above? Why does it print nothing?

You have to wait for the resources to finish loading; Selenium isn't that fast. Use an explicit wait so that `page_source` is read only after the news items have appeared:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.wait import WebDriverWait
from selenium.webdriver.support import expected_conditions as expected
from bs4 import BeautifulSoup

url = 'http://www.toutiao.com'
browser = webdriver.Firefox()
browser.get(url)

wait = WebDriverWait(browser, 10)  # create a wait object with a 10-second timeout
# only continue once a news item is visible on the page
wait.until(expected.visibility_of_element_located((By.CLASS_NAME, 'feed-card-wrapper')))

wbdata = browser.page_source
soup = BeautifulSoup(wbdata, 'lxml')
news_list = soup.find_all('a', attrs={'target': '_blank', 'rel': 'noopener'})
for new in news_list:
    title = new.get('title')
    link = new.get('href')
    data = {'标题': title,  # "title"
            '链接': link}   # "link"
    print(data)

browser.quit()  # close the browser when done
```
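The explicit wait in the reply works by polling: `wait.until(...)` re-checks the condition until it returns something truthy or the 10-second timeout expires (Selenium's `WebDriverWait` polls every 0.5 s by default and raises its own `TimeoutException` on expiry). Below is a minimal, stdlib-only sketch of that polling idea with a simulated "page". The names `wait_until` and `feed_loaded` are hypothetical illustrations, not Selenium APIs, and this is not Selenium's actual implementation.

```python
import time


def wait_until(condition, timeout=10.0, poll_frequency=0.5):
    """Call `condition` repeatedly until it returns a truthy value.

    Hypothetical helper illustrating the explicit-wait idea; it is NOT
    Selenium's WebDriverWait, which raises TimeoutException instead.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # condition met: hand back whatever it returned
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_frequency)  # wait a bit before checking again


# Simulated page: the "feed" only shows up on the third check, standing in
# for content that appears some time after the initial page load.
checks = {"count": 0}


def feed_loaded():
    checks["count"] += 1
    return "feed-card-wrapper" if checks["count"] >= 3 else None


print(wait_until(feed_loaded, timeout=5, poll_frequency=0.01))  # feed-card-wrapper
```

Reading the page immediately after `get()` corresponds to calling `feed_loaded()` once and getting `None`, which is why the original script found no links.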

(Guangdong ICP filing 18085999-1 | Guangdong Public Network Security filing 44051102000585)
