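While working through the exercise for the first web-scraping lesson, I was asked to scrape the content of Douban's home page.

Instead, I ran into this error: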

- Traceback (most recent call last):

- File "D:/python/works/test.py", line 11, in <module>

- html=urr.urlopen(ret).read()

- File "D:\python\lib\urllib\request.py", line 214, in urlopen

- return opener.open(url, data, timeout)

- File "D:\python\lib\urllib\request.py", line 523, in open

- response = meth(req, response)

- File "D:\python\lib\urllib\request.py", line 632, in http_response

- response = self.parent.error(

- File "D:\python\lib\urllib\request.py", line 561, in error

- return self._call_chain(*args)

- File "D:\python\lib\urllib\request.py", line 494, in _call_chain

- result = func(*args)

- File "D:\python\lib\urllib\request.py", line 641, in http_error_default

- raise HTTPError(req.full_url, code, msg, hdrs, fp)

- urllib.error.HTTPError: HTTP Error 418:

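I searched online, and some people said that adding a User-Agent to the headers makes the request look like it comes from a browser.

I added one, but still got the same error.

Then I used the User-Agent given in someone else's post, and the scrape succeeded.

My question is this: my browser opens Douban without any problem, so why does the scraper fail when it uses my own browser's User-Agent?

Did I write something wrong?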

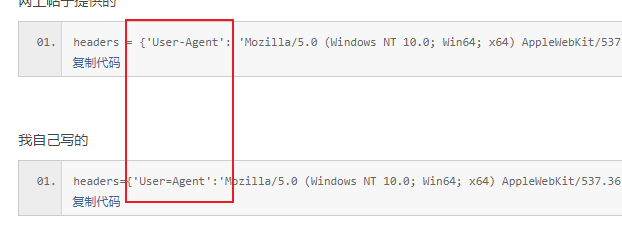

The one from the online post:

- headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36'}

The one I wrote myself:

- headers={'User=Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.101 Safari/537.36 Edg/91.0.864.48'}
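One difference between the two dictionaries shows up without sending any request: the key in my version is spelled `User=Agent` (with `=`), while the working one uses `User-Agent`. The following is a minimal sketch for checking this, on the assumption that the misspelled key is what matters rather than the Edge string itself. The names `UA`, `good`, and `bad` are only for illustration.

```python
import urllib.request as urr

# The Edge User-Agent string from my own headers line.
UA = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
      '(KHTML, like Gecko) Chrome/91.0.4472.101 Safari/537.36 Edg/91.0.864.48')

# Building a Request does not touch the network, so this is safe to run offline.
good = urr.Request('https://www.douban.com', headers={'User-Agent': UA})
bad = urr.Request('https://www.douban.com', headers={'User=Agent': UA})

# Request normalizes header names with str.capitalize(),
# so the stored key is 'User-agent'.
print(good.has_header('User-agent'))  # True
print(bad.has_header('User-agent'))   # False
```

When a request has no `User-agent` header, `urlopen` adds its default `Python-urllib/3.x` value, which would explain why the server still answered with 418 even though the browser string looked correct.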

Full program:

- import urllib.request as urr

- import chardet as char

- with open('urls.txt') as urls:

- num=0

- for urllink in urls.readlines():

- print(urllink)

- num+=1

- #headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36'}

- headers={'User=Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.101 Safari/537.36 Edg/91.0.864.48'}

- ret=urr.Request(urllink,headers=headers)

- html=urr.urlopen(ret).read()

- urlcode=char.detect(html)['encoding']

- if urlcode=='GB2312':

- urlcode='GBK'

- html=html.decode(urlcode,'ignore')

- with open(f'url_{num}.txt','w',encoding=urlcode) as file:

- file.write(html)
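For reference, here is a hedged sketch of how the request part of the program might look with the header key spelled `User-Agent`. It is not a confirmed fix. The helper names `build_request` and `fetch` are hypothetical, the 10-second timeout is an arbitrary choice, and I assume each line of `urls.txt` holds exactly one URL.

```python
import urllib.error
import urllib.request as urr

# Assumption: any current browser User-Agent works; this is the Edge string
# from my own headers line.
USER_AGENT = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
              '(KHTML, like Gecko) Chrome/91.0.4472.101 Safari/537.36 Edg/91.0.864.48')


def build_request(line):
    """Turn one line of urls.txt into a Request with a browser User-Agent."""
    url = line.strip()  # readlines() keeps the trailing newline
    return urr.Request(url, headers={'User-Agent': USER_AGENT})


def fetch(line):
    """Return the page bytes, or None if the server answers with an HTTP error."""
    req = build_request(line)
    try:
        with urr.urlopen(req, timeout=10) as resp:
            return resp.read()
    except urllib.error.HTTPError as err:
        # err.code holds the status code (418 in the traceback above).
        print(f'{req.full_url} -> HTTP {err.code}')
        return None
```

The bytes returned by `fetch` could then go through the same `chardet` detection and decoding steps as in the program above.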

...

( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)