This is the code from 小甲鱼's answer.

The contents of urls.txt are:

http://www.baidu.com

http://www.zhihu.com

http://www.taobao.com

http://www.douban.com

- import urllib.request

- import chardet

- def main():

-     i = 0

-     with open("urls.txt", "r") as f:

-         # Read the URLs to visit.

-         # urls.txt holds one URL per line,

-         # so split on the newline character '\n'.

-         urls = f.read().splitlines()

-     for each_url in urls:

-         response = urllib.request.urlopen(each_url)

-         html = response.read()

-         # Detect the page encoding

-         encode = chardet.detect(html)['encoding']

-         if encode == 'GB2312':

-             encode = 'GBK'

-         i += 1

-         filename = "url_%d.txt" % i

-         with open(filename, "w", encoding=encode) as each_file:

-             each_file.write(html.decode(encode, "ignore"))

- if __name__ == "__main__":

-     main()

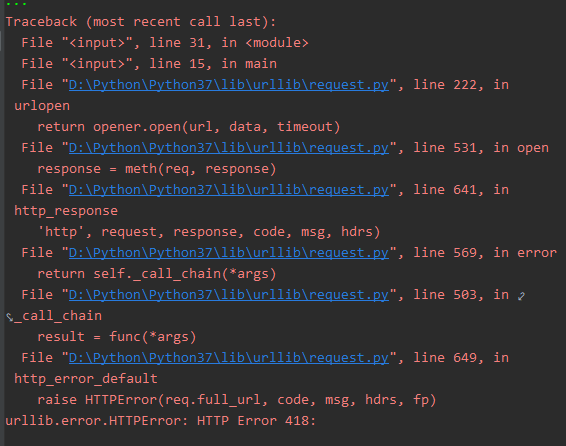

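Why do I get this error when I run it? My own solution raises the same error. Is this a problem with my computer's settings?

The error occurs when the script reads http://www.taobao.com. Could it be a problem on Taobao's side?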

This post was last edited by suchocolate on 2021-9-16 21:42

- import urllib.request

- import chardet

- def main():

-     i = 0

-     with open("urls.txt", "r") as f:

-         urls = f.read().splitlines()

-     opener = urllib.request.build_opener()  # create an opener object

-     opener.addheaders = [('User-agent', 'Mozilla')]  # override the default User-Agent; otherwise the site's anti-scraping check is triggered

-     for each_url in urls:

-         response = opener.open(each_url)

-         html = response.read()

-         encode = chardet.detect(html)['encoding']

-         if encode == 'GB2312':

-             encode = 'GBK'

-         i += 1

-         with open(f"url_{i}.txt", "w", encoding=encode) as f:

-             f.write(html.decode(encode, "ignore"))

- if __name__ == "__main__":

-     main()
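
To go with the User-Agent fix above, here is a minimal sketch of two small helpers that could make the loop more forgiving. It is only an illustration under some assumptions: the names `USER_AGENT`, `normalize_encoding` and `fetch` are hypothetical and appear nowhere in the thread, the `"utf-8"` fallback is my own choice for the case where detection returns `None`, and the 10-second timeout is arbitrary.

```python
import urllib.error
import urllib.request

# Hypothetical User-Agent value; the reply above uses plain 'Mozilla'.
USER_AGENT = "Mozilla/5.0"


def normalize_encoding(detected):
    """Map a detected encoding name to one that is safe to decode with."""
    if detected is None:
        # Detection can fail and return None; "utf-8" is an assumed fallback.
        return "utf-8"
    if detected.upper() == "GB2312":
        # GBK is a superset of GB2312, so it decodes more pages cleanly.
        return "GBK"
    return detected


def fetch(url, timeout=10):
    """Return the page body as bytes, or None if the request fails."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.read()
    except urllib.error.URLError as error:
        # HTTPError is a subclass of URLError, so HTTP status errors land here too.
        print(f"Skipping {url}: {error}")
        return None
```

Inside the loop, one could call `html = fetch(each_url)`, `continue` when it returns `None`, and then use `encode = normalize_encoding(chardet.detect(html)['encoding'])`. That way a single site that rejects the request does not stop the remaining URLs from being saved.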

( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
