This post was last edited by Handsome_zhou on 2022-10-11 10:09.

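A recurrent neural network (RNN) is a neural network for analyzing sequence data,

for example: 1) sentiment classification

2) machine translation

3) sequence labeling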

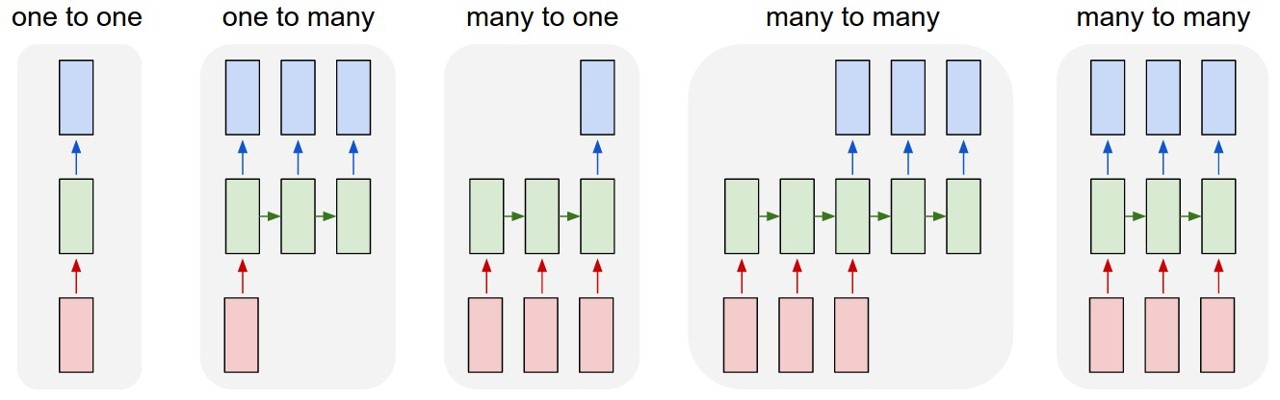

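The figure above shows five different RNN architectures, from left to right:

The first is one input (a single label) mapped to one output (a single label), the "one to one" pattern. The second is one input mapped to multiple outputs, the "one to many" pattern. This architecture is widely used for image captioning (generating a text description of an image): the input is an image and the output is a text sequence.

The third is multiple inputs mapped to one output, the "many to one" pattern. This architecture is widely used for text classification or video-clip classification: the input is a video or a text sequence, and the output is a class label.

The fourth is multiple inputs mapped to multiple outputs with an offset between them, the first kind of "many to many" pattern. This is the architecture of machine translation systems: the input is text in one language and the output is text in another language.

The fifth is multiple inputs mapped one-to-one to multiple outputs, the second kind of "many to many" pattern. This is the architecture used for sequence labeling tasks, which are common in NLP.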
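The difference between the "many to one" pattern and the aligned "many to many" pattern comes down to which hidden states are fed to the output layer. Here is a minimal PyTorch sketch of that idea. The layer sizes and the names `cls_head` and `tag_head` are illustrative assumptions, not part of the original post.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Assumed toy sizes, chosen only for illustration
batch, steps, n_in, n_hid = 4, 7, 5, 8

rnn = nn.RNN(input_size=n_in, hidden_size=n_hid, batch_first=True)
cls_head = nn.Linear(n_hid, 3)  # hypothetical head: 3 classes
tag_head = nn.Linear(n_hid, 6)  # hypothetical head: 6 tags

x = torch.rand(batch, steps, n_in)
out, h_n = rnn(x)  # out: (batch, steps, n_hid), h_n: (1, batch, n_hid)

# "many to one": only the last time step feeds the output layer
class_logits = cls_head(out[:, -1, :])  # (batch, 3)

# aligned "many to many": every time step feeds the output layer
tag_logits = tag_head(out)  # (batch, steps, 6)

print(class_logits.shape)
print(tag_logits.shape)
```

For a single-layer, unidirectional RNN, `out[:, -1, :]` holds the same values as `h_n[0]`, so either can be used for the "many to one" case.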

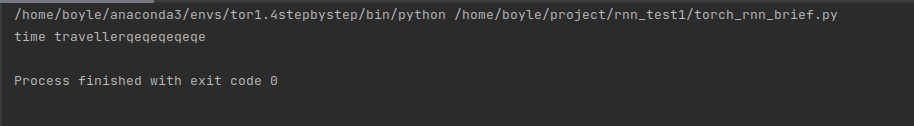

Small examples: next-character prediction on a text sequence, and time-series prediction:

Character prediction task

    import torch
    from torch import nn
    from torch.nn import functional as F
    from d2l import torch as d2l

    # Data
    batch_size, num_steps = 32, 35
    train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)

    # Hyperparameters
    num_hiddens = 256
    rnn_layer = nn.RNN(len(vocab), num_hiddens)

    # Shape check: the initial state is (num_layers, batch_size, num_hiddens)
    state = torch.zeros((1, batch_size, num_hiddens))
    X = torch.rand(size=(num_steps, batch_size, len(vocab)))
    Y, state_new = rnn_layer(X, state)

    class RNNModel(nn.Module):
        def __init__(self, rnn_layer, vocab_size, **kwargs):
            super(RNNModel, self).__init__(**kwargs)
            self.rnn = rnn_layer
            self.vocab_size = vocab_size
            self.num_hiddens = self.rnn.hidden_size
            # A bidirectional RNN doubles the feature size of its output
            if not self.rnn.bidirectional:
                self.num_directions = 1
                self.linear = nn.Linear(self.num_hiddens, self.vocab_size)
            else:
                self.num_directions = 2
                self.linear = nn.Linear(self.num_hiddens * 2, self.vocab_size)

        def forward(self, inputs, state):
            # inputs: (batch_size, num_steps) -> X: (num_steps, batch_size, vocab_size)
            X = F.one_hot(inputs.T.long(), self.vocab_size)
            X = X.to(torch.float32)
            Y, state = self.rnn(X, state)
            # Flatten Y to (num_steps * batch_size, features) before the output layer
            output = self.linear(Y.reshape((-1, Y.shape[-1])))
            return output, state

        def begin_state(self, device, batch_size=1):
            if not isinstance(self.rnn, nn.LSTM):
                # nn.RNN and nn.GRU use a single tensor as the hidden state
                return torch.zeros((self.num_directions * self.rnn.num_layers,
                                    batch_size, self.num_hiddens),
                                   device=device)
            else:
                # nn.LSTM uses a tuple: (hidden state, cell state)
                return (torch.zeros((self.num_directions * self.rnn.num_layers,
                                     batch_size, self.num_hiddens), device=device),
                        torch.zeros((self.num_directions * self.rnn.num_layers,
                                     batch_size, self.num_hiddens), device=device))

    device = d2l.try_gpu()
    net = RNNModel(rnn_layer, vocab_size=len(vocab))
    net = net.to(device)

    # net has not been trained here, so the 10 predicted characters are not meaningful
    result = d2l.predict_ch8('time traveller', 10, net, vocab, device)
    print(result)

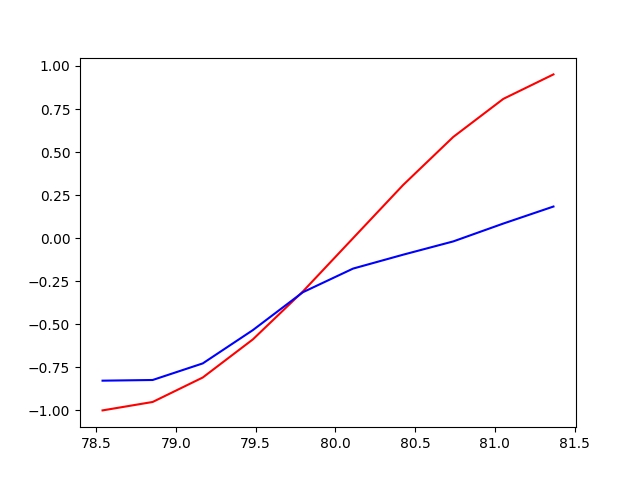
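To make the tensor shapes in `RNNModel.forward` concrete, here is a small self-contained sketch that does not need `d2l`. The vocabulary size of 28, the tiny batch, and the random token indices are assumptions for illustration only.

```python
import torch
from torch import nn
from torch.nn import functional as F

torch.manual_seed(0)

# Assumed sizes, for illustration only
vocab_size, num_hiddens = 28, 256
batch_size, num_steps = 2, 5

# A batch of token indices, shaped (batch_size, num_steps)
inputs = torch.randint(0, vocab_size, (batch_size, num_steps))

# Transpose so time comes first, then one-hot encode each index
X = F.one_hot(inputs.T.long(), vocab_size).to(torch.float32)

rnn_layer = nn.RNN(vocab_size, num_hiddens)
state = torch.zeros((1, batch_size, num_hiddens))
Y, state = rnn_layer(X, state)

# Flatten the time and batch dimensions, then map to one score per vocabulary entry
linear = nn.Linear(num_hiddens, vocab_size)
output = linear(Y.reshape((-1, Y.shape[-1])))

print(X.shape)       # (num_steps, batch_size, vocab_size)
print(Y.shape)       # (num_steps, batch_size, num_hiddens)
print(state.shape)   # (1, batch_size, num_hiddens)
print(output.shape)  # (num_steps * batch_size, vocab_size)
```

Each row of `output` is a vector of unnormalized scores over the vocabulary for one (time step, sample) pair.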

Time-series prediction task

    import torch
    from torch import nn
    import numpy as np
    import matplotlib.pyplot as plt

    TIME_STEP = 10   # rnn time step
    INPUT_SIZE = 1   # rnn input size
    LR = 0.02        # learning rate

    # Data
    # float32 so the arrays convert to torch FloatTensor; 100 evenly spaced points from 0 to 2*pi
    steps = np.linspace(0, np.pi*2, 100, dtype=np.float32)
    x_np = np.sin(steps)  # sin of the 100 points
    y_np = np.cos(steps)  # cos of the 100 points
    plt.plot(steps, y_np, 'r_', label='target (cos)')
    plt.plot(steps, x_np, 'b_', label='input (sin)')
    plt.legend(loc='best')
    plt.show()

    class RNN(nn.Module):  # build the RNN model
        def __init__(self):
            super(RNN, self).__init__()
            self.rnn = nn.RNN(
                input_size=INPUT_SIZE,  # each input is a 1-dimensional value
                hidden_size=32,         # the hidden state has 32 units
                num_layers=1,           # a single recurrent layer
                batch_first=True,       # the batch dimension comes first
            )
            self.out = nn.Linear(32, 1)  # output layer: linear map from 32 hidden units to 1 output

        # Forward pass
        def forward(self, x, h_state):
            # x (batch_size, time_step, input_size)
            # h_state (n_layers, batch, hidden_size)
            # r_out (batch, time_step, output_size), where output_size is hidden_size
            r_out, h_state = self.rnn(x, h_state)  # inputs: the previous memory and the new x
            outs = []
            for time_step in range(r_out.size(1)):
                # apply the output layer at every time step and collect the results
                outs.append(self.out(r_out[:, time_step, :]))
            return torch.stack(outs, dim=1), h_state  # stack the list into one tensor

    rnn = RNN()
    print(rnn)

    # Optimizer and loss function
    optimizer = torch.optim.Adam(rnn.parameters(), lr=LR)
    loss_func = nn.MSELoss()

    # Initial hidden state (None means all zeros)
    h_state = None

    plt.figure(1, figsize=(12, 5))
    plt.ion()

    # Training
    for step in range(100):
        start, end = step * np.pi, (step+1)*np.pi
        steps = np.linspace(start, end, TIME_STEP, dtype=np.float32, endpoint=False)  # take 10 points
        x_np = np.sin(steps)  # sin of the 10 points
        y_np = np.cos(steps)  # cos of the 10 points
        x = torch.from_numpy(x_np[np.newaxis, :, np.newaxis])  # add dims: (batch, time_step, input_size)
        y = torch.from_numpy(y_np[np.newaxis, :, np.newaxis])

        prediction, h_state = rnn(x, h_state)
        # Important: detach the hidden state so backprop does not reach into earlier iterations
        h_state = h_state.detach()

        loss = loss_func(prediction, y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # Plotting
        plt.plot(steps, y_np.flatten(), 'r-')
        plt.plot(steps, prediction.detach().numpy().flatten(), 'b-')
        plt.draw(); plt.pause(0.05)

    plt.ioff()
    plt.show()
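The training loop above cuts the hidden state out of the previous computation graph before each backward pass. Here is a minimal sketch of why that step matters. The tiny model, the placeholder loss, and the helper name `run_two_steps` are assumptions for illustration, not part of the original post.

```python
import torch
from torch import nn

torch.manual_seed(0)

rnn = nn.RNN(input_size=1, hidden_size=4, batch_first=True)
x = torch.rand(1, 10, 1)  # (batch, time_step, input_size)

def run_two_steps(detach):
    """Run two forward/backward passes, carrying the hidden state across them."""
    h = None
    losses = []
    for _ in range(2):
        out, h = rnn(x, h)
        if detach:
            # Cut the link to the graph of the previous iteration
            h = h.detach()
        loss = out.pow(2).mean()  # placeholder loss for the sketch
        rnn.zero_grad()
        loss.backward()
        losses.append(loss.item())
    return losses

# With detach, each backward pass stays within the current iteration
losses = run_two_steps(detach=True)

# Without detach, the second backward pass tries to walk back through the
# first iteration's graph, whose buffers were already freed
try:
    run_two_steps(detach=False)
    second_backward_failed = False
except RuntimeError:
    second_backward_failed = True

print(losses)
print(second_backward_failed)
```

Detaching keeps the values of the hidden state, so the network still carries memory from one segment to the next, but gradients only flow through the current segment.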

( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
