This post was last edited by underwood_yo on 2023-9-8 17:33

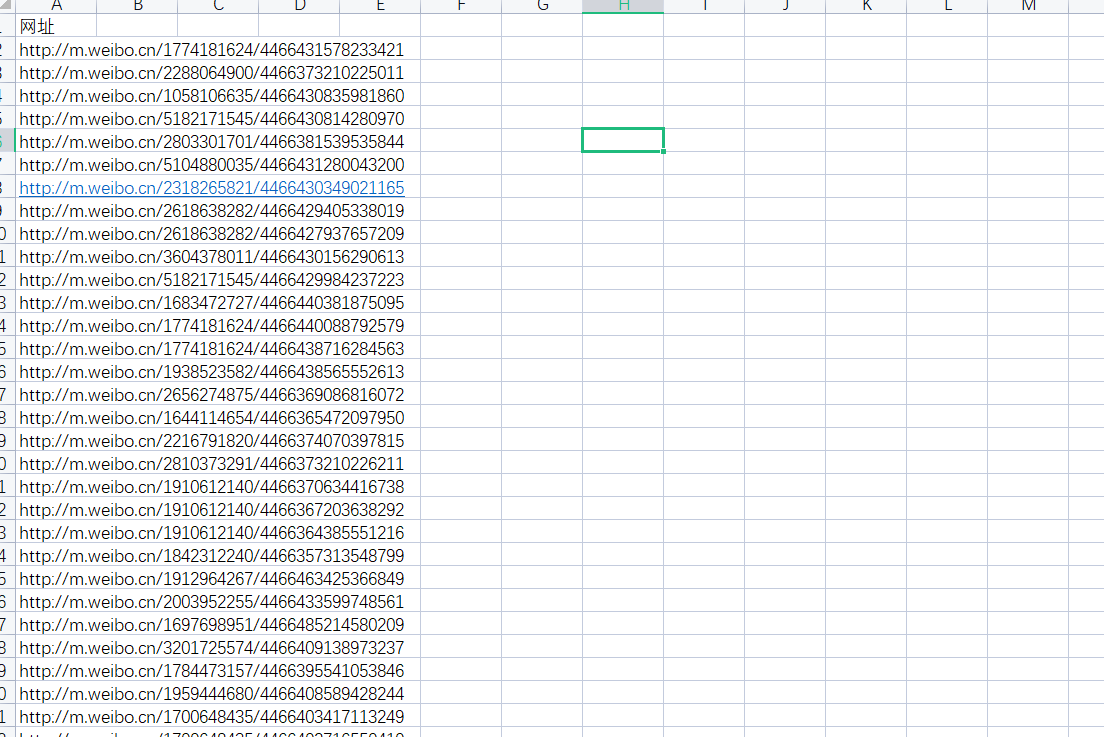

A classmate needed to scrape the comment content and timestamps from Weibo and gave me an Excel file containing the URLs to crawl:

    import re
    import time

    import pandas as pd
    from bs4 import BeautifulSoup
    from selenium import webdriver

    # Run Chrome headless so that no browser window opens.
    chrome_options = webdriver.ChromeOptions()
    chrome_options.add_argument('--headless')
    browser = webdriver.Chrome(options=chrome_options)

    start_time = time.time()


    def crawer(url):
        """Load the page in the browser and return its rendered HTML."""
        print('Start crawling ' + url)
        browser.get(url)
        time.sleep(1)  # give the page a moment to render
        return browser.page_source


    def execution_data(res):
        """Extract the post text and the created_at values from the page source."""
        bs4_res = BeautifulSoup(res, 'html.parser')
        text = bs4_res.select('#app > div.lite-page-wrap > div > div.main > div > article > div > div > div.weibo-text')[0].text
        created_at = '"created_at": "(.*?)"'
        time_ = re.findall(created_at, res, re.S)
        return text, time_


    # The Excel file lists the URLs to crawl in the '网址' (URL) column.
    data = pd.read_excel('wangzhi.xlsx')
    text_all = []
    time_all = []

    for i in range(data.shape[0]):  # cover every row, including the last one
        url = data['网址'].iloc[i]
        try:
            res = crawer(url)
            text, time_ = execution_data(res)  # parse each page only once
        except Exception:
            print('Problem with page ' + str(i + 1))
            print(url)
            # Keep empty placeholders so rows stay aligned with the input file.
            text, time_ = [], []
        text_all.append(text)
        time_all.append(time_)

    browser.quit()

    # Output columns: '文本' (text) and '时间' (time).
    context = pd.DataFrame({'文本': text_all, '时间': time_all})
    context.to_excel('微博正文.xlsx')
    print('Crawling finished')

    end_time = time.time()
    total_time = end_time - start_time
    print('All tasks finished, total time: ' + str(total_time))
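The script above gives up on a page after a single failed attempt. A possible refinement is to retry a few times before recording the page as failed. This is only a minimal sketch: `fetch_with_retry` and its `attempts` and `delay` parameters are hypothetical names that do not appear in the original script, and `fetch` stands for any function that takes a URL and returns the page source (such as `crawer`).

```python
import time


def fetch_with_retry(fetch, url, attempts=3, delay=1.0):
    """Call fetch(url) up to `attempts` times, pausing `delay` seconds between tries.

    Hypothetical helper: `fetch` is any callable that returns the page
    source for a URL and raises an exception on failure.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except Exception as exc:
            last_error = exc
            print(f"Attempt {attempt} failed for {url}: {exc}")
            if attempt < attempts:
                time.sleep(delay)
    # Every attempt failed: re-raise the last error so the caller can log it.
    raise last_error
```

In the main loop this could replace the direct call, for example `res = fetch_with_retry(crawer, url)`, while the existing `except` branch still records an empty placeholder when every attempt fails.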


Original URL data


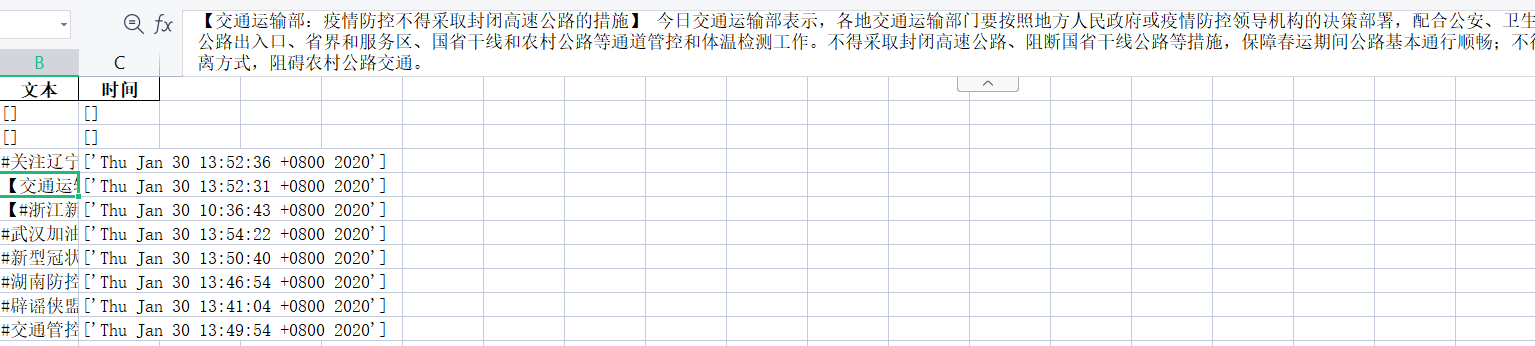

Sample results
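Because `re.findall` returns a list, each cell in the time column holds a list of raw strings rather than a single timestamp. If one parsed timestamp per post is preferred, something along these lines might work. It is a sketch under assumptions: `first_created_at` is a hypothetical helper, and the timestamp layout in `ASSUMED_FORMAT` (for example `Fri Sep 08 17:33:00 +0800 2023`) is an assumption that should be checked against the actual page source.

```python
import re
from datetime import datetime

# Same pattern as in the script: capture the value of each "created_at" field.
CREATED_AT_PATTERN = re.compile(r'"created_at": "(.*?)"', re.S)

# Assumed layout of the timestamp string; verify it against real pages.
ASSUMED_FORMAT = "%a %b %d %H:%M:%S %z %Y"


def first_created_at(page_source):
    """Return the first created_at value as a datetime, or None if absent or unparseable."""
    matches = CREATED_AT_PATTERN.findall(page_source)
    if not matches:
        return None
    try:
        return datetime.strptime(matches[0], ASSUMED_FORMAT)
    except ValueError:
        # The string did not follow the assumed layout.
        return None


sample = '{"created_at": "Fri Sep 08 17:33:00 +0800 2023", "id": "1"}'
parsed = first_created_at(sample)
print(parsed.isoformat() if parsed else "no timestamp found")
```

Returning `None` instead of raising keeps one bad page from stopping the whole run, which matches how the script handles other failures.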


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
