
```cuda
//************************** *********************
// 2024-3-17-
// ************************** *********************
#include <stdio.h>
#include <stdlib.h>
#include <cuda_runtime.h>
#include <device_launch_parameters.h>
#include <string.h>
//#include <device_functions.h>

// Reduction operating on global memory
__global__ void global_reduce_kernel(float* d_out, float* d_in)
{
    int myId = threadIdx.x + blockIdx.x * blockDim.x; // there are several blocks: position among all threads
    int tid = threadIdx.x; // position within the current block
    // work in global memory
    // the loop runs while s > 0; after each iteration s is shifted right by one bit
    // (i.e. halved), so the next iteration handles a smaller chunk of data
    for (unsigned int s = blockDim.x / 2; s > 0; s >>= 1)
    {
        if (tid < s)
        {
            d_in[myId] += d_in[myId + s];
        }
        __syncthreads(); // each block finishes this iteration before the next one starts
    }
    // what we want at the end is the sum of everything this block computed
    if (tid == 0)
    {
        d_out[blockIdx.x] = d_in[myId];
    }
}

// Reduction using shared memory
__global__ void share_reduce_kernel(float* d_out, float* d_in)
{
    extern __shared__ float sdata[]; // each block gets its own copy of this memory
    int myId = threadIdx.x + blockIdx.x * blockDim.x; // there are several blocks: position among all threads
    int tid = threadIdx.x; // position within the current block
    // copy the data from global memory into shared memory, so it no longer has to be
    // read from global memory; shared memory is faster to read than global memory
    sdata[tid] = d_in[myId];
    __syncthreads();
    for (unsigned int s = blockDim.x / 2; s > 0; s >>= 1)
    {
        if (tid < s)
        {
            d_in[myId] += d_in[myId + s];
        }
        __syncthreads(); // each block finishes this iteration before the next one starts
    }
    // what we want at the end is the sum of everything this block computed
    if (tid == 0)
    {
        d_out[blockIdx.x] = d_in[myId];
    }
}

void reduce(float* d_out, float* d_intermediate, float* d_in, int size, bool usesShareMemory)
{
    const int maxThreadsPerBlock = 1024;
    int threads = maxThreadsPerBlock;
    int blocks = size / maxThreadsPerBlock;
    // here, for each
    if (usesShareMemory)
    {
        share_reduce_kernel <<< blocks, threads, threads* sizeof(float) >>> (float* d_intermediate, float* d_in);
    }
    else
    {
        global_reduce_kernel << < blocks, threads >> > (float* d_intermediate, float* d_in);
    }
    threads = blocks;
    blocks = 1;
    if (usesShareMemory)
    {
        if (usesShareMemory)
        {
            share_reduce_kernel << < blocks, threads, threads * sizeof(float) >> > (float* d_intermediate, float* d_in);
        }
        else
        {
            global_reduce_kernel << < blocks, threads >> > (float* d_intermediate, float* d_in);
        }
    }
}

int main(int argc, char** argv)
{
    int deviceCount;
    cudaGetDeviceCount(&deviceCount);
    if (deviceCount == 0)
    {
        fprintf(stderr, "error: no device supporting\n");
        exit(EXIT_FAILURE);
    }
    int dev = 0;
    cudaSetDevice(dev);
    cudaDeviceProp devProps;
    if (cudaGetDeviceProperties(&devProps, dev) == 0)
    {
        printf("using device %d:\n,", dev);
        printf("%s: global memory: %dB; compute v%d.%d; clock: %d kHZ\n",
            devProps.name, (int)devProps.totalGlobalMem, (int)devProps.major, (int)devProps.major, (int)devProps.clockRate);
    }
    const int ARRAY_SIZE = 1 << 20;
    const int ARRAY_BITE = ARRAY_SIZE * sizeof(float);
    // create the array holding the input data on the CPU
    float h_in[ARRAY_SIZE];
    float sum = 0.0f;
    for (int i = 0; i < ARRAY_SIZE; i++)
    {
        h_in[i] = -1.0f + (float)random() / ((float)RAND_MAX / 2.0f);
        sum += h_in[i];
    }
    //declare GPU memory pointers
    float* d_in, *d_out, * d_intermediate;
    // create the arrays on the GPU for the input data and the results
    cudaMalloc((void**)&d_in, ARRAY_BITE);
    cudaMalloc((void**)&d_intermediate, ARRAY_BITE);
    cudaMalloc((void**)&d_out, sizeof(float));
    // copy the CPU input data to the GPU
    cudaMemcpy(d_in, h_in, ARRAY_BITE, cudaMemcpyHostToDevice);
    int whichKernel = 0;
    if (argc == 2)
    {
        whichKernel = atoi(argv[1]);
    }
}
```

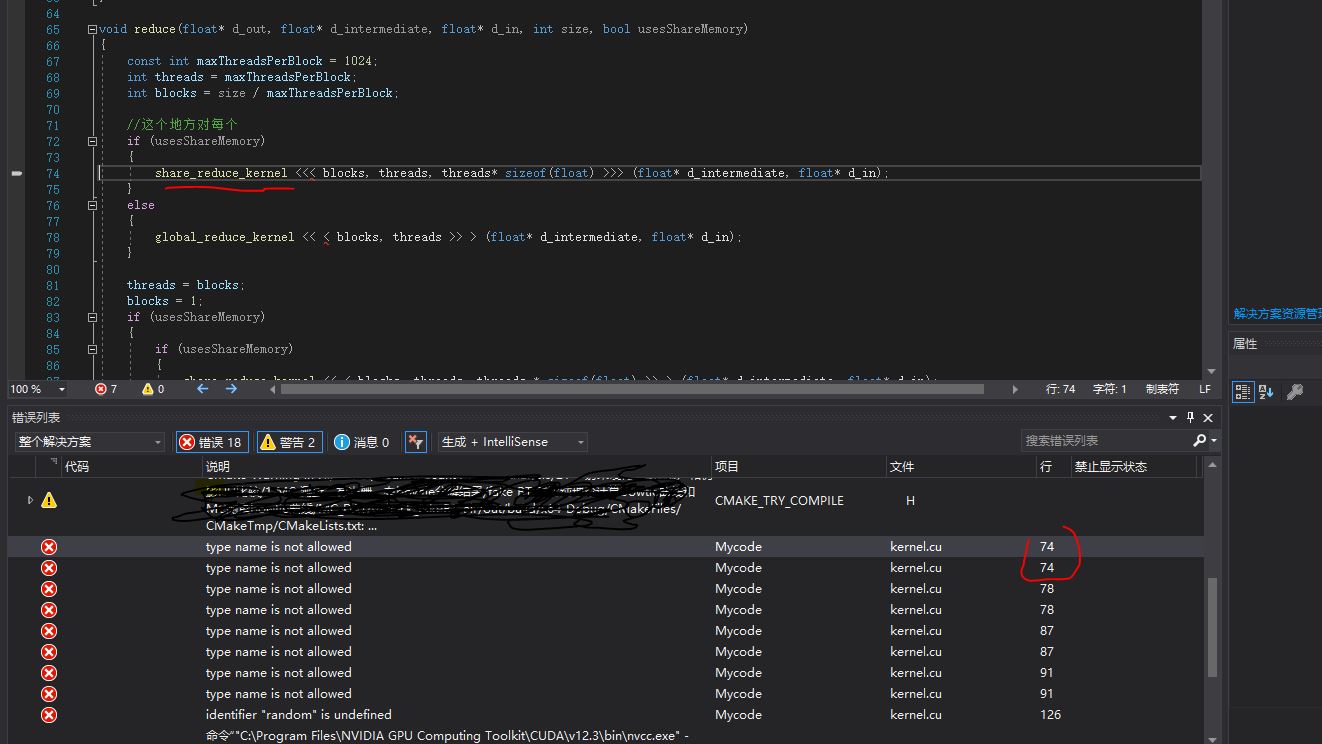

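Your code has a few syntax errors that stop it from compiling. It also has some logic errors that would give wrong results once it does build. I'll go through them one by one with corrected code.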

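First, `global_reduce_kernel` is correct as written and does not need to change. Each thread with `tid < s` adds `d_in[myId + s]` into `d_in[myId]`, and thread 0 writes the block's total to `d_out[blockIdx.x]`. Rewriting these lines as `*d_in += *d_in` or `*d_out = *d_in` would be wrong. The active threads would all read and write only `d_in[0]` (a data race), and every block would overwrite `d_out[0]`. Keep in mind that this version overwrites `d_in` in place, so the input has to be copied to the device again before each run. Here is the kernel with comments:

```cuda
// Reduction directly in global memory; overwrites d_in in place
__global__ void global_reduce_kernel(float* d_out, float* d_in)
{
    int myId = threadIdx.x + blockIdx.x * blockDim.x; // index among all threads
    int tid = threadIdx.x;                             // index within this block

    // Halve the active range each step; blockDim.x must be a power of two
    for (unsigned int s = blockDim.x / 2; s > 0; s >>= 1)
    {
        if (tid < s)
        {
            d_in[myId] += d_in[myId + s];
        }
        __syncthreads(); // every thread finishes this step before the next
    }

    // Thread 0 now holds this block's total
    if (tid == 0)
    {
        d_out[blockIdx.x] = d_in[myId];
    }
}
```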
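The following minimal CPU sketch shows why the in-place indexing works. It is written in plain C, because the host side of a CUDA program is ordinary C and this compiles without a GPU. The function `tree_reduce_block` is a hypothetical name used only for illustration. It replays the kernel's loop for a single block: the inner `for` over `tid` stands in for the block's threads, and the end of each outer iteration plays the role of `__syncthreads()`. Like the kernel, it assumes `block_dim` is a power of two.

```c
/* CPU replay of global_reduce_kernel for ONE block (hypothetical helper).
 * `block` points at the block's first element, so block[tid] corresponds
 * to d_in[myId] in the kernel. block_dim must be a power of two. */
float tree_reduce_block(float *block, unsigned int block_dim)
{
    for (unsigned int s = block_dim / 2; s > 0; s >>= 1) {
        /* One pass per "thread" with tid < s. Thread tid reads index
         * tid + s (>= s) and writes index tid (< s), so no two threads touch
         * the same element and running them one after another gives the
         * same result as running them in parallel. */
        for (unsigned int tid = 0; tid < s; tid++) {
            block[tid] += block[tid + s];  /* d_in[myId] += d_in[myId + s] */
        }
        /* __syncthreads() sits here in the kernel: the next, smaller step
         * must see every write from this one. */
    }
    return block[0];  /* the value thread 0 stores in d_out[blockIdx.x] */
}
```

Take `{1, 2, 3, 4, 5, 6, 7, 8}` with `block_dim = 8`. The first pass leaves `6 8 10 12` in the first four slots, the next leaves `16 20`, and the last leaves `36` in `block[0]`. As on the GPU, the input array is overwritten along the way.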

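Next, in `share_reduce_kernel` the shared-memory allocation is fine. `extern __shared__ float sdata[]` gets its size from the third launch parameter, `threads * sizeof(float)`, which `reduce` already passes. The real bug is that after the data is copied into `sdata`, the loop and the final write still use `d_in`, so the shared copy is never used. Do the additions on `sdata` and write `sdata[0]` to `d_out[blockIdx.x]`. Do not write it to `*d_out`, or every block would overwrite the same element. The corrected kernel:

```cuda
// Reduction in shared memory; d_in is only read
__global__ void share_reduce_kernel(float* d_out, float* d_in)
{
    extern __shared__ float sdata[]; // sized by the third <<<>>> parameter
    int myId = threadIdx.x + blockIdx.x * blockDim.x;
    int tid = threadIdx.x;

    // Copy this block's slice into shared memory once
    sdata[tid] = d_in[myId];
    __syncthreads();

    // Tree reduction entirely in shared memory
    for (unsigned int s = blockDim.x / 2; s > 0; s >>= 1)
    {
        if (tid < s)
        {
            sdata[tid] += sdata[tid + s];
        }
        __syncthreads();
    }

    // One partial sum per block
    if (tid == 0)
    {
        d_out[blockIdx.x] = sdata[0];
    }
}
```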
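The two kernels differ in where the additions happen. The plain-C sketch below mimics the corrected shared-memory data flow on the CPU. `shared_style_reduce` is a hypothetical helper, and it uses a fixed `BLOCK_DIM` of 1024, an assumption chosen to match `maxThreadsPerBlock`. Each block's slice is copied into a scratch array standing in for `sdata` and reduced there. The total is then written to `out[b]`, so the input is left untouched. The sketch models only the data movement, not the speed of real shared memory.

```c
#include <string.h>

#define BLOCK_DIM 1024 /* assumed block size, matching maxThreadsPerBlock */

/* CPU sketch of the corrected share_reduce_kernel (hypothetical helper).
 * n must be a multiple of BLOCK_DIM; out needs n / BLOCK_DIM elements. */
void shared_style_reduce(float *out, const float *in, int n)
{
    float sdata[BLOCK_DIM]; /* stands in for extern __shared__ float sdata[] */
    for (int b = 0; b < n / BLOCK_DIM; b++) {
        /* sdata[tid] = d_in[myId]; followed by __syncthreads() */
        memcpy(sdata, in + b * BLOCK_DIM, sizeof sdata);
        /* Same halving loop, but on the copy, never on `in` */
        for (unsigned int s = BLOCK_DIM / 2; s > 0; s >>= 1)
            for (unsigned int tid = 0; tid < s; tid++)
                sdata[tid] += sdata[tid + s];
        out[b] = sdata[0]; /* d_out[blockIdx.x] = sdata[0] */
    }
}
```

Because `in` is only read here, the kernel can be launched repeatedly on the same `d_in`. That is not true of `global_reduce_kernel`.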

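In `reduce`, the kernel launches don't compile. The parentheses after `<<<...>>>` take arguments, not declarations, so write `(d_intermediate, d_in)` rather than `(float* d_intermediate, float* d_in)`. There are two more problems. The second stage must read the per-block partial sums from `d_intermediate` and write the final result to `d_out`. Also, the duplicated `if (usesShareMemory)` around the second stage means the global-memory path never runs its second launch. The corrected function:

```cuda
// Two-stage reduction. Assumes size is a multiple of maxThreadsPerBlock and
// that size / maxThreadsPerBlock is a power of two no larger than 1024.
void reduce(float* d_out, float* d_intermediate, float* d_in, int size, bool usesShareMemory)
{
    const int maxThreadsPerBlock = 1024;
    int threads = maxThreadsPerBlock;
    int blocks = size / maxThreadsPerBlock;

    // Stage 1: each block writes its partial sum to d_intermediate[blockIdx.x]
    if (usesShareMemory)
    {
        share_reduce_kernel<<<blocks, threads, threads * sizeof(float)>>>(d_intermediate, d_in);
    }
    else
    {
        global_reduce_kernel<<<blocks, threads>>>(d_intermediate, d_in);
    }

    // Stage 2: a single block reduces the partial sums into d_out[0]
    threads = blocks;
    blocks = 1;
    if (usesShareMemory)
    {
        share_reduce_kernel<<<blocks, threads, threads * sizeof(float)>>>(d_out, d_intermediate);
    }
    else
    {
        global_reduce_kernel<<<blocks, threads>>>(d_out, d_intermediate);
    }
}
```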
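The corrected `reduce` still relies on a few assumptions about `size`, and the small C check below spells them out. `reduce_config_ok` is a hypothetical helper, not part of the original code. First, `size` must be a multiple of 1024, because the integer division `size / maxThreadsPerBlock` drops any remainder. Second, the second stage launches `blocks` threads in one block, so `blocks` must not exceed 1024. Third, the halving loop only adds every element when the thread count is a power of two.

```c
#include <stdbool.h>

/* Hypothetical helper: can reduce() as written handle `size` elements? */
bool reduce_config_ok(int size)
{
    const int maxThreadsPerBlock = 1024;
    if (size <= 0 || size % maxThreadsPerBlock != 0)
        return false;                 /* integer division would drop the tail */
    int blocks = size / maxThreadsPerBlock;
    if (blocks > maxThreadsPerBlock)
        return false;                 /* stage 2 would need > 1024 threads */
    return (blocks & (blocks - 1)) == 0; /* stage-2 thread count is a power of two */
}
```

With these rules, `1 << 20` passes: 1024 blocks in stage 1, then a single block of 1024 threads. `3 * 1024` fails. Stage 2 would run with 3 threads, so `s` starts at 1 and the third partial sum is never added.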

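Finally, `ARRAY_SIZE` in `main` already has a value, `1 << 20`, and that value should stay. With `1000`, `size / maxThreadsPerBlock` is 0, so `reduce` would try to launch zero blocks, which fails. The real problems in `main` are elsewhere:

1. `float h_in[ARRAY_SIZE]` is a 4 MB local array, which overflows the default 1 MB stack on Windows. Allocate it with `malloc` instead.
2. `random()` is a POSIX function that MSVC does not provide. Use `rand()` instead.
3. The device-info `printf` has three errors. It prints `major` twice (the second should be `minor`), it has a stray comma after `\n`, and it casts `totalGlobalMem` (a `size_t`) to `int`, which does not fit on GPUs with 2 GiB or more. Print that value with `%zu` instead.
4. `main` never calls `reduce`, copies the result back, frees its buffers, or returns.

The relevant parts, corrected (assuming `whichKernel == 1` selects the shared-memory kernel):

```cuda
int main(int argc, char** argv)
{
    // ...
    const int ARRAY_SIZE = 1 << 20;                        // keep: a multiple of 1024
    const size_t ARRAY_BYTES = ARRAY_SIZE * sizeof(float); // was ARRAY_BITE
    float* h_in = (float*)malloc(ARRAY_BYTES);             // heap instead of a 4 MB stack array
    // ... fill h_in using rand(), cudaMalloc, cudaMemcpy to d_in, parse whichKernel ...
    reduce(d_out, d_intermediate, d_in, ARRAY_SIZE, whichKernel == 1);
    float h_out;
    cudaMemcpy(&h_out, d_out, sizeof(float), cudaMemcpyDeviceToHost); // waits for the kernels
    // ... print h_out next to sum, then free(h_in) and cudaFree each device buffer ...
    return 0;
}
```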
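Here is a minimal C sketch of the two portable fixes for the host-side input: allocate the buffer with `malloc` rather than as a local array, and use the standard `rand()` in place of POSIX `random()`. `make_input` and `sums_match` are hypothetical helpers. The tolerance passed to `sums_match` is an arbitrary choice, not a value from the original code. The comparison needs some tolerance because the GPU adds the numbers in tree order while the CPU loop adds them left to right, so the two float results usually differ slightly.

```c
#include <stdlib.h>

/* Hypothetical helper: heap-allocates n floats in [-1, 1] using rand()
 * and stores their sequential (left-to-right) sum in *sum_out.
 * Returns NULL if malloc fails; the caller must free() the result. */
float *make_input(int n, float *sum_out)
{
    float *h_in = (float *)malloc((size_t)n * sizeof(float));
    if (h_in == NULL)
        return NULL;
    float sum = 0.0f;
    for (int i = 0; i < n; i++) {
        h_in[i] = -1.0f + (float)rand() / ((float)RAND_MAX / 2.0f);
        sum += h_in[i];
    }
    *sum_out = sum;
    return h_in;
}

/* Hypothetical helper: relative comparison of the GPU and CPU totals. */
int sums_match(float gpu, float cpu, float rel_tol)
{
    float diff = gpu > cpu ? gpu - cpu : cpu - gpu;
    float scale = cpu < 0.0f ? -cpu : cpu;
    if (scale < 1.0f)
        scale = 1.0f; /* avoid a near-zero tolerance when the sum is ~0 */
    return diff <= rel_tol * scale;
}
```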

I hope these fixes resolve the problem. Feel free to ask if anything else comes up!

The reply above was generated by the FishC AI assistant. If it did not answer your question correctly, please ask a follow-up.


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
