
15 fish coins

This post was last edited by xiaochuan8264 on 2020-2-26 13:03

- import requests as r

- from bs4 import BeautifulSoup as bf

- import re

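- def getDetails(IpTag):

-     # Build a {protocol: proxy URL} dict from one table row; return None if a field is missing.

-     try:

-         ip = IpTag.find(text=re.compile(r'\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}'))

-         # The port cell is assumed to contain digits only.

-         port = IpTag.find(text=re.compile(r'^\d+$'))

-         protocol = IpTag.find(text=re.compile('(HTTP)|(HTTPS)')).lower()

-         finalIp = {protocol: protocol + '://' + ip + ':' + port}

-     except (TypeError, AttributeError):

-         # find() returned None for at least one field.

-         return None

-     return finalIp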

- def getIpList(bfObject):

-     targets = bfObject.find_all(name='tr', class_=True)

-     # The first class-less row is assumed to be the table header, so skip it.

-     targets2 = bfObject.find_all(name='tr', class_='')[1:]

-     targets.extend(targets2)

-     ipList = []

-     try:

-         count = 0

-         for index, each in enumerate(targets, start=1):

-             ip = getDetails(each)

-             if ip is None:

-                 print('Failed to parse item %d' % index)

-                 continue

-             count += 1

-             ipList.append(ip)

-     except Exception:

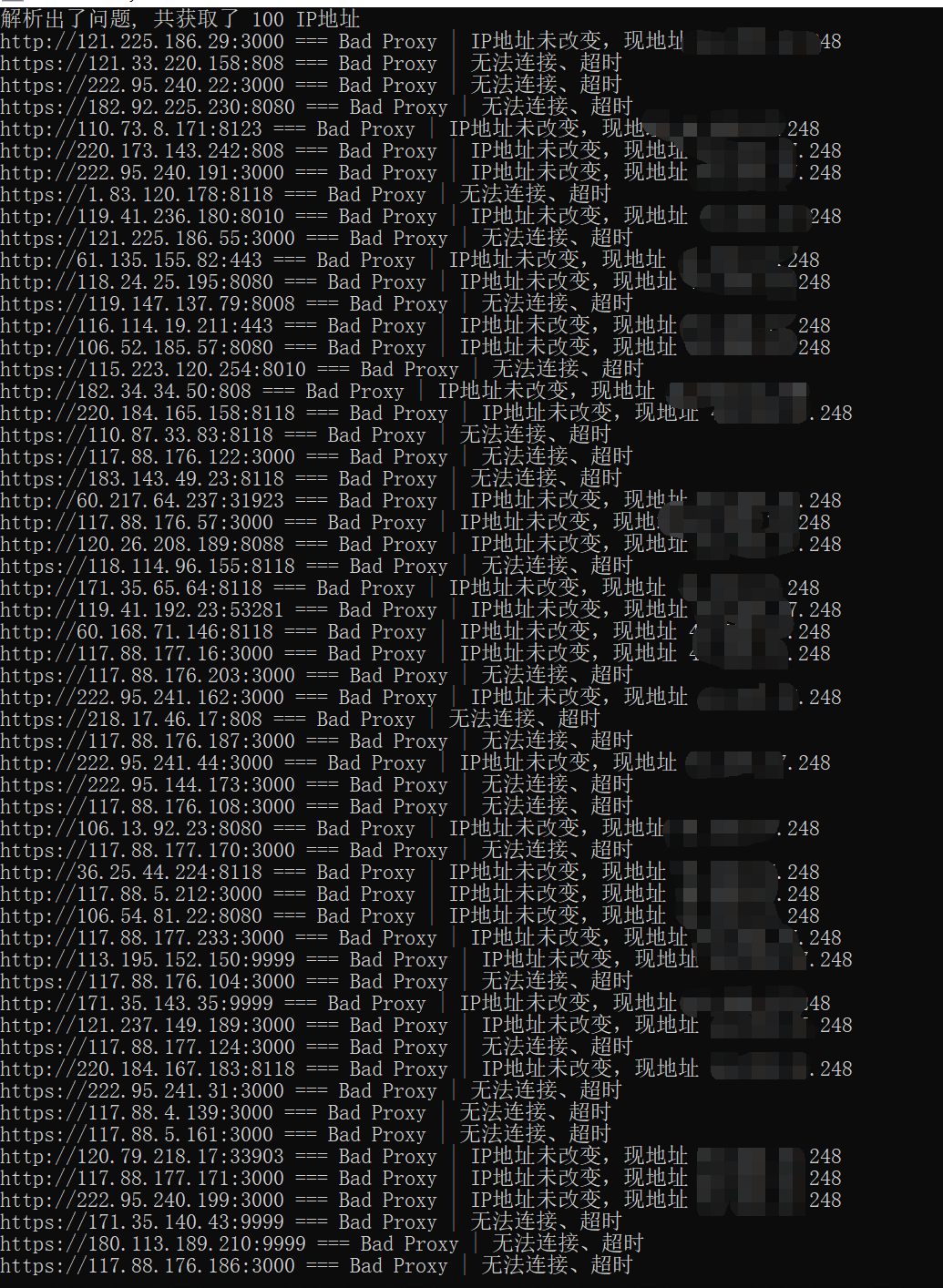

-         print('Parsing failed; collected %d IP addresses' % count)

-     return ipList

- def ipAnalyze(ipDict):

-     # Return the proxy URL stored under 'http', falling back to 'https'.

-     ip = ipDict.get('http')

-     if ip is not None:

-         return ip

-     else:

-         ip = ipDict.get('https')

-         return ip

- def testIp(ip):

-     testUrl = 'https://www.whatismyip.com.tw/'

-     header = {'user-agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36'}

-     temp = ipAnalyze(ip)

-     try:

-         # requests picks the proxy by the scheme of testUrl, so for this https URL only an 'https' entry in ip is used.

-         web = r.get(url=testUrl, headers=header, proxies=ip, timeout=5)

-     except (r.exceptions.ProxyError, r.exceptions.Timeout):

-         print('%s === Bad Proxy | cannot connect or timed out' % temp)

-     except KeyboardInterrupt:

-         raise

-     except Exception:

-         print('%s === Bad Proxy | other error' % temp)

-     else:

-         soup = bf(web.text, 'lxml')

-         result = soup.find(name='b', style=True).span['data-ip']

-         # Compare only the host part of the proxy URL (without the port) to the reported address.

-         if temp.split('//')[1].split(':')[0] != result:

-             print('%s === Bad Proxy | IP address unchanged, current address %s' % (temp, result))

-         else:

-             print('\n%s === Functional Proxy\n' % temp)

- proxyProvider = 'https://www.xicidaili.com/nn/'

- header = {'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36'}

- web = r.get(url=proxyProvider, headers=header, timeout=20)

- webSoup = bf(web.text, 'lxml')

- ipAcquired = getIpList(webSoup)

- for each in ipAcquired:

-     testIp(each)
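
One thing that may explain "IP address unchanged" results: requests selects a proxy by the scheme of the URL being fetched, so a proxies dict holding only an 'http' key is not used for an https:// test URL. Below is a minimal sketch of one way around that, assuming the proxy itself is reached over plain HTTP for both schemes (an assumption, not something the listing confirms). `build_proxies` and `proxy_host` are hypothetical helper names, and 203.0.113.7 is a documentation-only address.

```python
from urllib.parse import urlsplit


def build_proxies(ip, port):
    """Return a requests-style proxies mapping covering both URL schemes.

    Assumption: the proxy is contacted over plain HTTP in both cases.
    """
    proxy_url = 'http://%s:%s' % (ip, port)
    return {'http': proxy_url, 'https': proxy_url}


def proxy_host(proxy_url):
    """Return only the host part of a proxy URL, without scheme or port."""
    return urlsplit(proxy_url).hostname


# Example with a placeholder address (not a real proxy).
proxies = build_proxies('203.0.113.7', '8080')
print(proxies)
print(proxy_host(proxies['https']))
```

The mapping returned by `build_proxies` could be passed as `proxies=` to `r.get`, and `proxy_host` gives the value to compare against the address reported by the test page.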


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
