
This post was last edited by 2434849827 on 2020-3-21 09:51

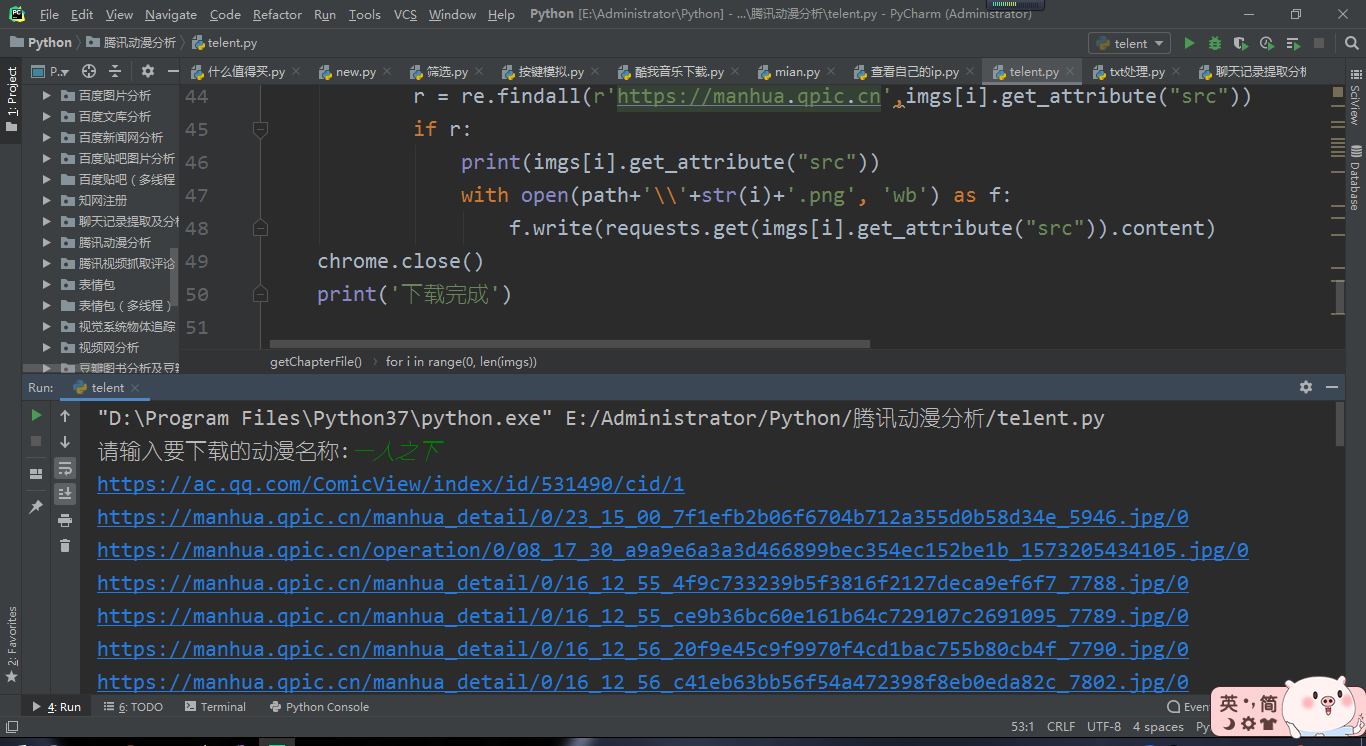

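I spent the whole morning trying to scrape Tencent Comics (腾讯动漫). Sites from big companies are harder to scrape, with encryption everywhere, and after a morning of packet capture I still had not found the JS file I needed. After digging through references for a while, I found it is simpler to use selenium, which simulates a browser, to locate the comic image URLs directly and then download them with requests. The code is short but touches on quite a few concepts, so I am sharing it here for reference.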
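The second half of that approach, deciding which `src` values are real comic pages and where each one should be saved, can be looked at without a browser. The sketch below is only an illustration: `plan_downloads` is a hypothetical helper, the sample URLs are made up, and the only detail taken from the post is the image host `manhua.qpic.cn` that the script filters on.

```python
import os
from urllib.parse import urlparse

# Host taken from the filter used in the post; everything else is illustrative.
IMAGE_HOST = "manhua.qpic.cn"


def plan_downloads(srcs, chapter_dir):
    """Return (url, filename) pairs for the src values served by the image host."""
    plan = []
    for index, src in enumerate(srcs):
        if not src:
            continue  # <img> element without a src attribute
        if urlparse(src).hostname != IMAGE_HOST:
            continue  # placeholder or unrelated image
        # Zero-padded names keep the pages in reading order when sorted.
        plan.append((src, os.path.join(chapter_dir, f"{index:03d}.png")))
    return plan


# Made-up src values standing in for what a browser session might report.
sample = [
    "https://manhua.qpic.cn/page-a.jpg",
    "https://ac.qq.com/static/loading.gif",
    None,
    "https://manhua.qpic.cn/page-b.jpg",
]
for url, filename in plan_downloads(sample, "chapter-1"):
    print(filename, "<-", url)
```

Comparing the parsed hostname is a bit stricter than searching for the host string anywhere in the URL, which is what the script does. Either works as long as the page images keep coming from that host.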

```python
import os
import re
import time

import requests
from selenium import webdriver
from selenium.webdriver.common.by import By

HEADERS = {"User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36"}


def getChapterUrl():
    name = input('Enter the name of the comic to download: ')
    # Search for the comic; requests URL-encodes the query string.
    search = requests.get('https://ac.qq.com/Comic/searchList', params={'search': name}, headers=HEADERS)
    ids = re.findall(r'<a href="/Comic/comicInfo/id/(\d{4,})" title', search.text)
    if not ids:
        print('No matching comic found')
        return
    # Take the first search hit and load its info page.
    res = requests.get(f'https://ac.qq.com/Comic/comicInfo/id/{ids[0]}', headers=HEADERS)
    # Comic name, chapter links, and chapter titles, scraped as three parallel lists.
    comic_names = re.findall(r'title="(.*?):\d{1,5}..*"', res.text)
    links = re.findall(r'href="(/ComicView/index/id/.*/cid/.*?)"', res.text)
    chapter_names = re.findall(r'title=".*?:\d{1,5}.([\u4e00-\u9fa5].*?)"', res.text)
    # print(chapter_names)
    # The original drops the last matched link before pairing.
    links = links[:-1]
    # zip stops at the shortest list instead of raising IndexError.
    for link, comic, chapter in zip(links, comic_names, chapter_names):
        getChapterFile('https://ac.qq.com' + link, comic, chapter)


def getChapterFile(url, path1, path2):
    print(url)
    # One directory per comic, one subdirectory per chapter.
    path = os.path.join(path1, path2)
    os.makedirs(path, exist_ok=True)
    chrome = webdriver.Chrome()
    try:
        chrome.get(url)
        time.sleep(4)  # give the reader page time to render
        imgs = chrome.find_elements(By.XPATH, "//div[@id='mainView']/ul[@id='comicContain']//img")
        for i, img in enumerate(imgs):
            # Scroll the reader down 1280 px per image before reading its src.
            chrome.execute_script(f"document.getElementById('mainView').scrollTop={i * 1280}")
            time.sleep(3)
            src = img.get_attribute('src')
            # Keep only images hosted on manhua.qpic.cn.
            if src and 'https://manhua.qpic.cn' in src:
                print(src)
                with open(os.path.join(path, f'{i}.png'), 'wb') as f:
                    f.write(requests.get(src).content)
    finally:
        # Shut the browser down even if a download fails.
        chrome.quit()
    print('Download complete')


if __name__ == '__main__':
    getChapterUrl()
```
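One thing to watch with the script is that scraped titles are used directly as directory names. Titles can contain characters such as `?` or `"` that Windows does not accept in names, and directory creation would then fail. A minimal sketch of one way to guard against that follows; `safe_name` and `chapter_dir` are hypothetical helpers, not part of the original post, and the sample titles are made up.

```python
import os
import re
import tempfile

# Characters Windows rejects in file and directory names.
INVALID_CHARS = r'[\\/:*?"<>|]'


def safe_name(title, fallback="untitled"):
    """Replace characters that are invalid in Windows names and trim the ends."""
    cleaned = re.sub(INVALID_CHARS, "_", title).strip(" .")
    return cleaned or fallback


def chapter_dir(root, comic, chapter):
    """Create root/comic/chapter if it does not exist and return its path."""
    path = os.path.join(root, safe_name(comic), safe_name(chapter))
    os.makedirs(path, exist_ok=True)
    return path


# Try it in a throwaway directory so nothing is left behind.
with tempfile.TemporaryDirectory() as root:
    path = chapter_dir(root, "Some Comic", 'Chapter 1: "Who?"')
    print(os.path.relpath(path, root))
```

Replacing rather than dropping the invalid characters keeps distinct titles more likely to stay distinct, at the cost of slightly odd-looking folder names.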


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
