Original poster, posted on 2020-5-22 23:16:02


Last edited by Cminglyer on 2020-5-23 12:24

Replying to my own post:

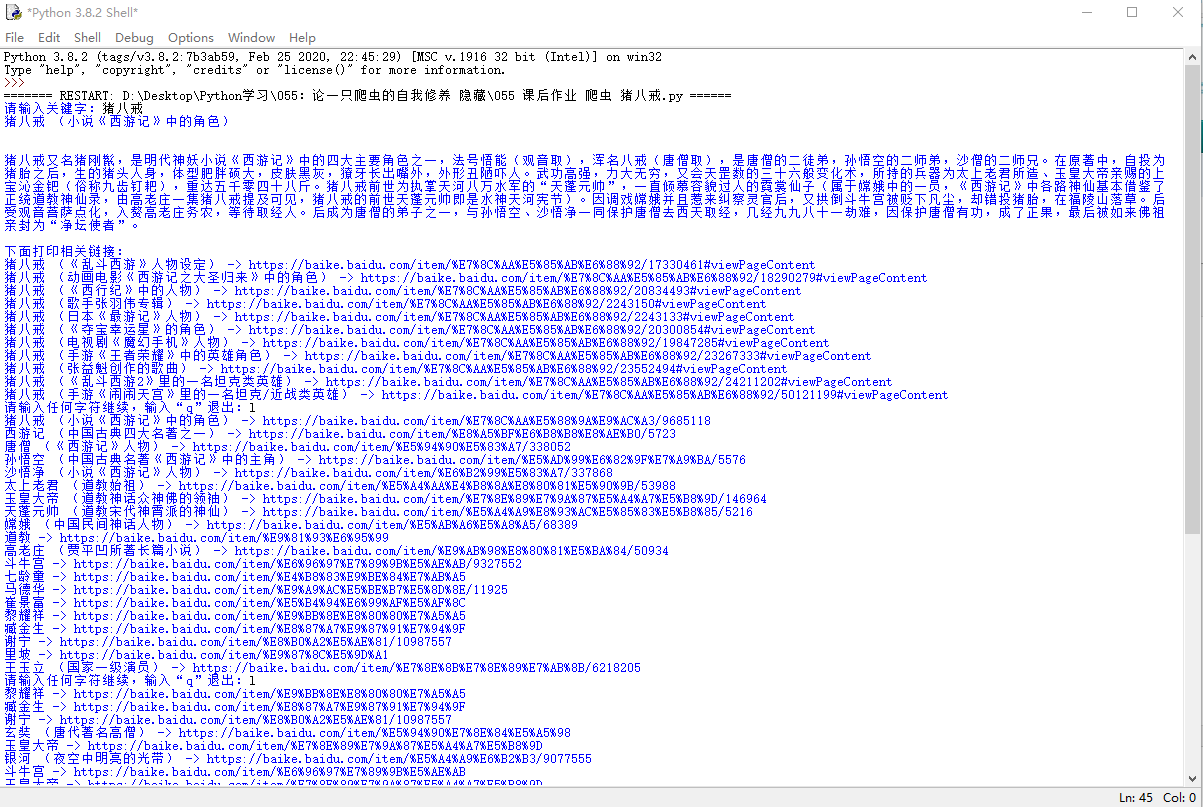

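I figured out yesterday's problem this evening. This is the homework for lesson 055. The reference answer's code also fails to implement some of the required features, so I wrote my own version below. It's a bit messy, but it basically works: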

```python
import urllib.parse
import urllib.request

from bs4 import BeautifulSoup

BASE_URL = "https://baike.baidu.com"
# These class names match Baidu Baike's page layout as of 2020; the site may have changed since
TITLE_CLASS = "lemmaWgt-lemmaTitle-title"

keyword = input("Enter a keyword: ")
# quote() percent-encodes the keyword so Chinese characters are valid in the URL
url = "{}/item/{}".format(BASE_URL, urllib.parse.quote(keyword))
html = urllib.request.urlopen(url).read().decode("utf-8")
soup = BeautifulSoup(html, "html.parser")

title_block = soup.find("dd", class_=TITLE_CLASS)
if title_block is None:
    raise SystemExit("No entry found for: " + keyword)
title1 = title_block.find("h1").get_text(strip=True)
h2 = title_block.find("h2")  # not every entry has a subtitle
title2 = h2.get_text(strip=True) if h2 else ""
summary = soup.find("div", class_="lemma-summary")

print(title1, title2)
print("\n", summary.get_text() if summary else "")
print("Related links:")

# Match both containers (the disambiguation list and the main content).
# find_all returns one Tag per container found, so there may be zero, one or two.
target_class = soup.find_all(True, {"class": ["polysemantList-wrapper cmn-clearfix", "main-content"]})

# Flatten the <a href=...> tags of every matched container into one list,
# so a page that has only one of the two containers does not raise IndexError
target_url = []
for each_class in target_class:
    target_url.extend(each_class.find_all("a", href=True))

count = 0   # links printed since the last pause
seen = []   # (title, subtitle) pairs already printed, used to skip duplicates
for each in target_url:
    # urljoin handles relative hrefs ("/item/...") as well as absolute ones
    link = urllib.parse.urljoin(BASE_URL, each["href"])
    try:
        html2 = urllib.request.urlopen(link).read().decode("utf-8")
        block = BeautifulSoup(html2, "html.parser").find("dd", class_=TITLE_CLASS)
        title_a = block.find("h1").get_text(strip=True)
    except Exception:
        continue  # unreachable link or not an entry page: skip it
    h2 = block.find("h2")
    title_b = h2.get_text(strip=True) if h2 else ""
    if (title_a, title_b) in seen:
        continue
    seen.append((title_a, title_b))
    print(title_a, title_b, "->", link)

    count += 1
    if count >= 10:  # pause after every 10 printed links
        count = 0
        if input('Enter any character to continue, or "q" to quit: ') == "q":
            break
```
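The two URL steps in the script can be tried on their own, without any network access. Below is a minimal stdlib-only sketch, assuming entry pages live under `/item/`: `entry_url` percent-encodes a keyword with `urllib.parse.quote` to build the first request URL, and `resolve_links` turns raw `href` values into absolute URLs with `urllib.parse.urljoin`. Unlike gluing the href onto the domain with a string join, `urljoin` also copes with links that are already absolute. Both function names, the `/item/` filter and the URL-based deduplication are my own illustrative choices, not part of the homework answer.

```python
from urllib.parse import quote, urljoin

BASE_URL = "https://baike.baidu.com"


def entry_url(keyword):
    """Build an entry URL; quote() percent-encodes the keyword's UTF-8 bytes."""
    return "{}/item/{}".format(BASE_URL, quote(keyword))


def resolve_links(hrefs):
    """Make hrefs absolute, keep only entry links, and drop duplicates in order."""
    seen = set()
    result = []
    for href in hrefs:
        url = urljoin(BASE_URL, href)  # relative "/item/..." or absolute URLs both work
        # Assumption (hypothetical filter): only /item/ links are entry pages,
        # so "javascript:;" and "#anchor" links are skipped here
        if not url.startswith(BASE_URL + "/item/") or url in seen:
            continue
        seen.add(url)
        result.append(url)
    return result


if __name__ == "__main__":
    print(entry_url("猫"))
    print(resolve_links(["/item/%E7%8C%AB", "javascript:;", "#top", "/item/abc"]))
```

For example, `entry_url("猫")` gives `https://baike.baidu.com/item/%E7%8C%AB`, because 猫 is encoded as the three UTF-8 bytes E7 8C AB.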


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
