
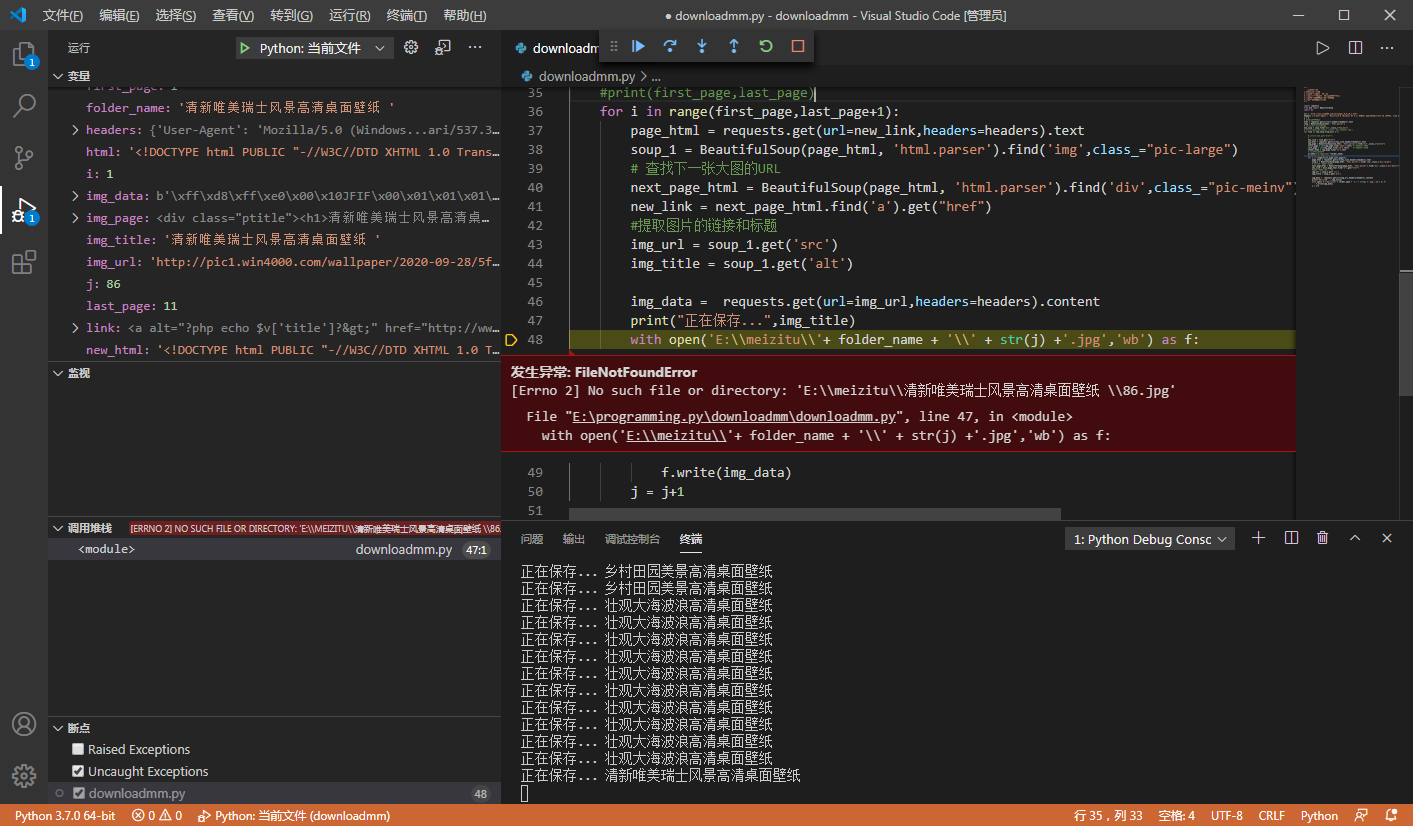

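While scraping wallpaper images from the 美桌 (win4000.com) site, the script threw an error about halfway through the run:

This path error is strange: I definitely created this folder on my E: drive... Could someone point me in the right direction?

This is the URL: http://www.win4000.com/wallpaper_0_0_10_1.html

Here is my code: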

```python
import requests
from bs4 import BeautifulSoup
import os

url = 'http://www.win4000.com/wallpaper_0_0_10_1.html'
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36'}
j = 0

# Fetch the page source
html = requests.get(url=url, headers=headers).text
soup = BeautifulSoup(html, 'html.parser')

# Find the links behind the thumbnails
new_soup = soup.find('div', class_="tab_box")
# print(type(new_soup))  # <class 'bs4.element.Tag'>

for link in new_soup.find_all('a'):
    # print(link.get("href"))
    new_link = link.get("href")
    new_html = requests.get(url=new_link, headers=headers).text
    img_page = BeautifulSoup(new_html, 'html.parser').find('div', class_="ptitle")
    first_page = int(img_page.find('span').text)  # first page number of the gallery
    last_page = int(img_page.find('em').text)     # last page number of the gallery
    folder_name = img_page.find('h1').text

    # Create a folder for this category
    os.mkdir('e:/meizitu/' + folder_name)
    # print(first_page, last_page)

    for i in range(first_page, last_page + 1):
        page_html = requests.get(url=new_link, headers=headers).text
        soup_1 = BeautifulSoup(page_html, 'html.parser').find('img', class_="pic-large")

        # Find the URL of the next large image
        next_page_html = BeautifulSoup(page_html, 'html.parser').find('div', class_="pic-meinv")
        new_link = next_page_html.find('a').get("href")

        # Extract the image URL and title
        img_url = soup_1.get('src')
        img_title = soup_1.get('alt')
        img_data = requests.get(url=img_url, headers=headers).content
        print("Saving...", img_title)
        with open('E:\\meizitu\\' + folder_name + '\\' + str(j) + '.jpg', 'wb') as f:
            f.write(img_data)
        j = j + 1
```
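A quick way to check why a path "looks right" but still fails is to print the `repr()` of the scraped folder name: stray whitespace such as a trailing space or newline is invisible in a normal `print()` but shows up in the repr. The sample string below is made up for illustration; what the site's `<h1>` text actually contains is an assumption here.

```python
# Made-up example of what img_page.find('h1').text *might* return;
# the real page text may differ.
folder_name = "\n  Scenery wallpapers  \n"

print("plain:", folder_name)        # extra whitespace is easy to miss
print("repr: ", repr(folder_name))  # repr() shows '\n' and the spaces explicitly

cleaned = folder_name.strip()
print("repr after strip():", repr(cleaned))

# The two paths differ even though they look almost identical when printed.
raw_path = 'E:\\meizitu\\' + folder_name + '\\0.jpg'
clean_path = 'E:\\meizitu\\' + cleaned + '\\0.jpg'
print(raw_path == clean_path)
```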

The folder name (the `<h1>` text) may contain leading or trailing whitespace; try stripping it:

```python
folder_name = img_page.find('h1').text.strip()
```
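If scraped titles keep causing path problems, a slightly more defensive approach is to clean the name once and build every path from it. The sketch below assumes Windows naming rules; `safe_folder_name` and `image_path` are hypothetical helpers, not part of the original script, and they do not handle reserved names such as `CON`. It uses `pathlib` instead of string concatenation, and `mkdir(parents=True, exist_ok=True)` so that re-running the script does not fail on folders that already exist (plain `os.mkdir` raises `FileExistsError` in that case).

```python
import re
from pathlib import Path

# Characters that Windows does not allow in file or folder names,
# plus ASCII control characters (e.g. '\n', '\t').
INVALID_CHARS = r'[<>:"/\\|?*\x00-\x1f]'


def safe_folder_name(raw: str) -> str:
    """Hypothetical helper: turn scraped title text into a usable folder name."""
    name = raw.strip()                       # drop leading/trailing spaces and newlines
    name = re.sub(INVALID_CHARS, "_", name)  # replace characters Windows rejects
    name = name.rstrip(" .")                 # Windows advises against names ending in a space or dot
    return name or "untitled"                # fall back if nothing is left


def image_path(base: Path, raw_folder: str, index: int) -> Path:
    """Hypothetical helper: build base/<clean folder>/<index>.jpg (no disk access)."""
    return base / safe_folder_name(raw_folder) / f"{index}.jpg"


# Possible use inside the original inner loop (names taken from the question's code):
# path = image_path(Path("E:/meizitu"), img_page.find('h1').text, j)
# path.parent.mkdir(parents=True, exist_ok=True)  # no error if the folder already exists
# path.write_bytes(img_data)
```

BeautifulSoup's `get_text(strip=True)` is another way to trim the title text before it reaches the path.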

Apart from changing the path, I left the rest of the code untouched, and it runs fine.


(Guangdong ICP filing 粤ICP备18085999号-1 | Guangdong public security filing 粤公网安备 44051102000585号)
