
This post was last edited by payton24 on 2018-2-3 23:33

Two approaches to scraping dynamic web pages:

1. Find the real request URL and fetch it directly

2. Use selenium to simulate a browser

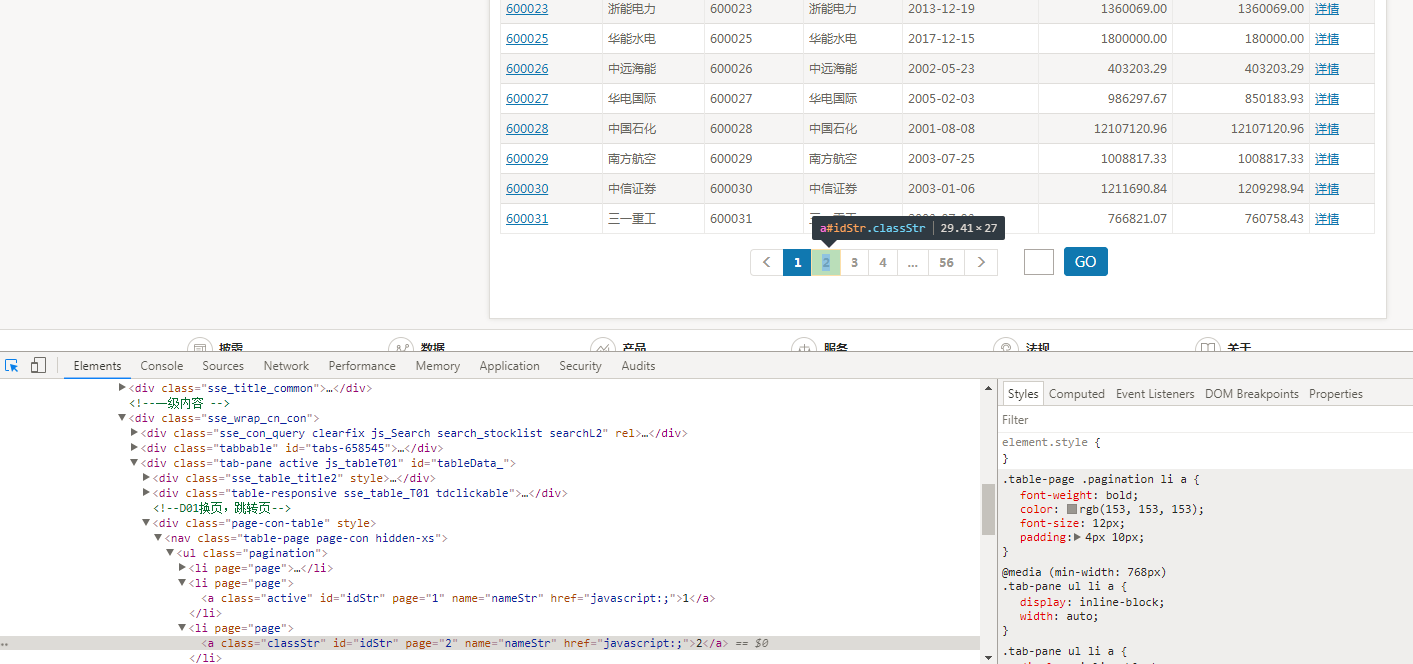

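Let's start with the first approach. The main steps are: open "Inspect" → the "Network" tab → click on or refresh the page → read the requests that show up in Network.

This process is commonly called "packet capture" (抓包).

The Shanghai Stock Exchange is used as the example here.

URL: http://www.sse.com.cn/assortment/stock/list/share/

The exchange loads each page of the stock list dynamically, so the link for each page cannot be found directly in the Elements tab.

That is why the capture steps above are needed to get the link.

The site also has anti-scraping measures, so quite a few headers have to be added before the data comes back.

The code below sends the complete set of headers. After running it many times, I found that this site needs at least the User-Agent and Referer headers.

Here we fetch page 2.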
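The trial-and-error used to find the minimum header set can be organized a little. The following is only a sketch: the helper names `without_header` and `single_removals` are made up for illustration, and the sample header values are placeholders. It produces one reduced header dict per removed header, so each variant can be sent in turn to see which removals break the request.

```python
def without_header(headers, name):
    """Return a copy of headers with `name` removed (case-insensitive match)."""
    return {k: v for k, v in headers.items() if k.lower() != name.lower()}


def single_removals(headers):
    """Yield (removed_name, reduced_headers) for each header in turn."""
    for name in list(headers):
        yield name, without_header(headers, name)


if __name__ == "__main__":
    # Placeholder values; substitute the real headers captured from the browser.
    sample = {
        "User-Agent": "Mozilla/5.0",
        "Referer": "http://www.sse.com.cn/assortment/stock/list/share/",
        "Accept": "*/*",
    }
    for removed, reduced in single_removals(sample):
        # In a real run, each variant would be passed to
        # requests.get(url, headers=reduced) and the response inspected.
        print("without", removed, "->", sorted(reduced))
```

Sending each reduced dict and comparing the responses shows which headers the server actually checks; per the observation above, removing User-Agent or Referer is expected to fail.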

- import requests

- Cookie = "PHPStat_First_Time_10000011=1480428327337; PHPStat_Cookie_Global_User_Id=_ck16112922052713449617789740328; PHPStat_Return_Time_10000011=1480428327337; PHPStat_Main_Website_10000011=_ck16112922052713449617789740328%7C10000011%7C%7C%7C; VISITED_COMPANY_CODE=%5B%22600064%22%5D; VISITED_STOCK_CODE=%5B%22600064%22%5D; seecookie=%5B600064%5D%3A%u5357%u4EAC%u9AD8%u79D1; _trs_uv=ke6m_532_iw3ksw7h; VISITED_MENU=%5B%228451%22%2C%229055%22%2C%229062%22%2C%229729%22%2C%228528%22%5D"

- url = "http://query.sse.com.cn/security/stock/getStockListData2.do?&jsonCallBack=jsonpCallback37110&isPagination=true&stockCode=&csrcCode=&areaName=&stockType=1&pageHelp.cacheSize=1&pageHelp.beginPage=2&pageHelp.pageSize=25&pageHelp.pageNo=2&pageHelp.endPage=21&_=1516260113276"

- headers = {

-     'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.84 Safari/537.36',

-     'Cookie': Cookie,

-     'Connection': 'keep-alive',

-     'Accept': '*/*',

-     'Accept-Encoding': 'gzip, deflate',

-     'Accept-Language': 'zh-CN,zh;q=0.9',

-     'Host': 'query.sse.com.cn',

-     'Referer': 'http://www.sse.com.cn/assortment/stock/list/share/'

- }

- # Send the request with the full header set and print the raw response body

- response = requests.get(url, headers=headers)

- print(response.text)

At this point response.text holds JSON-like data: a JSON object wrapped in a call to the callback function (JSONP).

- jsonpCallback37110({"areaName":"","csrcCode":"","downloadFileName":null,"execlStream":null,"jsonCallBack":"jsonpCallback37110","pageHelp":{"beginPage":2,"cacheSize":1,"data":[{"NUM":"26","totalFlowShares":"274440.00","endDate":"2018-01-17","LISTING_DATE":"2001-02-09","SECURITY_CODE_A":"600033","COMPANY_CODE":"600033","SECURITY_CODE_B":"-","END_SHARE_CODE":"-","SECURITY_ABBR_A":"福建高速","COMPANY_ABBR":"福建高速","SECURITY_ABBR_B":"-","END_SHARE_MAIN_DEPART":"-","totalShares":"274440.00","END_SHARE_VICE_DEPART":"-","CHANGE_DATE":"-"},{"NUM":"27","totalFlowShares":"145337.79","endDate":"2018-01-17","LISTING_DATE":"2004-03-10","SECURITY_CODE_A":"600035","COMPANY_CODE":"600035","SECURITY_CODE_B":"-","END_SHARE_CODE":"-","SECURITY_ABBR_A":"楚天高速","COMPANY_ABBR":"楚天高速","SECURITY_ABBR_B":"-","END_SHARE_MAIN_DEPART":"-","totalShares":"173079.59","END_SHARE_VICE_DEPART":"-","CHANGE_DATE":"-"}..})

I haven't yet found a simpler way to convert this directly into JSON, so for now let's extract it with a regular expression.

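- import json

- import re

- # Capture everything between the callback's parentheses (raw string so \( and \) are regex escapes)

- dict_data = re.search(r'jsonpCallback37110\((.*)\)', response.text, re.DOTALL).group(1)

- print(dict_data)

- json_data = json.loads(dict_data)

- data_list = json_data["pageHelp"]["data"]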

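Found an alternative: slicing with the string find methods is enough. I should have had my basics down. Take everything between the first "(" and the last ")" so the result is a complete JSON object.

- dict_data = response.text[response.text.find("(") + 1:response.text.rfind(")")]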
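Both extractions above can be wrapped in a small helper that does not hard-code the callback name, which is simply whatever was sent in the `jsonCallBack` query parameter. This is a minimal sketch; the function name `parse_jsonp` is made up here, and it assumes the response is a single `callback(...)` call, optionally followed by a semicolon.

```python
import json
import re

# callbackName( ...payload... ) with optional trailing semicolon and whitespace
_JSONP_RE = re.compile(r'^\s*[\w.$]+\s*\((.*)\)\s*;?\s*$', re.DOTALL)


def parse_jsonp(text):
    """Strip the JSONP wrapper from `text` and return the parsed JSON payload."""
    match = _JSONP_RE.match(text)
    if match is None:
        raise ValueError("response does not look like JSONP")
    return json.loads(match.group(1))


if __name__ == "__main__":
    # Shortened stand-in for response.text
    sample = 'jsonpCallback37110({"pageHelp":{"data":[{"NUM":"26"}]}})'
    print(parse_jsonp(sample)["pageHelp"]["data"])
```

With this helper, `data_list = parse_jsonp(response.text)["pageHelp"]["data"]` would replace the regex and slicing steps.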

Once you have the data list, pulling out the individual fields is easy.

- for i in data_list:

-     CODE = i['COMPANY_CODE']

-     COMPANY = i['COMPANY_ABBR']

-     LDATE = i['LISTING_DATE']

-     TSHARE = i['totalShares']

-     TFSHARE = i['totalFlowShares']

-     # One tab-separated row per company

-     print(CODE, COMPANY, LDATE, TSHARE, TFSHARE, sep='\t')

The printed output is:

- 600033 福建高速 2001-02-09 274440.00 274440.00

- 600035 楚天高速 2004-03-10 173079.59 145337.79

- 600036 招商银行 2002-04-09 2521984.56 2521984.56

- 600037 歌华有线 2001-02-08 139177.79 116835.20

- 600038 中直股份 2000-12-18 58947.67 58947.67

- 600039 四川路桥 2003-03-25 361052.55 301973.27

- ..

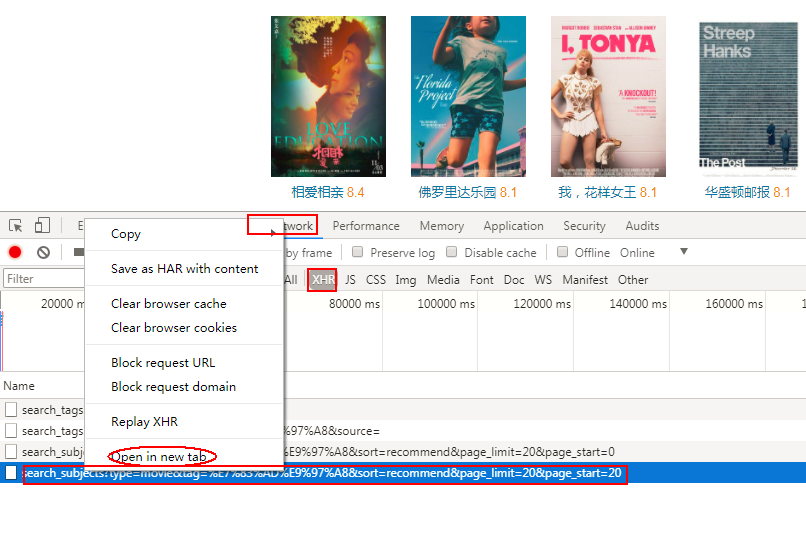
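The example above hard-codes the URL for page 2. To request other pages, the query string could be assembled from its parts, as in the sketch below. Several things here are assumptions rather than facts about the site: that `pageHelp.pageNo` and `pageHelp.beginPage` both carry the page number (they are both 2 in the captured URL), that `_` is just a millisecond timestamp, and that the server still answers when `pageHelp.endPage` is left out (its meaning is not clear from a single captured request). The function name `build_sse_url` is made up for illustration.

```python
import time
from urllib.parse import urlencode

BASE_URL = "http://query.sse.com.cn/security/stock/getStockListData2.do"


def build_sse_url(page_no, page_size=25, callback="jsonpCallback37110"):
    """Build the list-query URL for one page (parameter meanings are assumed)."""
    params = {
        "jsonCallBack": callback,
        "isPagination": "true",
        "stockCode": "",
        "csrcCode": "",
        "areaName": "",
        "stockType": 1,
        "pageHelp.cacheSize": 1,
        "pageHelp.beginPage": page_no,  # assumption: same value as pageNo
        "pageHelp.pageSize": page_size,
        "pageHelp.pageNo": page_no,
        "_": int(time.time() * 1000),   # assumption: millisecond timestamp
    }
    return BASE_URL + "?" + urlencode(params)


if __name__ == "__main__":
    print(build_sse_url(3))
```

If the server rejects the result, comparing it against a freshly captured request for the same page in the Network tab is the way to find out which parameter is off.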

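Here is another example.

URL: https://movie.douban.com/explore#!type=movie&tag=%E7%83%AD%E9%97%A8&sort=recommend&page_limit=20&page_start=0

Click "Inspect", open the Network tab, and select "XHR". Click "加载更多" (Load more) on the page and a new request appears; use "Open in new tab" to check whether it returns the content you need.

The URLs that get loaded are: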

- https://movie.douban.com/j/search_subjects?type=movie&tag=%E7%83%AD%E9%97%A8&sort=recommend&page_limit=20&page_start=0

- https://movie.douban.com/j/search_subjects?type=movie&tag=%E7%83%AD%E9%97%A8&sort=recommend&page_limit=20&page_start=20

- https://movie.douban.com/j/search_subjects?type=movie&tag=%E7%83%AD%E9%97%A8&sort=recommend&page_limit=20&page_start=40

- ..

- https://movie.douban.com/j/search_subjects?type=movie&tag=%E7%83%AD%E9%97%A8&sort=recommend&page_limit=20&page_start=320

These can be built as a list:

- urls = ['https://movie.douban.com/j/search_subjects?type=movie&tag=%E7%83%AD%E9%97%A8&sort=recommend&page_limit=20&page_start={}'.format(i*20) for i in range(0,17)]
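The same list can also be generated from a parameter dict, which makes it visible that `%E7%83%AD%E9%97%A8` is just the URL-encoded form of the tag 热门. This is a sketch using the standard library's `urlencode`; the function name `page_urls` and its defaults are chosen here for illustration.

```python
from urllib.parse import urlencode

BASE_URL = "https://movie.douban.com/j/search_subjects"


def page_urls(tag="热门", page_limit=20, pages=17):
    """Return one URL per page; page_start advances by page_limit each time."""
    urls = []
    for i in range(pages):
        params = {
            "type": "movie",
            "tag": tag,  # urlencode percent-encodes this as UTF-8
            "sort": "recommend",
            "page_limit": page_limit,
            "page_start": i * page_limit,
        }
        urls.append(BASE_URL + "?" + urlencode(params))
    return urls


if __name__ == "__main__":
    for u in page_urls(pages=2):
        print(u)
```

Changing `tag` is then enough to build the list for a different category without encoding the string by hand.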

Because the response is in JSON format, r.json() converts it into a dict.

The source code:

- import requests

- import time

- urls = ['https://movie.douban.com/j/search_subjects?type=movie&tag=%E7%83%AD%E9%97%A8&sort=recommend&page_limit=20&page_start={}'.format(i*20) for i in range(0,17)]

- for url in urls:

-     r = requests.get(url)

-     json_data = r.json()  # parse the JSON response into a dict

-     dict_datas = json_data['subjects']

-     for dict_data in dict_datas:

-         title = dict_data['title']

-         rate = dict_data['rate']

-         movie_url = dict_data['url']  # separate name so the loop variable url is not overwritten

-         print('{}-->{}-->{}'.format(title, rate, movie_url))

-     time.sleep(1)  # pause between requests
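The parsing step can be separated from the network call so it can be checked offline. The sketch below assumes only the response shape used above (a `subjects` list whose items have `title`, `rate` and `url`); the function names are made up, and the sample entry is invented placeholder data, not a real response.

```python
def extract_movies(json_data):
    """Return (title, rate, url) tuples from one parsed response dict."""
    movies = []
    for subject in json_data.get("subjects", []):
        movies.append((
            subject.get("title", ""),
            subject.get("rate", ""),
            subject.get("url", ""),
        ))
    return movies


def format_movie(movie):
    """Format one tuple the same way as the print call above."""
    return "{}-->{}-->{}".format(*movie)


if __name__ == "__main__":
    # Invented placeholder entry, only to exercise the functions
    sample = {"subjects": [
        {"title": "Example Title", "rate": "8.0",
         "url": "https://movie.douban.com/subject/0000000/"},
    ]}
    for movie in extract_movies(sample):
        print(format_movie(movie))
```

Inside the request loop, `extract_movies(r.json())` would take the place of the inner loop, and a response without a `subjects` key simply yields no rows instead of raising a KeyError.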


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
