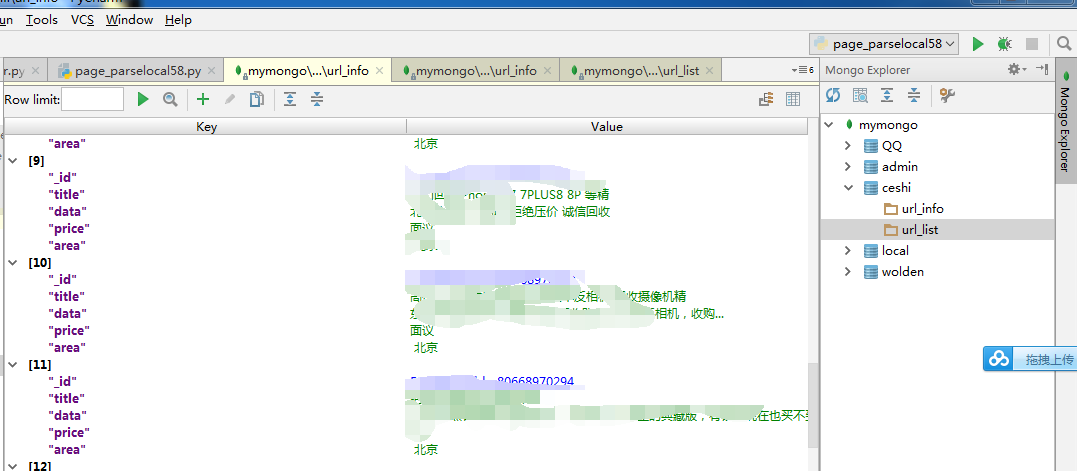

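```python
import time

import pymongo
import requests
from bs4 import BeautifulSoup

# Local MongoDB: database "ceshi" with one collection for links, one for details
client = pymongo.MongoClient("localhost", 27017)
ceshi = client['ceshi']
url_list = ceshi['url_list']
url_info = ceshi['url_info']


def get_list(channel_url, page):
    """Save every item link found on one listing page of a channel."""
    # rstrip('/') prevents a double slash such as http://bj.58.com/youxiji//pn4
    url = '{}/pn{}'.format(channel_url.rstrip('/'), page)
    wb_data = requests.get(url)
    time.sleep(1)  # throttle requests to the site
    soup = BeautifulSoup(wb_data.text, 'lxml')
    # A page past the last one has no <td class="t"> cells, so nothing is saved
    if soup.find('td', 't'):
        for links in soup.select('td.t a.t'):
            href = links.get('href')
            if not href:
                continue  # skip anchors without an href
            item_link = href.split('?')[0]  # drop the query string
            url_list.insert_one({'url': item_link})
            print(item_link)

# get_list('http://bj.58.com/qiulei/', 4)


def get_info(url, n):
    """Save title, description, price and area of the listings on one page."""
    wb_data = requests.get(url)
    time.sleep(1)  # throttle requests to the site
    soup = BeautifulSoup(wb_data.text, 'lxml')
    # Run each CSS selector once instead of once per loop iteration
    titles = soup.select('td.t > a')
    prices = soup.select('span.price')
    descs = soup.select('span.desc')
    areas = soup.select('td.t > span.fl > span:nth-of-type(1)')
    # [1:n] keeps the original range(1, n): skip the first match, stop before n.
    # zip stops at the shortest list, so a short page no longer raises IndexError.
    for title, price, data, area in list(zip(titles, prices, descs, areas))[1:n]:
        record = {"title": title.text, "data": data.text,
                  "price": price.text, "area": area.text}
        print(record)
        # insert_one adds an "_id" key to record, so print it first
        url_info.insert_one(record)


if __name__ == '__main__':
    get_list('http://bj.58.com/youxiji/', 4)
    get_info('http://bj.58.com/youxiji/', 25)
```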
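The script builds page URLs with string formatting and trims links with `split('?')[0]`. Here is a minimal sketch of the same two steps using the standard library's `urllib.parse`. It assumes 58.com keeps the `pn<N>` paging pattern used above. `page_url` and `strip_query` are hypothetical helper names and are not part of the original code. `urljoin` also resolves relative `href` values, which `split('?')[0]` would leave relative, and `urlunsplit` drops a `#fragment` as well as the query.

```python
from urllib.parse import urljoin, urlsplit, urlunsplit


def page_url(channel_url: str, page: int) -> str:
    """Build a listing-page URL such as http://bj.58.com/youxiji/pn4.

    Hypothetical helper; assumes the site pages with a 'pn<N>' segment.
    """
    # Without a trailing '/', urljoin would replace 'youxiji' with 'pn4'
    base = channel_url if channel_url.endswith('/') else channel_url + '/'
    return urljoin(base, f'pn{page}')


def strip_query(href: str, base: str = '') -> str:
    """Resolve href against base (if given), then drop query and fragment."""
    parts = urlsplit(urljoin(base, href))
    return urlunsplit((parts.scheme, parts.netloc, parts.path, '', ''))


if __name__ == '__main__':
    print(page_url('http://bj.58.com/youxiji/', 4))
    print(strip_query('http://bj.58.com/youxiji/123x.shtml?psid=abc#top'))
```

Either helper could stand in for the matching expression inside `get_list`. Whether the dropped query parameters matter to the site has not been checked here.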

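The script fetches only page 4 of one channel. Below is a sketch that walks the pages in order and stops at the first page with no listings, which is the same signal the `if soup.find('td', 't')` check relies on. `crawl_channel` and its `fetch_links` callback are hypothetical names. In the real script, `fetch_links` would wrap `requests.get`, `BeautifulSoup` and the `td.t a.t` selector. The `max_pages=100` cap is an arbitrary assumption, not a known 58.com limit. Passing the fetcher in as a parameter lets the loop be tried without network access.

```python
from typing import Callable, List


def crawl_channel(fetch_links: Callable[[str], List[str]],
                  channel_url: str, max_pages: int = 100) -> List[str]:
    """Visit pn1, pn2, ... until a page yields no links.

    Returns the unique links in the order they were first seen.
    max_pages is an arbitrary safety cap (assumption, not a site limit).
    """
    base = channel_url.rstrip('/')
    seen = set()
    collected = []
    for page in range(1, max_pages + 1):
        links = fetch_links('{}/pn{}'.format(base, page))
        if not links:
            # An empty page is taken to mean we are past the last page
            break
        for link in links:
            if link not in seen:  # skip links already seen on an earlier page
                seen.add(link)
                collected.append(link)
    return collected


if __name__ == '__main__':
    # Hypothetical in-memory "site" standing in for real HTTP requests
    fake_site = {
        'http://bj.58.com/youxiji/pn1': ['http://bj.58.com/youxiji/1.shtml',
                                         'http://bj.58.com/youxiji/2.shtml'],
        'http://bj.58.com/youxiji/pn2': ['http://bj.58.com/youxiji/2.shtml',
                                         'http://bj.58.com/youxiji/3.shtml'],
    }
    print(crawl_channel(lambda url: fake_site.get(url, []),
                        'http://bj.58.com/youxiji/'))
```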

( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
