This post was last edited by chenxz186 on 2018-7-6 20:29

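Does the one at TOP.1 look familiar? That's right, it's 波多野结衣, known to fans as 波姐, who has somehow become No.1 in the hearts of otaku everywhere. Impressive. Sadly I'm not much of a fan of her films; I prefer 水野朝阳, 小川阿佐美, 西野翔 and so on. Of course, you can use this program to find out where your own favorites sit in the ranking.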

This program uses a fair number of modules: requests, bs4, multiprocessing, time, datetime, and os.

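The crawler uses multiple processes, specifically the asynchronous, non-blocking apply_async method. Using apply instead would be no different from running a single process, because apply hands a task to a child process and blocks until that task finishes before moving on to the next one. Pool.apply is not actually deprecated, but the Python documentation does note that, because apply blocks, apply_async is better suited for performing work in parallel.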
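To make the difference concrete, here is a minimal sketch that runs the same tasks through apply and apply_async and times both. The names slow_square, run_blocking and run_async are made up for this illustration, and the 0.1 second sleep merely stands in for a slow download.

```python
import time
from multiprocessing import Pool


def slow_square(x):
    """Hypothetical stand-in for a slow task such as downloading one image."""
    time.sleep(0.1)
    return x * x


def run_blocking(pool, items):
    # apply() submits one task and waits for its result before returning,
    # so the tasks run one after another.
    return [pool.apply(slow_square, args=(x,)) for x in items]


def run_async(pool, items):
    # apply_async() returns an AsyncResult immediately, so every task is
    # queued first and the results are collected afterwards.
    pending = [pool.apply_async(slow_square, args=(x,)) for x in items]
    return [result.get() for result in pending]


if __name__ == '__main__':
    items = [1, 2, 3, 4]
    with Pool(4) as pool:
        for runner in (run_blocking, run_async):
            start = time.time()
            results = runner(pool, items)
            print(runner.__name__, results, '%.2fs' % (time.time() - start))
```

On a machine with spare cores the apply_async run should finish in roughly the time of one task, while the apply run takes about the sum of all of them.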

OK, code first.

    import datetime
    import os
    import time
    from multiprocessing import Pool

    import bs4
    import requests

    # A working version that learns from my previous failed attempt. Instead of
    # giving each of the 38 result pages its own child process, I give every
    # image on a page its own process: a page with 50 images gets 50 processes,
    # and page 38, which has only one image, gets a single process.


    def download_actress_img(data):
        """Download one image. data is [label, image_url, folder]."""
        folder = data[2]
        print(datetime.datetime.now())
        print('PID-%s' % os.getpid())
        print(data[0])
        actress_res = open_url(data[1])
        filename = os.path.join(folder, '%s.jpg' % data[0])
        print(filename)
        # 'wb' rather than 'ab': on a re-run, append mode would add a second
        # copy of the data to the existing file.
        with open(filename, 'wb') as f:
            f.write(actress_res.content)


    def find_actress_urls(soup):
        """Return a list of [rank + ' ' + name, image_url] for one page."""
        # Rankings
        actress_top = []
        all_need_td = soup.find_all('td', class_="main2_title_td")
        # all_need_td = soup.select('.main2_title_td')  # or use a CSS selector
        for each in all_need_td:
            actress_top.append(each.text)

        # Names
        actress_names = []
        all_need_a = soup.find_all('a', target="_blank")
        # all_need_a = soup.select('.clink')  # or use a CSS selector
        for each in all_need_a:
            # .get() avoids a KeyError on anchors that have no href
            if 'vote/rankdetail' in each.get('href', ''):
                actress_names.append(each.text)

        # Image URLs
        actress_urls = []
        all_need_img = soup.find_all('img')
        # all_need_img = soup.select('a img')  # or use a CSS selector
        for each in all_need_img:
            actress_urls.append('http://www.ttpaihang.com/image/vote/'
                                + each['src'].split('/', 3)[3])
        print(actress_urls)

        # Pair each rank and name with its image URL
        actress_data = []
        for j in range(len(actress_top)):
            actress_data.append([actress_top[j] + ' ' + actress_names[j],
                                 actress_urls[j]])
        return actress_data


    def cooking_soup(res):
        soup = bs4.BeautifulSoup(res.text, 'html.parser')
        return soup


    def open_url(url):
        headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) '
                                 'AppleWebKit/537.36 (KHTML, like Gecko) '
                                 'Chrome/55.0.2883.87 Safari/537.36'}
        res = requests.get(url, headers=headers)
        res.encoding = 'gbk'
        return res


    def file_func(folder='actressTop'):
        """Create the output folder if needed and return its absolute path."""
        os.makedirs(folder, exist_ok=True)
        return os.path.abspath(folder)


    def main():
        folder = file_func()
        for i in range(1, 39):
            page_url = 'http://www.ttpaihang.com/vote/rank.php?voteid=1089&page=%d' % i
            res = open_url(page_url)
            soup = cooking_soup(res)
            actress_data = find_actress_urls(soup)
            if not actress_data:
                continue  # Pool(0) would raise ValueError
            for item in actress_data:
                item.append(folder)
            print(actress_data)
            p = Pool(len(actress_data))  # one process per image on this page
            # p.map(download_actress_img, actress_data)
            # map() would replace the two lines below with one and was a bit
            # faster, but some images came out corrupted.
            for each in actress_data:
                p.apply_async(download_actress_img, args=(each,))
            p.close()
            p.join()


    if __name__ == '__main__':
        start_time = time.time()
        main()
        print('All done, took %d seconds' % (time.time() - start_time))
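The one line in the listing that is easy to misread is the image URL rebuild, `each['src'].split('/', 3)[3]`. Below is a small worked sketch of what it does. The sample src value is hypothetical (I am assuming a relative path with three slashes), rebuild_image_url is a name made up for this example, and urljoin from the standard library is shown only as an alternative, not as what the listing does.

```python
from urllib.parse import urljoin

IMAGE_BASE = 'http://www.ttpaihang.com/image/vote/'


def rebuild_image_url(src):
    """Mirror the listing: keep whatever follows the third '/' in src."""
    parts = src.split('/', 3)  # at most 3 splits, so at most 4 parts
    if len(parts) < 4:
        return None  # the listing would raise IndexError here
    return IMAGE_BASE + parts[3]


# Hypothetical values, for illustration only
sample_src = '../image/vote/20180101.jpg'
page_url = 'http://www.ttpaihang.com/vote/rank.php?voteid=1089&page=1'

print(rebuild_image_url(sample_src))
print(rebuild_image_url('logo.gif'))
# Alternative: resolve the relative src against the page URL instead
print(urljoin(page_url, sample_src))
```

Because find_all('img') returns every image on the page, any src with fewer than three slashes would make the original expression fail, which is why the sketch checks the length first.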

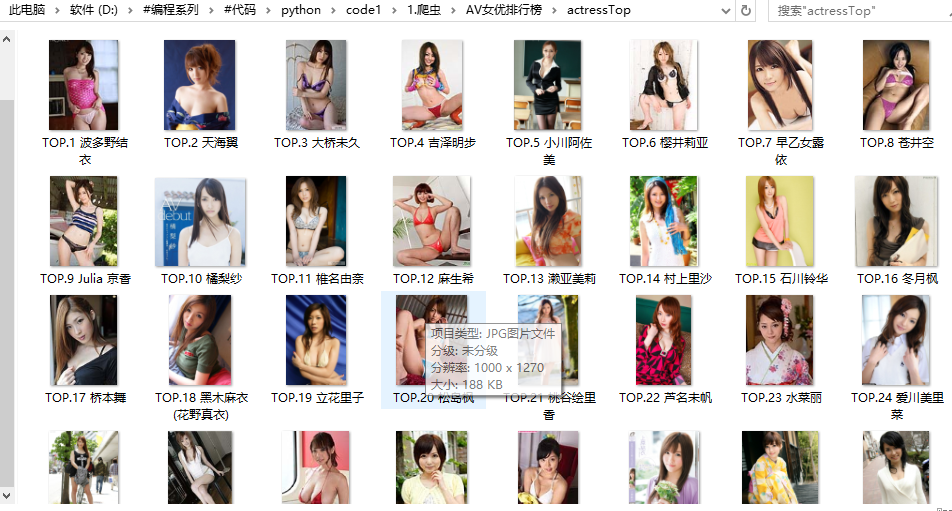

Click run and the program downloads every actress on the ranking, with her rank and picture. Just look at my screenshot above.
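One caveat: with apply_async, an exception raised inside a worker is not printed. It is stored in the returned AsyncResult and only re-raised when get() is called, so a failed download in download_actress_img would pass silently. A minimal sketch of how the failures could be collected follows; fetch and collect are hypothetical names, and fetch simply fails on odd numbers to simulate a bad download.

```python
from multiprocessing import Pool


def fetch(item):
    """Hypothetical stand-in for download_actress_img; fails on odd numbers."""
    if item % 2:
        raise ValueError('could not fetch item %d' % item)
    return item


def collect(pool, items):
    """Submit every item, then split the outcomes into successes and failures."""
    pending = [(item, pool.apply_async(fetch, args=(item,))) for item in items]
    done, failed = [], []
    for item, result in pending:
        try:
            # get() re-raises whatever the worker raised
            done.append(result.get(timeout=30))
        except Exception as exc:
            failed.append((item, repr(exc)))
    return done, failed


if __name__ == '__main__':
    with Pool(4) as pool:
        done, failed = collect(pool, range(6))
    print('ok:', done)
    print('failed:', failed)
```

The failed list could then be retried or simply logged, so it is clear which images are missing.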

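This is only the tip of the iceberg; take your time exploring the rest. With requests and bs4 in hand it feels like you can do whatever you like, haha. I've also used them to crawl some, ahem, naughtier sites, but let's stay on topic. If you'd like me to go through the code above piece by piece, leave a comment and I'll explain every function and module in detail, along with why I wrote it this way. That's it for today's post; I need to get back to my quant work.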


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
