Posted on 2019-3-15 10:45:00

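That data is probably generated by JavaScript, so I render the page with Splash first and then scrape it with Scrapy. The steps are as follows:

1. Install Scrapy and Splash; tutorials can be found on the forum.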

2. Create the Scrapy project, then add the following to settings.py:

    # URL of the Splash rendering service
    SPLASH_URL = 'http://192.168.99.100:8050'

    # Downloader middlewares
    DOWNLOADER_MIDDLEWARES = {
        'scrapy_splash.SplashCookiesMiddleware': 723,
        'scrapy_splash.SplashMiddleware': 725,
        'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 800,
    }

    # Spider middlewares
    SPIDER_MIDDLEWARES = {
        'scrapy_splash.SplashDeduplicateArgsMiddleware': 100,
    }

    # Duplicate filter that is aware of Splash requests
    DUPEFILTER_CLASS = 'scrapy_splash.SplashAwareDupeFilter'

    # HTTP cache storage that is aware of Splash requests
    HTTPCACHE_STORAGE = 'scrapy_splash.SplashAwareFSCacheStorage'
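Before running the spider, it can help to confirm that the Splash service at the configured address actually renders the page. The following is only a rough sketch, assuming Splash's HTTP `render.html` endpoint with its `url` and `wait` parameters; the helper names `build_render_url` and `fetch_rendered_html` are made up for illustration and are not part of scrapy_splash.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Same address as SPLASH_URL in settings.py; change it to your own Splash host.
SPLASH_URL = "http://192.168.99.100:8050"


def build_render_url(page_url, wait=0.5, splash_url=SPLASH_URL):
    """Build a Splash render.html URL for page_url (hypothetical helper)."""
    # 'wait' is the number of seconds Splash waits after page load
    # so that the page's JavaScript has time to run.
    query = urlencode({"url": page_url, "wait": wait})
    return f"{splash_url}/render.html?{query}"


def fetch_rendered_html(page_url, wait=0.5, timeout=30):
    """Ask Splash to render page_url and return the resulting HTML as text."""
    with urlopen(build_render_url(page_url, wait), timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

For example, `"job_detail" in fetch_rendered_html("https://www.lagou.com/jobs/5549207.html")` should be true if the rendered HTML contains the element that the XPath in this post targets. If it is false, the spider's XPath would not match either, so it is worth trying a larger `wait` first.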

Then the spider code is as follows:

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy_splash import SplashRequest


    class LagouJobSpider(scrapy.Spider):
        name = 'lagou_job'
        allowed_domains = ['www.lagou.com']
        urls = ['https://www.lagou.com/jobs/5549207.html']
        header = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/57.0.2987.98 Safari/537.36 LBBROWSER"}

        def start_requests(self):
            # Send the request through Splash so the JavaScript runs before parsing
            yield SplashRequest(self.urls[0], headers=self.header, callback=self.parse)

        def parse(self, response):
            # Print the first paragraph of the job description (still wrapped in HTML tags)
            print(response.xpath('//*[@id="job_detail"]/dd[2]/div/p[1]').extract_first())

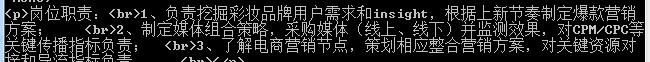

The scraped result looks like the screenshot above; all that is left is to strip the tags.
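Stripping the tags could be done in several ways. Below is a minimal sketch that uses only the standard library's `html.parser`; the class `_TextCollector` and the function `strip_tags` are illustrative names, not something from the original spider.

```python
from html.parser import HTMLParser


class _TextCollector(HTMLParser):
    """Collect text nodes and drop every tag."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.parts = []

    def handle_starttag(self, tag, attrs):
        # Treat <br> and <p> as word boundaries so adjacent text does not fuse.
        if tag in ("br", "p"):
            self.parts.append(" ")

    def handle_data(self, data):
        self.parts.append(data)


def strip_tags(fragment):
    """Return the text of an HTML fragment with tags removed and whitespace collapsed."""
    parser = _TextCollector()
    parser.feed(fragment)
    parser.close()
    return " ".join("".join(parser.parts).split())
```

In `parse`, the extracted string could then be passed through `strip_tags(...)` before printing. If you would rather stay within the Scrapy stack, `w3lib.html.remove_tags` or an XPath that selects text nodes should achieve a similar result.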

( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
