
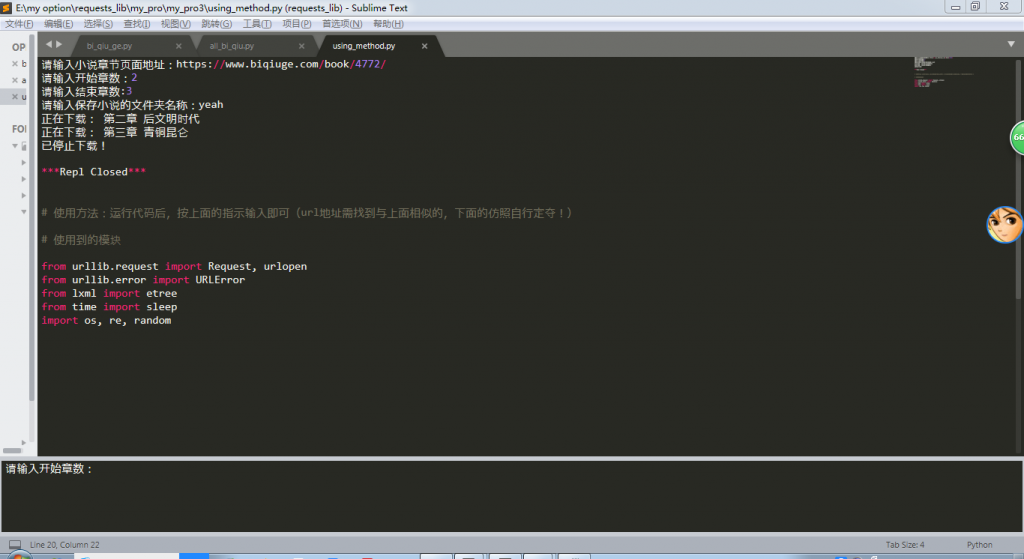

    # Site: https://www.biqiuge.com/ (笔趣阁, Biquge)
    from urllib.request import Request, urlopen
    from urllib.error import HTTPError, URLError
    from lxml import etree
    from time import sleep
    import os, random


    # Fetch a page and return its raw bytes, or None if the request fails
    def get_html(url):
        headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.157 Safari/537.36'}
        req = Request(url, headers=headers)
        try:
            response = urlopen(req)
        except HTTPError as e:
            # HTTPError is a subclass of URLError, so it has to be caught first
            print("The server couldn't fulfill the request.")
            print("Error code:", e.code)
        except URLError as e:
            print("We failed to reach a server.")
            print("Reason:", e.reason)
        else:
            return response.read()
        return None


    # Collect the URLs and titles of the requested chapter range
    def get_all_chapter(html):
        base_url = "https://www.biqiuge.com"
        start = int(input("Enter the first chapter number: "))
        end = int(input("Enter the last chapter number: "))
        html_x = etree.HTML(html)
        # The XPath skips the first six <dd> entries of the chapter list
        chapter_title = html_x.xpath('//div[@class="listmain"]//dd[position() > 6]/a/text()')
        chapter_title = chapter_title[start-1:end]
        all_chapter = html_x.xpath('//div[@class="listmain"]//dd[position() > 6]/a/@href')
        # List comprehension: turn each relative href into a full chapter URL
        each_chapter_url = [base_url + suffix for suffix in all_chapter]
        each_chapter_url = each_chapter_url[start-1:end]
        return [each_chapter_url, chapter_title]


    # Download each chapter's text and save it to its own file
    def get_content_and_save(all_chapter):
        files = input("Enter a folder name for the novel: ")
        os.makedirs(files, exist_ok=True)  # do not fail if the folder already exists
        for each_chapter_url, title in zip(all_chapter[0], all_chapter[1]):
            html = get_html(each_chapter_url)
            if html is None:
                # The request failed; skip this chapter instead of crashing on decode
                print("Skipping:", title)
                continue
            html = html.decode("gbk")
            tree = etree.HTML(html)
            # Collect the chapter body as a list of text nodes
            fiction_content = tree.xpath('//div[@id="content"]/text()')
            # Join the text nodes and tidy up line breaks and spaces
            text_content = "".join(fiction_content)
            beautiful_text = text_content.replace("\r", "\n").replace(" ", "")
            # Save
            print("Downloading:", title)
            with open(os.path.join(files, title + ".txt"), "w", encoding="utf-8") as f:
                f.write(beautiful_text)
            # Pause a few seconds between requests
            wait_time = random.choice([3, 4, 5, 6])
            sleep(wait_time)


    # Main function
    def get_fictions(url):
        html = get_html(url)
        if html is None:
            return
        html = html.decode("gbk")
        all_chapter = get_all_chapter(html)
        get_content_and_save(all_chapter)


    if __name__ == "__main__":
        url = input("Enter the URL of the novel's chapter list page: ")  # e.g. a URL in one of the formats below
        # url = "https://www.biqiuge.com/book/24277/"
        # url = "https://www.biqiuge.com/book/4772/"
        get_fictions(url)
        print("Download stopped!")
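
The script names each output file after its chapter title, so a title containing characters such as `/`, `?` or `:` could make `open()` fail, particularly on Windows. Below is a minimal sketch of one way to guard against that. The helper name `safe_filename`, the `fallback` parameter, and the choice of `_` as the replacement character are all assumptions for illustration and are not part of the original post.

```python
import re

# Characters that Windows rejects in file names ("/" is also invalid on
# Linux and macOS), plus line breaks and tabs.
_INVALID_CHARS = re.compile(r'[\\/:*?"<>|\r\n\t]')


def safe_filename(title, fallback="untitled"):
    """Hypothetical helper: return a version of `title` usable as a file name."""
    # Replace each invalid character with an underscore, then trim the
    # leading/trailing spaces and dots that Windows does not accept.
    cleaned = _INVALID_CHARS.sub("_", title).strip(" .")
    # Fall back to a placeholder if nothing usable is left.
    return cleaned or fallback


if __name__ == "__main__":
    print(safe_filename("Chapter 2: A/B"))  # prints "Chapter 2_ A_B"
```

To use it, wrap the chapter title in `safe_filename(...)` at the point where the output path is built.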


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
