Last edited by 金刚 on 2019-6-20 15:59

```python
import os
from time import sleep

import requests
from bs4 import BeautifulSoup
from lxml import etree


def rs_func_one(url):
    """Request the archive page that lists every gallery."""
    headers = {
        "referer": "https://www.mzitu.com/jiepai/",
        "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36"
    }
    rs = requests.get(url, headers=headers, timeout=10)
    return rs


def rs_func_two(url):
    """Request a gallery page, a single-image page or an image file.

    Sends /all/ as the Referer plus the cookies copied from a browser session.
    """
    headers = {
        "referer": "https://www.mzitu.com/all/",
        "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36"
    }
    # requests expects a {cookie name: value} dict, not the raw "Cookie" header string
    cookies = {
        'Hm_lvt_dbc355aef238b6c32b43eacbbf161c3c': '1560850727,1560931430,1560994737',
        'Hm_lpvt_dbc355aef238b6c32b43eacbbf161c3c': '1560998871',
    }
    rs = requests.get(url, headers=headers, cookies=cookies, timeout=10)
    sleep(1)  # pause 1 s after each request so we don't hammer the server
    return rs


def parse_html(html, start_group, end_group):
    """Return the URLs of groups start_group..end_group (1-based, inclusive)."""
    # Equivalent lxml version:
    # etree.HTML(html).xpath('//div[@class="all"]/ul[@class="archives"]//a[@target="_blank"]/@href')
    soup = BeautifulSoup(html, "lxml")
    url_list = soup.select('.all > .archives a[target="_blank"]')
    url_list = url_list[start_group - 1:end_group]
    return [tag_object['href'] for tag_object in url_list]


def parse_html_person(html):
    """Return the number of images (pages) in one group, as a string.

    The last <span> in the pager is the "next page" link, so the
    second-to-last one holds the last page number.
    """
    tree = etree.HTML(html)
    img_sum_person_list = tree.xpath("//div[@class='pagenavi']/a/span/text()")
    return img_sum_person_list[-2]


def parse_last_page_html(html):
    """Return the src of the main image on a single-image page (as a list)."""
    tree = etree.HTML(html)
    jpg_url_list = tree.xpath("//div[@class='main-image']/p/a/img/@src")
    return jpg_url_list


def save_img(img, url, folder):
    """Write the image bytes to folder, named after the last URL segment."""
    filename = url.split('/')[-1]
    print('Downloading:', filename)
    with open(os.path.join(folder, filename), 'wb') as fp:
        fp.write(img)
```

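Two counting steps in the helpers above are easy to get wrong. `parse_html` turns a 1-based, inclusive group range into a Python slice. `parse_html_person` takes the second-to-last pager `<span>` because the last one is the next-page link.

Below is a minimal sketch of both steps. It uses the standard library's `html.parser` instead of bs4/lxml so it runs offline. `PagenaviParser`, `page_count`, `select_groups` and the `SAMPLE` markup are all hypothetical. They are modeled on the XPath `//div[@class='pagenavi']/a/span/text()` and are not copied from the site.

```python
from html.parser import HTMLParser


class PagenaviParser(HTMLParser):
    """Hypothetical helper: collect the text of each <span> inside an <a>
    inside <div class="pagenavi">. Like the XPath, it needs the class to be
    exactly "pagenavi", but it does not enforce direct parent/child nesting."""

    def __init__(self):
        super().__init__()
        self.div_depth = 0     # > 0 while inside the pagenavi div
        self.in_link = False   # inside an <a> within that div
        self.in_span = False   # inside a <span> within that <a>
        self.spans = []

    def handle_starttag(self, tag, attrs):
        if tag == "div":
            if self.div_depth:
                self.div_depth += 1  # nested div: keep depth to find the matching </div>
            elif ("class", "pagenavi") in attrs:
                self.div_depth = 1
        elif tag == "a" and self.div_depth:
            self.in_link = True
        elif tag == "span" and self.in_link:
            self.in_span = True
            self.spans.append("")

    def handle_endtag(self, tag):
        if tag == "div" and self.div_depth:
            self.div_depth -= 1
        elif tag == "a":
            self.in_link = False
        elif tag == "span":
            self.in_span = False

    def handle_data(self, data):
        if self.in_span:
            self.spans[-1] += data.strip()


def page_count(html):
    """Pages in a gallery: the second-to-last span (the last is "next page")."""
    parser = PagenaviParser()
    parser.feed(html)
    return int(parser.spans[-2])


def select_groups(urls, start_group, end_group):
    """1-based inclusive range (e.g. 5-10) -> Python slice [4:10]."""
    return urls[start_group - 1:end_group]


# Hypothetical pager markup; the "dots" span is outside any <a>, so it is skipped
SAMPLE = """
<div class="pagenavi">
  <span class="dots">...</span>
  <a href="/123/2"><span>2</span></a>
  <a href="/123/46"><span>46</span></a>
  <a href="/123/2"><span>Next &raquo;</span></a>
</div>
"""

print(page_count(SAMPLE))                         # 46
print(select_groups(list(range(1, 21)), 5, 10))  # [5, 6, 7, 8, 9, 10]
```

Entering 5 and 10 therefore yields six groups, not five.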
- # 主函数

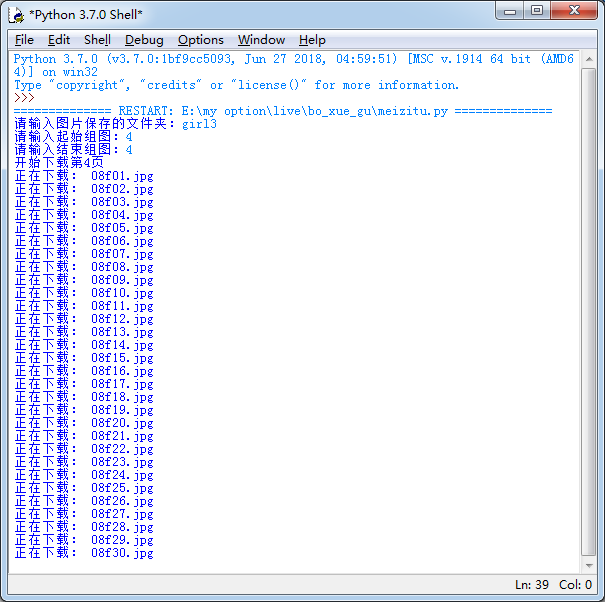

- def main():

- folder = input('请输入图片保存的文件夹:')

- os.mkdir(folder)

- # 输入阿拉伯数字,用法例:5-10 到现在为止有2997组图片,可以输入1-2997。之后还会更新

- start_group = int(input('请输入起始组图:'))

- end_group = int(input('请输入结束组图:'))

- syn_url = "https://www.mzitu.com/all/"

- # 请求

- rs = rs_func_one(syn_url)

- # print(rs.status_code)

- # print(rs.url)

- html = rs.text

- # print(html)

- # 组图url保存到列表

- url_list = parse_html(html, start_group, end_group)

- # print(url_list)

- start = start_group

- for group_url in url_list:

- print('开始下载第{}页'.format(start))

- rs = rs_func_two(group_url)

- html_person = rs.text

- # print(html_person)

- # 每个图片的总数量

- img_sum_person = parse_html_person(html_person)

- # print(img_sum_person)

- for img_num_url in range(1, int(img_sum_person)+1):

- # each_img_url = group_url + '/' + img_num_url

- each_img_url = '{}{}{}'.format(group_url, '/', img_num_url)

- # print(each_img_url)

- rs = rs_func_two(each_img_url)

- last_page_html = rs.text

- # print(last_page_html)

- # exit()

- jpg_url_list = parse_last_page_html(last_page_html)

- # print(jpg_url_list)

- # 一个图片地址

- jpg_url = jpg_url_list[0]

- rs = rs_func_two(jpg_url)

- # print(rs.status_code)

- # exit()

- img = rs.content

- save_img(img, jpg_url, folder)

- print('结束下载第{}页'.format(start))

- start += 1

- if __name__ == '__main__':

- main()

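`main()` builds each image page as `<group_url>/<n>`, and `save_img` names the file after the last URL segment. The sketch below isolates those two steps using only the standard library.

`page_urls`, `filename_from_url` and `save_bytes` are hypothetical helpers, and the `example.com` URLs are placeholders. It differs from the script in three small ways:

- it strips a trailing `/` from the group URL;
- it ignores any `?query` or `#fragment` when naming the file;
- it creates the target folder on demand.

```python
import os
import tempfile
from urllib.parse import urlsplit


def page_urls(group_url, count):
    """Hypothetical helper: <group_url>/1 ... <group_url>/<count>."""
    base = group_url.rstrip("/")  # avoid '//' if the link ends with a slash
    return ["{}/{}".format(base, n) for n in range(1, count + 1)]


def filename_from_url(url):
    """Hypothetical helper: last path segment, ignoring ?query and #fragment."""
    return os.path.basename(urlsplit(url).path)


def save_bytes(data, url, folder):
    """Hypothetical helper: write data to folder/<filename>, creating folder if needed."""
    os.makedirs(folder, exist_ok=True)
    path = os.path.join(folder, filename_from_url(url))
    with open(path, "wb") as fp:
        fp.write(data)
    return path


# Usage with placeholder URLs and fake bytes (no network access)
print(page_urls("https://example.com/123/", 3))
# ['https://example.com/123/1', 'https://example.com/123/2', 'https://example.com/123/3']
with tempfile.TemporaryDirectory() as tmp:
    saved = save_bytes(b"\xff\xd8\xff", "https://example.com/2019/06/a01.jpg?v=2",
                       os.path.join(tmp, "pics"))
    print(os.path.basename(saved))  # a01.jpg
```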
(ICP filing: 粤ICP备18085999号-1 | Public security filing: 粤公网安备 44051102000585号)