
Reward: 100 鱼币 (forum coins)

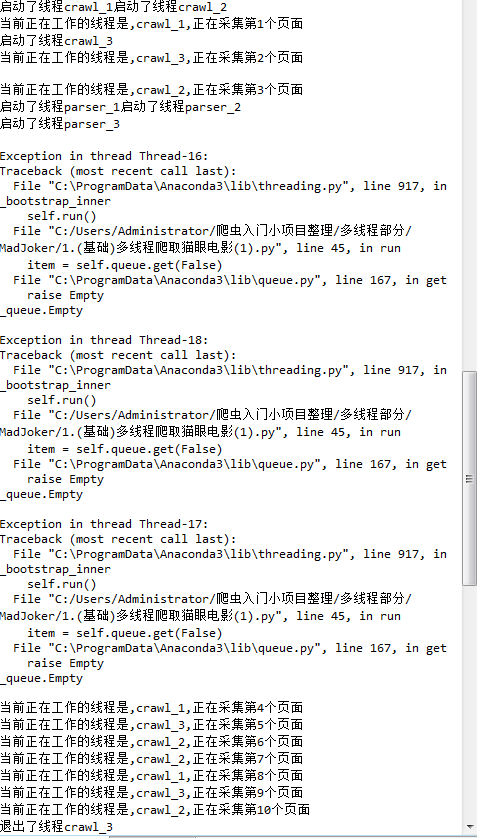

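This is a very simple multithreaded crawler. I've spent a whole day modifying it and it still has all sorts of problems. The crawler class should be fine (though I'm not completely sure, since I'm a beginner), because I tested it on its own, and I've confirmed that the selector part of the parser class is correct. But when I run the whole thing, all kinds of problems still come up. These coins are all I have. The coins don't really matter, though; I genuinely want to learn web scraping properly. Could any experts passing by please help fix it? Ideally, post the corrected code and point out the weaknesses in mine. Thanks! Here is the code: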

```python
from bs4 import BeautifulSoup as bs
from queue import Queue
import threading
import requests
import json


class Crawl_thread(threading.Thread):
    def __init__(self, thread_name, queue):
        threading.Thread.__init__(self)
        self.thread_name = thread_name
        self.queue = queue

    def run(self):
        print('Started thread {}'.format(self.thread_name))
        self.crawl_spider()
        print('Exited thread {}'.format(self.thread_name))

    def crawl_spider(self):
        while True:
            if self.queue.empty():  # check whether the queue is empty
                break
            else:
                page = self.queue.get()  # take an item from the queue
                print('Current working thread: {}, crawling page {}'.format(self.thread_name, str(page + 1)))
                base_url = 'https://maoyan.com/board/4?offset={}'
                url = base_url.format(str(page * 10))
                headers = {
                    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36'
                }
                response = requests.get(url, headers=headers)
                data = response.content.decode('utf-8')
                data_queue.put(data)


class Parser_thread(threading.Thread):
    def __init__(self, thread_name, queue, file):
        threading.Thread.__init__(self)
        self.thread_name = thread_name
        self.queue = queue
        self.file = file

    def run(self):
        print('Started thread {}'.format(self.thread_name))
        while not flag:
            # with block=False, get() raises an exception when the queue is empty
            item = self.queue.get(False)
            if not item:
                pass
            self.parse_data(item)
            self.queue.task_done()  # after each get(), signal whether join() should keep blocking
        print('Exited thread {}'.format(self.thread_name))

    def parse_data(self, item):
        '''
        Parse the content of a web page
        '''
        soup = bs(item, 'lxml')
        dd_list = soup.select('dl dd')
        for dd in dd_list:
            film_img = dd.find('img', attrs={'class': 'board-img'}).attrs['data-src']
            film_title = dd.find('p', attrs={'class': 'name'}).find('a').get_text()
            film_score = dd.find('p', attrs={'class': 'score'}).get_text()
            film_star = dd.find('p', attrs={'class': 'star'}).get_text()
            film_releasetime = dd.find('p', attrs={'class': 'releasetime'}).get_text()
            response = {
                '电影图片': film_img,  # film poster image
                '电影标题': film_title,  # film title
                '电影得分': film_score,  # film score
                '电影主演': film_star,  # starring
                '上映时间': film_releasetime  # release date
            }
            self.file.write(json.dumps(response).encode('utf-8') + '\n')


data_queue = Queue()  # create a queue
flag = False  # global variable


def main():
    output = open('maoyan.json', 'a')
    pagequeue = Queue(50)
    for page in range(10):
        pagequeue.put(page)

    # initialize the crawler threads
    crawl_threads = []
    crawl_name_list = ['crawl_1', 'crawl_2', 'crawl_3']
    for thread_name in crawl_name_list:
        thread = Crawl_thread(thread_name, pagequeue)
        thread.start()  # start the thread
        crawl_threads.append(thread)  # add it to the list

    # initialize the parser threads
    parser_threads = []
    parser_name_list = ['parser_1', 'parser_2', 'parser_3']
    for thread_name in parser_name_list:
        thread = Parser_thread(thread_name, data_queue, output)
        thread.start()  # start the thread
        parser_threads.append(thread)  # add it to the list

    # wait for the queue
    while not pagequeue.empty():
        pass

    # wait for all the threads to finish
    for t in crawl_threads:
        t.join()

    while not data_queue.empty():
        pass

    # tell the threads to exit
    global flag

    for t in parser_threads:
        t.join()

    print('Exited main thread')
    output.close()


if __name__ == '__main__':
    main()
```
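For comparison, here is one possible way to restructure the same crawl/parse pipeline. It is a minimal, stdlib-only sketch, not a drop-in replacement: it assumes the download and the BeautifulSoup parsing are passed in as plain functions, and the names `run_pipeline`, `fetch`, `parse` and `write` are hypothetical, not from the code above. It targets these problems in the posted code:

- `empty()` followed by `get()` can race: another crawler may take the last page in between, leaving this thread blocked in `get()` forever. `get_nowait()` plus `queue.Empty` avoids that.
- In the parser, `get(False)` raises `queue.Empty` uncaught, and `flag` is never set to `True` (only `global flag` is declared), so the parser threads either crash or never stop. Here a blocking `get()` plus one `None` sentinel per parser ends them cleanly.
- `json.dumps(...).encode('utf-8') + '\n'` adds bytes to str, which raises `TypeError`; the sketch writes a `str` with `ensure_ascii=False`.
- Several threads write to one file, so writes are guarded by a lock, and the `while ...: pass` busy-waits are replaced by `join()`.

```python
import json
import queue
import threading


def run_pipeline(pages, fetch, parse, write, n_crawlers=3, n_parsers=3):
    """Hypothetical helper: fetch every page, parse it, write one JSON line per record.

    fetch(page) -> html text; parse(html) -> iterable of dicts; write(str) -> None.
    """
    page_queue = queue.Queue()
    data_queue = queue.Queue()
    write_lock = threading.Lock()  # all parser threads share one output
    for page in pages:
        page_queue.put(page)

    def crawl_worker():
        while True:
            try:
                # get_nowait() replaces the empty()/get() pair, which can race:
                # another thread may take the last page between the two calls.
                page = page_queue.get_nowait()
            except queue.Empty:
                return  # no pages left
            try:
                data_queue.put(fetch(page))
            except Exception as exc:  # one failed download should not kill the thread
                print('fetch failed for page {}: {!r}'.format(page, exc))

    def parse_worker():
        while True:
            html = data_queue.get()  # blocks until data or a sentinel arrives
            if html is None:  # sentinel: the crawlers are finished
                return
            try:
                records = list(parse(html))
            except Exception as exc:  # e.g. find() returned None on an unexpected page
                print('parse failed: {!r}'.format(exc))
                continue
            for record in records:
                # json.dumps returns str; ensure_ascii=False keeps Chinese text readable
                line = json.dumps(record, ensure_ascii=False) + '\n'
                with write_lock:
                    write(line)

    crawlers = [threading.Thread(target=crawl_worker) for _ in range(n_crawlers)]
    parsers = [threading.Thread(target=parse_worker) for _ in range(n_parsers)]
    for t in crawlers + parsers:
        t.start()
    for t in crawlers:
        t.join()  # every page has been fetched or has failed
    for _ in parsers:
        data_queue.put(None)  # one sentinel per parser, queued after all the data
    for t in parsers:
        t.join()  # every queued page has been parsed and written
```

To plug this into the real crawler, `fetch` would wrap the original `requests.get(url, headers=headers)` call (ideally with a `timeout=` argument) and return the decoded page, `parse` would be the `parse_data` loop changed to `yield` each dict instead of writing it, and `write` would be `output.write` on a file opened with `open('maoyan.json', 'a', encoding='utf-8')`. Because the sentinels are queued only after every crawler has joined, each parser sees all the real data before it sees its `None`.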


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
