Posted on 2019-9-14 21:21:43

This post was last edited by huazisheng on 2019-9-15 06:31

    import requests
    import json
    from lxml import etree
    import time
    import csv

    fp = open('C:/Users/Administrator/Desktop/simu.csv', 'wt', newline='', encoding='utf-8')
    writer = csv.writer(fp)
    # Columns: URL, company name, person in charge, date founded, registered address,
    # office address, paid-in capital, institution type, full-time staff,
    # qualified staff, company website
    writer.writerow(('网址','企业名称','负责人','成立时间','注册地址','办公地址','实缴资本','机构类型','全职人数','资格人数','机构网址'))

    # 'Content-Length' is left out on purpose: requests computes it for the POST body,
    # and a hard-coded value would be wrong for the body-less GET in get_info().
    headers = {
        'Accept': 'application/json, text/javascript, */*; q=0.01',
        'Accept-Encoding': 'gzip, deflate',
        'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',
        'Cache-Control': 'no-cache',
        'Connection': 'keep-alive',
        'Content-Type': 'application/json',
        'Host': 'gs.amac.org.cn',
        'Origin': 'http://gs.amac.org.cn',
        'Pragma': 'no-cache',
        'Referer': 'http://gs.amac.org.cn/amac-infodisc/res/pof/manager/managerList.html',
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.100 Safari/537.36',
        'X-Requested-With': 'XMLHttpRequest'
    }

    def get_info(url):
        res = requests.get(url, headers=headers)
        res.raise_for_status()
        res.encoding = res.apparent_encoding
        selector = etree.HTML(res.text)
        企业名称 = selector.xpath('//*[@id="complaint1"]')[0].text[:-6]
        成立时间 = selector.xpath('/html/body/div/div[2]/div/table/tbody/tr[7]/td[4]')[0].text
        注册地址 = selector.xpath('/html/body/div/div[2]/div/table/tbody/tr[8]/td[2]')[0].text
        办公地址 = selector.xpath('/html/body/div/div[2]/div/table/tbody/tr[9]/td[2]')[0].text
        实缴资本 = selector.xpath('/html/body/div/div[2]/div/table/tbody/tr[10]/td[4]')[0].text
        机构类型 = selector.xpath('/html/body/div/div[2]/div/table/tbody/tr[12]/td[2]')[0].text
        全职人数 = selector.xpath('/html/body/div/div[2]/div/table/tbody/tr[13]/td[2]')[0].text
        资格人数 = selector.xpath('/html/body/div/div[2]/div/table/tbody/tr[13]/td[4]')[0].text
        负责人 = selector.xpath('/html/body/div/div[2]/div/table/tbody/tr[22]/td[2]')[0].text
        # /html/body/div/div[2]/div/table/tbody/tr[22]/td[2]  # the path on some detail pages
        # /html/body/div/div[2]/div/table/tbody/tr[21]/td[2]  # the path on other detail pages, so it is not consistent
        机构网址 = selector.xpath('/html/body/div/div[2]/div/table/tbody/tr[14]/td[2]/a')[0].text
        # /html/body/div/div[2]/div/table/tbody/tr[14]/td[2]    # the path when the field is blank
        # /html/body/div/div[2]/div/table/tbody/tr[14]/td[2]/a  # the path when the field is filled in, so it is not consistent
        # 机构网址 is written as well, so each row matches the 11-column header
        writer.writerow((url,企业名称,负责人,成立时间,注册地址,办公地址,实缴资本,机构类型,全职人数,资格人数,机构网址))

    for i in range(0, 3):
        url = f"http://gs.amac.org.cn/amac-infodisc/api/pof/manager?rand=0.6122678870895568&page={i}&size=20"
        # "content" is a list of dicts, one per fund manager
        res = requests.post(url=url, data=json.dumps({}), headers=headers).json().get("content")
        for each in res:
            # Build the detail-page URL; other fields can be read from `each` the same way, with .get("key")
            url = "http://gs.amac.org.cn/amac-infodisc/res/pof/manager/" + each.get("url")
            get_info(url)
            #time.sleep(2)
    fp.close()

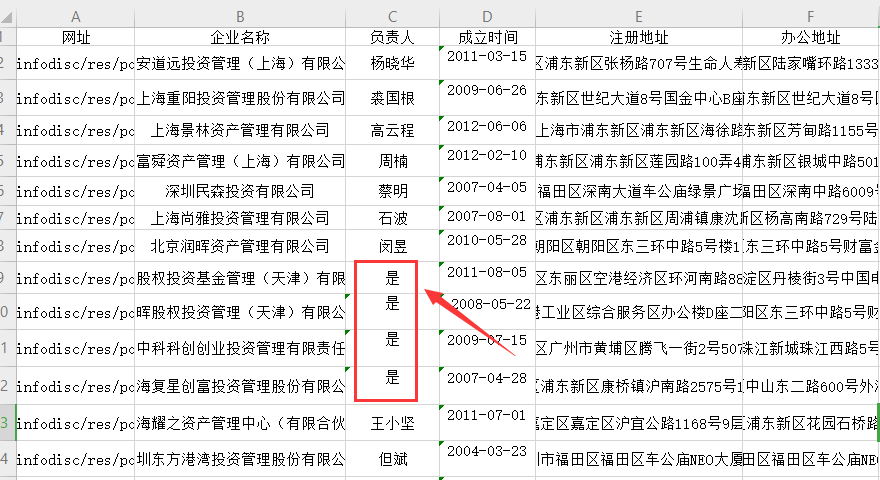

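When I extract the second-level detail pages with XPath, some of the element paths are not the same from page to page, so the extraction either fails or returns the wrong value.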
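One possible way around the shifting row numbers is to look each value up by the text of its label cell instead of by position. The sketch below is only an illustration under assumptions: it assumes the detail table is made of label/value `<td>` pairs, the label strings in the sample HTML are made up, and the names `LabelTableParser` and `fields_by_label` are hypothetical. It uses the standard library's `html.parser` rather than lxml so that it runs without extra installs. With lxml, the same idea would probably be an XPath along the lines of `string(//td[contains(normalize-space(.), "LABEL")]/following-sibling::td[1])`, which also picks up text nested inside an `<a>`.

```python
from html.parser import HTMLParser


class LabelTableParser(HTMLParser):
    """Collect the text of every <td>, grouped by <tr> (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.rows = []      # one list of cell texts per table row
        self._cell = None   # text fragments of the <td> being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag == "td":
            if not self.rows:
                self.rows.append([])
            self._cell = []

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None:
            # Join nested text (for example inside <a>) and collapse whitespace.
            self.rows[-1].append(" ".join("".join(self._cell).split()))
            self._cell = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def fields_by_label(html):
    """Map each label cell to the text of the cell that follows it."""
    parser = LabelTableParser()
    parser.feed(html)
    parser.close()
    fields = {}
    for row in parser.rows:
        # Assumes cells come in (label, value) pairs: td[1]/td[2], td[3]/td[4].
        for label, value in zip(row[0::2], row[1::2]):
            fields[label.rstrip(":：")] = value
    return fields


# Made-up sample pages: the same labels sit on different rows,
# and the website is either a link or an empty cell.
SAMPLE_A = """
<table>
  <tr><td>机构网址:</td><td><a href="http://example.com">http://example.com</a></td></tr>
  <tr><td>法定代表人:</td><td>张三</td></tr>
</table>
"""
SAMPLE_B = """
<table>
  <tr><td>员工人数:</td><td>12</td><td>资格人数:</td><td>8</td></tr>
  <tr><td>机构网址:</td><td></td></tr>
  <tr><td>额外信息:</td><td>x</td></tr>
  <tr><td>法定代表人:</td><td>李四</td></tr>
</table>
"""

for page in (SAMPLE_A, SAMPLE_B):
    info = fields_by_label(page)
    # .get() with a default avoids an exception when a label is missing.
    print(info.get("法定代表人", ""), info.get("机构网址", ""))
```

Because the lookup is keyed on the label, an extra row above the field or a blank website cell no longer changes which value is returned. The real label strings would have to be copied from the actual detail pages, and a table nested inside a cell would need extra handling.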


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
