
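Baidu Images only loads further items as you scroll down, so a plain crawl generally gets just the first 20 images. Yesterday's version had mistakes, so I have fixed it up.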
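
To illustrate the scroll-to-load idea on its own, here is a minimal sketch that keeps scrolling until the item count stops growing. The names `scroll_until_stable`, `count_items`, `scroll_once` and `FakePage` are hypothetical and not part of the script below; `FakePage` is only a stand-in that reveals 20 more items per scroll up to an arbitrary total, so the loop can be tried without a browser.

```python
def scroll_until_stable(count_items, scroll_once, max_rounds=10):
    """Scroll until the item count stops growing or max_rounds is reached.

    count_items and scroll_once are caller-supplied callables (assumptions
    for this sketch). Returns the final item count.
    """
    seen = count_items()
    for _ in range(max_rounds):
        scroll_once()
        current = count_items()
        if current <= seen:
            # Nothing new appeared after this scroll, so stop.
            break
        seen = current
    return seen


class FakePage:
    """Stand-in for a lazily loading page: 20 items per scroll, capped at total."""

    def __init__(self, total=65, batch=20):
        self.total = total
        self.batch = batch
        self.loaded = min(batch, total)

    def count(self):
        return self.loaded

    def scroll(self):
        self.loaded = min(self.loaded + self.batch, self.total)


page = FakePage()
print(scroll_until_stable(page.count, page.scroll))
```

With Selenium, `count_items` could plausibly be something like `lambda: len(browser.find_elements(By.CSS_SELECTOR, '.imgitem'))`, and `scroll_once` could call `browser.execute_script('window.scrollTo(0, document.body.scrollHeight);')` followed by a short wait so the new items have time to load. A real page may need a longer pause than this sketch implies.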

- from selenium import webdriver

- import time

- import json

- import re

- import random

- from selenium.webdriver.common.by import By

- from selenium.webdriver.common.keys import Keys

- from selenium.webdriver.support import expected_conditions as EC

- from selenium.webdriver.support.wait import WebDriverWait

- from selenium.common.exceptions import TimeoutException, NoSuchElementException

- from pyquery import PyQuery as pq

- import os,os.path

- import urllib.request

- # Configure the browser driver

- chrome_driver = r'C:\Users\Chysial\AppData\Local\Google\Chrome\Application\chromedriver.exe'

- browser = webdriver.Chrome(chrome_driver)

- wait = WebDriverWait(browser,10)

- browser.get('https://www.baidu.com/')

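- # Simulate login with cookies. get_cookies generally only needs to run once; I commented out the call later while debugging.

- def get_cookies(browser):

-     # The exported cookies' expiry needs attention: either change expiry to an int directly in the saved file,

-     # or convert it before writing the file; otherwise the file has to be edited afterwards.

-     time.sleep(50)

-     with open('C:\\Users\\Chysial\\Desktop\\cookies.txt','w') as cookief:

-         cookief.write(json.dumps(browser.get_cookies()))

-     browser.close()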

- def open_chrome(browser):

-     browser.delete_all_cookies()

-     with open('C:\\Users\\Chysial\\Desktop\\cookies.txt') as cookief:

-         cookieslist = json.load(cookief)

-     for cookie in cookieslist:

-         browser.add_cookie(cookie)

- # Navigate step by step from the Baidu home page into Baidu Images

- def search():

-     try:

-         search_box = wait.until(

-             EC.presence_of_element_located((By.CSS_SELECTOR, '#kw'))

-         )

-         submit = wait.until(

-             EC.presence_of_element_located((By.CSS_SELECTOR, '#su'))

-         )

-         search_box.send_keys("百度图片")

-         submit.click()

-         wait.until(

-             EC.presence_of_element_located((By.CSS_SELECTOR, '#page > a.n'))

-         )

-         html = browser.page_source

-         doc = pq(html)

-         # Take the link of the first search result

-         items = doc('#1 .t a')

-         links = []

-         for each in items.items():

-             links.append(each.attr('href'))

-         browser.get(links[0])

-         search_box = wait.until(

-             EC.presence_of_element_located((By.CSS_SELECTOR, '#kw'))

-         )

-         search_box.send_keys("坂井泉水")

-         search_box.send_keys(Keys.ENTER)

-         wait.until(

-             EC.presence_of_element_located((By.CSS_SELECTOR, '.imgpage .imglist .imgitem'))

-         )

-         scorll_num(3)

-         wait.until(

-             EC.presence_of_element_located((By.CSS_SELECTOR, '.imgpage .imglist .imgitem'))

-         )

-         result = get_products()

-         return result

-     except TimeoutException:

-         browser.refresh()

-         # Return an empty result so the caller has nothing to download

-         return {}

-

- # Collect the URLs of her pictures

- def get_products():

-     html = browser.page_source

-     doc = pq(html)

-     items = doc('.imgpage .imglist .imgitem').items()

-     product = {}

-     for i, each in enumerate(items, start=1):

-         product['picture' + str(i)] = each.attr('data-objurl')

-     return product

-

- def scorll_num(num = 10):

-     for i in range(num):

-         target = browser.find_element(By.ID, "pageMoreWrap")

-         browser.execute_script("arguments[0].scrollIntoView();", target)  # scroll the element into view

-         wait.until(

-             EC.presence_of_element_located((By.CSS_SELECTOR, '#pageMoreWrap'))

-         )

- # Save

- def save_class(name,url):

-     req = urllib.request.Request(url)

-     with urllib.request.urlopen(req) as response:

-         img = response.read()

-     # Write the file only if the URL contains a known image extension

-     for ext in ('.jpg', '.jpeg', '.png'):

-         if ext in url:

-             with open(name + ext, 'wb') as f:

-                 f.write(img)

-             break

- #下载

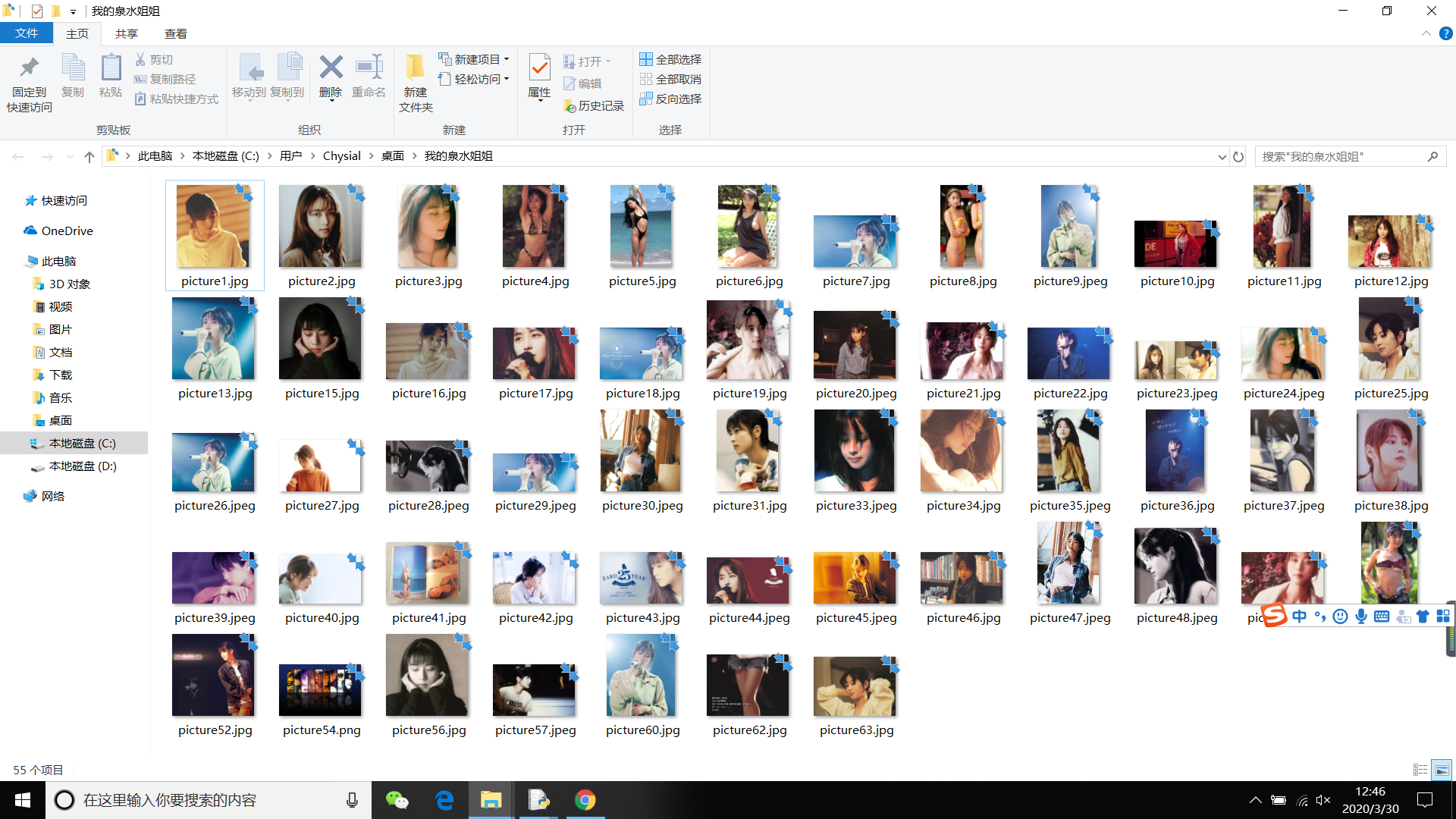

- def download_picture(folder='坂井泉水'):

- os.chdir("C:\\Users\\Chysial\\Desktop")

- os.mkdir(folder)

- os.chdir(folder)

- each = search()

- for i in each:

- try:

- save_class(i,each[i])

- except:

- continue

-

-

- def main():

-     # Only needs to run once, so I do not repeat it here: get_cookies(browser)

-     open_chrome(browser)

-     browser.refresh()

-     download_picture(folder='我的泉水姐姐')

-

- if __name__ == '__main__':

-     main()
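
The comment in `get_cookies` mentions changing `expiry` to an int before the cookies are written out. A minimal sketch of that conversion is below. `normalize_cookies` is a hypothetical helper, not part of the script above, and the sample cookie dicts are made up for illustration.

```python
import json


def normalize_cookies(cookies):
    """Return copies of the cookie dicts with any 'expiry' value coerced to int."""
    cleaned = []
    for cookie in cookies:
        cookie = dict(cookie)  # copy so the caller's dicts are left untouched
        if "expiry" in cookie:
            cookie["expiry"] = int(cookie["expiry"])
        cleaned.append(cookie)
    return cleaned


# Made-up sample data: one cookie with a float expiry, one without expiry.
raw = [
    {"name": "example_token", "value": "abc", "expiry": 1893456000.75},
    {"name": "example_session", "value": "xyz"},
]
print(json.dumps(normalize_cookies(raw)))
```

Under this assumption, `get_cookies` could write `json.dumps(normalize_cookies(browser.get_cookies()))` instead of dumping the raw list, so the saved file would not need editing by hand.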

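`save_class` picks the file extension by checking whether `.jpg`, `.jpeg` or `.png` appears anywhere in the URL. As an alternative sketch, the extension can be read from the URL path with the standard library, which ignores the query string. `guess_extension`, `KNOWN_EXTENSIONS` and the `.jpg` fallback are assumptions for illustration, not the original behaviour (the original simply skips URLs with no match).

```python
import os.path
from urllib.parse import urlparse

# Extensions the original save_class recognises.
KNOWN_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def guess_extension(url, default=".jpg"):
    """Return the image extension found in the URL path, or default if unknown."""
    path = urlparse(url).path
    ext = os.path.splitext(path)[1].lower()
    return ext if ext in KNOWN_EXTENSIONS else default


print(guess_extension("https://example.com/a/b/photo.PNG?size=large"))
print(guess_extension("https://example.com/img/123"))
```

Passing `default=None` would let the caller skip unknown URLs, which is closer to what the script does now.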

( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)