Bounty: 6 fish coins

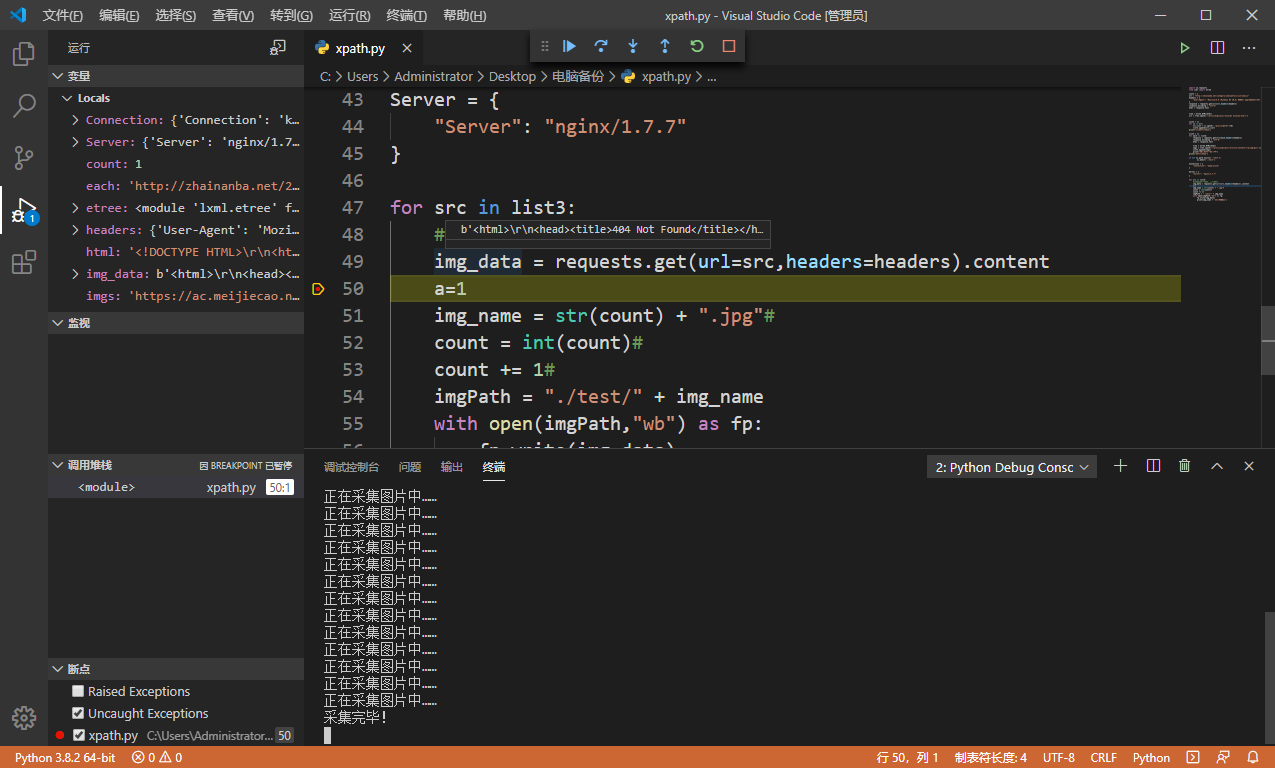

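I have already obtained the image URLs.

When I loop over them to save the files, requesting each image URL returns a 404.

This is the first time I have run into this.

I thought I had mistyped the code,

so I stepped through with a breakpoint and found that the request itself returns 404,

as shown in image 1.

Target site: http://zhainanba.net/category/zhainanfuli/jinrimeizi

Here is the code: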

```python
import os

import requests
from lxml import etree

count = 1
url = "http://zhainanba.net/category/zhainanfuli/jinrimeizi"
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36"
}

# Fetch the listing page and collect the link to each post
response = requests.get(url=url, headers=headers)
response.encoding = "utf-8"
html = response.text
tree = etree.HTML(html)
url = tree.xpath('//article[@class="excerpt excerpt-one"]')
list2 = []
for li in url:
    url_list = li.xpath('./p[1]/a/@href')[0]
    list2.append(url_list)
print("URL collection finished!")

# Visit each post and take the first image URL found in the article body
list3 = []
for each in list2:
    response = requests.get(url=each, headers=headers)
    response.encoding = "utf-8"
    html = response.text
    tree = etree.HTML(html)
    imgs = tree.xpath('//article[@class="article-content"]/p/img/@src')[0]
    list3.append(imgs)
    print("Collecting images...")
print("Collection finished!")

if not os.path.exists('./test'):
    os.mkdir('./test')

Connection = {
    "Connection": "keep-alive"
}
Server = {
    "Server": "nginx/1.7.7"
}

for src in list3:
    # Request the binary image data (this is the request that comes back as 404)
    img_data = requests.get(url=src, headers=headers).content
    img_name = str(count) + ".jpg"
    count = int(count)
    count += 1
    imgPath = "./test/" + img_name
    with open(imgPath, "wb") as fp:
        fp.write(img_data)
    print(img_name + " downloaded successfully!")
```

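When I reach an image from within the site, it loads normally, but as soon as I refresh the page I get a 404 straight away = =

Experts, I only have 7 coins in total, so 6 is the most I can give you; the last one went to tax. Please save me!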

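This post was last edited by Stubborn on 2020-4-24 00:06

What cute girls! I tested 10 images with no problems. It is very slow though, which won't do; to do this properly you would need a proxy pool and Scrapy.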

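One extra note on this part:

    for m in D:
        session.headers["Referer"] = data[0]

Whether it is better to keep updating the Referer in the session headers like this, or to build a fresh headers dict for each new request, still needs testing. I only crawled a single link here, so I did not test it.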
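The second option in that note, a fresh headers dict per request, can be sketched without touching the network. This is only an illustration, not something the answer tested: `with_referer`, `BASE_HEADERS`, and the two post URLs are hypothetical names made up for the example, and the User-Agent is shortened.

```python
BASE_HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64)",  # shortened for the example
}


def with_referer(base_headers, page_url):
    """Return a new headers dict whose Referer is page_url; the input is not modified."""
    headers = dict(base_headers)
    headers["Referer"] = page_url
    return headers


# Hypothetical post URLs, in the shape matched by the regex in the script
post_a = "http://zhainanba.net/1001.html"
post_b = "http://zhainanba.net/1002.html"

headers_a = with_referer(BASE_HEADERS, post_a)
headers_b = with_referer(BASE_HEADERS, post_b)

print(headers_a["Referer"])
print(headers_b["Referer"])
print("Referer" in BASE_HEADERS)
```

With requests, such a dict can be passed per call, for example `session.get(m, headers=headers_a)`. Headers given this way are merged over the session's own headers for that one request, so no Referer from an earlier post lingers on the session.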

```python
# -*- coding: utf-8 -*-
# !/usr/bin/python3
"""
@ version: ??
@ author: Alex
@ file: test
@datetime: 2020/4/23 - 23:30
@explain:
"""
import os
import re

import requests

session = requests.session()
domain = "http://zhainanba.net"

# Request headers for the listing pages
params = {
    'Accept': 'text/html, */*; q=0.01',
    'Accept-Encoding': 'gzip, deflate',
    'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',
    'Cache-Control': 'no-cache',
    'Connection': 'keep-alive',
    'Host': 'zhainanba.net',
    'Pragma': 'no-cache',
    'Referer': 'http://zhainanba.net/category/zhainanfuli/jinrimeizi',
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.113 Safari/537.36',
    'X-Requested-With': 'XMLHttpRequest',
}

# Collect the post URLs from the first four listing pages
data = []
for page in range(1, 5):
    url = f"http://zhainanba.net/category/zhainanfuli/jinrimeizi/page/{page}"
    # Send the dict as headers; params= would append it to the query string instead
    resp = session.get(url, headers=params)
    data += re.findall(r"http://zhainanba\.net/\d+\.html", resp.text)

# Browser User-Agent (not applied to the session in this script)
param = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.113 Safari/537.36',
}

# for urls in data:
# Only the first post is crawled here
resp = session.get(data[0])
D = re.findall(r"https://ac\.meijiecao\.net/ac/img/znb/meizitu/.*?\.jpg", resp.text)
for m in D:
    # Set the Referer to the post page before requesting the image
    session.headers["Referer"] = data[0]
    resp = session.get(m)
    # Use the last directory of the URL as the folder and the last part as the file name
    *_, path_, name = m.split("/")
    file_path = os.path.abspath(path_)
    img_path = os.path.join(file_path, name)
    os.makedirs(file_path, exist_ok=True)
    with open(img_path, "wb") as f:
        f.write(resp.content)
```
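Both scripts write whatever the server returns, so a 404 body can end up saved under a `.jpg` name. A possible guard, sketched here under assumptions, is to check the status code first. `local_path_for` and `save_if_ok` are hypothetical helpers, `example.jpg` is a made-up file name, and the sketch assumes the URL path has at least two segments, as the image URLs matched above do.

```python
import os
import tempfile
from urllib.parse import urlsplit


def local_path_for(img_url, root="."):
    """Map an image URL to <root>/<last directory>/<file name>."""
    parts = urlsplit(img_url).path.strip("/").split("/")
    *_, folder, name = parts  # assumes at least two path segments
    return os.path.join(root, folder, name)


def save_if_ok(status_code, content, img_url, root="."):
    """Write content to disk only for a 200 response; return the path, or None if skipped."""
    if status_code != 200:
        return None
    path = local_path_for(img_url, root)
    os.makedirs(os.path.dirname(path), exist_ok=True)
    with open(path, "wb") as f:
        f.write(content)
    return path


# Offline demonstration with fake bytes instead of a real response
demo_url = "https://ac.meijiecao.net/ac/img/znb/meizitu/example.jpg"  # hypothetical file name
with tempfile.TemporaryDirectory() as tmp:
    saved = save_if_ok(200, b"fake-image-bytes", demo_url, root=tmp)
    skipped = save_if_ok(404, b"not found", demo_url, root=tmp)
    print(os.path.relpath(saved, tmp))
    print(skipped)
```

In the loop above this would be called as `save_if_ok(resp.status_code, resp.content, m)`, so a failed request shows up as `None` instead of a broken image file.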


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
