
Reward: 40 鱼币 (forum coins)

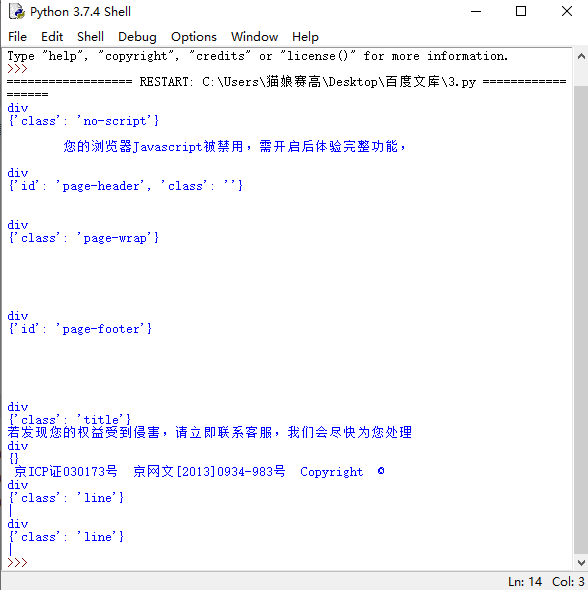

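Scraper output: "Your browser has JavaScript disabled; enable it to get the full experience" (您的浏览器Javascript被禁用,需开启后体验完整功能).

My browser does have JavaScript enabled, and scraping other sites works without any problem.

The cookies were captured on the spot with Chrome DevTools and saved in test.txt.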
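For reference, the cookie-loading step can be pulled out into a small function. This is a minimal sketch that assumes test.txt holds a single DevTools-style `Cookie` header value (`name1=value1; name2=value2`); the function name `parse_cookie_string` and the sample cookie names are hypothetical. It splits each pair only on the first `=`, since cookie values may themselves contain `=`, and it skips empty fragments such as the one left by a trailing `;`, which would make the original loop crash.

```python
from typing import Dict


def parse_cookie_string(raw: str) -> Dict[str, str]:
    """Turn a DevTools-style Cookie header ("a=1; b=2") into a dict for requests."""
    cookies: Dict[str, str] = {}
    for pair in raw.split(';'):
        pair = pair.strip()
        if not pair or '=' not in pair:
            # Skip empty fragments (e.g. from a trailing ';') and malformed entries
            continue
        name, value = pair.split('=', 1)  # split once: values may contain '='
        cookies[name.strip()] = value.strip()
    return cookies


if __name__ == '__main__':
    print(parse_cookie_string("sid=abc==; lang=zh;"))  # hypothetical cookies
```

The result can be passed straight to `requests.get(..., cookies=...)`.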

```python
import requests
from lxml import etree

# Get cookies (copied from Chrome DevTools: "name1=value1; name2=value2; ...")
cookies = {}
with open('test.txt', 'r', encoding='utf-8') as f:
    for pair in f.read().split(';'):
        pair = pair.strip()
        if not pair:  # skip empty fragments, e.g. from a trailing ';'
            continue
        name, value = pair.split('=', 1)
        cookies[name] = value

# Switch IP via proxies. requests matches proxy keys against the lowercase
# URL scheme, so the keys must be 'http'/'https'; uppercase keys are ignored.
proxies = {
    "http": "http://163.204.240.202:9999",
    "https": "http://110.243.13.45:9999",
}

headers = {
    'User-Agent': "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
                  "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3"
}


# Main function
def start_1():
    url_1 = "https://wenku.baidu.com/view/1b4035173a3567ec102de2bd960590c69ec3d807.html"
    response = requests.get(url_1, headers=headers, cookies=cookies,
                            proxies=proxies, timeout=10)
    html = etree.HTML(response.content.decode('utf-8'))
    links = html.xpath("//div")
    for index, element in enumerate(links):
        # Each item is an lxml Element, not a dict; .attrib is its dict-like attribute map.
        # Print page information for every other <div>
        if index % 2 == 0:
            print(element.tag)
            print(element.attrib)
            print(element.text)


if __name__ == '__main__':
    start_1()
```
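One concrete problem in the original script is the proxy dict. requests picks a proxy by looking up the request URL's scheme, and `urllib.parse` always reports that scheme in lowercase (`"https"`), so keys written as `"HTTP"`/`"HTTPS"` never match and the request goes out from the local IP instead. The sketch below models that lookup in simplified form (`proxy_for` is a hypothetical helper, not the requests API; the real selection also checks `scheme://host` and `all` keys) and normalizes the dict. Prepending `http://` assumes these are plain HTTP proxies.

```python
from typing import Dict, Optional
from urllib.parse import urlparse


def proxy_for(url: str, proxies: Dict[str, str]) -> Optional[str]:
    """Simplified model of proxy selection: look up the lowercase URL scheme."""
    return proxies.get(urlparse(url).scheme)


def normalize_proxies(proxies: Dict[str, str]) -> Dict[str, str]:
    """Lowercase the scheme keys and add 'http://' to bare host:port values."""
    normalized: Dict[str, str] = {}
    for scheme, address in proxies.items():
        if "://" not in address:
            # Assumption: plain HTTP proxies (SOCKS would need 'socks5://')
            address = "http://" + address
        normalized[scheme.lower()] = address
    return normalized


if __name__ == '__main__':
    raw = {"HTTP": "163.204.240.202:9999", "HTTPS": "110.243.13.45:9999"}
    url = "https://wenku.baidu.com/view/1b4035173a3567ec102de2bd960590c69ec3d807.html"
    print(proxy_for(url, raw))                     # no match with uppercase keys
    print(proxy_for(url, normalize_proxies(raw)))  # matches the 'https' entry
```

Even with the keys fixed, free proxies like these are often dead or slow, so it is worth confirming the outgoing IP separately.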

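My scraper is blocked when accessing Baidu Wenku, and I'd like to know why. I've set a proxy IP, headers, and cookies, so why is it still being rejected?

I'd appreciate a solution (working code would be even better).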
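A useful first diagnostic is to check whether the response is the "JavaScript disabled" notice rather than the document itself. `requests` only downloads the raw HTML and never executes JavaScript, so if the page builds its content with scripts (likely, given the message, but not confirmed here), the scraper receives the fallback text no matter which headers or cookies are sent. This minimal sketch assumes the notice contains the literal text reported in this post; `is_js_block_page` is a hypothetical helper name.

```python
# Marker text taken from the scraper output reported in this post (assumption:
# the blocked page always contains it). Matching is case-insensitive so that
# "Javascript" and "JavaScript" both count.
JS_BLOCK_MARKER = "javascript被禁用"


def is_js_block_page(html: str) -> bool:
    """Return True if the HTML looks like the 'JavaScript disabled' notice."""
    return JS_BLOCK_MARKER in html.lower()


if __name__ == '__main__':
    sample = "<p>您的浏览器Javascript被禁用,需开启后体验完整功能</p>"
    print(is_js_block_page(sample))
```

In the scraper this would run on `response.text` before parsing; a `True` result means the content has to come from somewhere that runs JavaScript (for example a real browser driven by automation), not from adding more headers.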


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
