
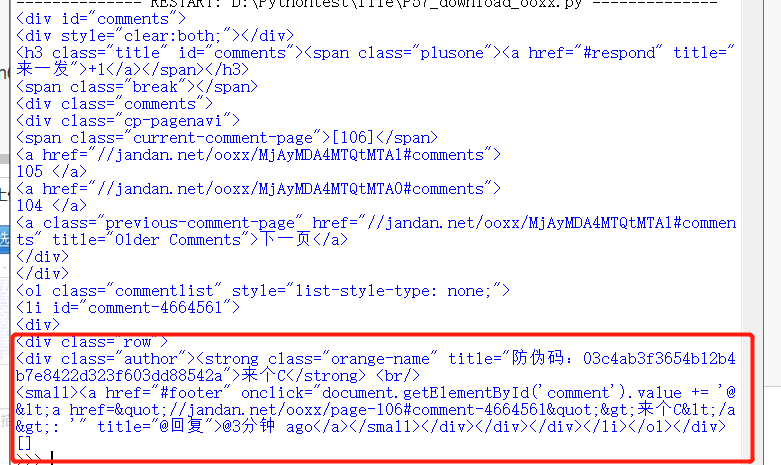

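Jandan (煎蛋网) protects its images, so my scraper only gets back an anti-counterfeiting code instead of the pictures. What kind of anti-scraping measure is this, and is there a way around it? Not being able to finish this lesson cleanly is really bothering my perfectionist side. The code and its output are as follows: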

    from urllib import request
    from bs4 import BeautifulSoup
    import re
    import base64
    import os

    def main():
        # Create the output folder (no error if it already exists) and work inside it
        os.makedirs('OOXX', exist_ok=True)
        os.chdir('OOXX')

        url = 'http://jandan.net/ooxx'
        # Fetch the landing page
        headers = {}
        headers['User-Agent'] = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:79.0) Gecko/20100101 Firefox/79.0'
        req = request.Request(url, headers=headers)
        response = request.urlopen(req).read().decode('utf-8')
        # Parse the page with BeautifulSoup
        soup = BeautifulSoup(response, 'html.parser')
        links_list = soup.find(attrs={'class': 'cp-pagenavi'})
        # Find the starting page link; findlinks returns a dict {'page': ..., 'link': ...}
        pages_list = findlinks(links_list)
        page = int(pages_list['page'])
        page_link = 'http:' + pages_list['link']
        stop_page = page - 5
        # Scrape the images on the current page
        comment_list = soup.find(attrs={'id': 'comments'})
        print(comment_list)
        img_list = comment_list.find_all('img')
        print(img_list)
        # Download each image
        for item in img_list:
            img_url = 'http:' + item['src']
            print(img_url)
            filename = item['src'].split('/')[-1]
            headers2 = {}
            headers2['User-Agent'] = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:79.0) Gecko/20100101 Firefox/79.0'
            # Fixed: the original passed `headers` here and never used `headers2`
            req2 = request.Request(img_url, headers=headers2)
            response2 = request.urlopen(req2).read()
            with open(filename, 'wb') as f:
                f.write(response2)

        while True:
            # Scrape the images on this page
            find_img(page_link)
            # Rewrite the link so that it points to the next page to scrape
            page_link = handle_link(page_link)

            page -= 1
            if page == stop_page:
                break

    def findlinks(links_list):
        # The first <a> in the pager carries the page number and its link
        target = links_list.find('a')
        page = target.text
        link = target['href']
        res = {'page': page, 'link': link}
        return res

    def handle_link(page_link):
        # The page id in the URL is base64 text of the form 'date-num'
        page_num = re.search(r'jandan\.net/ooxx/(.+?)#', page_link).group(1)
        page_num = str(base64.b64decode(page_num), 'utf-8')
        num = page_num.split('-')[1]
        date = page_num.split('-')[0]
        # Step back one page and re-encode the id
        num = str(int(num) - 1)
        page_num = '-'.join((date, num))
        page_num = str(base64.b64encode(page_num.encode('utf-8')), 'utf-8')
        link = 'http://jandan.net/ooxx/' + page_num + '#comments'
        return link

    def find_img(page_link):
        # Fetch the content of the given page
        headers = {}
        headers['User-Agent'] = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:79.0) Gecko/20100101 Firefox/79.0'
        req = request.Request(page_link, headers=headers)
        response = request.urlopen(req).read().decode('utf-8')
        # Use BeautifulSoup to collect the images in the comment list
        soup = BeautifulSoup(response, 'html.parser')
        comment_list = soup.find(attrs={'class': 'commentlist'})
        img_list = comment_list.find_all('img')
        # Download each image
        for item in img_list:
            img_url = 'http:' + item['src']
            filename = item['src'].split('/')[-1]
            headers2 = {}
            headers2['User-Agent'] = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:79.0) Gecko/20100101 Firefox/79.0'
            # Fixed: the original passed `headers` here and never used `headers2`
            req2 = request.Request(img_url, headers=headers2)
            response2 = request.urlopen(req2).read()
            with open(filename, 'wb') as f:
                f.write(response2)

    if __name__ == '__main__':
        main()
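The least obvious step in the listing is how `handle_link` moves from one page to the next, so here is a minimal standalone sketch of just that step. It assumes, as the original code does, that the page id in the URL is base64 text of the form `date-num`. The helper names `decode_page_token`, `encode_page_token` and `previous_page_token`, and the sample values in the usage note, are hypothetical and are not taken from the site.

```python
import base64
from typing import Tuple


def decode_page_token(token: str) -> Tuple[str, int]:
    """Turn a base64 page id into (date, page number).

    Assumes the decoded text looks like 'date-num', as handle_link does.
    """
    text = base64.b64decode(token).decode('utf-8')
    date, num = text.split('-', 1)
    return date, int(num)


def encode_page_token(date: str, num: int) -> str:
    """Inverse of decode_page_token: build the base64 page id."""
    raw = '{}-{}'.format(date, num).encode('utf-8')
    return base64.b64encode(raw).decode('ascii')


def previous_page_token(token: str) -> str:
    """Return the id of the page numbered one lower than `token`."""
    date, num = decode_page_token(token)
    return encode_page_token(date, num - 1)
```

With a made-up id built from `encode_page_token('20200812', 120)`, calling `previous_page_token` on it and decoding the result gives `('20200812', 119)`. Working on the id alone, rather than on the full URL, means the regular expression in `handle_link` is only needed once to pull the id out.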


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
