
import scrapy

from selenium import webdriver

from wangyiPro.items import WangyiproItem

class WangyiSpider(scrapy.Spider):

name = 'wangyi'

# allowed_domains = ['www.xxx.com']

start_urls = ['https://news.163.com/']

models_urls = []

#解析五大板块对应详情页的URL

#实例化一个浏览器对象

def __init__(self):

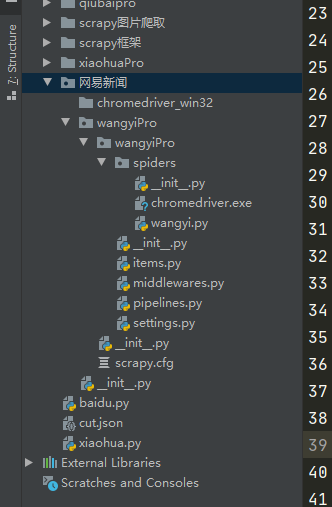

self.bro = webdriver.Chrome(executable_path='chromedriver.exe')

def parse(self, response):

li_list = response.xpath('//*[@id="index2016_wrap"]/div[1]/div[2]/div[2]/div[2]/div[2]/div/ul')

alist = [3,4,6,7,8]

for index in alist:

model_url = li_list[index].xpath('./a/@href').extract.first()

self.models_urls.append(model_url)

#依次对每一个板块对应的页面进行请求

for url in self.model_url:#对每一个板块的url进行请求发送

yield scrapy.Request(url,callback=self.models_urls)

#每一个板块对应的新闻标题相关的内容是动态加载出来的。

def parse_model(self,response):#解析每一个板块页面对应新闻的标题和新闻详情页的url

div_list = response.xpath('/html/body/div[1]/div[3]/div[4]/div[1]/div/div/ul/li/div/div')

for div in div_list:

title = div.xpath('./div/div[1]/h3/a/text()').extract_first()

new_detail_url=div.xpath('./div/div[1]/h3/a/@href').extract_first()

item = WangyiproItem()

item['title']=title

#对新闻详情页发起请求

yield scrapy.Request(url=new_detail_url,callback=self.parse_detail,meta={'item':item})

def parse_detail(self,response):

content = response.xpath('//*[@id="content"]/div[2]//text()').extract()

content=''.join(content)

item = response.meta['item']

item['content']=content

yield item

def close(self,spider):

self.bro.quit()

Running the spider fails with this error:

selenium.common.exceptions.WebDriverException: Message: 'chromedriver.exe' executable needs to be in PATH. Please see https://sites.google.com/a/chromium.org/chromedriver/home
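The message means Selenium could not start `chromedriver.exe`: a bare file name is looked up on PATH (on Windows, also a few standard locations such as the current working directory), not next to the spider file. One way to narrow this down is to resolve the driver's absolute path before starting the browser. Below is a minimal stdlib-only sketch, not part of Selenium: the helper name `find_chromedriver` and its `candidate_dirs` fallback are hypothetical. It checks PATH first and then any directories you list explicitly.

```python
import os
import shutil
from typing import Iterable, Optional


def find_chromedriver(candidate_dirs: Iterable[str] = (), name: str = "chromedriver") -> Optional[str]:
    """Return an absolute path to the ChromeDriver executable, or None if not found.

    Hypothetical helper: PATH is searched first, then each directory in candidate_dirs.
    """
    # shutil.which searches PATH (and honours PATHEXT on Windows, so "chromedriver"
    # also matches "chromedriver.exe").
    on_path = shutil.which(name)
    if on_path:
        return os.path.abspath(on_path)

    # Fall back to explicit directories, e.g. the folder containing the spider file.
    for directory in candidate_dirs:
        for filename in (name, name + ".exe"):
            path = os.path.join(directory, filename)
            if os.path.isfile(path):
                return os.path.abspath(path)
    return None
```

If it returns a path, that path can be passed to `Service(...)` in Selenium 4 or to `executable_path=` in Selenium 3; if it returns `None`, the driver is neither on PATH nor in the listed directories. Selenium 4.6 and later also ship Selenium Manager, which can download a matching driver when none is found, so upgrading may remove the need for an explicit path.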


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
