This post was last edited by 猪猪虾 on 2020-12-26 15:55

‘!!!!!!!!!!!

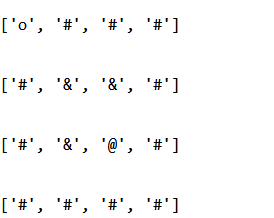

程序虽然长,但是都不用看,看create_environment(第一个定义的函数即可,只有这里是显示用的)

我想打印的列表就是env_list ,每次新生成的列表,覆盖掉原列表显示

’
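One possible way to get that overwrite effect is sketched below. It is not part of the original program: `render_in_place` is a hypothetical helper, and it assumes the script runs in a terminal that honours ANSI escape codes. IDE consoles that do not interpret these codes would show them as raw characters instead. The idea is that `\r` only returns to the start of the current line, so a multi-line grid needs the cursor moved back up by the number of rows before redrawing.

```python
import sys
import time


def render_in_place(grid, first_frame, out=sys.stdout):
    """Draw `grid` (a list of rows) on top of the previously drawn frame.

    Hypothetical helper; assumes an ANSI-capable terminal.
    """
    if not first_frame:
        # ESC[<n>A moves the cursor up n lines, back to the top of the old frame
        out.write("\x1b[{}A".format(len(grid)))
    for row in grid:
        # ESC[2K erases the current line before the new row is written
        out.write("\x1b[2K" + " ".join(row) + "\n")
    out.flush()


if __name__ == "__main__":
    env_list = [['#', '#', '#', '#'],
                ['#', '&', '&', '#'],
                ['#', '&', '@', '#'],
                ['#', '#', '#', '#']]
    # A made-up path, only to show three successive frames
    for n, (x, y) in enumerate([(0, 0), (0, 1), (0, 2)]):
        frame = [row[:] for row in env_list]  # copy so the template stays clean
        frame[x][y] = 'o'
        render_in_place(frame, first_frame=(n == 0))
        time.sleep(0.3)
```

In the posted program, a call like this would stand in for the `for i in range(4): print(env_list[i])` loop, with `first_frame` true only for the first draw of the run.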

- import numpy as np

- import pandas as pd

- import time

- def create_environment(state_x, state_y, episode, step_counter, table):
-     '''
-     Draw a simple view of the game board and show where the agent is.
-     '''
-     env_list = [['#', '#', '#', '#'],
-                 ['#', '&', '&', '#'],
-                 ['#', '&', '@', '#'],
-                 ['#', '#', '#', '#']]
-     if state_x == 1000:
-         # Episode finished: show a one-line summary, then blank it out
-         interraction = 'episode %d: total steps: %d' % (episode + 1, step_counter)
-         print('\r{}'.format(interraction), end='')
-         time.sleep(2)
-         print('\r' + ' ' * len(interraction) + '\r', end='')
-     else:
-         env_list[state_x][state_y] = 'o'  # mark the agent's current cell
-         #interraction = ''.join(env_list)
-         #print('\r{}'.format(interraction),end = '')
-         print("***************new way******************")
-         print("\n")
-         for i in range(4):
-             print(env_list[i])
-         print("\n")
-         time.sleep(Fresh_time)

- def get_evn_feedback(state_x, state_y, A):
-     '''
-     Return the next state and the reward the environment gives for taking action A.
-     '''
-     # (row, column) offset per action, using the same convention as get_neibor_q:
-     # up = row - 1, down = row + 1, left = column - 1, right = column + 1.
-     # Any other value of A is treated as 'down'.
-     moves = {'up': (-1, 0), 'left': (0, -1), 'right': (0, 1)}
-     dx, dy = moves.get(str(A), (1, 0))
-     # Check whether the current position is outside the board
-     if state_x >= N_state_x or state_x < 0 or \
-        state_y >= N_state_y or state_y < 0:
-         R = -1
-         if state_x >= N_state_x:
-             state_x = 0
-         if state_x < 0:
-             state_x = N_state_x - 1
-         if state_y >= N_state_y:
-             state_y = 0
-         if state_y < 0:
-             state_y = N_state_y - 1
-     # Standing on a trap: penalty, then move
-     elif (state_x, state_y) in [(1, 1), (1, 2), (2, 1)]:
-         R = -1
-         state_x += dx
-         state_y += dy
-     # Goal: 1000 marks the terminal state
-     elif (state_x, state_y) == (2, 2):
-         state_x = 1000
-         state_y = 1000
-         R = 1
-     # Any other cell: no reward, and moves wrap around the board edges
-     else:
-         R = 0
-         state_x = (state_x + dx) % N_state_x
-         state_y = (state_y + dy) % N_state_y
-     return state_x, state_y, R

- def build_q_table(N_state_x, N_state_y):
-     table = np.zeros((N_state_x, N_state_y))  # every entry of the table starts at 0
-     return table
- #print(build_q_table(N_state,Action))

- def choose_action(state_x, state_y, q_table):
-     '''
-     state = (state_x, state_y)
-     The next action is chosen from the current state and the existing q_table.
-     There are two cases:
-     1. 90% of the time, pick the best action for the current state according to q_table
-     2. 10% of the time, pick an action at random
-     '''
-     score_of_4_dir, score_of_4_direction = get_neibor_q(state_x, state_y, q_table)
-
-     # A random number above 0.9 (between 0.9 and 1) occurs 10% of the time; the next action is then random.
-     # The next action is also chosen at random when all scores are 0.
-     sign_all_0 = True
-     # Check whether the up/down/left/right scores are all 0
-     for i in range(len(score_of_4_dir)):
-         if score_of_4_dir[i] != 0 and score_of_4_dir[i] != -100:
-             sign_all_0 = False
-             break
-
-     if np.random.uniform() > Greedy_plicy or sign_all_0 == True:
-         # A score of -100 means that direction is off the board and cannot be taken,
-         # so those directions are excluded before choosing at random
-         action_choice = Action.copy()
-         for i in range(4):  # four directions
-             if score_of_4_dir[i] == -100:
-                 action_choice[i] = 'prohibit'
-
-         # Keep drawing until the pick is not a direction marked 'prohibit'
-         action_name = ''
-         while action_name == 'prohibit' or action_name == '':
-             action_name = np.random.choice(action_choice)
-
-     else:
-         # Prohibited directions have a score of -100, so they can never be the maximum
-         action_name = Action[score_of_4_dir.index(max(score_of_4_dir))]  # index of the largest score
-     return action_name

- def get_neibor_q(state_x, state_y, q_table):
-     '''
-     Get the q values of the four neighbouring states (up, down, left, right) and their coordinates.
-     '''
-     # The current cell may be on an edge, so not every direction is available
-     # Scores are stored in the order: up, down, left, right
-     position_of_4_direction = [[state_x - 1, state_y],
-                                [state_x + 1, state_y],
-                                [state_x, state_y - 1],
-                                [state_x, state_y + 1]]
-     score_of_4_dir = []
-     for i in range(len(position_of_4_direction)):
-         if position_of_4_direction[i][0] < 0 or position_of_4_direction[i][0] >= N_state_x \
-            or position_of_4_direction[i][1] < 0 or position_of_4_direction[i][1] >= N_state_y:
-             position_of_4_direction[i][0] = -100
-             position_of_4_direction[i][1] = -100  # this value marks the direction as unavailable
-             score_of_4_dir.append(-100)
-         else:
-             # print("position_of_4_direction[{}][0]={} ".format(i,position_of_4_direction[i][0]))
-             # print("\n")
-             # print("position_of_4_direction[{}][0]={}".format(i,position_of_4_direction[i][1]))
-             # print("\n")
-             score_of_4_dir.append(q_table[position_of_4_direction[i][0], position_of_4_direction[i][1]])
-     return score_of_4_dir, position_of_4_direction

-

-

- def reforcement_learning():
-     table = build_q_table(N_state_x, N_state_y)
-     for episode in range(max_episodes):
-         state_x, state_y = 0, 0
-
-         step_counter = 0
-         create_environment(state_x, state_y, episode, step_counter, table)
-
-         while state_x != 1000:
-             A = choose_action(state_x, state_y, table)
-             state_x_, state_y_, R = get_evn_feedback(state_x, state_y, A)
-             q_predict = table[state_x, state_y]
-
-             if state_x_ != 1000:
-                 score_of_4_dir, position_of_4_direction = get_neibor_q(state_x, state_y, table)
-                 reality = R + discount_factor * max(score_of_4_dir)
-             else:
-                 # Terminal step: there is no next state, so no discounted term is added
-                 reality = R
-
-             # Update the current cell while state_x, state_y still index into the 4x4 table;
-             # the terminal marker 1000 is only assigned on the next line
-             table[state_x, state_y] = table[state_x, state_y] + Learning_rate * (reality - q_predict)
-             state_x, state_y = state_x_, state_y_
-
-             create_environment(state_x, state_y, episode, step_counter + 1, table)
-             step_counter += 1
-     return table

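- if __name__ == "__main__":
-     N_state_x = 4          # number of states along x (rows of the board)
-     N_state_y = 4          # number of states along y (columns of the board)
-     Action = ['up', 'down', 'left', 'right']
-     Greedy_plicy = 0.9     # fraction of steps that take the best action; the rest are random
-     Learning_rate = 0.1    # learning rate
-     discount_factor = 0.9  # discount applied to future rewards
-     max_episodes = 50      # stop after 50 episodes
-     Fresh_time = 0.3       # show one step every 0.3 seconds
-     np.random.seed(2)
-     q_table = reforcement_learning()
-     print(q_table)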
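The two ideas the program relies on, the 90%/10% action choice described in the `choose_action` docstring and the table update inside the training loop, can be looked at in isolation. The sketch below is a simplified stand-alone illustration, not the poster's code: `epsilon_greedy` and `td_update` are hypothetical names, the standard-library `random` module stands in for NumPy, and `best_next` stands for the largest neighbour score that the loop passes in.

```python
import random

ACTIONS = ['up', 'down', 'left', 'right']  # same order as the post's Action list
BLOCKED = -100                             # marker the post uses for off-board neighbours


def epsilon_greedy(scores, greedy_prob, rng):
    """Pick an action from the four neighbour scores.

    With probability `greedy_prob` take the best-scoring direction; otherwise,
    or when every available score is still 0, pick uniformly among the
    directions that are not blocked.
    """
    allowed = [a for a, s in zip(ACTIONS, scores) if s != BLOCKED]
    nothing_learned = all(s in (0, BLOCKED) for s in scores)
    if rng.random() > greedy_prob or nothing_learned:
        return rng.choice(allowed)
    return ACTIONS[scores.index(max(scores))]


def td_update(old_value, reward, best_next, learning_rate, discount):
    """Move old_value a fraction of the way toward reward + discount * best_next."""
    target = reward + discount * best_next
    return old_value + learning_rate * (target - old_value)


if __name__ == "__main__":
    rng = random.Random(0)
    # Top-left corner: 'up' and 'left' are off the board
    print(epsilon_greedy([BLOCKED, 0.0, BLOCKED, 0.5], 0.9, rng))
    # Terminal step with reward 1: 0 + 0.1 * (1 - 0)
    print(td_update(0.0, 1.0, 0.0, 0.1, 0.9))
```

Filtering the blocked directions before drawing avoids the retry loop and gives the same distribution over the allowed actions.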


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
