
- import tensorflow as tf

- from tensorflow.examples.tutorials.mnist import input_data

- import numpy as np

- from PIL import Image

- # Algorithm hyperparameters

- batch_size=100

- training_epochs=10

- learning_rate_init=0.01

- display_step=100

- # Network parameters

- n_input=784

- n_classes=10

- # Return a weight variable of the given shape

- def WeightsVariable(shape,name_str,stddev=0.1):

- """

- :param shape: shape of the weight tensor

- :param name_str: variable name

- :param stddev: standard deviation of the normal distribution

- :return:

- """

- initial=tf.random_normal(shape=shape,stddev=stddev,dtype=tf.float32)

- return tf.Variable(initial_value=initial,dtype=tf.float32,name=name_str)

- # Return a bias variable of the given shape

- def BiasesVariable(shape,name_str,stddev=0.1):

- """

- :param shape: shape of the bias, e.g. [n]

- :param name_str: variable name

- :param stddev: standard deviation of the normal distribution

- :return:

- """

- initial=tf.random_normal(shape=shape,stddev=stddev,dtype=tf.float32)

- return tf.Variable(initial_value=initial,dtype=tf.float32,name=name_str)

- # 2-D convolution layer wrapper (conv2d + bias)

- def Conv2d(X,w,b,stride=1,padding="SAME"):

- """

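- :param X: input feature map, shape [batch, height, width, channels]

- :param w: filter (weight parameters)

- :param b: bias parameters

- :param stride: sliding stride (default 1)

- :param padding: padding mode, "SAME" or "VALID"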

- :return:

- """

- with tf.name_scope("Conv2d"):

- y=tf.nn.conv2d(X,w,strides=[1,stride,stride,1],padding=padding)

- y=tf.nn.bias_add(y,b)

- return y

- # Nonlinear activation layer wrapper

- def Activation(x,activation=tf.nn.relu,name="relu"):

- """

- :param x: input feature map

- :param activation: activation function to apply (default tf.nn.relu)

- :param name: name of the name scope

- :return:

- """

- with tf.name_scope(name):

- y=activation(x)

- return y

- # Max pooling layer wrapper

- def Pool2d(x,pool=tf.nn.max_pool,k=2,stride=2):

- return pool(x,ksize=[1,k,k,1],strides=[1,stride,stride,1],padding="VALID")

- # Fully connected layer wrapper

- def FullyConnect(x,w,b,activate=tf.identity,act_name="identity"):

- with tf.name_scope("Wx_b"):

- y=tf.add(tf.matmul(x,w),b)

- # No softmax here: tf.nn.softmax_cross_entropy_with_logits applies softmax itself, so this layer returns raw logits

- with tf.name_scope(act_name):

- y=activate(y)

- return y

- # Generic evaluation function: computes the model's loss and accuracy on a given dataset

- def EvaluateMode10nDataset(sess,images,labels):

- n_samples=images.shape[0] # number of samples passed in

- per_batch_size=batch_size

- loss=0

- acc=0

- if(n_samples<=per_batch_size):

- batch_count=1

- loss,acc=sess.run([cross_entropy_loss,accuracy],

- feed_dict={X_origin:images,

- Y_true:labels,

- learning_rate:learning_rate_init})

- else:

- batch_count=int(n_samples/per_batch_size)

- batch_start=0

- for idx in range(batch_count):

- batch_loss,batch_acc=sess.run([cross_entropy_loss,accuracy],

- feed_dict={X_origin:images[batch_start:batch_start+per_batch_size,:],

- Y_true:labels[batch_start:batch_start+per_batch_size,:],

- learning_rate:learning_rate_init})

- batch_start+=per_batch_size

- # Accumulate the loss and accuracy over all batches

- loss+=batch_loss

- acc+=batch_acc

- return loss/batch_count,acc/batch_count

- '''Build the computation graph'''

- with tf.Graph().as_default():

- # Input layer: 28*28*1

- with tf.name_scope("Input"):

- X_origin=tf.placeholder(tf.float32,shape=[None,n_input],name="X_origin")

- Y_true=tf.placeholder(tf.float32,shape=[None,n_classes],name="Y_true")

- X_image=tf.reshape(X_origin,[-1,28,28,1],name="X_image")

- # Forward inference

- with tf.name_scope("Inference"):

- # First convolution layer: 24*24*16

- with tf.name_scope("Conv2d_1"):

- weight_1=WeightsVariable(shape=[5,5,1,16],name_str="weight_1")

- bias_1=BiasesVariable(shape=[16],name_str="bias_1")

- conv1_out=Conv2d(X_image,weight_1,bias_1,stride=1,padding="VALID")

- # Nonlinear activation layer

- with tf.name_scope("Active"):

- activation_out=Activation(x=conv1_out,activation=tf.nn.relu,name="relu")

- # Pooling layer (max pooling by default): 12*12*16

- with tf.name_scope("Pool2d_1"):

- pool1_out=Pool2d(x=activation_out,pool=tf.nn.max_pool,k=2,stride=2)

- # Flatten the 2-D feature maps into a 1-D vector of length 12*12*16 = 2304

- with tf.name_scope("FeatsReshape"):

- features=tf.reshape(pool1_out,[-1,12*12*16])

- # Fully connected layer

- with tf.name_scope("FC_linear1"):

- weight_fc=WeightsVariable(shape=[12*12*16,n_classes],name_str="weight_fc")

- bias_fc=BiasesVariable(shape=[n_classes],name_str="bias_fc")

- Ypre_logits=FullyConnect(features,weight_fc,bias_fc,activate=tf.identity,act_name="identity")

- # Loss layer

- with tf.name_scope("Loss"):

- cross_entropy_loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(

- labels=Y_true, logits=Ypre_logits

- ))

- # Training (optimization) layer

- with tf.name_scope("Train"):

- global_step = tf.Variable(0, name='global_step', trainable=False)

- learning_rate=tf.placeholder(tf.float32)

- optimizer=tf.train.AdamOptimizer(learning_rate=learning_rate)

- trainer=optimizer.minimize(cross_entropy_loss,global_step=global_step)

- # Model evaluation layer

- with tf.name_scope("Evaluate"):

- correct_pred=tf.equal(tf.argmax(Ypre_logits,1),tf.argmax(Y_true,1))

- accuracy=tf.reduce_mean(tf.cast(correct_pred,tf.float32))

- # Op that initializes all variables

- init=tf.global_variables_initializer()

- print("Writing the computation graph to a log event file; view it in TensorBoard")

- writer=tf.summary.FileWriter("logs/",graph=tf.get_default_graph())

- writer.close()

- # Load the MNIST dataset

- mnist=input_data.read_data_sets("MNIST_data/",one_hot=True)

- '''Start the session'''

- with tf.Session() as sess:

- sess.run(init) # initialize the variables

- total_batches=int(mnist.train.num_examples/batch_size)

- print("Samples per batch:",batch_size)

- print("Total number of batches:",total_batches)

- print("Total training samples:",mnist.train.num_examples)

- # Save and restore the network

- saver=tf.train.Saver()

- checkpoint=tf.train.get_checkpoint_state("saver_network")

- if checkpoint and checkpoint.model_checkpoint_path:

- saver.restore(sess,checkpoint.model_checkpoint_path)

- print("Successfully loaded:", checkpoint.model_checkpoint_path)

- else:

- print("Could not find old network weights")

- training_step=0 # number of training steps taken so far

- # Train for the given number of epochs; each epoch passes over all training samples once

- for epoch in range(training_epochs):

- # Run every batch of the epoch

- for batch_idx in range(total_batches):

- # Fetch the next batch

- batch_x, batch_y = mnist.train.next_batch(batch_size)

- # Run the training op

- sess.run(trainer,feed_dict={

- X_origin:batch_x,

- Y_true:batch_y,

- learning_rate:learning_rate_init,

- })

- # training_step increases by 1 each time the training op runs

- training_step=training_step+1

- # Every display_step steps, compute the current model's loss and accuracy

- if training_step % display_step == 0:

- start_idx = max(0, (batch_idx - display_step) * batch_size)

- end_idx = batch_idx * batch_size

- train_loss, train_acc = EvaluateMode10nDataset(sess,

- mnist.train.images[start_idx:end_idx, :],

- mnist.train.labels[start_idx:end_idx, :])

- print("Training Step:" + str(training_step) +

- ",Training_Loss={:.6f}".format(train_loss) +

- ",Training_accuracy={:.5f}".format(train_acc))

- # Compute the current model's loss and accuracy on the validation set

- validation_loss, validation_acc = EvaluateMode10nDataset(sess,

- mnist.validation.images,

- mnist.validation.labels)

- print("Training Step:" + str(training_step) +

- ",Validation_Loss={:.6f}".format(validation_loss) +

- ",Validation_accuracy={:.5f}".format(validation_acc))

- if training_step%display_step==0:

- saver.save(sess,"./saver_network/check_mnist",global_step=global_step)

- print("Training finished!")
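The layer sizes noted in the comments (24*24*16, 12*12*16, 2304) follow from the "VALID" padding used for both the convolution and the pooling layer. Here is a minimal sketch of that arithmetic in plain Python; the helper name `valid_output_size` is made up for illustration and is not part of TensorFlow.

```python
def valid_output_size(size, kernel, stride):
    """Spatial output size of a conv/pool layer with "VALID" padding.

    Hypothetical helper for illustration only (not a TensorFlow function).
    """
    return (size - kernel) // stride + 1


# Values taken from the listing: 28x28 input, 5x5 convolution with stride 1,
# 2x2 max pooling with stride 2, and 16 output channels.
conv_size = valid_output_size(28, kernel=5, stride=1)         # 24
pool_size = valid_output_size(conv_size, kernel=2, stride=2)  # 12
flat_features = pool_size * pool_size * 16                    # 2304

print(conv_size, pool_size, flat_features)
```

If the kernel size, stride, or channel count changes, the `12*12*16` in the reshape and in the shape of `weight_fc` has to change with it.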

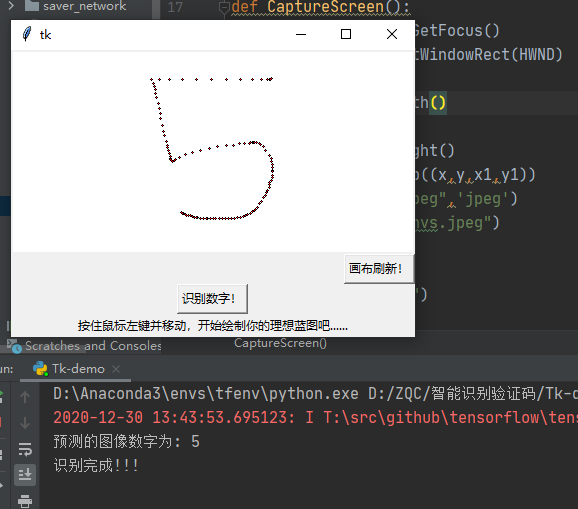
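A note on the loss: `tf.nn.softmax_cross_entropy_with_logits` applies softmax internally, so whatever is passed as `logits` should be unnormalized scores. The sketch below, in plain Python with made-up scores, shows what happens when values that have already been through softmax are passed through it a second time. The function names are illustrative only.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, true_index):
    """Cross-entropy for a single sample with a one-hot label."""
    return -math.log(probs[true_index])


def min_loss_after_double_softmax(n_classes):
    """Lowest reachable loss when softmax is applied twice.

    Best case: the first softmax already outputs a perfect one-hot vector.
    """
    return math.log(1.0 + (n_classes - 1) / math.e)


logits = [8.0, 1.0, -2.0]  # made-up scores; class 0 is the true class
true_index = 0

once = softmax(logits)   # what the loss op computes internally
twice = softmax(once)    # what it computes if fed probabilities instead

loss_once = cross_entropy(once, true_index)
loss_twice = cross_entropy(twice, true_index)

print(loss_once, loss_twice, min_loss_after_double_softmax(10))
```

The second softmax flattens the distribution, so even a confident, correct prediction keeps a large loss. With 10 classes the loss cannot drop below roughly 1.46. The predicted class from `argmax` is the same either way, which is why accuracy can still look reasonable.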

- img = Image.open("5.png")

- img = img.convert('L') # convert to grayscale

- img = img.resize((28, 28)) # make sure the image is 28x28 so it flattens to 784 values

- img = ((255 - np.array(img, dtype=np.uint8)) / 255.0).reshape(1, 784)

- logits=sess.run(Ypre_logits,feed_dict={

- X_origin:img

- })

- print("Predicted digit:",np.argmax(logits))

- if __name__ == '__main__':

- pass
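The `(255 - pixel) / 255.0` step in the prediction code is there because MNIST digits are light strokes on a dark background with values in [0, 1], while a hand-drawn test image is usually dark ink on white. A small plain-Python sketch of the same transform follows; the function name `to_mnist_input` and the 2x2 sample are hypothetical, and the listing does the equivalent with NumPy arrays.

```python
def to_mnist_input(gray_rows):
    """Flatten rows of 0..255 grayscale values, invert them, scale to [0, 1].

    Hypothetical helper for illustration; mirrors (255 - pixel) / 255.0.
    """
    flat = [pixel for row in gray_rows for pixel in row]
    return [(255 - pixel) / 255.0 for pixel in flat]


# Tiny made-up "image": 255 is white paper, 0 is black ink.
tiny = [
    [255, 0],
    [128, 255],
]
vector = to_mnist_input(tiny)
print(vector)
```

White paper maps to 0.0 and black ink to 1.0, which matches how the training images are encoded. If the test image is already white-on-black, the inversion should be skipped.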


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
