Bounty: 5 鱼币 (FishC coins)

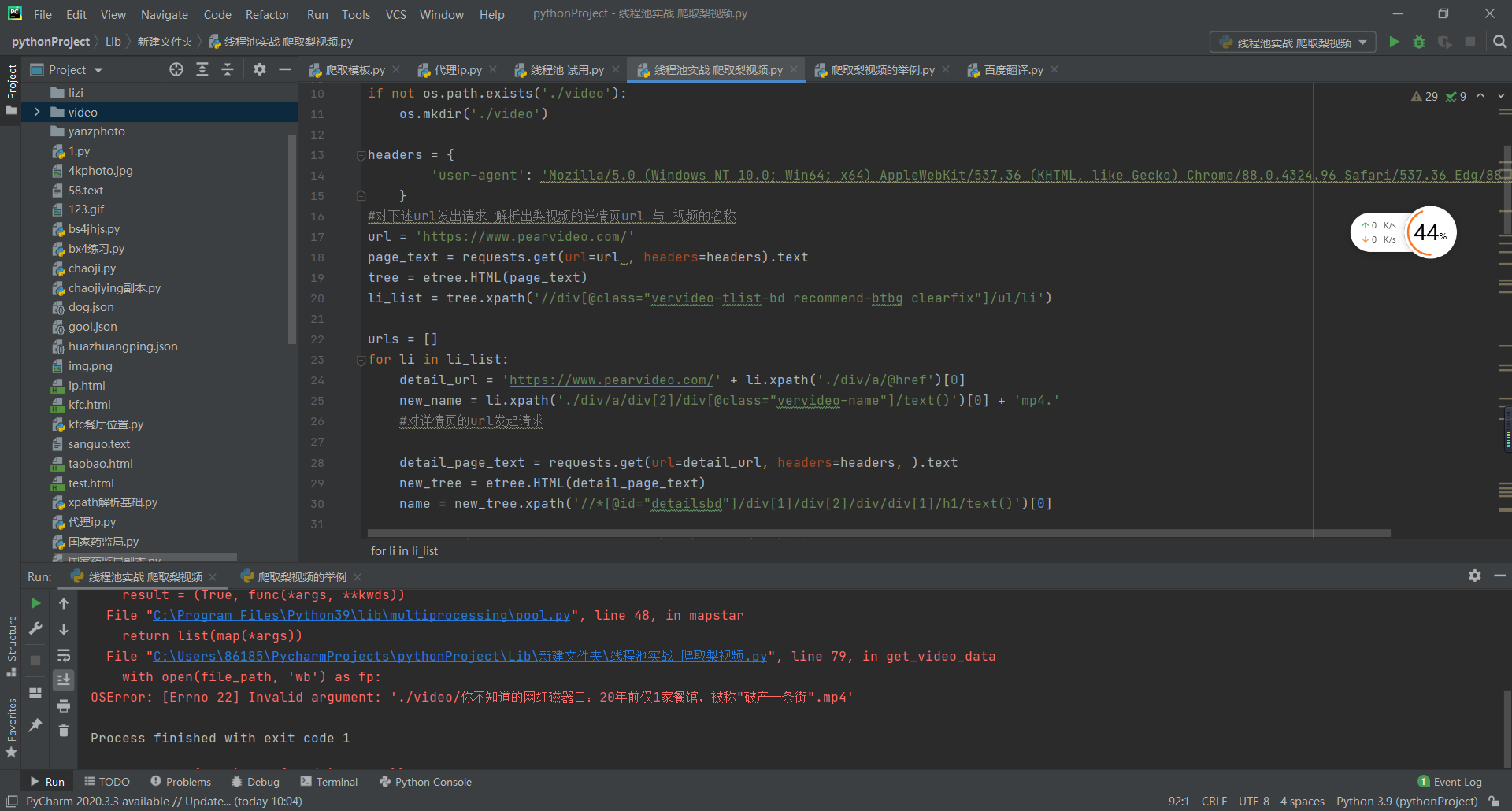

import requests

from lxml import etree

from multiprocessing.dummy import Pool

import random

import os

if not os.path.exists('./video'):

os.mkdir('./video')

headers = {

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36 Edg/88.0.705.50'

}

#对下述url发出请求 解析出梨视频的详情页url 与 视频的名称

url = 'https://www.pearvideo.com/'

page_text = requests.get(url=url , headers=headers).text

tree = etree.HTML(page_text)

li_list = tree.xpath('//div[@class="vervideo-tlist-bd recommend-btbg clearfix"]/ul/li')

urls = []

for li in li_list:

detail_url = 'https://www.pearvideo.com/' + li.xpath('./div/a/@href')[0]

new_name = li.xpath('./div/a/div[2]/div[@class="vervideo-name"]/text()')[0] + 'mp4.'

#对详情页的url发起请求

detail_page_text = requests.get(url=detail_url, headers=headers, ).text

new_tree = etree.HTML(detail_page_text)

name = new_tree.xpath('//*[@id="detailsbd"]/div[1]/div[2]/div/div[1]/h1/text()')[0]

id = str(li.xpath('./div/a/@href')[0]).split('_')[1]

ajax_url = 'https://www.pearvideo.com/videoStatus.jsp?'

parames ={

'contId': id,

'mrd': str(random.random())#随机数这样子处理

}

ajax_headers = {

"User-Agent":'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.150 Safari/537.36 Edg/88.0.705.63',

'Referer': 'https://www.pearvideo.com/video_' + id

}

new_page_json = requests.get(url=ajax_url, params=parames, headers=ajax_headers).json()

video_url =new_page_json["videoInfo"]['videos']["srcUrl"]

#print(video_url)

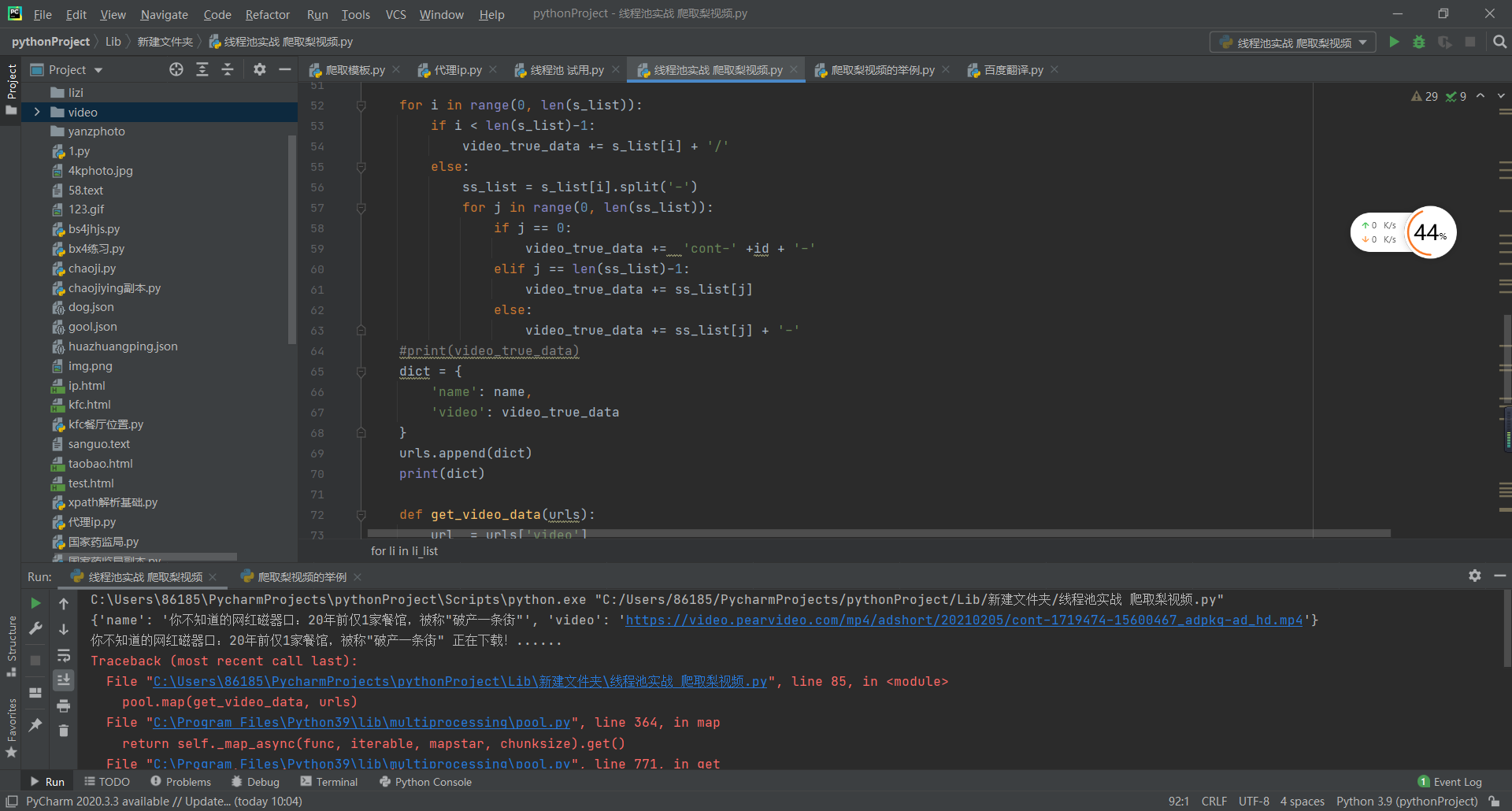

# 此处视频地址做了加密即ajax中得到的地址需要加上cont-,并且修改一段数字为id才是真地址

# 真地址:"https://video.pearvideo.com/mp4/third/20201120/cont-1708144-10305425-222728-hd.mp4"

# 伪地址:"https://video.pearvideo.com/mp4/third/20201120/1606132035863-10305425-222728-hd.mp4"

video_true_data = ''

s_list = str(video_url).split('/')

for i in range(0, len(s_list)):

if i < len(s_list)-1:

video_true_data += s_list[i] + '/'

else:

ss_list = s_list[i].split('-')

for j in range(0, len(ss_list)):

if j == 0:

video_true_data += 'cont-' +id + '-'

elif j == len(ss_list)-1:

video_true_data += ss_list[j]

else:

video_true_data += ss_list[j] + '-'

#print(video_true_data)

dict = {

'name': name,

'video': video_true_data

}

urls.append(dict)

print(dict)

def get_video_data(urls):

url_ = urls['video']

print(urls['name'] ,'正在下载!......')

file_path = './video/' + urls['name'] + '.mp4'

video_data_shiping = requests.get(url=url_ , headers=headers).content

with open(file_path, 'wb') as fp:

fp.write(video_data_shiping)

print(urls['name'], '下载成功!!!!')

pool = Pool(4)

pool.map(get_video_data, urls)

pool.close()

pool.join()

#爬取梨视频 热门视频
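The loop that rebuilds the real address only changes the file name (the last path segment), so the same rule can be written with `str.rsplit` and `str.split`. A minimal sketch, assuming every `srcUrl` has the shape shown in the comments, with a leading run of digits before the first `-` in the file name; `to_real_url` is a hypothetical helper name, not part of the original script:

```python
def to_real_url(src_url: str, cont_id: str) -> str:
    """Turn the srcUrl returned by videoStatus.jsp into the real video address.

    Assumes (hypothetically) that the file name looks like '<digits>-<rest>',
    as in the example URLs; the leading digits are replaced by 'cont-<id>'.
    """
    # Split off the file name: '.../20201120' and '1606132035863-...-hd.mp4'
    prefix, file_name = src_url.rsplit('/', 1)
    # Drop everything before the first '-' and put 'cont-<id>' in its place
    _, rest = file_name.split('-', 1)
    return f'{prefix}/cont-{cont_id}-{rest}'


fake = 'https://video.pearvideo.com/mp4/third/20201120/1606132035863-10305425-222728-hd.mp4'
print(to_real_url(fake, '1708144'))
# https://video.pearvideo.com/mp4/third/20201120/cont-1708144-10305425-222728-hd.mp4
```

Inside the loop it would be called with `video_url` and the content id parsed from the link, replacing the nested `for` loops. Unlike the loop, `split('-', 1)` leaves only one element when the file name has no `-`, so the unpacking raises `ValueError` instead of silently producing a broken URL.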

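The download step maps `get_video_data` over the collected dicts with `multiprocessing.dummy.Pool`, which offers the `multiprocessing.Pool` API but runs the work in threads; that suits network-bound downloads. A minimal sketch of the same step under a few assumptions: the HTTP call is passed in as a `fetch` function, video titles are cleaned of characters Windows does not allow in file names before saving, and `save_all` / `safe_filename` are hypothetical names, not part of the original script:

```python
import os
import re
from multiprocessing.dummy import Pool  # thread-backed Pool

# Characters that are not allowed in Windows file names
_INVALID_CHARS = re.compile(r'[\\/:*?"<>|]')


def safe_filename(title: str) -> str:
    """Replace characters Windows rejects in file names; fall back to 'untitled'."""
    cleaned = _INVALID_CHARS.sub('_', title).strip()
    return cleaned or 'untitled'


def save_all(items, fetch, out_dir='./video', workers=4):
    """Save every {'name': ..., 'video': ...} item using a pool of threads.

    fetch(url) must return the file content as bytes (hypothetical interface).
    Returns the written paths in the same order as items.
    """
    os.makedirs(out_dir, exist_ok=True)

    def save_one(item):
        path = os.path.join(out_dir, safe_filename(item['name']) + '.mp4')
        data = fetch(item['video'])  # network access happens here
        with open(path, 'wb') as fp:
            fp.write(data)
        print(item['name'], 'downloaded')
        return path

    # map() blocks until every item is done and preserves the input order
    with Pool(workers) as pool:
        return pool.map(save_one, items)
```

With the script's own objects this could be called as `save_all(urls, lambda u: requests.get(u, headers=headers, timeout=30).content)`. Passing `fetch` in keeps the pool logic testable without network access.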

( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
