Bounty: 60 鱼币 (FishC forum coins)

Last edited by python羊 on 2021-5-21 15:44

URL: https://www.gia.edu/sites/Satell ... p;cid=1495275503754

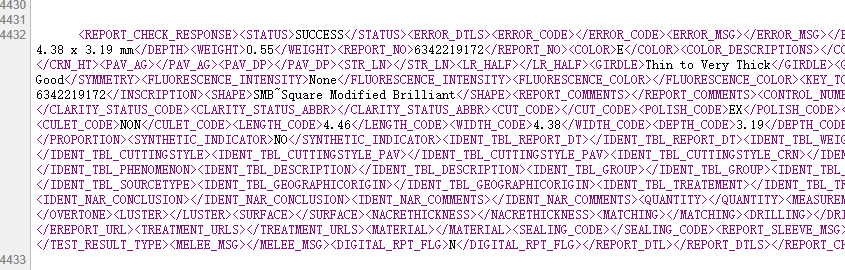

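The content I want to extract is the whole of line 4432 in the page source, as shown in the screenshot.

All I want is this data, so why is the page source so huge...?

There may be a faster way; any pointers are appreciated. Thanks.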

My code:

——————————————

```python
import re

import requests

s = requests.Session()

headers = {
    'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Mobile Safari/537.36',
}

url_end = 'https://www.gia.edu/sites/Satellite?reportno=6342219172&c=Page&childpagename=GIA%2FPage%2FReportCheck&pagename=GIA%2FWrapper&cid=1495275503754'

# Download the report page (sending a mobile User-Agent).
r_end = s.get(url_end, headers=headers)
r_end_str = r_end.text

# Capture the contents of the hidden <span id="xmlcontent">.
# Note: the pattern expects a literal double quote right after the opening
# tag and right before </span>.
content_list = re.findall('<span style="display:none;" name="xmlcontent" id="xmlcontent">"(.*?)"</span>', r_end_str)

print(content_list)
```
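Because the data sits on a single line of a very long page, one option is to read the response lazily and stop as soon as that line arrives, instead of taking the whole body and running a regex over all of it. A minimal sketch, assuming the line can be recognised by the marker `id="xmlcontent"` (taken from the regex above); `first_line_containing` is a hypothetical helper, and whether this is noticeably faster depends on how far into the page the line appears.

```python
from typing import Iterable, Optional


def first_line_containing(lines: Iterable[str], marker: str) -> Optional[str]:
    """Return the first line containing `marker`, or None if no line does.

    The loop returns on the first hit, so when `lines` is a lazy iterator
    (such as a streamed HTTP response) the remaining lines are never read.
    """
    for line in lines:
        if marker in line:
            return line
    return None


# Offline demonstration with a small stand-in for the page source.
page_lines = iter([
    "<html>",
    '<span style="display:none;" name="xmlcontent" id="xmlcontent">...</span>',
    "</html>",
])
print(first_line_containing(page_lines, 'id="xmlcontent"'))
```

With `requests`, the lazy iterator would come from `s.get(url_end, headers=headers, stream=True)` followed by `r_end.iter_lines(decode_unicode=True)`; the regex then only has to run on the one line returned. If `r_end.encoding` is `None`, set it before iterating, otherwise `iter_lines` yields `bytes` rather than `str`.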

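Best answer:

bs4 feels quicker to me. I'm not very comfortable with `re`, and I'm not sure how to handle the many nodes inside the span.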

Reference code using `re` (tags not stripped):

```python
import re

import requests

s = requests.Session()

headers = {
    'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Mobile Safari/537.36',
}

url_end = 'https://www.gia.edu/sites/Satellite?reportno=6342219172&c=Page&childpagename=GIA%2FPage%2FReportCheck&pagename=GIA%2FWrapper&cid=1495275503754'

r_end = s.get(url_end, headers=headers)
r_end_str = r_end.text

# Everything between <REPORT_CHECK_RESPONSE> and </REPORT_CHECK_RESPONSE>,
# inner tags included. [0] raises IndexError if the pattern is not found.
content_list = re.findall('<REPORT_CHECK_RESPONSE>(.+)</REPORT_CHECK_RESPONSE>', r_end_str)[0]

print(content_list)
```
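The `re` version returns the contents of `<REPORT_CHECK_RESPONSE>` with the inner tags still in place. If that fragment is well-formed XML (an assumption; check the actual page source), the standard library's `xml.etree.ElementTree` can turn it into field/value pairs instead of stripping tags by hand. A sketch: `fields_from_fragment` is a hypothetical helper, and the tag names in the sample are invented for illustration.

```python
import xml.etree.ElementTree as ET


def fields_from_fragment(fragment: str) -> dict:
    """Map each leaf element's tag to its stripped text.

    The captured text may hold several top-level elements, so it is wrapped
    in a dummy <root> before parsing. A repeated tag keeps its last value.
    """
    root = ET.fromstring(f"<root>{fragment}</root>")
    fields = {}
    for elem in root.iter():
        # Leaf elements (no children) are the ones that carry the values.
        if elem is not root and len(elem) == 0:
            fields[elem.tag] = (elem.text or "").strip()
    return fields


# Hypothetical fragment: the tag names are invented for illustration only.
sample = ("<REPORT_NO>6342219172</REPORT_NO>"
          "<DETAILS><FIELD_A>value A</FIELD_A><FIELD_B>value B</FIELD_B></DETAILS>")
print(fields_from_fragment(sample))
# {'REPORT_NO': '6342219172', 'FIELD_A': 'value A', 'FIELD_B': 'value B'}
```

If the page escapes the markup (for example `&lt;REPORT_NO&gt;`), pass the fragment through `html.unescape` first. If the content spans several lines, the pattern above needs `re.S` (`re.DOTALL`) so that `.` also matches newlines.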

Reference code using bs4:

```python
import requests
from bs4 import BeautifulSoup

s = requests.Session()

headers = {
    'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Mobile Safari/537.36',
}

url_end = 'https://www.gia.edu/sites/Satellite?reportno=6342219172&c=Page&childpagename=GIA%2FPage%2FReportCheck&pagename=GIA%2FWrapper&cid=1495275503754'

r_end = s.get(url_end, headers=headers)
r_end_str = r_end.text

# Parse with the lxml parser (requires the lxml package) and take the text of
# the first <span id="xmlcontent">; .text joins the text of all nested nodes.
soup = BeautifulSoup(r_end_str, 'lxml')
content_list = soup.find_all("span", id="xmlcontent")[0].text

print(content_list)
```
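`.text` concatenates the text of every node inside the span with the tags removed, so the field names are lost. To keep each nested node's tag next to its value without extra packages, the standard library's `html.parser` can walk the span's children. A sketch only: `XmlContentParser` is a hypothetical name, it assumes every element inside the span is properly closed, and `HTMLParser` reports tag names in lowercase.

```python
from html.parser import HTMLParser


class XmlContentParser(HTMLParser):
    """Collect (tag, text) pairs for the elements nested inside
    <span id="xmlcontent">. Tag names arrive lowercased."""

    def __init__(self):
        super().__init__()
        self.depth = 0   # > 0 while inside the target span
        self.stack = []  # tags opened inside the span, innermost last
        self.pairs = []  # (tag, text) for each non-blank text run

    def handle_starttag(self, tag, attrs):
        if self.depth == 0:
            # Outside the span: only look for the span itself.
            if tag == "span" and dict(attrs).get("id") == "xmlcontent":
                self.depth = 1
            return
        self.depth += 1
        self.stack.append(tag)

    def handle_endtag(self, tag):
        if self.depth == 0:
            return
        self.depth -= 1
        if self.stack:
            self.stack.pop()

    def handle_data(self, data):
        # Record text only when it sits inside an element within the span.
        if self.depth > 0 and self.stack and data.strip():
            self.pairs.append((self.stack[-1], data.strip()))


# Hypothetical snippet standing in for the page; the inner tag names are invented.
html_text = ('<div><span style="display:none;" name="xmlcontent" id="xmlcontent">'
             '<REPORT_NO>6342219172</REPORT_NO><DETAILS><FIELD_A>value A</FIELD_A></DETAILS>'
             '</span><p>not collected</p></div>')
parser = XmlContentParser()
parser.feed(html_text)
parser.close()
print(parser.pairs)
# [('report_no', '6342219172'), ('field_a', 'value A')]
```

On the real page this would be `parser.feed(r_end_str)`. If BeautifulSoup is already in use, a comparable loop is `for tag in soup.find("span", id="xmlcontent").find_all(True):`, reading `tag.name` and `tag.get_text(strip=True)` for each node.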


(Guangdong ICP registration 粤ICP备18085999号-1 | Guangdong public security registration 粤公网安备 44051102000585号)
