
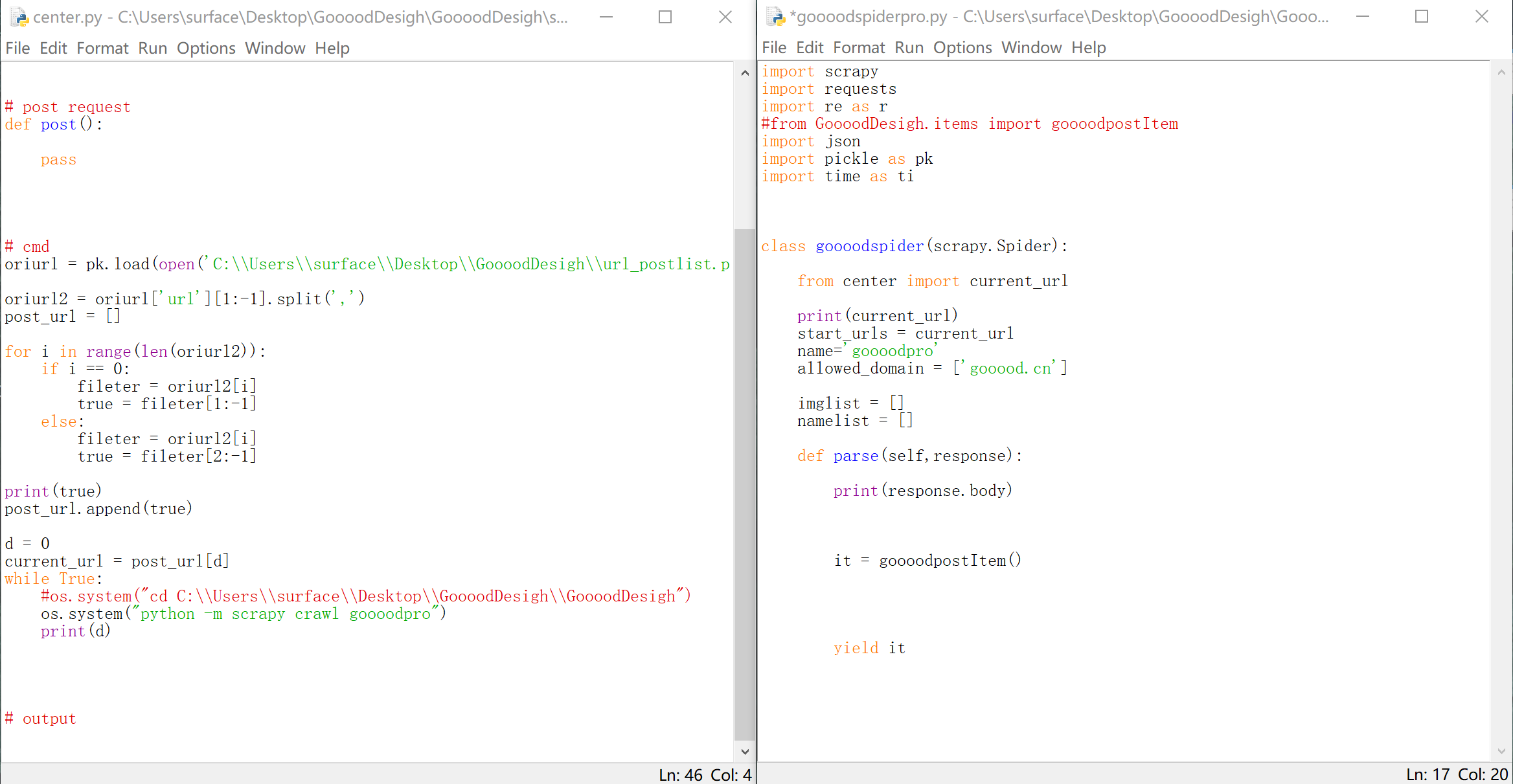

I want to use an external loop to call Scrapy repeatedly so that it crawls different web pages, but right now this is what happens:

Here is the source code:

1. Spider:

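```python
import scrapy
import requests
import re as r
from GoooodDesigh.items import goooodpostItem  # needed: parse() instantiates this item
import json
import pickle as pk
import time as ti


class goooodspider(scrapy.Spider):
    # Importing center runs all of center's top-level code
    # (including any loop in it) before current_url becomes available.
    from center import current_url
    print(current_url)

    start_urls = [current_url]       # start_urls must be a list of URLs, not a single string
    name = 'goooodpro'
    allowed_domains = ['gooood.cn']  # Scrapy reads allowed_domains (plural)
    imglist = []
    namelist = []

    def parse(self, response):
        print(response.body)
        it = goooodpostItem()
        yield it
```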

2. External script:

```python
import os
import pickle as pk
import time as ti

# input
def input():
    pass

# post request
def post():
    pass

# cmd
oriurl = pk.load(open('C:\\Users\\surface\\Desktop\\GoooodDesigh\\url_postlist.pkl', 'rb'))
oriurl2 = oriurl['url'][1:-1].split(',')  # drop the surrounding [ ] and split on commas
post_url = []
for i in range(len(oriurl2)):
    if i == 0:
        fileter = oriurl2[i]
        true = fileter[1:-1]  # first entry: drop the surrounding quotes
    else:
        fileter = oriurl2[i]
        true = fileter[2:-1]  # later entries: drop the leading space and the quotes
    print(true)
    post_url.append(true)

d = 0
current_url = post_url[d]
while True:
    #os.system("cd C:\\Users\\surface\\Desktop\\GoooodDesigh\\GoooodDesigh")
    os.system("python -m scrapy crawl goooodpro")
    print(d)  # d is never incremented, so current_url never changes
```
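A runner that pairs with a URL-argument spider could start one Scrapy process per URL and pass the URL on the command line instead of through a shared module variable. This is a sketch under assumptions: that `url_postlist.pkl` stores, under `'url'`, a string shaped like a Python list literal (which is what the slicing above suggests), and that the project root holding `scrapy.cfg` is the folder passed in. The helper names `parse_url_list`, `build_crawl_command`, `run_all` and the script name `run_crawls.py` are hypothetical. `ast.literal_eval` is swapped in for the manual slicing, and a fresh process per crawl avoids reusing Twisted's reactor, which cannot be restarted inside one Python process.

```python
import ast
import os
import pickle
import subprocess
import sys


def parse_url_list(raw):
    # Turn a string like "['https://a', 'https://b']" into a real list;
    # literal_eval only accepts Python literals, so no other code is executed.
    return [str(u).strip() for u in ast.literal_eval(raw)]


def build_crawl_command(url, spider="goooodpro"):
    # sys.executable runs Scrapy with the same interpreter as this script;
    # "-a url=..." becomes the spider's url keyword argument.
    return [sys.executable, "-m", "scrapy", "crawl", spider, "-a", f"url={url}"]


def run_all(urls, project_dir):
    for index, url in enumerate(urls):
        print(index, url)
        # One separate process per URL; cwd is assumed to be the Scrapy
        # project root (the folder that contains scrapy.cfg).
        result = subprocess.run(build_crawl_command(url), cwd=project_dir)
        if result.returncode != 0:
            print(f"crawl {index} failed with exit code {result.returncode}")


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python run_crawls.py <project_dir>
    project_dir = sys.argv[1]
    with open(os.path.join(project_dir, "url_postlist.pkl"), "rb") as f:
        stored = pickle.load(f)
    run_all(parse_url_list(stored["url"]), project_dir)
```

For example, `python run_crawls.py C:\Users\surface\Desktop\GoooodDesigh` would read the pickle from that folder and crawl each URL in turn, assuming that folder also contains `scrapy.cfg`.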


This is the runner script and the spider it calls in Scrapy.


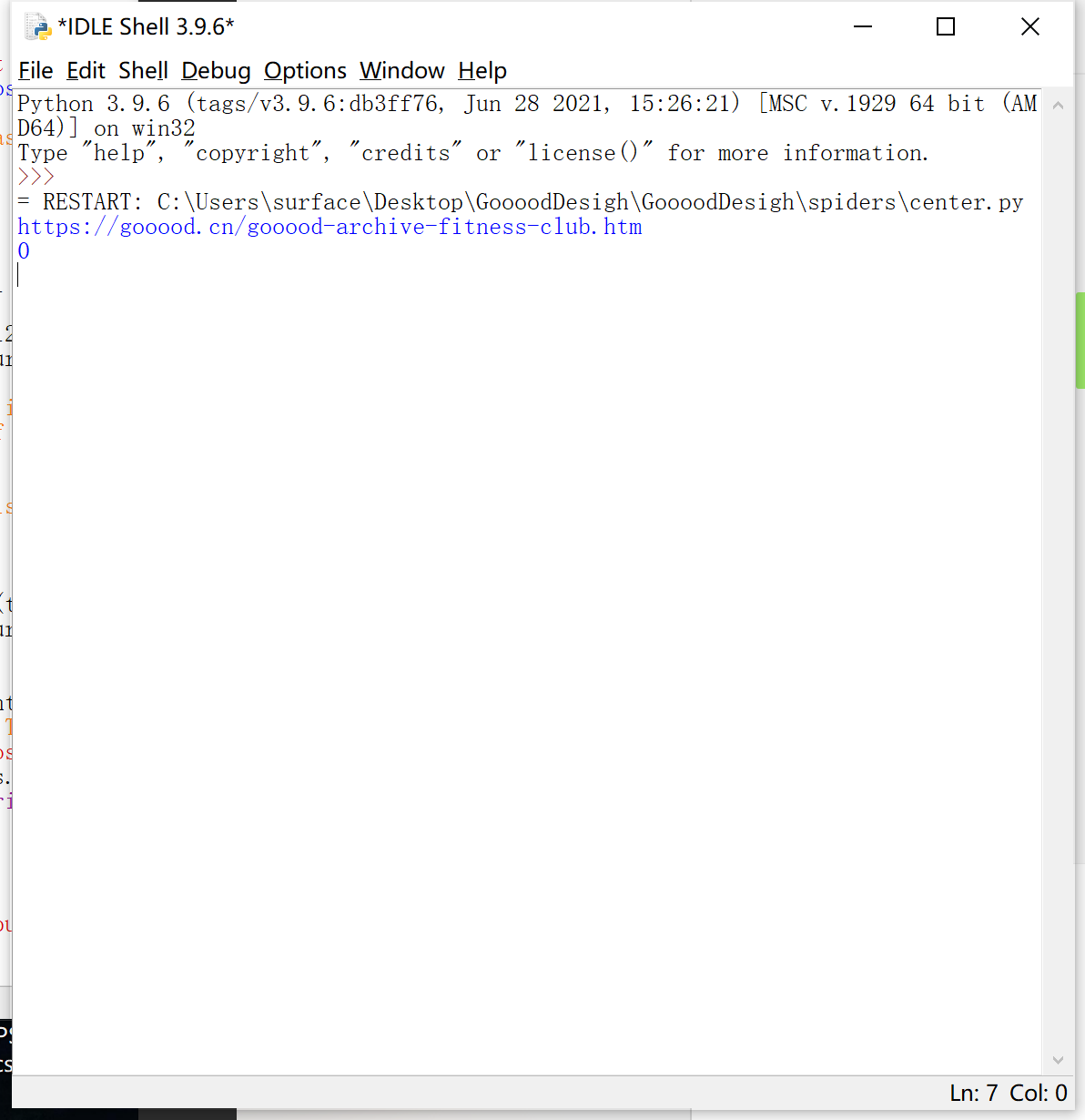

This is the output when it runs in the shell.


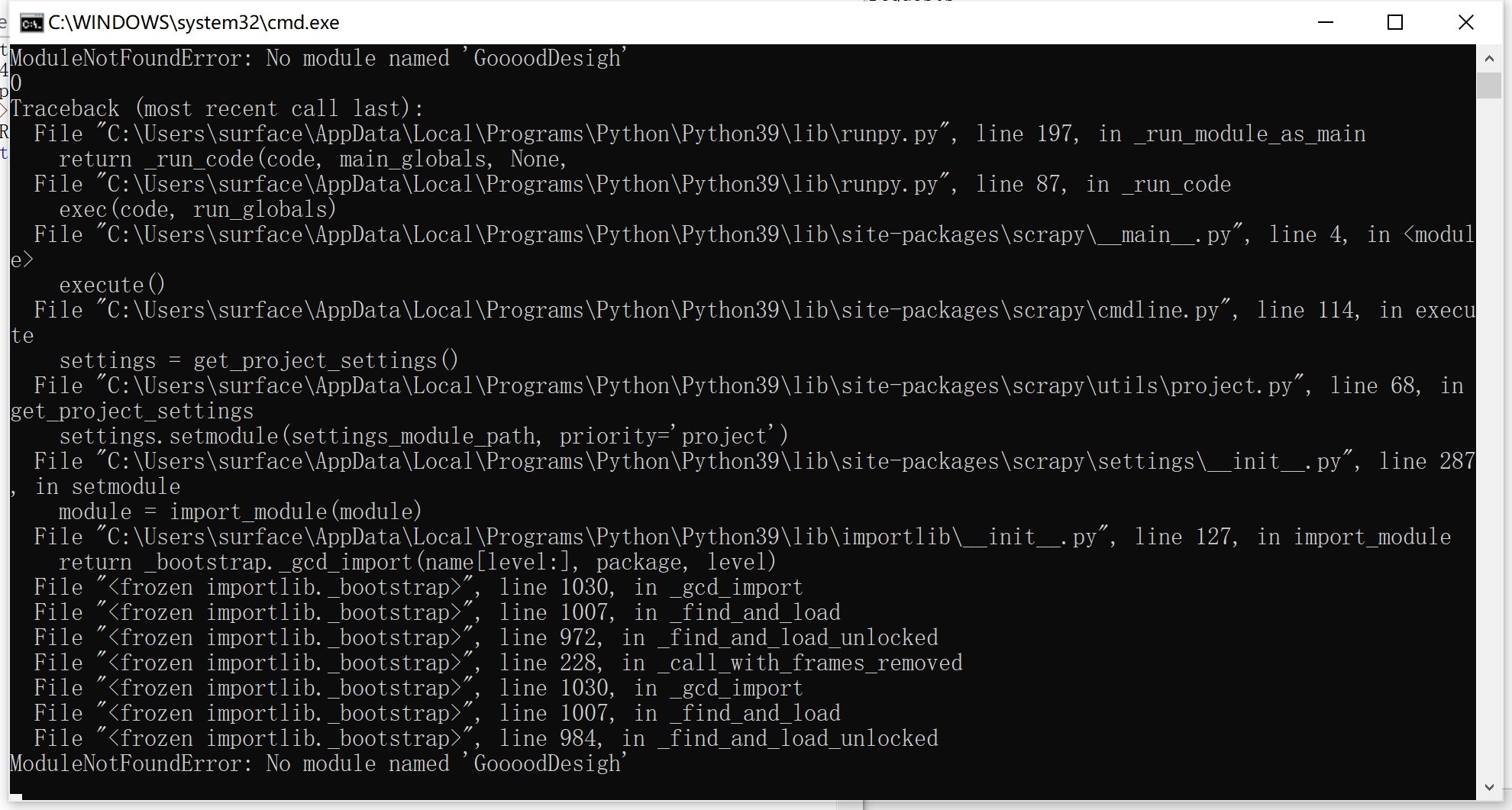

This is the error shown in the cmd window.


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
