Two URLs:

url1: https://s.search.bilibili.com/ca ... mp;time_to=20210924

url2: https://s.search.bilibili.com/ca ... mp;time_to=20210924

The two URLs differ only in cate_id: one is 171, the other is 172.

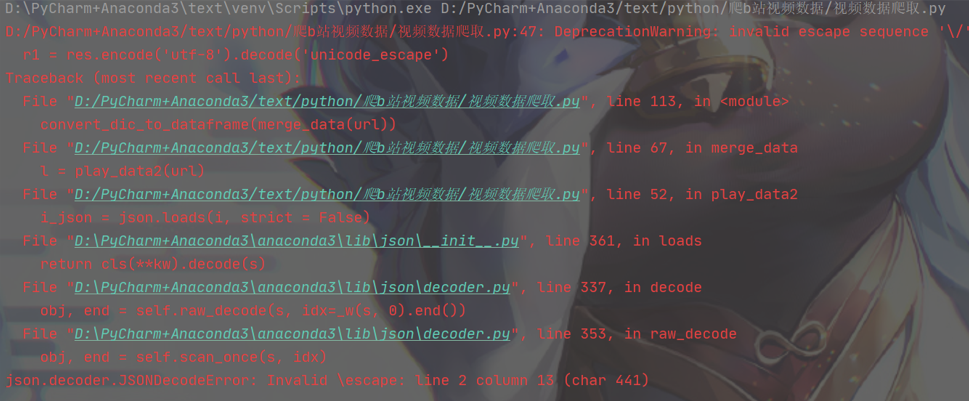

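url1 runs without errors, but url2 raises an error. If I change pagesize to 5, the error goes away. I then created a new file to test the play_data2(url) function on its own, and with pagesize=100 it did not raise an error either. I don't know what is going on.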
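Since the error depends on pagesize and cate_id, it may be triggered by one particular record rather than by the request itself. Below is a minimal sketch for locating such a record, assuming the exception is raised by `json.loads` inside play_data2. The helper `find_bad_fragments` and the sample string are hypothetical and not taken from the real API. The sample shows one way this can happen: the `unicode_escape` step turns an escaped quote (`\"`) into a bare quote, which makes that fragment invalid JSON.

```python
import json
import re


def find_bad_fragments(text):
    """Return (index, fragment, error message) for every fragment that fails to parse."""
    # Same pattern as in play_data2
    fragments = re.findall(r'\{"senddate":[\s\S]*?\}', text)
    bad = []
    for idx, frag in enumerate(fragments):
        try:
            json.loads(frag, strict=False)
        except json.JSONDecodeError as exc:
            bad.append((idx, frag, str(exc)))
    return bad


# Made-up input: the second record's title contains an escaped quote.
raw = r'[{"senddate":1,"title":"ok"},{"senddate":2,"title":"say \"hi\""}]'

# Same decoding step as in play_data2
decoded = raw.encode('utf-8').decode('unicode_escape')

bad = find_bad_fragments(decoded)
for idx, frag, msg in bad:
    print(idx, msg, frag)
```

Running the same helper on `raw` (without the `unicode_escape` step) reports no bad fragments, which would point at the decoding step rather than the data.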

Code:

```python
import requests
import json
import time
import random
import pandas as pd
import re


def play_data1(number):
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3314.0 Safari/537.36 SE 2.X MetaSr 1.0'}
    url = 'https://www.bilibili.com/video/%s' % number
    res = requests.get(url, headers=headers).text
    '''
    Fields in the "stat" object:
    播放量 (views): view
    弹幕 (danmaku comments): danmaku
    点赞 (likes): like
    投币 (coins): coin
    收藏 (favorites): favorite
    分享 (shares): share
    '''
    r = re.findall(r'"stat":\{"aid":.*?\}', res)
    r = str(r)
    view = re.findall(r'"view":(.*?),', r)
    danmaku = re.findall(r'"danmaku":(.*?),', r)
    like = re.findall(r'"like":(.*?),', r)
    coin = re.findall(r'"coin":(.*?),', r)
    favorite = re.findall(r'"favorite":(.*?),', r)
    share = re.findall(r'"share":(.*?),', r)
    d = {
        '播放量': view[0],
        '弹幕': danmaku[0],
        '点赞': like[0],
        '投币': coin[0],
        '收藏': favorite[0],
        '分享': share[0]
    }
    return d


def play_data2(url):
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3314.0 Safari/537.36 SE 2.X MetaSr 1.0'}
    res = requests.get(url, headers=headers).text
    r1 = res.encode('utf-8').decode('unicode_escape')
    # Cut out each video record, starting at "senddate" and ending at the first "}"
    r2 = re.findall(r'\{"senddate":[\s\S]*?\}', r1)
    l = []
    for i in r2:
        i_json = json.loads(i, strict=False)
        l.append({
            'title': i_json['title'],
            'arcurl': i_json['arcurl'],
            'bvid': i_json['bvid'],
            'author': i_json['author'],
            'pubdate': i_json['pubdate'],
            'tag': i_json['tag'],
            'review': i_json['review'],
            'duration': i_json['duration']
        })
    return l


def merge_data(url):
    l = play_data2(url)
    # Fetch the stats of every video and merge them into its record
    for l_1 in l:
        print(1)
        d = play_data1(l_1['bvid'])
        l_1.update(d)
        time.sleep(random.randint(1, 3))
    dd = {'title': [],
          'arcurl': [],
          'bvid': [],
          'author': [],
          'pubdate': [],
          'tag': [],
          'review': [],
          'duration': [],
          "播放量": [],
          "弹幕": [],
          "点赞": [],
          "投币": [],
          "收藏": [],
          "分享": [], }
    # Turn the list of records into a dict of columns
    for l_2 in l:
        dd['title'].append(l_2['title'])
        dd['arcurl'].append(l_2['arcurl'])
        dd['bvid'].append(l_2['bvid'])
        dd['author'].append(l_2['author'])
        dd['pubdate'].append(l_2['pubdate'])
        dd['tag'].append(l_2['tag'])
        dd['review'].append(l_2['review'])
        dd['duration'].append(l_2['duration'])
        dd["播放量"].append(l_2["播放量"])
        dd["弹幕"].append(l_2["弹幕"])
        dd["点赞"].append(l_2["点赞"])
        dd["投币"].append(l_2["投币"])
        dd["收藏"].append(l_2["收藏"])
        dd["分享"].append(l_2["分享"])
    return dd


def convert_dic_to_dataframe(dic):
    data = pd.DataFrame(dic)
    data.to_csv(r'手机游戏.csv', encoding="utf_8_sig")


url = 'https://s.search.bilibili.com/cate/search?main_ver=v3&search_type=video&view_type=hot_rank&order=click&copy_right=-1&cate_id=172&page=1&pagesize=100&jsonp=jsonp&time_from=20210917&time_to=20210924'
convert_dic_to_dataframe(merge_data(url))
```
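One thing that might be worth trying is to parse the whole response body as JSON instead of cutting out records with a regex. The pattern in play_data2 ends each record at the first `}`, so a title or tag that itself contains `}` would produce a truncated fragment. The sketch below is only an illustration under assumptions: the helper `extract_videos` is hypothetical, the list key `result` is a guess about the response layout that should be checked against the real response, and the sample data is made up.

```python
import json
import re

FIELDS = ('title', 'arcurl', 'bvid', 'author', 'pubdate', 'tag', 'review', 'duration')


def extract_videos(text, list_key='result'):
    """Parse the whole body as JSON and keep only the wanted fields.

    list_key='result' is an assumption about where the video list lives.
    Missing fields become None instead of raising KeyError.
    """
    payload = json.loads(text)
    return [{k: item.get(k) for k in FIELDS} for item in payload.get(list_key, [])]


# Made-up sample (not real API output): the title contains a '}' character.
sample = json.dumps({'result': [{'senddate': 1632450000, 'title': 'a}b', 'bvid': 'BV1xx'}]})

# The regex from play_data2 stops at the first '}', so the fragment is cut short.
fragment = re.findall(r'\{"senddate":[\s\S]*?\}', sample)[0]
try:
    json.loads(fragment, strict=False)
    regex_ok = True
except json.JSONDecodeError:
    regex_ok = False

videos = extract_videos(sample)
print(regex_ok, videos[0]['title'])
```

With requests, the text returned by `requests.get(url, headers=headers).text` could be passed to `extract_videos` directly. The `unicode_escape` step is not needed in that case, because `json.loads` decodes `\uXXXX` escapes itself.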


