
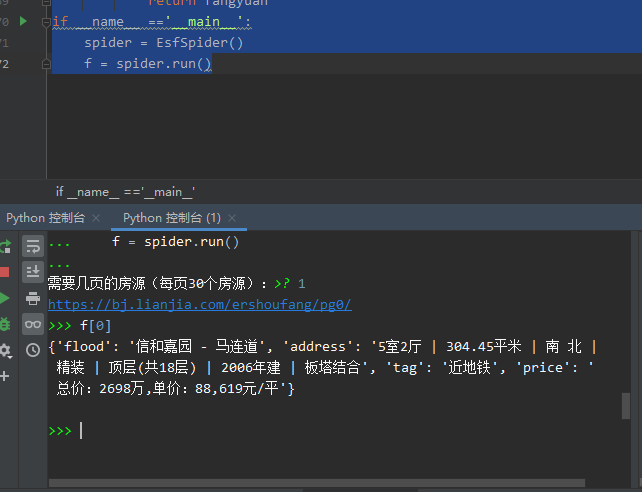

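I wrote a script to scrape second-hand housing listings and ran into a problem. Could someone experienced point me in the right direction?

The content my script gets from the page seems to be different from what I see when I open the same page in a browser.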

```python
import requests
from lxml import etree


class EsfSpider:
    def __init__(self):
        self.url = 'https://bj.lianjia.com/ershoufang/pg{}/'
        # Set the header to mimic a browser visit.
        # The header name is "User-Agent" (with a hyphen); a "UserAgent" key
        # is sent as a separate header and requests keeps its own default UA.
        self.headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.71 Safari/537.36 Edg/94.0.992.38'}
        self.blog = 1  # attempt counter for the current page

    # Fetch the page content
    def get_html(self, url):
        # Try a failing page up to three times
        if self.blog <= 3:
            try:
                res = requests.get(url, headers=self.headers, timeout=3)
                res.encoding = 'utf-8'
                self.html = res.text
                return self.html
            except requests.RequestException as e:
                print(e)
                self.blog += 1
                # Return the retry's result; without "return" this call yields None
                return self.get_html(url)
        else:
            print('Failed to fetch the page')
            exit()

    # Parse the page with XPath and extract the required fields
    def xpath_html(self, url):
        html = self.get_html(url)
        p = etree.HTML(html)
        # Listing nodes
        info_clear = '//div[@class="info clear"]'
        # Alternative: '//ul[@class="sellListContent"]/li[@class="clear LOGCLICKDATA"]'
        info_clear_list = p.xpath(info_clear)
        fangyuan = []
        for i in info_clear_list:
            item = {}
            # Location (estate name and area)
            flood = i.xpath('.//div[@class="flood"]/div/a[@target="_blank"]/text()')
            item['flood'] = flood[0] + '- ' + flood[1]
            # Basic information
            address = i.xpath('.//div[@class="address"]/div/text()')
            item['address'] = address[0].strip()
            # Selling point (e.g. near the subway)
            tag_list = i.xpath('.//div[@class="tag"]/span[@class="subway"]/text()')
            item['tag'] = tag_list[0] if tag_list else None
            # Total price and unit price, e.g. [' ', '548', '万', '48,977元/平']
            # (万 = 10,000 yuan; 元/平 = yuan per square metre)
            priceinfo_list = i.xpath('.//div[@class="priceInfo"]/node()/*/text()')
            totaprice = priceinfo_list[1] + priceinfo_list[2]
            item['price'] = 'Total price: %s, unit price: %s' % (totaprice, priceinfo_list[3])
            fangyuan.append(item)
        return fangyuan

    def run(self):
        ye = input('Number of pages to fetch (30 listings per page): ')
        fangyuan = []
        for i in range(0, int(ye)):
            url = self.url.format(i)
            print(url)
            # Accumulate every page; plain assignment would keep only the last one
            fangyuan.extend(self.xpath_html(url))
            self.blog = 1  # reset the attempt counter for the next page
        return fangyuan


if __name__ == '__main__':
    spider = EsfSpider()
    f = spider.run()
```
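The parsing half of the script can be checked offline, without sending any request, by running the same XPath expressions over a small HTML fragment. The fragment below is hypothetical: it is shaped after the class names in the script's XPaths and the sample price list in its comment, not copied from Lianjia's real markup, and `parse_listings` is an illustrative helper rather than part of the original script. One design difference: it drops whitespace-only strings instead of indexing `priceinfo_list[1]` to `[3]`, so the fields do not shift if the parser keeps or discards a blank text node.

```python
from lxml import etree

# Hypothetical fragment shaped after the XPath expressions in the script;
# it is NOT copied from Lianjia's real page.
SAMPLE = """
<ul class="sellListContent">
  <li class="clear LOGCLICKDATA">
    <div class="info clear">
      <div class="flood"><div class="positionInfo">
        <a target="_blank">信和嘉园</a><a target="_blank">Example area</a>
      </div></div>
      <div class="address"><div class="houseInfo"> 3 rooms | 112 sqm </div></div>
      <div class="tag"><span class="subway">Near subway</span></div>
      <div class="priceInfo">
        <div class="totalPrice"><i> </i><span>548</span><i>万</i></div>
        <div class="unitPrice"><span>48,977元/平</span></div>
      </div>
    </div>
  </li>
</ul>
"""


def parse_listings(html):
    """Extract one dict per listing, using the same XPaths as the script."""
    root = etree.HTML(html)
    items = []
    for node in root.xpath('//div[@class="info clear"]'):
        flood = node.xpath('.//div[@class="flood"]/div/a[@target="_blank"]/text()')
        address = node.xpath('.//div[@class="address"]/div/text()')
        tags = node.xpath('.//div[@class="tag"]/span[@class="subway"]/text()')
        raw_prices = node.xpath('.//div[@class="priceInfo"]/node()/*/text()')
        # Drop whitespace-only strings so positions do not depend on the parser
        prices = [t.strip() for t in raw_prices if t.strip()]
        items.append({
            'flood': ' - '.join(flood),
            'address': address[0].strip() if address else None,
            'tag': tags[0] if tags else None,
            'total': ''.join(prices[:2]),                      # number + unit, e.g. '548万'
            'unit': prices[2] if len(prices) > 2 else None,    # price per square metre
        })
    return items


for item in parse_listings(SAMPLE):
    print(item)
```

Pointing `parse_listings` at HTML you saved from a real response lets you iterate on the XPaths without re-requesting the site each time.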

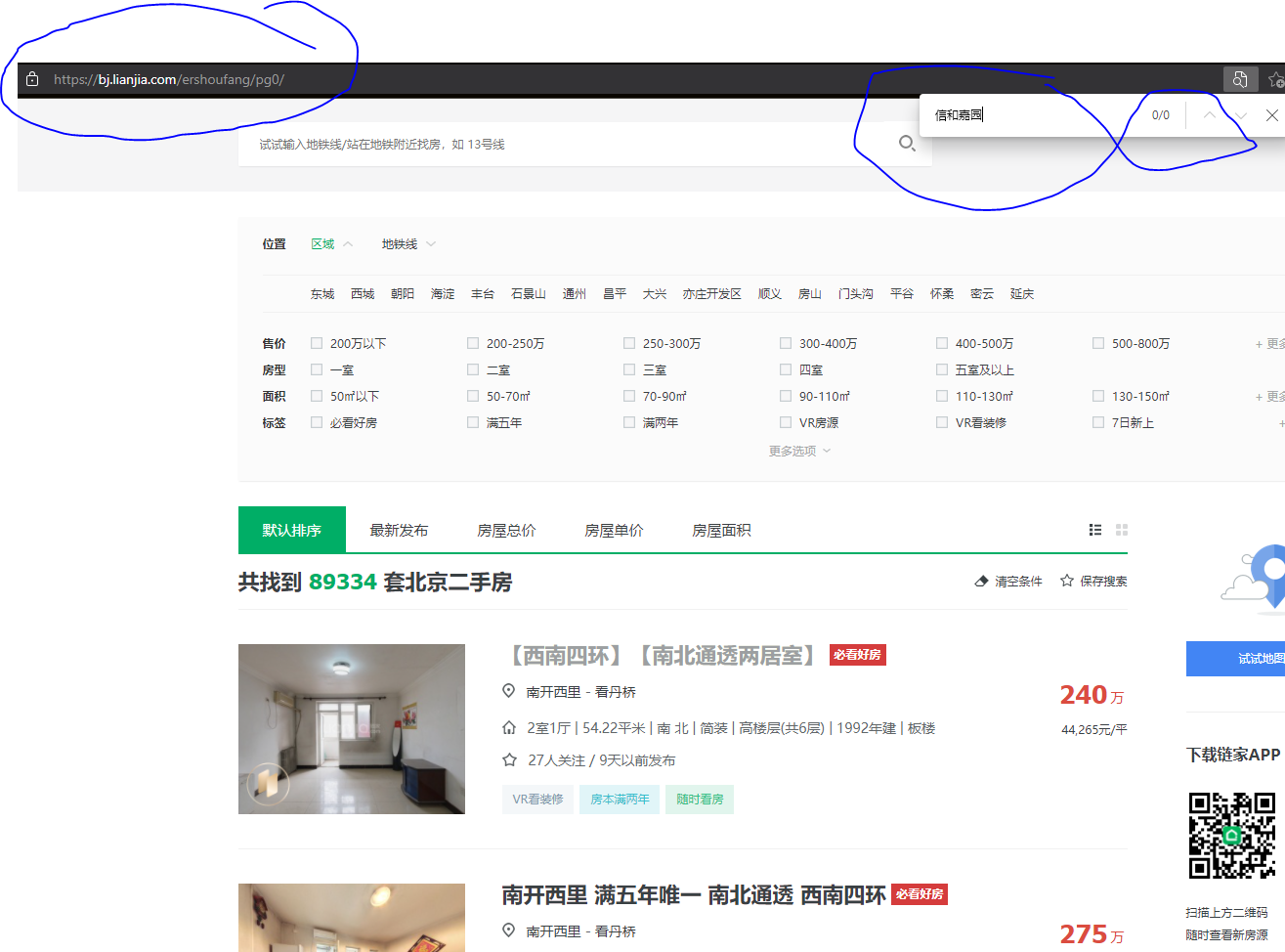

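My script prints the URL of each page it scrapes, but when I open that URL in a browser, the listings on the page are different from what the script extracted. Why is that?

For example, for the URL

https://bj.lianjia.com/ershoufang/pg0/

the first listing my script extracts is 信和嘉园 (Xinhe Jiayuan),

but when I open the same URL in the browser and search the page, 信和嘉园 is nowhere to be found.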
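When the script and the browser disagree, one thing worth ruling out is whether the request really carries the intended User-Agent. The sketch below builds the request offline with `requests.Session.prepare_request` and reports which User-Agent would be sent; a key spelled `UserAgent` (no hyphen) is just an extra non-standard header, so requests still sends its default `python-requests/...` value. Whether the site serves different listings based on this is an assumption, not something established in this thread; `sent_user_agent` is a hypothetical helper name.

```python
import requests

BROWSER_UA = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
              '(KHTML, like Gecko) Chrome/94.0.4606.71 Safari/537.36 Edg/94.0.992.38')


def sent_user_agent(headers):
    """Return the User-Agent requests would send with these headers (no network I/O)."""
    with requests.Session() as session:
        request = requests.Request('GET', 'https://bj.lianjia.com/ershoufang/pg0/',
                                   headers=headers)
        # prepare_request merges the session's default headers with ours
        prepared = session.prepare_request(request)
        return prepared.headers.get('User-Agent')


# Misspelled key: the default UA is kept, so this starts with "python-requests/"
print(sent_user_agent({'UserAgent': BROWSER_UA}))
# Standard header name: the browser string replaces the default
print(sent_user_agent({'User-Agent': BROWSER_UA}))
```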

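What the browser shows is the page after its CSS and JavaScript have been applied. requests (and similar libraries) only fetch the HTML the server returns and do not render or execute anything, so a difference is normal. When writing a crawler, go by what the GET request actually returns.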
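Going by the GET result in practice means inspecting the raw response rather than the rendered page. A minimal sketch, assuming you save the body to a local file and search it for the listing name; `save_raw_html`, `find_in_raw` and the file name `pg0_raw.html` are hypothetical, not part of the original script.

```python
import pathlib

import requests


def save_raw_html(url, path, headers=None, timeout=3):
    """Fetch url without rendering and write the raw body to path."""
    res = requests.get(url, headers=headers, timeout=timeout)
    res.raise_for_status()
    res.encoding = 'utf-8'
    pathlib.Path(path).write_text(res.text, encoding='utf-8')
    return res.text


def find_in_raw(html, needle, context=40):
    """Return a snippet of surrounding text for every occurrence of needle."""
    snippets = []
    start = html.find(needle)
    while start != -1:
        snippets.append(html[max(0, start - context):start + len(needle) + context])
        start = html.find(needle, start + 1)
    return snippets


if __name__ == '__main__':
    raw = save_raw_html('https://bj.lianjia.com/ershoufang/pg0/', 'pg0_raw.html',
                        headers={'User-Agent': 'Mozilla/5.0'})
    for snippet in find_in_raw(raw, '信和嘉园'):
        print(snippet)
```

Opening the saved file in a text editor (not the browser, which would render it again) shows exactly what the XPath expressions run against.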


(Guangdong ICP No. 18085999-1 | Guangdong Public Security Network Record No. 44051102000585)
