
Source code:

```python
import requests
from bs4 import BeautifulSoup
from lxml import etree
import csv

i = open("introduction.csv", mode='w', encoding='utf-8', newline='')
csvwriter_introduction = csv.writer(i)

def download_one_page(url):
    # Fetch the page source
    resp = requests.get(url)
    resp.encoding = 'utf-8'  # fix garbled characters
    html = etree.HTML(resp.text)
    cla = html.xpath('/html/body/div[5]/div/div')[0]  # [0] is needed here because xpath() returns a list
    names = cla.xpath('./div[@class = "sea_a23 clearfix"]')
    contents = cla.xpath('./div[@class = "sea_a23 clearfix pt25"]')
    # Get each title and content
    for title in names:
        txt1 = title.xpath('./h2/a/text()')
        #csvwriter_title.writerow(txt1)
    for content in contents:
        feature = content.xpath('./div/h3/text()')
        introduction = content.xpath('./div/p//text()')
        # Simple cleanup: remove \n\t, \n, spaces, 【】, \xa0 and ›
        intro = (item.replace("\n\t","").replace(", ","").replace("›","").replace("\n","").replace(" ","").replace("【厂家】","厂家").replace("\xa0","").replace("【产品分类】","产品分类") for item in introduction)
        introduction = (x.strip() for x in intro if x.strip() != '')
        # Save the data to the file
        #csvwriter_introduction.writerows(feature)
        introduction = ''.join(introduction)
        csvwriter_introduction.writerows(introduction)
        #csvwriter_picture.writerow(picture)
        #print(feature)
        #print(introduction)
        #print(''.join(introduction))

if __name__ == '__main__':
    download_one_page('http://www.c-denkei.cn/index.php?d=home&c=goods&m=search&s=%E7%94%B5%E6%BA%90&c1=0&c2=0&c3=0&page=')
```

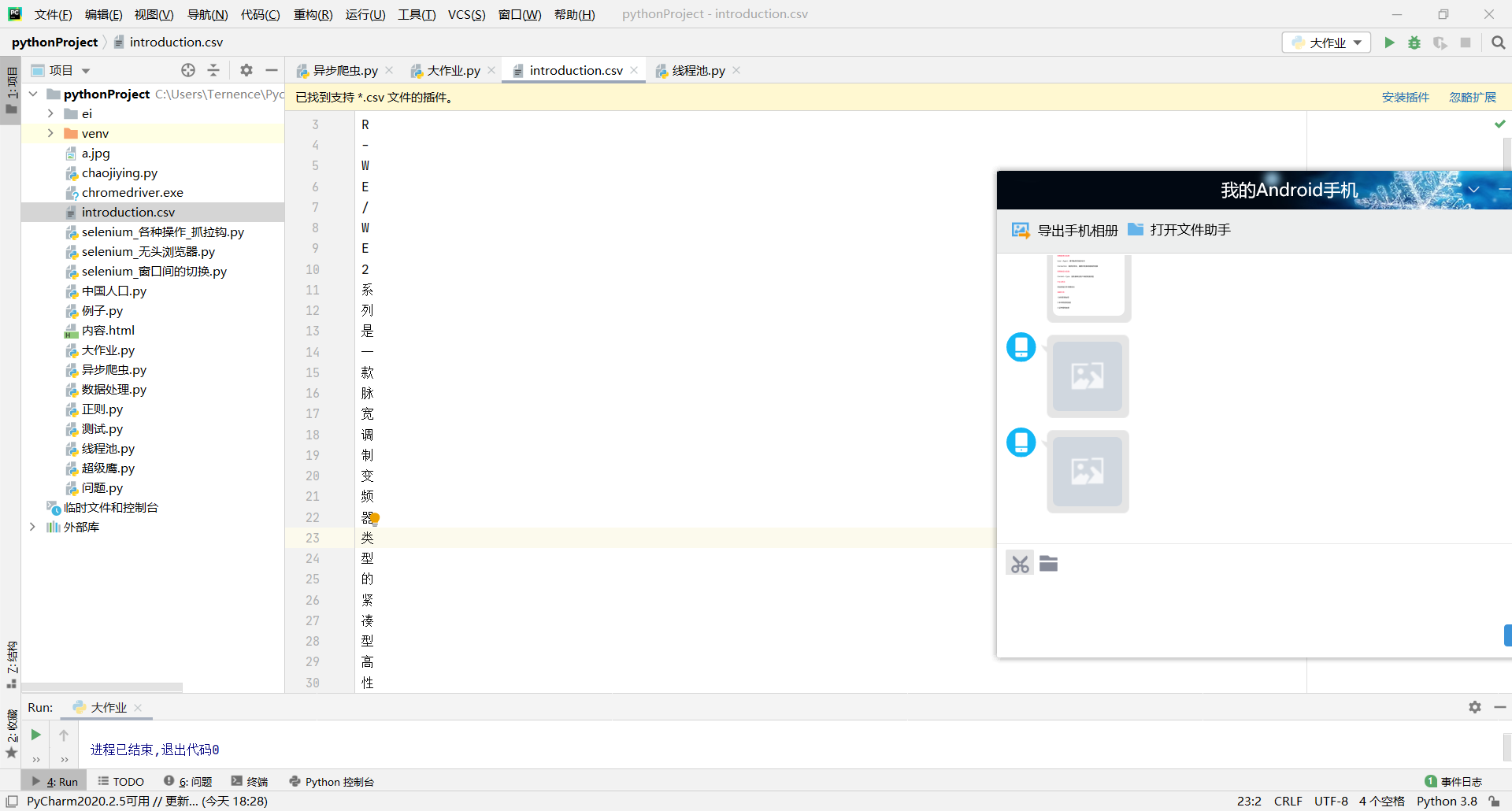

When I print the data with `print()` everything looks fine, but every time I write it to the file, the output comes out wrong.

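`writerows` writes each element produced by the iterable you pass it as a separate row, and iterating over a string yields one character at a time. That is why you ended up with one character per row. Calling `writerow` with the string wrapped in a list writes the whole string as a single field of one row, so I modified your code as follows: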

```python
import requests
from bs4 import BeautifulSoup
from lxml import etree
import csv

i = open("introduction.csv", mode='w', encoding='utf-8', newline='')
csvwriter_introduction = csv.writer(i)

def download_one_page(url):
    # Fetch the page source
    resp = requests.get(url)
    resp.encoding = 'utf-8'  # fix garbled characters
    html = etree.HTML(resp.text)
    cla = html.xpath('/html/body/div[5]/div/div')[0]  # [0] is needed here because xpath() returns a list
    names = cla.xpath('./div[@class = "sea_a23 clearfix"]')
    contents = cla.xpath('./div[@class = "sea_a23 clearfix pt25"]')
    # Get each title and content
    for title in names:
        txt1 = title.xpath('./h2/a/text()')
        #csvwriter_title.writerow(txt1)
    for content in contents:
        feature = content.xpath('./div/h3/text()')
        introduction = content.xpath('./div/p//text()')
        # Simple cleanup: remove \n\t, \n, spaces, 【】, \xa0 and ›
        intro = (item.replace("\n\t","").replace(", ","").replace("›","").replace("\n","").replace(" ","").replace("【厂家】","厂家").replace("\xa0","").replace("【产品分类】","产品分类") for item in introduction)
        introduction = (x.strip() for x in intro if x.strip() != '')
        # Save the data to the file
        #csvwriter_introduction.writerows(feature)
        introduction = ''.join(introduction)
        csvwriter_introduction.writerow([introduction])  # changed here
        #csvwriter_picture.writerow(picture)
        #print(feature)
        #print(introduction)
        #print(''.join(introduction))

if __name__ == '__main__':
    download_one_page('http://www.c-denkei.cn/index.php?d=home&c=goods&m=search&s=%E7%94%B5%E6%BA%90&c1=0&c2=0&c3=0&page=')
```
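To see the difference without scraping anything, here is a minimal sketch that writes to an in-memory `io.StringIO` buffer instead of `introduction.csv`. The helper `render()` and the sample strings are hypothetical, and `lineterminator="\n"` is set only to make the output easier to read (the `csv` default is `"\r\n"`).

```python
import csv
import io

def render(write):
    """Hypothetical helper: run one csv.writer call on an in-memory buffer and return the text."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    write(writer)
    return buf.getvalue()

text = "abc"

# writerows() treats the string as a sequence of rows, so each character becomes its own row
print(repr(render(lambda w: w.writerows(text))))         # 'a\nb\nc\n'

# writerow() with a bare string treats each character as a separate field in one row
print(repr(render(lambda w: w.writerow(text))))          # 'a,b,c\n'

# writerow() with a one-element list writes the whole string as a single field
print(repr(render(lambda w: w.writerow([text]))))        # 'abc\n'

# writerows() with a list of one-element lists writes one whole string per row
print(repr(render(lambda w: w.writerows([[text], ["def"]]))))  # 'abc\ndef\n'
```

The same applies to the commented-out `writerows(feature)`: `feature` is a list of strings, so each string would be treated as a row and split into one field per character.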


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
