
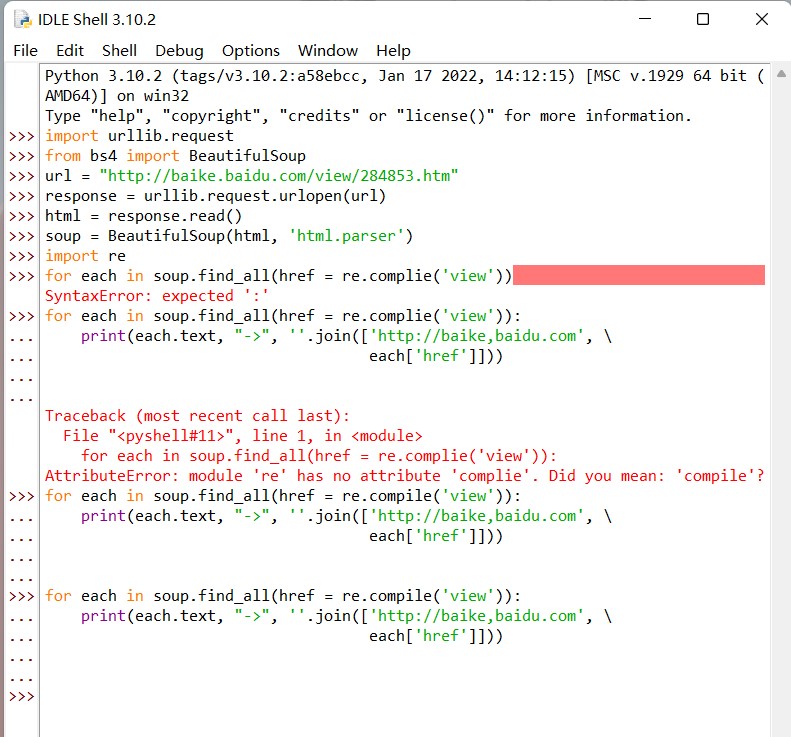

Has Baidu Baike set up anti-scraping measures too? I can't access Baidu Baike with the code below. How should this code be changed, and how should the headers parameter be written?

- >>> import urllib.request

- >>> from bs4 import BeautifulSoup

- >>> url = "http://baike.baidu.com/view/284853.htm"

- >>> response = urllib.request.urlopen(url)

- >>> html = response.read()

- >>> soup = BeautifulSoup(html, 'html.parser')

- >>> import re

- >>> for each in soup.find_all(href = re.complie('view'))

- SyntaxError: expected ':'

- >>> for each in soup.find_all(href = re.complie('view')):

-     print(each.text, "->", ''.join(['http://baike.baidu.com', \

-                                     each['href']]))

-

- Traceback (most recent call last):

- File "<pyshell#11>", line 1, in <module>

- for each in soup.find_all(href = re.complie('view')):

- AttributeError: module 're' has no attribute 'complie'. Did you mean: 'compile'?

- >>> for each in soup.find_all(href = re.compile('view')):

-     print(each.text, "->", ''.join(['http://baike.baidu.com', \

-                                     each['href']]))

-

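The code is outdated. Keep in mind that scrapers are time-sensitive: when a site changes, a scraper written for the old version may stop working.

Baidu Baike URLs stopped using view a long time ago; they now use item.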

Open http://baike.baidu.com/view/284853.htm in a browser and you will see that the page automatically redirects to https://baike.baidu.com/item/%E7%BD%91%E7%BB%9C%E7%88%AC%E8%99%AB
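To make the difference between the two URLs easier to see, here is a small offline sketch that splits each one into its first path segment (view or item) and its percent-decoded last segment. The helper name `describe_baike_url` is made up for this illustration. On a live response you could get the post-redirect URL from `response.geturl()` and pass it in the same way.

```python
from urllib.parse import urlsplit, unquote

def describe_baike_url(url):
    """Return (first path segment, decoded last path segment) of a URL."""
    # Hypothetical helper for illustration; it only parses the string
    # and never touches the network.
    segments = [s for s in urlsplit(url).path.split('/') if s]
    if not segments:
        return '', ''
    return segments[0], unquote(segments[-1])

old_style = describe_baike_url("http://baike.baidu.com/view/284853.htm")
new_style = describe_baike_url(
    "https://baike.baidu.com/item/%E7%BD%91%E7%BB%9C%E7%88%AC%E8%99%AB")

print(old_style)  # ('view', '284853.htm')
print(new_style)  # ('item', '网络爬虫')
```

The decoded title shows that the redirect target is the entry for 网络爬虫 (web crawler), reached through an item path rather than a view path.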

So the code should be changed to:

- import urllib.request

- from bs4 import BeautifulSoup

- url = "http://baike.baidu.com/view/284853.htm"

- headers = {

- "User-Agent":"Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36",

- "Cookie":"BIDUPSID=A92A6802F7D86AED4D4E206949C7BDAD; PSTM=1650383456; BAIDUID=A92A6802F7D86AED6342FE5ECB6326A0:FG=1; BD_UPN=12314353; BDUSS=lKbG5Van54QlllVVlRfkZtSHB5UVFyZDJRQTFYVkZiRDBvMVk4Znc4OUpwWTlpRVFBQUFBJCQAAAAAAAAAAAEAAAChTKa30-7W5tL40NAzNDUAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAEkYaGJJGGhiUU; BDUSS_BFESS=lKbG5Van54QlllVVlRfkZtSHB5UVFyZDJRQTFYVkZiRDBvMVk4Znc4OUpwWTlpRVFBQUFBJCQAAAAAAAAAAAEAAAChTKa30-7W5tL40NAzNDUAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAEkYaGJJGGhiUU; ispeed_lsm=2; ab_sr=1.0.1_NGU2NGZmNjBmMWMwODlmNmNmMzNkYjRiYTA5ODM2N2M2M2QyZGIyNTg4MmMxNGI3ZmFmM2NlYzI1ZDI3NTQzNzQzZjQ5ODE4YjY2MGNhZTBhMTYwYzUwYzliNDZkM2I5NTMxYjE0NDM0ODUwZjAyOTE3YTM0MWU1Y2NiMDM1MmFjMWIxM2FjZWZiN2U1MDMwYmYxNTc3YWUwMTdjMzI0OTdkMzgwNTRmNjU3NDEyMWQ2OTc2YzQ0NjlhZjllYTI3; BA_HECTOR=2085012k0k252k20ag03951d1hcr8fj16; ZFY=l1Dlk45JxTCwOPrlwIUjq8tRX3jy5nZT9F9jDP2NNnA:C; BAIDUID_BFESS=BFC9AF9D2D83193E805DC3D919F0D1B6:FG=1; BD_HOME=1; delPer=0; BD_CK_SAM=1; PSINO=6; H_PS_PSSID=36553_36460_36725_36455_36452_36691_36167_36694_36697_36816_36652_36773_36746_36760_36769_36765_26350; BDRCVFR[feWj1Vr5u3D]=mk3SLVN4HKm; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; H_PS_645EC=233f6REQC1PnLDurVcWHYoD2TfKhF1re3d6AcrXwDgE25OQ55x1kmFuxvDE",

- "Accept":"text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9"

- }

- request = urllib.request.Request(url,headers=headers)

- response = urllib.request.urlopen(request)

- html = response.read()

- soup = BeautifulSoup(html, 'html.parser')

- import re

- # Match links whose href contains 'item', the pattern Baidu Baike uses now

- for each in soup.find_all(href=re.compile('item')):

-     print(each.text, "->", ''.join(['http://baike.baidu.com', each['href']]))
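One optional refinement, offered as a sketch rather than as part of the fix above: building the absolute link with `urllib.parse.urljoin` instead of `''.join` avoids doubling the host when an `href` is already a full URL. The function name `absolute_links` and the sample `href` values are assumptions made up for this example; in the real script the values would come from `each['href']`.

```python
import re
from urllib.parse import urljoin

BASE = "https://baike.baidu.com/"

def absolute_links(base, hrefs, pattern='item'):
    """Keep hrefs matching `pattern` and resolve them against `base`."""
    matcher = re.compile(pattern)
    # urljoin leaves absolute URLs unchanged and resolves relative ones.
    return [urljoin(base, href) for href in hrefs if matcher.search(href)]

# Made-up hrefs standing in for what soup.find_all(...) would yield.
sample_hrefs = [
    "/item/Python",
    "/view/1.htm",
    "https://baike.baidu.com/item/abc",
]

for link in absolute_links(BASE, sample_hrefs):
    print(link)
```

With the sample list, the view link is filtered out and the two item links both come back as full https URLs.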


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
