|

|

马上注册,结交更多好友,享用更多功能^_^

您需要 登录 才可以下载或查看,没有账号?立即注册

x

本帖最后由 Handsome_zhou 于 2022-10-15 14:42 编辑

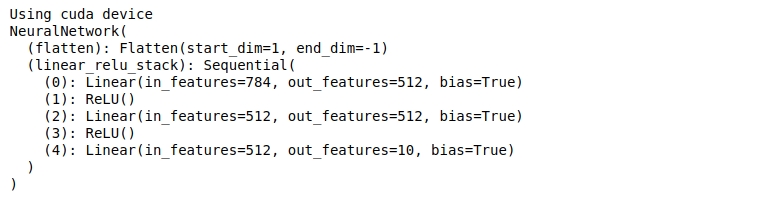

模型各层的输入输出维度在搭建好模型之后,进行模型实例化,就可以打印出来了。

- device = "cuda" if torch.cuda.is_available() else "cpu"

- print(f"Using {device} device")

- class NeuralNetwork(nn.Module):

- def __init__(self):

- super(NeuralNetwork, self).__init__()

- self.flatten = nn.Flatten()

- self.linear_relu_stack = nn.Sequential(

- nn.Linear(28*28, 512),

- nn.ReLU(),

- nn.Linear(512, 512),

- nn.ReLU(),

- nn.Linear(512,10)

- )

-

- def forward(self, x):

- x = self.flatten(x)

- logits = self.linear_relu_stack(x)

- return logits

- model = NeuralNetwork().to(device)

- print(model)

输出结果:

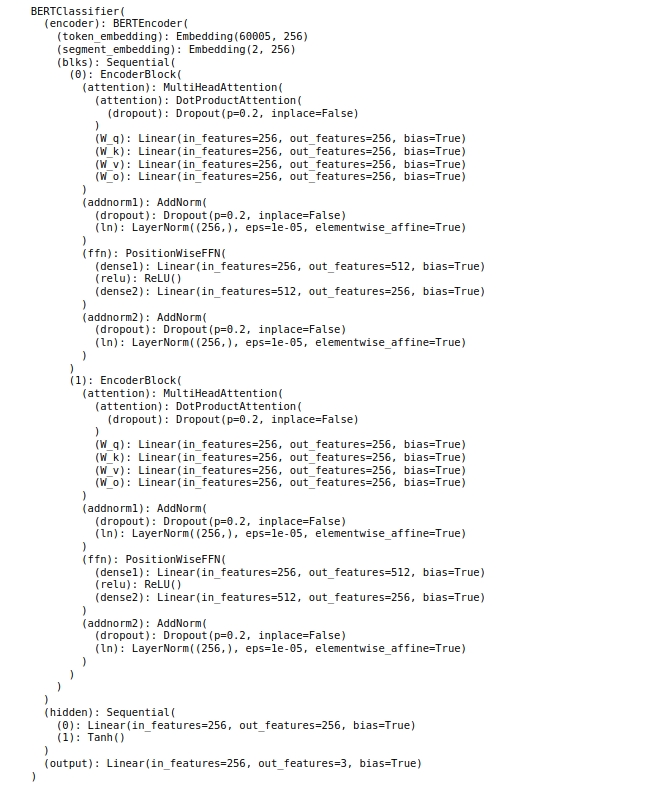

- import json

- import multiprocessing #使用python的多线程

- import os

- import torch

- from torch import nn

- from d2l import torch as d2l

- #load bert.small预训练模型

- devices = d2l.try_all_gpus()

- bert,vocab = load_pretrained_model('bert.small',num_hiddens=256,ffn_num_hiddens=512,

- num_heads=4,num_layers=2,dropout=0.1,max_len=512,devices=devices)

- class BERTClassifier(nn.Module):

- def __init__(self, bert):

- super(BERTClassifier, self).__init__()

- self.encoder = bert.encoder

- self.hidden = bert.hidden

- self.output = nn.Linear(256,3)

-

- def forward(self, inputs):

- tokens_X, segments_X, valid_lens_x = inputs

- encoded_X = self.encoder(tokens_X, segments_X, valid_lens_x)

- return self.output(self.hidden(encoded_X[:, 0, :]))

-

- net = BERTClassifier(bert).to(device)#模型实例化

- print(net)

输出结果:

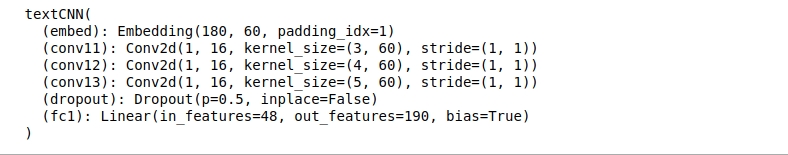

textCNN模型的各层输入输出维度:

- import torch

- import torch.nn as nn

- from torch.nn import functional as F

- import math

- class textCNN(nn.Module):

- def __init__(self, param):

- super(textCNN, self).__init__()

- ci = 1 # input chanel size

- kernel_num = param['kernel_num'] # output chanel size

- kernel_size = param['kernel_size']

- vocab_size = param['vocab_size']

- embed_dim = param['embed_dim']

- dropout = param['dropout']

- class_num = param['class_num']

- self.param = param

- self.embed = nn.Embedding(vocab_size, embed_dim, padding_idx=1)

- self.conv11 = nn.Conv2d(ci, kernel_num, (kernel_size[0], embed_dim))

- self.conv12 = nn.Conv2d(ci, kernel_num, (kernel_size[1], embed_dim))

- self.conv13 = nn.Conv2d(ci, kernel_num, (kernel_size[2], embed_dim))

- self.dropout = nn.Dropout(dropout)

- self.fc1 = nn.Linear(len(kernel_size) * kernel_num, class_num)

- def init_embed(self, embed_matrix):

- self.embed.weight = nn.Parameter(torch.Tensor(embed_matrix))

- @staticmethod

- def conv_and_pool(x, conv):

- # x: (batch, 1, sentence_length, )

- x = conv(x)

- # x: (batch, kernel_num, H_out, 1)

- x = F.relu(x.squeeze(3))

- # x: (batch, kernel_num, H_out)

- x = F.max_pool1d(x, x.size(2)).squeeze(2)

- # (batch, kernel_num)

- return x

- def forward(self, x):

- # x: (batch, sentence_length)

- x = self.embed(x)

- # x: (batch, sentence_length, embed_dim)

- # TODO init embed matrix with pre-trained

- x = x.unsqueeze(1)

- # x: (batch, 1, sentence_length, embed_dim)

- x1 = self.conv_and_pool(x, self.conv11) # (batch, kernel_num)

- x2 = self.conv_and_pool(x, self.conv12) # (batch, kernel_num)

- x3 = self.conv_and_pool(x, self.conv13) # (batch, kernel_num)

- x = torch.cat((x1, x2, x3), 1) # (batch, 3 * kernel_num)

- x = self.dropout(x)

- logit = F.log_softmax(self.fc1(x), dim=1)

- return logit

- def init_weight(self):

- for m in self.modules():

- if isinstance(m, nn.Conv2d):

- n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels

- m.weight.data.normal_(0, math.sqrt(2. / n))

- if m.bias is not None:

- m.bias.data.zero_()

- elif isinstance(m, nn.BatchNorm2d):

- m.weight.data.fill_(1)

- m.bias.data.zero_()

- elif isinstance(m, nn.Linear):

- m.weight.data.normal_(0, 0.01)

- m.bias.data.zero_()

-

- textCNN_param = {

- # 'vocab_size': len(word2ind),

- 'vocab_size': 180,

- 'embed_dim': 60,

- # 'class_num': len(label_w2n),

- 'class_num': 190,

- "kernel_num": 16,

- "kernel_size": [3, 4, 5],

- "dropout": 0.5,

- }

- dataLoader_param = {

- 'batch_size': 128,

- 'shuffle': True,

- }

- net = textCNN(textCNN_param)

- print(net)

输出结果:

|

|

( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)

( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)