Bounty: 10 fish coins

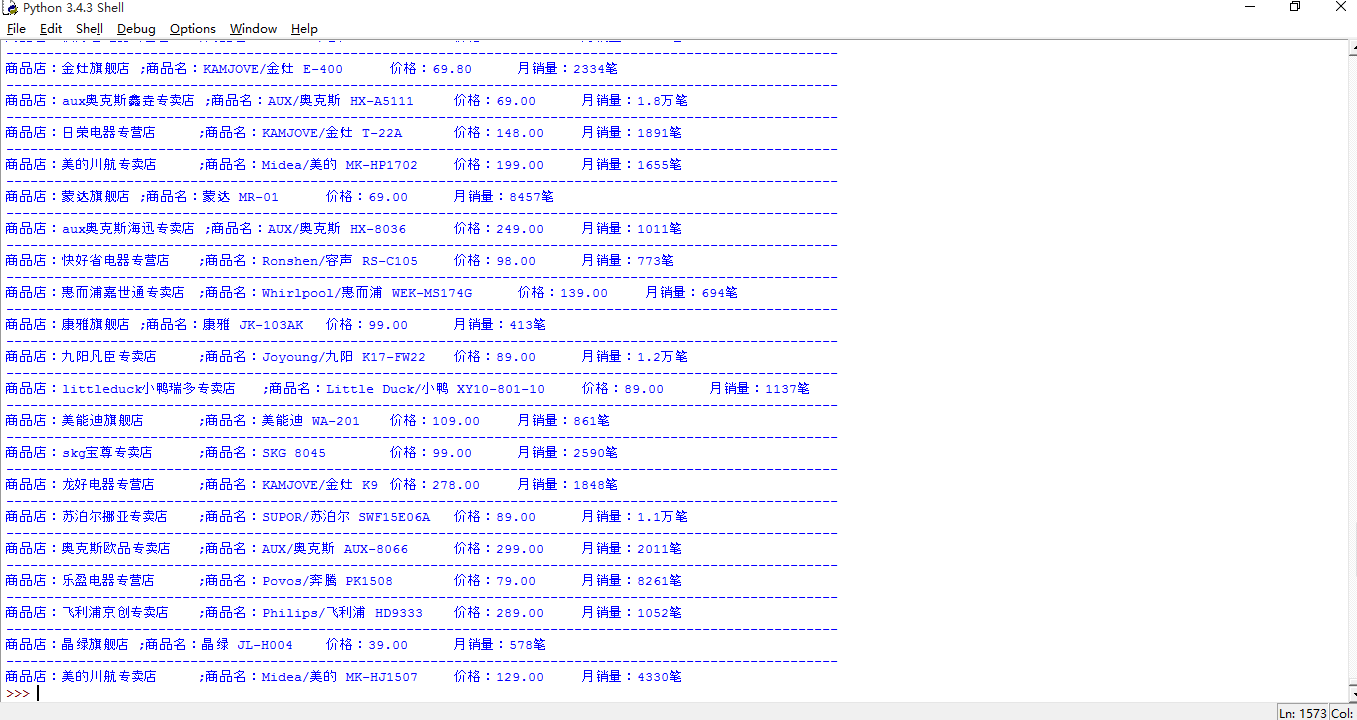

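Hi everyone. I've recently been learning web scraping with Python 3 and have started using the BeautifulSoup and requests libraries. The two work very well together. BeautifulSoup in particular (on Windows, running pip install beautifulsoup4 from the command line is enough) is much more efficient to work with than regular expressions, so give it a try. I tried scraping the product information in the "kettle" category on Tmall and, after analyzing the page source, managed to extract the products on the first page of Tmall's "kettle" results. Here is the code.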

    import requests
    from bs4 import BeautifulSoup

    # Tmall search results page for kettles
    url = 'https://list.tmall.com/search_product.htm?q=%CB%AE%BA%F8+%C9%D5%CB%AE&type=p&vmarket=&spm=875.7931836%2FA.a2227oh.d100&from=mallfp..pc_1_searchbutton'
    res = requests.get(url)
    soup = BeautifulSoup(res.text, 'html.parser')

    # Walk through every product card on the page
    for item in soup.select('.product-iWrap'):
        # Skip cards that lack the price/sales <em> tags or the name/store <a> tags
        money_tags = item.select('em')
        product_tags = item.select('a')
        if len(money_tags) < 2 or len(product_tags) < 3:
            continue

        # The product link may hold no plain string; use None in that case
        name = product_tags[1].string
        product = name.strip() if name is not None else None

        # Pull the text out of the remaining tags
        store = product_tags[2].string.strip()   # store name
        money = money_tags[0]['title']           # price
        sale = money_tags[1].string.strip()      # monthly sales

        print('-' * 100)
        print('Store: %s\t; Product: %s\tPrice: %s\tMonthly sales: %s' % (store, product, money, sale))
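The filtering in the loop above can be tried without touching the network. The following is only a sketch: the markup in `SAMPLE_HTML` is made up to match what the selectors expect (at least two `em` tags and three `a` tags per `.product-iWrap` card) and is not Tmall's real page structure, and `parse_cards` is a hypothetical helper name.

```python
from bs4 import BeautifulSoup

# Hypothetical markup (an assumption, not real Tmall HTML):
# the first card is complete, the second lacks the tags we need.
SAMPLE_HTML = """
<div class="product-iWrap">
  <a href="#"><img alt=""></a>
  <em title="59.00">59.00</em>
  <a href="#"> Stainless kettle 1.8L </a>
  <a href="#"> Example Store </a>
  <em> 1200 </em>
</div>
<div class="product-iWrap">
  <a href="#">ad banner</a>
</div>
"""


def parse_cards(html):
    """Return one dict per product card that has all the expected tags."""
    soup = BeautifulSoup(html, 'html.parser')
    rows = []
    for item in soup.select('.product-iWrap'):
        ems = item.select('em')
        links = item.select('a')
        if len(ems) < 2 or len(links) < 3:
            continue  # incomplete card: skip it instead of reusing old values
        name = links[1].string
        rows.append({
            'store': links[2].string.strip(),
            'product': name.strip() if name is not None else None,
            'price': ems[0]['title'],
            'sales': ems[1].string.strip(),
        })
    return rows


rows = parse_cards(SAMPLE_HTML)
for row in rows:
    print(row)
```

Skipping an incomplete card with `continue` matters here: with `pass`, the loop would carry on and use whatever tags were left over from the previous card.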


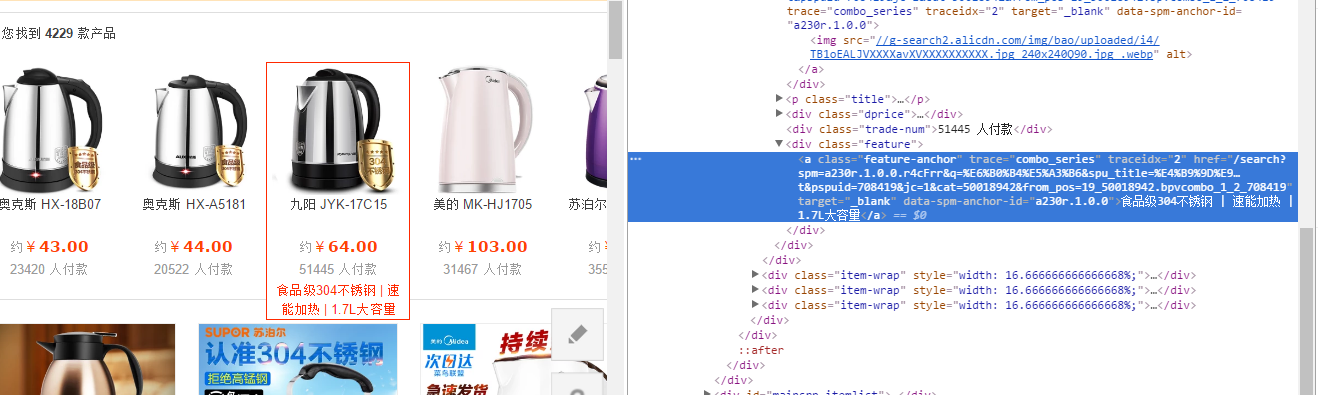

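So I wanted to practice on Taobao as well, scraping the kettle name, price, number of purchases, product description (never mind the pictures!) and other useful information.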

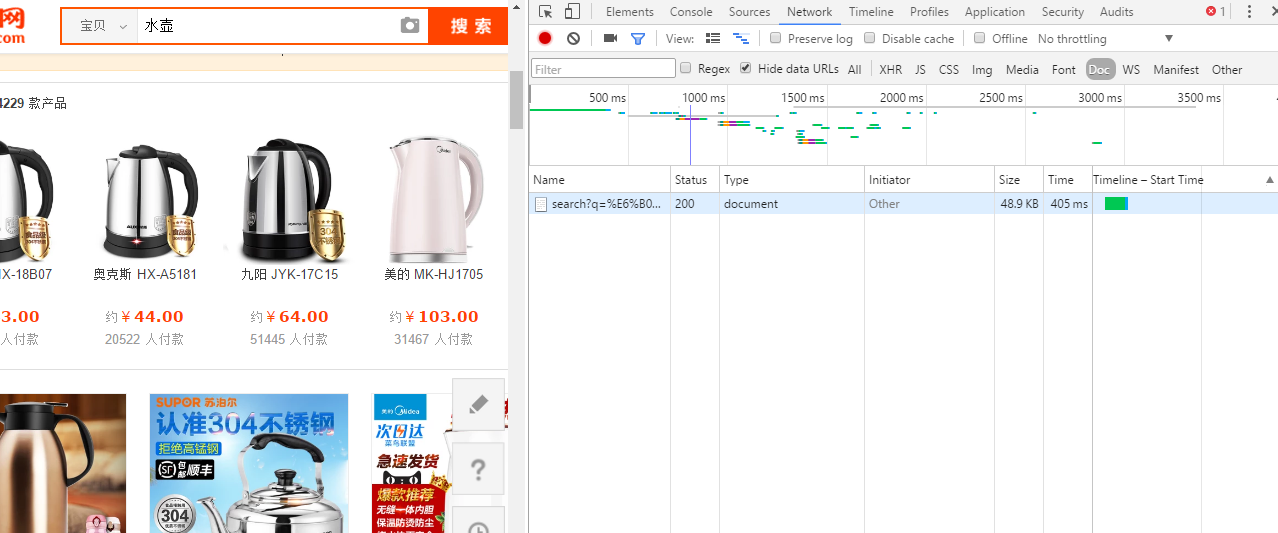

Since the information could be scraped from Tmall, I figured Taobao should work too. So I opened Taobao and searched for "水壶" (kettle). The link is here: https://s.taobao.com/search?q=%E6%B0%B4%E5%A3%B6&imgfile=&commend=all&ssid=s5-e&search_type=item&sourceId=tb.index&spm=a21bo.50862.201856-taobao-item.1&ie=utf8&initiative_id=tbindexz_20160805

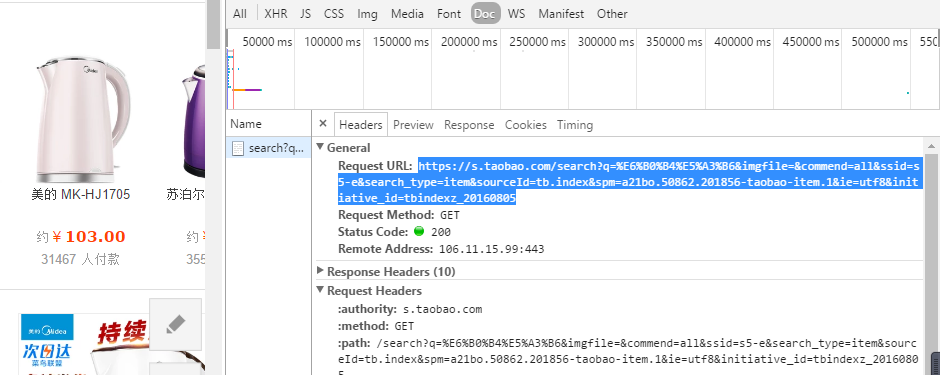
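The search URL looks opaque, but its query string is ordinary percent-encoding. As a small sketch using only the standard library, the keyword and the declared encoding can be read back out of it like this (the variable names are just for illustration):

```python
from urllib.parse import urlsplit, parse_qs

url = ('https://s.taobao.com/search?q=%E6%B0%B4%E5%A3%B6&imgfile=&commend=all'
       '&ssid=s5-e&search_type=item&sourceId=tb.index'
       '&spm=a21bo.50862.201856-taobao-item.1&ie=utf8'
       '&initiative_id=tbindexz_20160805')

# parse_qs decodes percent-escapes as UTF-8 by default and drops empty values
params = parse_qs(urlsplit(url).query)
keyword = params['q'][0]

print(keyword)          # the search keyword typed into the box
print(params['ie'][0])  # the encoding the page declares for the query
```

Going the other way, passing a `params` dict to `requests.get` lets requests build the query string, which is easier to read than a hand-pasted URL.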

Right-click and choose "Inspect" to open the developer tools panel, click "Network", then press F5 to reload the Taobao page, as shown below.

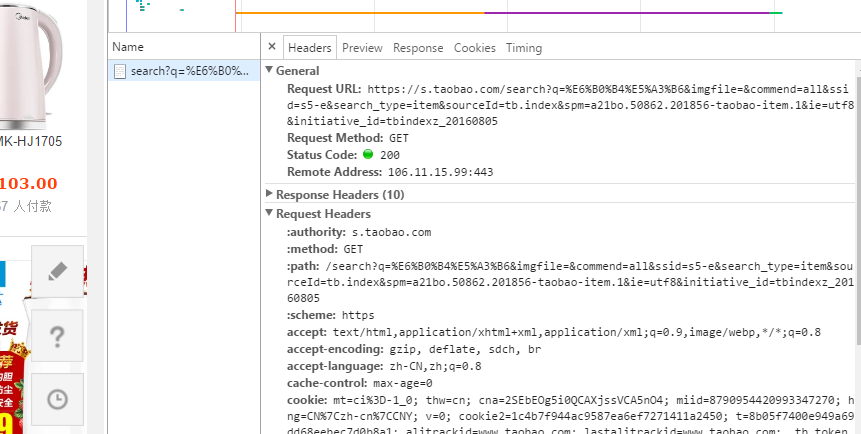

Click the first entry in the "Name" column, and we get the following:

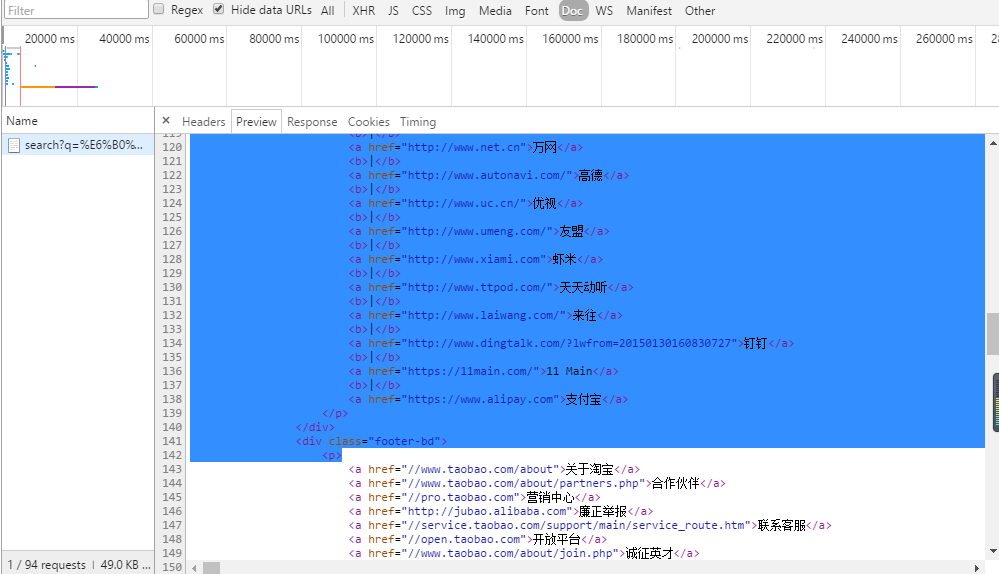

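Click Preview to look at the HTML. I searched and searched but could not find any HTML (or CSS or JavaScript, for that matter) containing the product information. I won't copy the HTML here; you can try it yourself.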

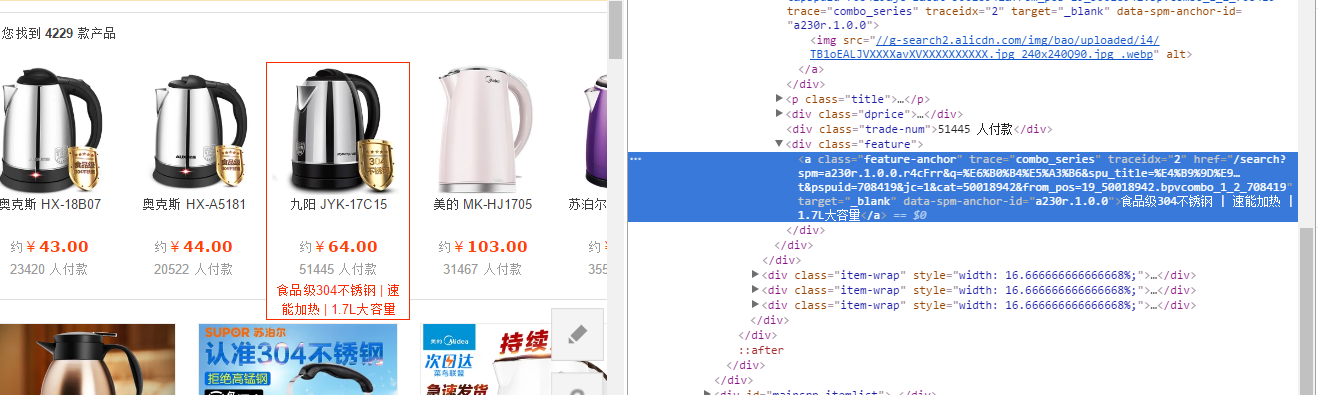

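Meanwhile, if we right-click a product and choose "Inspect", the Elements panel does contain the HTML tags holding that product's information. So why doesn't the code shown under Network > Name > Preview contain them?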

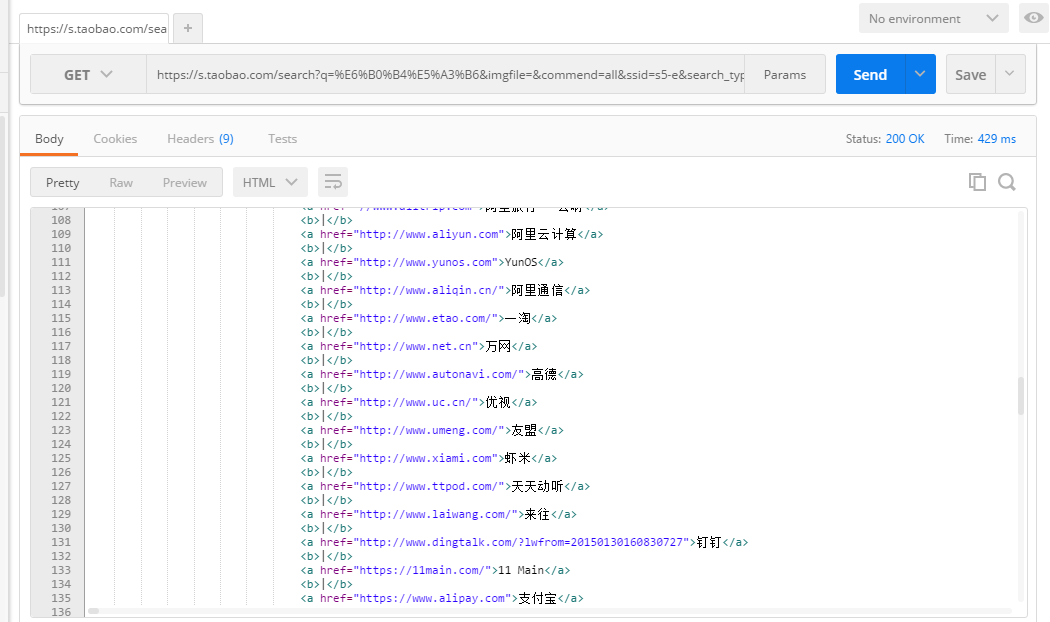

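To double-check, we can use the Chrome extension Postman (Fiddler 4 also works). Copy the URL under Network > General > Request URL, paste it into Postman's URL field, and click Send to see the HTML returned for that request. I won't paste the HTML here; it is far too long for this box.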

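Try it yourself. I could not find the product tags I wanted in the returned HTML, so I suspected that writing a Python program to scrape this page would be pointless. Still, I fetched it once more with Python to verify. The Python code is as follows: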

    import requests
    from bs4 import BeautifulSoup

    url = 'https://s.taobao.com/search?q=%E6%B0%B4%E5%A3%B6&imgfile=&commend=all&ssid=s5-e&search_type=item&sourceId=tb.index&spm=a21bo.50862.201856-taobao-item.1&ie=utf8&initiative_id=tbindexz_20160805'
    # Send a browser-like User-Agent with the request
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.21 Safari/537.36'}
    res = requests.get(url, headers=headers)
    soup = BeautifulSoup(res.text, 'html.parser')

    # Print the whole parsed page to check whether it contains any product markup
    print(soup.select('html'))

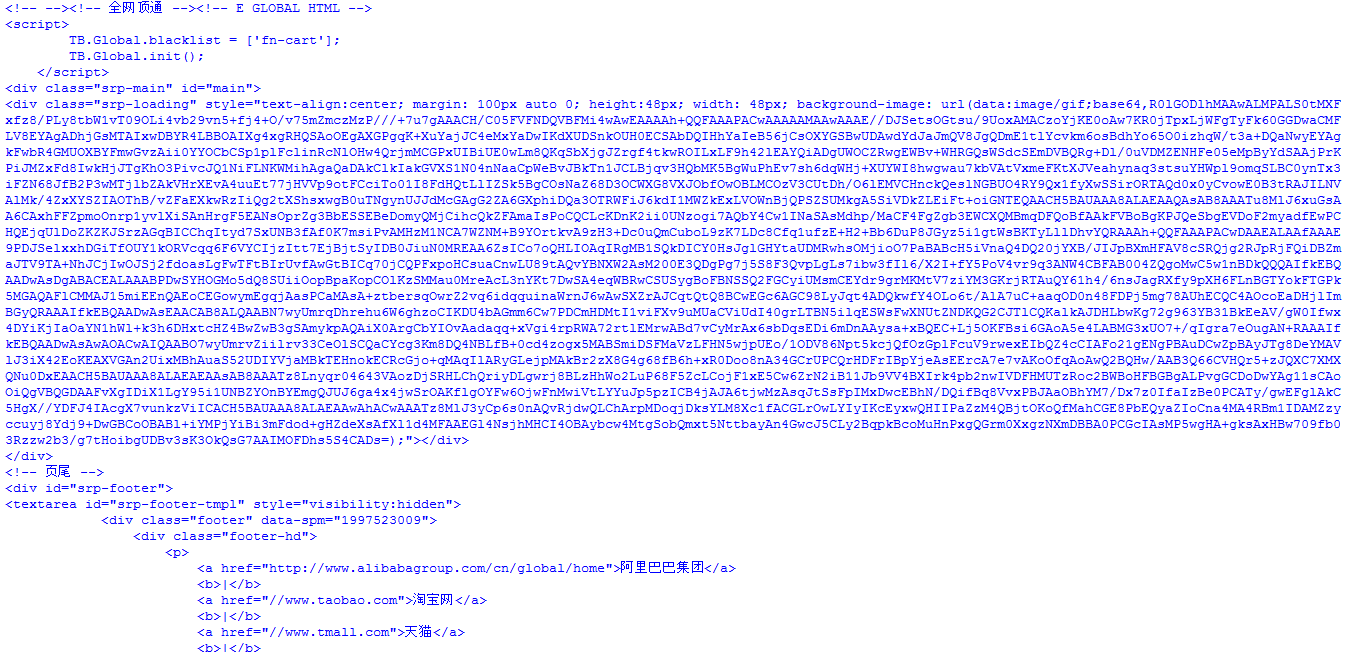

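The HTML output still contains none of the tags with the information I want. Some of the code in it did catch my interest, though, such as the seemingly "illogical" block in the middle. I have no idea what it is, so I'm pasting it here for everyone to look at.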

- <script>

- TB.Global.blacklist = ['fn-cart'];

- TB.Global.init();

- </script>

- <div class="srp-main" id="main">

- <div class="srp-loading" style="text-align:center; margin: 100px auto 0; height:48px; width: 48px; background-image: url(data:image/gif;base64,R0lGODlhMAAwALMPALS0tMXFxfz8/PLy8tbW1vT09OLi4vb29vn5+fj4+O/v75mZmczMzP///+7u7gAAACH/C05FVFNDQVBFMi4wAwEAAAAh+QQFAAAPACwAAAAAMAAwAAAE//DJSetsOGtsu/9UoxAMACzoYjKE0oAw7KR0jTpxLjWFgTyFk60GGDwaCMFLV8EYAgADhjGsMTAIxwDBYR4LBBOAIXg4xgRHQSAoOEgAXGPgqK+XuYajJC4eMxYaDwIKdXUDSnkOUH0ECSAbDQIHhYaIeB56jCsOXYGSBwUDAwdYdJaJmQV8JgQDmE1tlYcvkm6osBdhYo65O0izhqW/t3a+DQaNwyEYAgkFwbR4GMUOXBYFmwGvzAii0YYOCbCSp1plFclinRcNlOHw4QrjmMCGPxUIBiUE0wLm8QKqSbXjgJZrgf4tkwROILxLF9h42lEAYQiADgUWOCZRwgEWBv+WHRGQsWSdcSEmDVBQRg+Dl/0uVDMZENHFe05eMpByYdSAAjPrKPiJMZxFd8IwkHjJTgKhO3PivcJQ1NiFLNKWMihAgaQaDAkClkIakGVXS1N04nNaaCpWeBvJBkTn1JCLBjqv3HQbMK5BgWuPhEv7sh6dqWHj+XUYWI8hwgwau7kbVAtVxmeFKtXJVeahynaq3stsuYHWpl9omqSLBC0ynTx3iFZN68JfB2P3wMTjlbZAkVHrXEvA4uuEt77jHVVp9otFCciTo01I8FdHQtLlIZSk5BgCOsNaZ68D3OCWXG8VXJObfOwOBLMCOzV3CUtDh/O6lEMVCHnckQeslNGBUO4RY9Qx1fyXwSSirORTAQd0x0yCvowE0B3tRAJILNVAlMk/4ZxXYSZIAOThB/vZFaEXkwRzIiQg2tXShsxwgB0uTNgynUJJdMcGAgG2ZA6GXphiDQa3OTRWFiJ6kdI1MWZkExLVOWnBjQPSZSUMkgA5SiVDkZLEiFt+oiGNTEQAACH5BAUAAA8ALAEAAQAsAB8AAATu8MlJ6xuGsAA6CAxhFFZpmoOnrp1yvlXiSAnHrgF5EANsOprZg3BbESSEBeDomyQMjCihcQkZFAmaIsPoCQCLcKDnK2ii0UNzogi7AQbY4Cw1INaSAsMdhp/MaCF4FgZgb3EWCXQMBmqDFQoBfAAkFVBoBgKPJQeSbgEVDoF2myadfEwPCHQEjqUlDoZKZKJSrzAGqBICChqItyd7SxUNB3fAf0K7msiPvAMHzM1NCA7WZNM+B9YOrtkvA9zH3+Dc0uQmCuboL9zK7LDc8Cfq1ufzE+H2+Bb6DuP8JGyz5i1gtWsBKTyLllDhvYQRAAAh+QQFAAAPACwDAAEALAAfAAAE9PDJSelxxhDGiTfOUY1kORVcqq6F6VYCIjzItt7EjBjtSyIDB0JiuN0MREAA6ZsICo7oQHLIOAqIRgMB1SQkDICY0HsJglGHYtaUDMRwhsOMjioO7PaBABcH5iVnaQ4DQ20jYXB/JIJpBXmHFAV8cSRQjg2RJpRjFQiDBZmaJTV9TA+NhJCjIwOJSj2fdoasLgFwTFtBIrUvfAwGtBICq70jCQPFxpoHCsuaCnwLU89tAQvYBNXW2AsM200E3QDgPg7j5S8F3QvpLgLs7ibw3fIl6/X2I+fY5PoV4vr9q3ANW4CBFAB004ZQgoMwC5w1nBDkQQQAIfkEBQAADwAsDgABACEALAAABPDwSYHOGMo5dQ8SUiiOopBpaKopCOlKzSMMau0MreAcL3nYKt7DwSA4eqWBRwCSUSygBoFBNSSQ2FGCyiUMsmCEYdr9grMKMtV7ziYM3GKrjRTAuQY61h4/6nsJagRXfy9pXH6FLnBGTYo
kFTGPk5MGAQAFlCMMAJ15miEEnQAEoCEGowymEgqjAasPCaMAsA+ztbersqOwrZ2vq6idqquinaWrnJ6wAwSXZrAJCqtQtQ8BCwEGc6AGC98LyJqt4ADQkwfY4OLo6t/AlA7uC+aaqOD0n48FDPj5mg78AUhECQC4AOcoEaDHjlImBGyQRAAAIfkEBQAADwAsEAACAB8ALQAABN7wyUmrqDhrehu6W6ghzoCIKDU4bAGmm6Cw7PDCmHDMtI1viFXv9uMUaCViUdI40grLTBN5ilqESWsFwXNUtZNDKQG2CJTlCQKalkAJDHLbwKg72g963YB31BkEeAV/gW0Ifwx4DYiKjIaOaYN1hWl+k3h6DHxtcHZ4BwZwB3gSAmykpAQAiX0ArgCbYIOvAadaqq+xVgi4rpRWA72rtlEMrwABd7vCyMrAx6sbDqsEDi6mDnAAysa+xBQEC+Lj5OKFBsi6GAoA5e4LABMG3xUO7+/qIgra7eOugAN+RAAAIfkEBQAADwAsAwAOACwAIQAABO7wyUmrvZiilrv33CeOlSCQaCYcg3Km8DQ4NBLfB+0cd4zogx5MABSmiDSFMaVzLFHN5wjpUEo/1ODV86Npt5kcjQfOzGplFcuV9rwexEIbQZ4cCIAFo21gENgPBAuDCwZpBAyJTg8DeYMAVlJ3iX42EoKEAXVGAn2UixMBhAuaS52UDIYVjaMBkTEHnokECRcGjo+qMAqIlARyGLejpMAkBr2zX8G4g68fB6h+xR0Doo8nA34GCrUPCQrHDFrIBpYjeAsEErcA7e7vAKoOfqAoAwQ2BQHw/AAB3Q66CVHQr5+zJQXC7XMXQNu0DxEAACH5BAUAAA8ALAEAEAAsAB8AAATz8Lnyqr04643VAozDjSRHLChQriyDLgwrj8BLzHhWo2LuP68F5ZcLCojF1xE5Cw6ZrN2iB11Jb9VV4BXIrk4pb2nwIVDFHMUTzRoc2BWBoHFBGBgALPvgGCDoDwYAg11sCAoOiQgVBQGDAAFvXgIDiX1LgY95i1UNBZYOnBYEmgQJUJ6ga4x4jwSrOAKflgOYFw6OjwFnMwiVtLYYuJp5pzICB4jAJA6tjwMzAsqJtSsFpIMxDwcEBhN/DQifBq8VvxPBJAaOBhYM7/Dx7z0IfaIzBe0PCATy/gwEFglAkC5HgX//YDFJ4IAcgX7vunkzViICACH5BAUAAA8ALAEAAwAhACwAAATz8MlJ3yCp6s0nAQvRjdwQLChArpMDoqjDksYLM8Xc1fACGLrOwLYIyIKcEyxwQHIIPaZzM4QBjtOKoQfMahCGE8PbEQyaZIoCna4MA4RBm1IDAMZzyccuyj8Ydj9+DwGBCoOBABl+iYMPjYiBi3mFdod+gHZdeXsAfXl1d4MFAAEGl4NsjhMHCI4OBAybcw4MtgSobQmxt5NttbayAn4GwcJ5CLy2BqpkBcoMuHnPxgQGrm0XxgzNXmDBBA0PCGcIAsMP5wgHA+gksAxHBw709fb03Rzzw2b3/g7tHoibgUDBv3sK3OkQsG7AAIMOFDhs5S4CADs=);"></div>

- </div>

- <!-- 页尾 -->

- <div id="srp-footer">

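This is only my second post and I don't know all the rules yet, so please bear with me and point out anything that is unclear or badly formatted!

Looking forward to your replies!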

- url(data:image/gif;base64,

Isn't it written right there? It is a base64-encoded GIF image.
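This is easy to confirm by decoding the start of the data URI. The sketch below uses only the first characters of the base64 string from the page source (deliberately truncated with `...`), which is enough to read the GIF signature and the image size from the 10-byte header. The variable names are just for illustration.

```python
import base64
import re
import struct

# Start of the style attribute from the page source, truncated on purpose
css = "background-image: url(data:image/gif;base64,R0lGODlhMAAwALMPALS0...);"

match = re.search(
    r"url\(data:(?P<mime>[\w/+.-]+);base64,(?P<data>[A-Za-z0-9+/=]+)", css)
mime = match.group("mime")
payload = match.group("data")

# base64 decodes in groups of 4 characters; 16 characters give 12 bytes,
# which covers the 6-byte signature plus the width and height fields
head = base64.b64decode(payload[:16])
signature = head[:6]
width, height = struct.unpack("<HH", head[6:10])  # little-endian 16-bit values

print(mime, signature, width, height)
```

The decoded size agrees with the `height:48px; width: 48px` in the same style attribute, so the long string is just the background image of the `srp-loading` placeholder and not scrambled product data.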

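The real content is inside the <script> tags. The page content is generated dynamically by JavaScript, and the crawler you wrote only sees the static HTML that the server returns, so the product information never shows up.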
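When a page embeds its data in a script like this, one common approach is to pull the JavaScript assignment out of the raw source and parse it as JSON. The sketch below illustrates the idea on a made-up page. The variable name `page_data`, the JSON layout and the helper `extract_page_data` are all assumptions for the example, not what Taobao actually serves, and a real page may use an object literal that is not valid JSON.

```python
import json
import re

# Made-up page (an assumption): the visible <div> is only a loading
# placeholder, while the product data sits in a JavaScript assignment.
SAMPLE_HTML = """
<div class="srp-main" id="main"><div class="srp-loading"></div></div>
<script>
  page_data = {"items": [{"title": "Kettle A", "price": "59.00", "sales": 1200},
                         {"title": "Kettle B", "price": "88.00", "sales": 350}]};
</script>
"""


def extract_page_data(html, var_name="page_data"):
    """Find `var_name = {...};` in the page source and parse the object as JSON."""
    pattern = re.escape(var_name) + r"\s*=\s*(\{.*?\});\s*$"
    match = re.search(pattern, html, flags=re.DOTALL | re.MULTILINE)
    if match is None:
        return None  # the page does not contain the assignment
    return json.loads(match.group(1))


data = extract_page_data(SAMPLE_HTML)
for item in data["items"]:
    print(item["title"], item["price"], item["sales"])
```

To adapt this to a real page, look through the `<script>` blocks of the raw response for the variable that holds the product list and pass its name as `var_name`.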


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
