
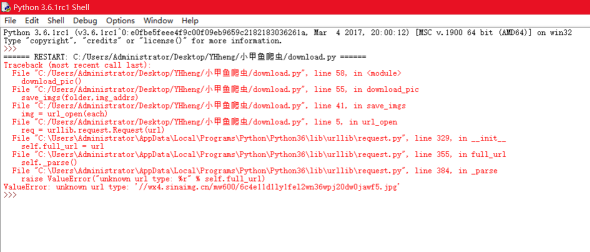

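I wrote the program below while following 小甲鱼 (XiaoJiaYu)'s Python lesson 056 video, the one that scrapes girl pictures (most of it is identical to 小甲鱼's code).

But when I run it I get the error shown in the screenshot. I'd be grateful if someone experienced could help me out.

(This is my first time posting a question, so please point out anything wrong with the format of my post.)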

```python
import os
import urllib.request


def url_open(url):
    # Build a request that carries a browser User-Agent so the site does not reject us
    req = urllib.request.Request(url)
    req.add_header("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3000.4 Safari/537.36")
    # Open the Request object, not the bare url, otherwise the header above is never sent
    response = urllib.request.urlopen(req)
    html = response.read()
    return html


def get_page(url):
    # Read the current page number that follows 'current-comment-page">['
    html = url_open(url).decode("utf-8")
    a = html.find("current-comment-page") + 23
    b = html.find("]", a)
    return html[a:b]


def find_imgs(url):
    # Collect every .jpg address found in an 'img src="..."' attribute
    html = url_open(url).decode("utf-8")
    img_addrs = []
    a = html.find("img src=")
    while a != -1:
        # Only look for ".jpg" within 256 characters of the tag start
        b = html.find(".jpg", a, a + 256)
        if b != -1:
            # Skip 'img src="' (9 characters) and keep the ".jpg" extension
            img_addrs.append(html[a + 9:b + 4])
        else:
            b = a + 9
        a = html.find("img src=", b)
    return img_addrs


def save_imgs(folder, img_addrs):
    # Files land in the current directory (download_pic has already chdir'd into folder)
    for each in img_addrs:
        filename = each.split("/")[-1]
        with open(filename, 'wb') as f:
            img = url_open(each)
            f.write(img)


def download_pic(folder='ooxx', pages=10):
    os.mkdir(folder)
    os.chdir(folder)
    url = "http://jandan.net/ooxx/"
    page_num = int(get_page(url))
    for i in range(pages):
        page_num -= 1
        page_url = url + 'page-' + str(page_num) + "#comments"
        img_addrs = find_imgs(page_url)
        save_imgs(folder, img_addrs)


if __name__ == '__main__':
    download_pic()
```
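To see where the magic number 23 in `get_page` comes from, here is a small sketch that runs the same slicing on a string instead of a downloaded page. It assumes the page marks the current page as `<span class="current-comment-page">[123]</span>`; that markup is hypothetical (the code implies it but the post does not show it), as is the helper name `get_page_from_html`. `"current-comment-page"` is 20 characters and the following `">[` adds 3, so the slice starts right at the digits.

```python
def get_page_from_html(html):
    # Hypothetical helper: same slicing as get_page, applied to an HTML string
    # 20 characters of "current-comment-page" plus 3 for '">[' = 23
    a = html.find("current-comment-page") + 23
    # The page number ends at the next "]"
    b = html.find("]", a)
    return html[a:b]


# Hypothetical fragment; the real page markup may differ
sample = '<span class="current-comment-page">[123]</span>'
print(get_page_from_html(sample))  # 123
```

If the marker is missing, `find` returns -1, so `a` becomes 22 and the slice returns unrelated text instead of raising, which means `int()` in `download_pic` would most likely be the line that fails.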

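In `save_imgs`, the image addresses (`each`) are in the form `//wx4.xxxx.xxx`, with no scheme, so you need to prepend `'http:'`. `urlopen` only recognizes them once they look like `http://wx4.xxxx.xxx`.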
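A minimal sketch of that fix, assuming the scraped addresses are protocol-relative like `//wx4.xxxx.xxx/...`. Instead of gluing on `'http:'` by hand it uses the standard-library `urllib.parse.urljoin` to resolve each address against the page URL; the helper name `absolute_url` and the sample address are hypothetical. It also shows that `urllib.request.Request` rejects a scheme-less address with `ValueError: unknown url type`, which is likely the error behind the screenshot.

```python
import urllib.parse
import urllib.request

PAGE_URL = "http://jandan.net/ooxx/"


def absolute_url(addr, base=PAGE_URL):
    # Hypothetical helper: resolve a protocol-relative address ("//host/path")
    # against the page URL so it picks up the page's scheme ("http:")
    return urllib.parse.urljoin(base, addr)


if __name__ == "__main__":
    # Hypothetical sample address in the same "//wx4...." shape
    addr = "//wx4.example.com/large/abc.jpg"
    try:
        # Without a scheme, urllib cannot tell what kind of URL this is
        urllib.request.Request(addr)
    except ValueError as e:
        print("rejected:", e)
    print(absolute_url(addr))  # http://wx4.example.com/large/abc.jpg
```

In `save_imgs` this would become `img = url_open(absolute_url(each))`. Unlike always prepending `'http:'`, `urljoin` leaves addresses that already have a scheme unchanged.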


This is the error I get. I'm a beginner and can't make sense of it...


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
