
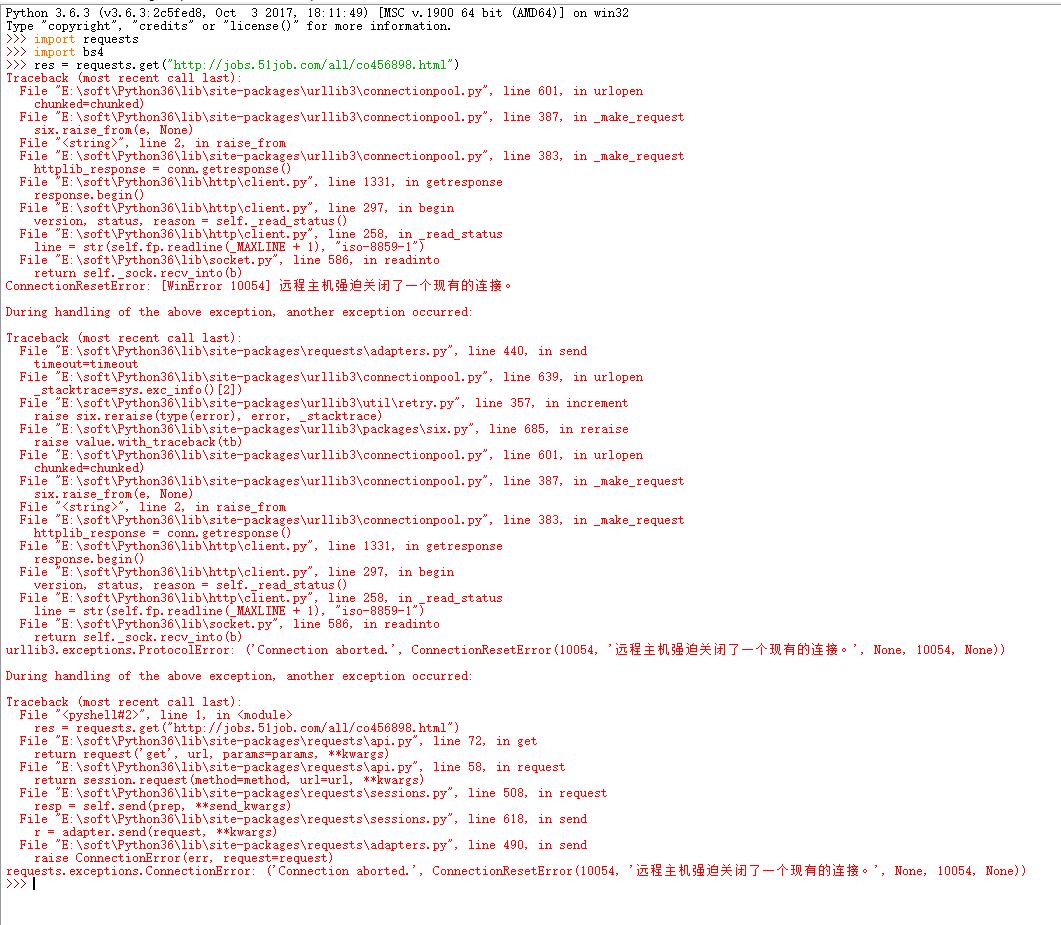

When I call `res = requests.get("http://jobs.51job.com/all/co456898.html")`

the request fails, but other URLs work without any error. Does this site have an anti-scraping mechanism?

```
Traceback (most recent call last):
  File "E:\soft\Python36\lib\site-packages\urllib3\connectionpool.py", line 601, in urlopen
    chunked=chunked)
  File "E:\soft\Python36\lib\site-packages\urllib3\connectionpool.py", line 387, in _make_request
    six.raise_from(e, None)
  File "<string>", line 2, in raise_from
  File "E:\soft\Python36\lib\site-packages\urllib3\connectionpool.py", line 383, in _make_request
    httplib_response = conn.getresponse()
  File "E:\soft\Python36\lib\http\client.py", line 1331, in getresponse
    response.begin()
  File "E:\soft\Python36\lib\http\client.py", line 297, in begin
    version, status, reason = self._read_status()
  File "E:\soft\Python36\lib\http\client.py", line 258, in _read_status
    line = str(self.fp.readline(_MAXLINE + 1), "iso-8859-1")
  File "E:\soft\Python36\lib\socket.py", line 586, in readinto
    return self._sock.recv_into(b)
ConnectionResetError: [WinError 10054] An existing connection was forcibly closed by the remote host.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "E:\soft\Python36\lib\site-packages\requests\adapters.py", line 440, in send
    timeout=timeout
  File "E:\soft\Python36\lib\site-packages\urllib3\connectionpool.py", line 639, in urlopen
    _stacktrace=sys.exc_info()[2])
  File "E:\soft\Python36\lib\site-packages\urllib3\util\retry.py", line 357, in increment
    raise six.reraise(type(error), error, _stacktrace)
  File "E:\soft\Python36\lib\site-packages\urllib3\packages\six.py", line 685, in reraise
    raise value.with_traceback(tb)
  File "E:\soft\Python36\lib\site-packages\urllib3\connectionpool.py", line 601, in urlopen
    chunked=chunked)
  File "E:\soft\Python36\lib\site-packages\urllib3\connectionpool.py", line 387, in _make_request
    six.raise_from(e, None)
  File "<string>", line 2, in raise_from
  File "E:\soft\Python36\lib\site-packages\urllib3\connectionpool.py", line 383, in _make_request
    httplib_response = conn.getresponse()
  File "E:\soft\Python36\lib\http\client.py", line 1331, in getresponse
    response.begin()
  File "E:\soft\Python36\lib\http\client.py", line 297, in begin
    version, status, reason = self._read_status()
  File "E:\soft\Python36\lib\http\client.py", line 258, in _read_status
    line = str(self.fp.readline(_MAXLINE + 1), "iso-8859-1")
  File "E:\soft\Python36\lib\socket.py", line 586, in readinto
    return self._sock.recv_into(b)
urllib3.exceptions.ProtocolError: ('Connection aborted.', ConnectionResetError(10054, 'An existing connection was forcibly closed by the remote host.', None, 10054, None))

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "<pyshell#2>", line 1, in <module>
    res = requests.get("http://jobs.51job.com/all/co456898.html")
  File "E:\soft\Python36\lib\site-packages\requests\api.py", line 72, in get
    return request('get', url, params=params, **kwargs)
  File "E:\soft\Python36\lib\site-packages\requests\api.py", line 58, in request
    return session.request(method=method, url=url, **kwargs)
  File "E:\soft\Python36\lib\site-packages\requests\sessions.py", line 508, in request
    resp = self.send(prep, **send_kwargs)
  File "E:\soft\Python36\lib\site-packages\requests\sessions.py", line 618, in send
    r = adapter.send(request, **kwargs)
  File "E:\soft\Python36\lib\site-packages\requests\adapters.py", line 490, in send
    raise ConnectionError(err, request=request)
requests.exceptions.ConnectionError: ('Connection aborted.', ConnectionResetError(10054, 'An existing connection was forcibly closed by the remote host.', None, 10054, None))
```

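```python
import requests

url = "http://jobs.51job.com/all/co456898.html"
# Send a browser User-Agent instead of the default python-requests one
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:54.0) Gecko/20100101 Firefox/54.0'
}
response = requests.get(url, headers=headers)
```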

When you crawl with requests, the default User-Agent it sends is `python-requests/x.xx.x` (x.xx.x being your installed version).
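To see exactly which User-Agent your own installation sends, you can ask requests directly. This runs offline and does not contact any site; `requests.utils.default_user_agent()` builds the string from the installed requests version, which is why the number differs between machines.

```python
import requests

# The value requests uses for User-Agent when you don't supply one,
# i.e. "python-requests/<installed version>"
default_ua = requests.utils.default_user_agent()
print(default_ua)

# A fresh Session carries the same value in its default headers
session = requests.Session()
print(session.headers["User-Agent"])
```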

When the server sees a request carrying this or a similar header, it refuses access outright.
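On the server side, this kind of filtering can be pictured as a simple check on the User-Agent header. The function below is purely hypothetical: the name `should_block` and the prefix list are illustrative assumptions, not 51job's actual rules, and a real site might reset the connection (as in the traceback above) rather than return an error page.

```python
# Hypothetical blocklist of User-Agent prefixes used by common HTTP libraries
# (all lowercase, because the check below lowercases the incoming value)
BLOCKED_PREFIXES = ("python-requests/", "python-urllib/", "curl/")


def should_block(user_agent):
    """Return True if this hypothetical server would refuse the User-Agent."""
    if not user_agent:
        # A missing or empty User-Agent is treated as suspicious as well
        return True
    return user_agent.lower().startswith(BLOCKED_PREFIXES)


print(should_block("python-requests/2.18.4"))  # True
print(should_block("Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:54.0) "
                   "Gecko/20100101 Firefox/54.0"))  # False
```

Because the check only looks at a header the client controls, replacing that header is enough to get past it.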

The fix is simply to spoof the User-Agent, i.e. send a browser's User-Agent string instead.
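If you fetch many pages, it may be more convenient to set the spoofed User-Agent once on a `requests.Session` instead of passing `headers=` on every call. The sketch below only prepares the request and inspects its headers, so it shows what would be sent without contacting the server. The Firefox string is the one from the answer; whether it is enough for this particular site is not guaranteed.

```python
import requests

URL = "http://jobs.51job.com/all/co456898.html"
BROWSER_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:54.0) "
              "Gecko/20100101 Firefox/54.0")

session = requests.Session()
# Replace the default python-requests User-Agent for every request on this session
session.headers.update({"User-Agent": BROWSER_UA})

# Build the request without sending it, to check which headers would go out
prepared = session.prepare_request(requests.Request("GET", URL))
print(prepared.headers["User-Agent"])

# To actually fetch the page:
# response = session.get(URL, timeout=10)
```

A per-request `headers=` argument still overrides the session value for that single call, so both styles can be mixed.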

For example, `'User-Agent': 'Baiduspider'` is the header information used by Baidu's crawler.


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)