
Last edited by Stubborn on 2019-2-12 17:18

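Update: the website has changed, and the get_page_name function can no longer read the maximum page count. It needs fixing, so give it a go, fellow forum members!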
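One possible repair for get_page_name is to stop relying on a fixed position such as `span[10]` and instead take the largest number shown in any `<span>`. This assumes the pagination links still render their page numbers as `<span>` text, which may not hold for the current site. The sketch uses the standard library's `html.parser` instead of BeautifulSoup so it runs on its own; `SpanTextCollector`, `max_page_number` and the sample markup are hypothetical, not the site's real HTML.

```python
from html.parser import HTMLParser


class SpanTextCollector(HTMLParser):
    """Collect the text of every <span> (stdlib stand-in for soup.find_all('span'))."""

    def __init__(self):
        super().__init__()
        self.open = []   # indices into self.texts of the <span> tags still open
        self.texts = []  # one text entry per <span>

    def handle_starttag(self, tag, attrs):
        if tag == "span":
            self.open.append(len(self.texts))
            self.texts.append("")

    def handle_endtag(self, tag):
        if tag == "span" and self.open:
            self.open.pop()

    def handle_data(self, data):
        if self.open:  # text belongs to the innermost open <span>
            self.texts[self.open[-1]] += data


def max_page_number(html):
    """Return the largest purely numeric <span> text, or None if there is none."""
    parser = SpanTextCollector()
    parser.feed(html)
    parser.close()
    numbers = [int(t.strip()) for t in parser.texts if t.strip().isdecimal()]
    return max(numbers) if numbers else None


# Hypothetical markup: a meta line plus pagination links.
sample = """
<div class="main-meta"><span>Category: <a href="#">Sexy</a></span>
<span>Published 2019-02-12</span></div>
<div class="pagenavi">
  <a href="/1"><span>1</span></a><a href="/2"><span>2</span></a>
  <span class="dots">...</span><a href="/46"><span>46</span></a>
  <a href="/2"><span>Next &raquo;</span></a>
</div>
"""
print(max_page_number(sample))  # 46
```

Taking the maximum rather than an index keeps working if the site adds or removes unrelated `<span>` elements, as long as none of them contains a bare number larger than the last page.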

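Put the .py file in the directory where you want the images saved, run it, and it will start grabbing images. If you are just starting to learn web scraping, have a look; the comments are detailed. This is for learning reference only. Please don't download the images to enjoy them, it's bad for your health.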
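The tip about where to put the .py file works because the script saves images under a relative folder named after the gallery title, and Python resolves relative paths against the current working directory. That directory matches the script's folder only when Python is started from there. If you would rather always save next to the script, a small helper can build absolute paths from the script's own path; `download_root` and `gallery_folder` are hypothetical names, not part of the original code.

```python
import os


def download_root(script_path):
    """Absolute path of the folder that contains the script (hypothetical helper)."""
    return os.path.dirname(os.path.abspath(script_path))


def gallery_folder(script_path, name):
    """Absolute path of a gallery folder placed next to the script."""
    return os.path.join(download_root(script_path), name)


# Relative paths are resolved against this directory, not the script's folder:
print("current working directory:", os.getcwd())
print("gallery folder for a script at downloads/scrape.py:",
      gallery_folder("downloads/scrape.py", "example"))
```

Inside the scraper you would pass `__file__` as `script_path` wherever the gallery name is used as a folder path.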

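Required libraries: bs4, lxml, requests and fake_useragent (their pip package names are `beautifulsoup4`, `lxml`, `requests` and `fake-useragent`).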
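Because the import names and the pip package names differ for two of these libraries, a quick check before running the scraper can tell you exactly what to install. This is a sketch using `importlib.util.find_spec`, which returns `None` for a top-level module that cannot be found; the `REQUIRED` mapping and `missing_packages` are hypothetical names.

```python
import importlib.util

# Import name -> package name on PyPI (they differ for bs4 and fake_useragent).
REQUIRED = {
    "requests": "requests",
    "bs4": "beautifulsoup4",
    "lxml": "lxml",
    "fake_useragent": "fake-useragent",
}


def missing_packages(required=REQUIRED):
    """Return the pip names of required libraries that cannot be imported."""
    return [pip_name for module, pip_name in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing, install with: pip install " + " ".join(missing))
    else:
        print("All required libraries are installed.")
```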

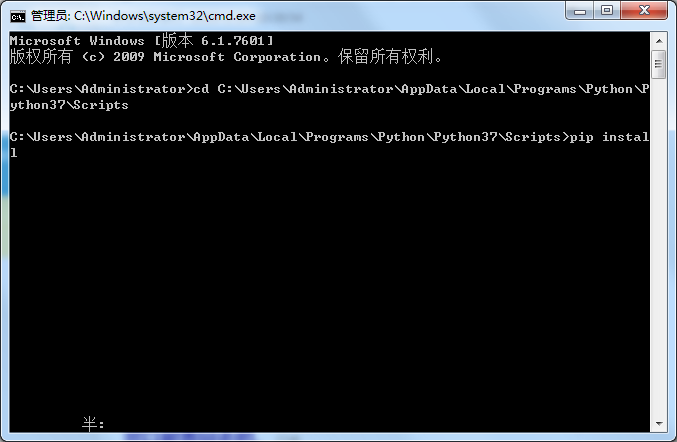

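To install the libraries, open a CMD window, type `cd` followed by a space and the path of Python's Scripts directory (as in the screenshot), then run `pip install <library name>`. For example, `pip install lxml` installs the lxml parser.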
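An alternative to changing into the Scripts directory is to run pip as a module of the interpreter itself, which on the command line looks like `python -m pip install lxml`. The sketch below does the same from Python, using `sys.executable` so the packages go into the interpreter that will later run the scraper; `pip_install_command` and `install` are hypothetical helpers.

```python
import subprocess
import sys


def pip_install_command(packages):
    """Build a pip command bound to the interpreter running this code."""
    # "-m pip" selects the pip belonging to sys.executable, so no cd is needed.
    return [sys.executable, "-m", "pip", "install", *packages]


def install(packages):
    """Run pip; raises subprocess.CalledProcessError if installation fails."""
    subprocess.check_call(pip_install_command(packages))


if __name__ == "__main__":
    print(pip_install_command(["lxml"]))
    # Uncomment to actually install everything the scraper needs:
    # install(["requests", "beautifulsoup4", "lxml", "fake-useragent"])
```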

```python
# -*- coding: utf-8 -*-
import os

import requests
from bs4 import BeautifulSoup
from fake_useragent import UserAgent

ua = UserAgent()

# Headers for requests to the HTML pages
Hostreferer = {
    'User-Agent': ua.random,
    'Referer': 'http://www.mzitu.com'
}

# Headers for requests to the image files
Picreferer = {
    'User-Agent': ua.random,
    'Referer': 'http://i.meizitu.net'
}


def get_page_name(url):
    """Return the gallery's maximum page number and its title."""
    html = get_html(url)
    soup = BeautifulSoup(html, 'lxml')
    # The fixed index span[10] stopped working after the site update.
    # Assumption: page numbers are still rendered as <span> text, so take the
    # largest purely numeric <span> instead (raises ValueError if none is found).
    numbers = [int(s.text) for s in soup.find_all('span') if s.text.strip().isdecimal()]
    title = soup.find('h2', class_="main-title")
    return max(numbers), title.text


def get_html(url):
    """Request a web page and return its source code."""
    page_data = requests.get(url, headers=Hostreferer, timeout=10)
    page_data.raise_for_status()
    return page_data.text


def get_img_url(url, name):
    """Return the download URL of the image whose alt text is the gallery title."""
    html = get_html(url)
    soup = BeautifulSoup(html, 'lxml')
    img_url = soup.find('img', alt=name)
    return img_url['src']


def save_img(img_url, count, name):
    """Download one image and save it as <name>/<count>.jpg."""
    req = requests.get(img_url, headers=Picreferer, timeout=10)
    req.raise_for_status()  # don't save an error page as a .jpg
    with open(os.path.join(name, str(count) + '.jpg'), 'wb') as f:
        f.write(req.content)


def get_Atlas_dict(img_link):
    """Return the gallery URLs listed on one index page (a list, despite the name)."""
    soup_data = BeautifulSoup(get_html(img_link), 'html.parser')
    Atlas_dict = []
    for span in soup_data.find_all("span"):
        link = span.a
        if link is None or not link.get("href"):
            continue
        # The first page also links to the site's home page via a "妹子图"
        # link; skip it so only gallery URLs remain.
        if link.text == "妹子图":
            continue
        Atlas_dict.append(link["href"])
    return Atlas_dict


if __name__ == "__main__":
    pages = int(input("Number of index pages to download (24 galleries per page): "))
    for each in range(1, pages + 1):
        url = "https://www.mzitu.com/page/" + str(each) + "/"
        for atlas_url in get_Atlas_dict(url):
            page, name = get_page_name(atlas_url)
            print("Downloading gallery {} ({} images)".format(name, page))
            os.makedirs(name, exist_ok=True)  # no crash if the folder already exists
            for i in range(1, page + 1):
                img_url = get_img_url(atlas_url + "/" + str(i), name)
                save_img(img_url, i, name)
                print('Saved image ' + str(i))
            print(name + " gallery download complete")
```
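The script uses the gallery title directly as a folder name. `os.mkdir(name)` raises `FileExistsError` when the folder is left over from an earlier run, and some form of `OSError` when the title contains characters such as `?`, `:` or `/` that are not valid in a folder name (Windows forbids several of them). A minimal sketch of cleaning the title first; `safe_folder_name` is a hypothetical helper, and Windows reserved device names such as `CON` or `NUL` are not handled.

```python
import re

# Characters Windows does not allow in file or folder names, plus control codes.
_INVALID = re.compile(r'[<>:"/\\|?*\x00-\x1f]')


def safe_folder_name(title, replacement="_"):
    """Turn a gallery title into a folder name accepted on Windows and Linux."""
    cleaned = _INVALID.sub(replacement, title).strip()
    # Windows also rejects names that end in a dot or a space.
    cleaned = cleaned.rstrip(". ")
    return cleaned or "untitled"


print(safe_folder_name('Title: part 1/2?'))  # Title_ part 1_2_
```

In the main loop this would become `os.makedirs(safe_folder_name(name), exist_ok=True)`. Keep passing the original title to `get_img_url`, which matches it against the image's `alt` text, and use the cleaned name only where it serves as a path.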
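get_Atlas_dict drops the home-page link by comparing its text with "妹子图", which the original comment itself calls a workaround. Another option is to keep only links whose path looks like a gallery page. The sketch assumes gallery URLs have a purely numeric path such as `/123456`, which you should verify against the live site; `gallery_links` is a hypothetical helper and the sample URLs are made up. It also removes duplicates while preserving order.

```python
import re
from urllib.parse import urlparse

# Assumption: gallery pages have a purely numeric path such as /123456.
_GALLERY_PATH = re.compile(r"^/\d+/?$")


def gallery_links(hrefs):
    """Keep hrefs that look like gallery pages, dropping duplicates but keeping order."""
    seen = set()
    result = []
    for href in hrefs:
        if not href or not _GALLERY_PATH.match(urlparse(href).path):
            continue
        if href not in seen:
            seen.add(href)
            result.append(href)
    return result


links = [
    "https://www.mzitu.com/",            # home-page link, filtered out
    "https://www.mzitu.com/123456",
    None,                                # an <a> without an href
    "https://www.mzitu.com/123456",      # duplicate
    "https://www.mzitu.com/tag/example/",
    "https://www.mzitu.com/654321",
]
print(gallery_links(links))
# ['https://www.mzitu.com/123456', 'https://www.mzitu.com/654321']
```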
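save_img names files `1.jpg`, `2.jpg`, ..., `10.jpg`, which many file listings sort as `1, 10, 2`, and a rerun downloads every image again. A sketch of zero-padded names plus a check that skips files already on disk, so an interrupted run can resume; `image_path` and `needs_download` are hypothetical helpers. The padding width is a minimum, so galleries with 1000 or more images simply get longer names.

```python
import os
import tempfile


def image_path(folder, count, width=3):
    """Zero-padded file path, so 002.jpg sorts before 010.jpg."""
    return os.path.join(folder, f"{count:0{width}d}.jpg")


def needs_download(path):
    """True unless the file already exists and is non-empty."""
    return not (os.path.isfile(path) and os.path.getsize(path) > 0)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as folder:
        path = image_path(folder, 7)
        print(os.path.basename(path), needs_download(path))  # 007.jpg True
        with open(path, "wb") as f:
            f.write(b"\xff\xd8")  # pretend an earlier run saved this image
        print(needs_download(path))  # False
```

In save_img you would write to `image_path(name, count)` and return early when `needs_download` reports that the file is already there.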

( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)