
Last edited by 小伤口 on 2021-3-11 20:46

[b]========== Avatar Collection ==========[/b]

[b]Preface[/b]

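[b]When you scrape images, grabbing a whole batch usually gives you far more than you will ever use (pictures of girls excepted), and for a single image you might as well just download it by hand. That is where the idea of an "online preview" came from: the program first scrapes a batch of images and shows them in its window, and when you exit, only the images you selected are kept.

The program is just a simple image scraper, but previewing the images live with pygame, scraping by category through a pygame interface, and implementing the "online preview" were still a bit of a challenge (for me personally).[/b]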

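Third-party libraries used

[b]requests: fetches the web pages

Install:

pygame: the user interface

Install: pip install pygame-1.9.6-cp38-cp38-win_amd64.whl

BeautifulSoup: extracts the image download URLs

Install:

pillow: shrinks and saves the images

Install:[/b]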
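A quick way to see which of these libraries are still missing before running the program is sketched below. It is only an illustration: `REQUIRED` and `missing_packages` are names made up here, and the package names are the usual PyPI ones rather than anything stated in this post. `lxml` is included because the scraping code passes `features="lxml"` to BeautifulSoup.

```python
import importlib.util

# Import name -> usual PyPI package name (assumed, not taken from the post).
REQUIRED = {
    "requests": "requests",
    "pygame": "pygame",
    "bs4": "beautifulsoup4",
    "PIL": "pillow",
    "lxml": "lxml",
}


def missing_packages(required=REQUIRED):
    """Return the PyPI names of the packages whose module cannot be found."""
    return [pip_name for module, pip_name in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages; try: pip install " + " ".join(missing))
    else:
        print("All required packages are available.")
```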

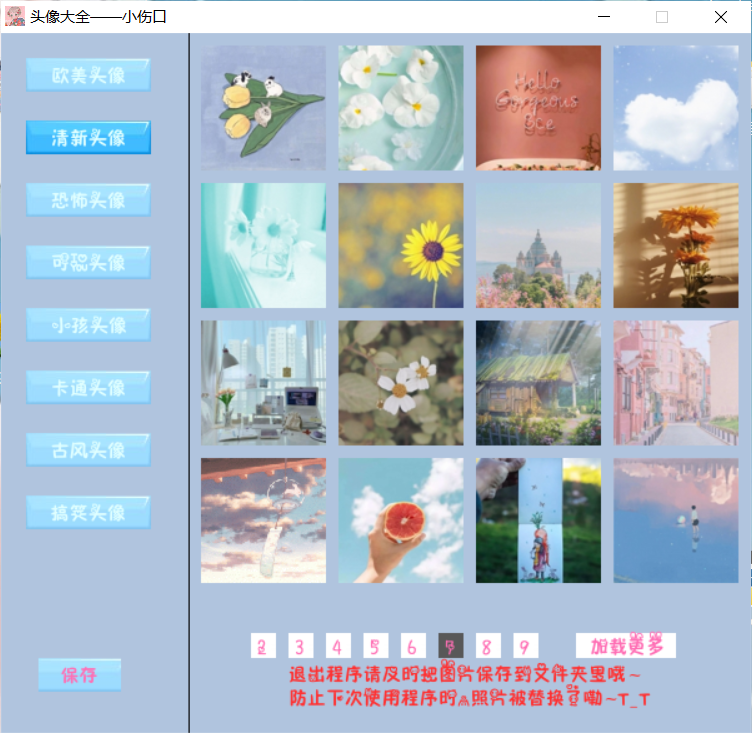

Program interface

How to use

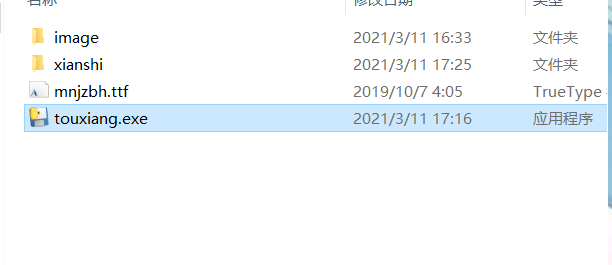

Running the exe file

1 Open the dist folder

2 [b]Find touxiang.exe and double-click it to run[/b]

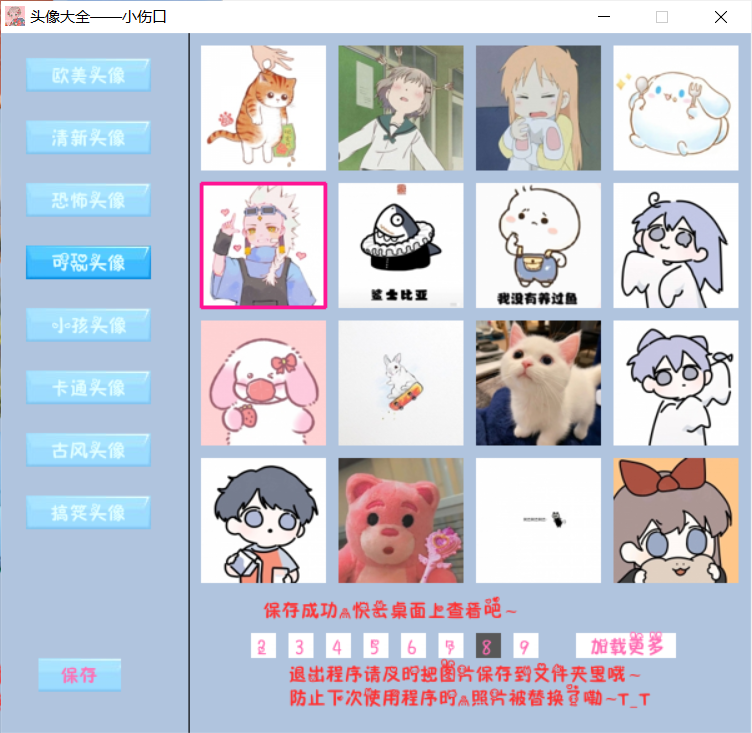

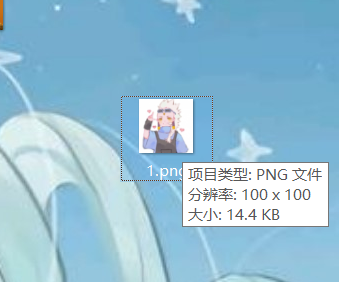

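3 [b]Choose a category you like. To save an image you want, click it with the mouse; when a red border appears around the image, click Save. Once the program reports that the save succeeded, go and check your desktop~[/b]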
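The click-to-select behaviour in step 3 could be tracked roughly as in the sketch below. This is not the program's actual code: the 4 x 4 grid, the cell size, the gap, the origin, and the names `cell_at` and `toggle` are all assumptions made for illustration, and whether the real program lets a second click deselect an image is also a guess.

```python
# Hypothetical layout: 16 thumbnails in a 4 x 4 grid.
# None of these numbers come from the original program.
COLS, ROWS = 4, 4
CELL = 100          # thumbnail size in pixels
GAP = 10            # spacing between thumbnails
ORIGIN = (20, 20)   # top-left corner of the grid


def cell_at(pos):
    """Return the index (0-15) of the thumbnail under pos, or None."""
    x, y = pos[0] - ORIGIN[0], pos[1] - ORIGIN[1]
    if x < 0 or y < 0:
        return None
    col, off_x = divmod(x, CELL + GAP)
    row, off_y = divmod(y, CELL + GAP)
    if col >= COLS or row >= ROWS or off_x >= CELL or off_y >= CELL:
        return None  # outside the grid, or inside a gap between thumbnails
    return row * COLS + col


def toggle(selected, index):
    """Select the thumbnail if it is unselected, deselect it otherwise."""
    if index is None:
        return
    if index in selected:
        selected.remove(index)
    else:
        selected.add(index)


selected = set()
toggle(selected, cell_at((25, 25)))    # first thumbnail
toggle(selected, cell_at((135, 25)))   # second thumbnail
toggle(selected, cell_at((25, 25)))    # clicking again deselects
print(sorted(selected))  # prints [1]
```

In a pygame event loop, `event.pos` from a `pygame.MOUSEBUTTONDOWN` event would be passed to `cell_at`, and a red rectangle could be drawn around every index in `selected` with `pygame.draw.rect`.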

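Running from source

1 Open the tou_xiang folder and find touxiang.py

2 Right-click the file and open it

3 Same as step 3 for the exe file

Note: before you exit the program, remember to move the saved images into a folder of your own~ Otherwise they may get replaced the next time you use the program T_T

Here is the image-scraping part of the code, with detailed comments~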

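[code]
import requests
from bs4 import BeautifulSoup
from PIL import Image as ims  # assumed: the post calls ims.open without showing this import


# Fetch a page
def huo_qu(page_url):
    # Send a browser-like User-Agent header with the request
    headers = {
        'user-agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) '
                      'AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.72 '
                      'Mobile Safari/537.36 Edg/89.0.774.45'}
    res = requests.get(page_url, headers=headers)
    return res
[/code]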

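[code]
# Find the image download links and collect them
def xun_zhao(res):
    # Parse the page with BeautifulSoup (the "lxml" parser needs the lxml package)
    soup = BeautifulSoup(res.text, features="lxml")
    img_addrs = []
    # Look at every <img> tag
    for link in soup.find_all('img'):
        src = link.get('src')
        # Keep each src once; skip tags that have no src attribute
        if src and src not in img_addrs:
            img_addrs.append(src)
        # Stop once 16 links have been collected
        if len(img_addrs) >= 16:
            break
    return img_addrs
[/code]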
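The link-collection step can also be tried without any third-party parser. The sketch below swaps BeautifulSoup for the standard library's `html.parser` and adds `urljoin` so that relative `src` values become absolute URLs; that second part is an addition, not something the code above does. `ImgSrcCollector`, `collect_img_urls`, and the sample HTML are made up for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImgSrcCollector(HTMLParser):
    """Collect unique <img src> values, stopping at a fixed limit."""

    def __init__(self, base_url, limit=16):
        super().__init__()
        self.base_url = base_url
        self.limit = limit
        self.urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "img" or len(self.urls) >= self.limit:
            return
        src = dict(attrs).get("src")
        if not src:
            return  # <img> without a usable src
        url = urljoin(self.base_url, src)  # make relative paths absolute
        if url not in self.urls:
            self.urls.append(url)


def collect_img_urls(html, base_url, limit=16):
    parser = ImgSrcCollector(base_url, limit)
    parser.feed(html)
    return parser.urls


sample = """
<img src="/a.jpg"><img src="/a.jpg"><img alt="no source">
<img src="http://example.com/b.png">
"""
print(collect_img_urls(sample, "http://example.com/list/"))
# prints ['http://example.com/a.jpg', 'http://example.com/b.png']
```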

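[code]
dizhi = []     # filenames of every downloaded image (assumed to be a module-level list)
zai_xian = []  # paths of the preview thumbnails (assumed to be a module-level list)


# Save the images
def bao_cun(img_addrs):
    if not img_addrs:
        return
    # Download each image into the current directory
    for each in img_addrs:
        filename = each.split('/')[-1]
        r = requests.get(each)
        with open(filename, 'wb') as f:
            f.write(r.content)
        dizhi.append(filename)
    # After the last download, make a preview (at most 100x100) of every
    # downloaded image. Previews are written to xianshi/0.png ... xianshi/15.png
    # (the xianshi folder must already exist); the numbering wraps after 16.
    for caiqu, sd in enumerate(dizhi):
        im1 = ims.open(sd)
        im2 = im1.copy()
        im2.thumbnail((100, 100))
        baga = 'xianshi/' + str(caiqu % 16) + '.png'
        zai_xian.append(baga)
        im2.save(baga)
[/code]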
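Two small details of the saving step are easy to try in isolation: turning an image URL into a local filename, and reusing the 16 preview slots. The sketch below is a hedged variation rather than the author's code. `filename_from_url` and `preview_path` are hypothetical helpers; unlike `each.split('/')[-1]`, the first one drops any `?query` part, which would otherwise end up in the filename.

```python
import posixpath
from urllib.parse import urlsplit, unquote


def filename_from_url(url, default="image.jpg"):
    """Return the last path component of url, ignoring ?query and #fragment."""
    path = unquote(urlsplit(url).path)
    name = posixpath.basename(path)
    return name or default


def preview_path(index, slots=16, folder="xianshi"):
    """Path of the preview thumbnail for the index-th image, reusing the slots."""
    return f"{folder}/{index % slots}.png"


print(filename_from_url("http://example.com/up/a1.jpg?size=big"))  # prints a1.jpg
print(filename_from_url("http://example.com/up/"))                  # prints image.jpg
print(preview_path(17))                                             # prints xianshi/1.png
```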

[code]
# Main entry point
def download_tx(temp, pages):
    # Category name -> path of that category on the site
    fenglei = {'欧美头像': 'oumei/', '清新头像': 'xiaoqingxin/', '恐怖头像': 'kongbu/',
               '可爱头像': 'keai/', '小孩头像': 'xiaohai/', '卡通头像': 'katong/',
               '古风头像': 'gufeng/', '搞笑头像': 'gaoxiao/'}
    url = 'http://www.imeitou.com/' + fenglei[temp]
    # The first page of a category has a different URL from the later pages
    if pages == 1:
        page_url = url
    else:
        page_url = url + 'index_' + str(pages) + '.html'  # build the full URL
    res = huo_qu(page_url)
    img_addrs = xun_zhao(res)
    bao_cun(img_addrs)
[/code]
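The page-URL rule used in `download_tx` (the first page is the bare category URL, later pages add `index_N.html`) can be pulled out into a small function and checked without touching the network. `build_page_url`, `BASE_URL`, and the `page < 1` check are illustrative additions, not part of the original program.

```python
BASE_URL = "http://www.imeitou.com/"


def build_page_url(category_path, page):
    """Return the listing URL for a category path such as 'keai/' and a 1-based page."""
    if page < 1:
        raise ValueError("page must be >= 1")
    url = BASE_URL + category_path
    if page == 1:
        return url  # the first page has no index_N.html suffix
    return f"{url}index_{page}.html"


print(build_page_url("keai/", 1))  # prints http://www.imeitou.com/keai/
print(build_page_url("keai/", 3))  # prints http://www.imeitou.com/keai/index_3.html
```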

exe file:

Source code:

Go and set an avatar you like~

If you like it, please give it a rating


( 粤ICP备18085999号-1 | 粤公网安备 44051102000585号)
