
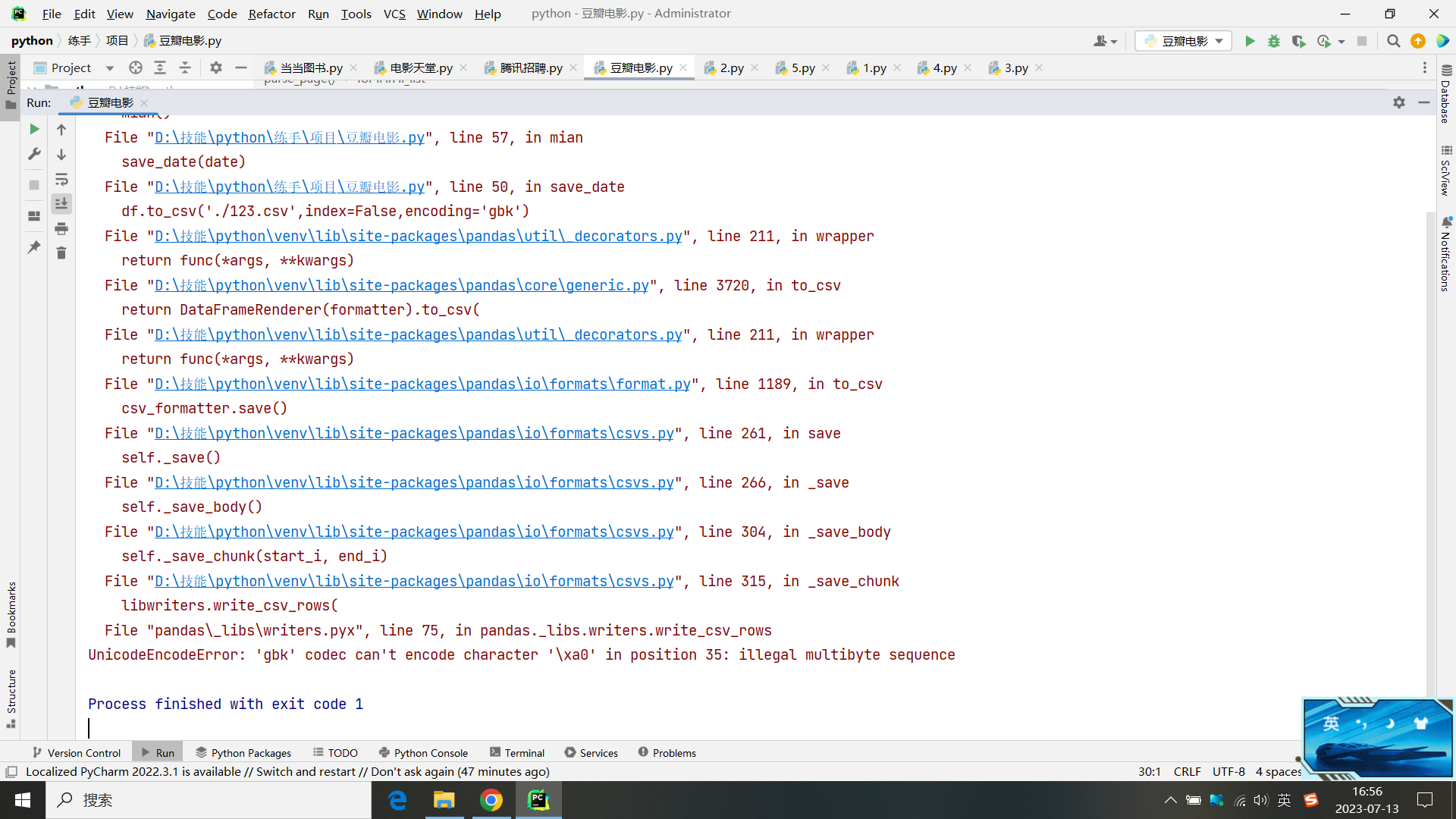

Could someone help? The content I scraped contains some special spaces. How do I remove them and save the result to a CSV?
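A first step is to find out which "special spaces" these actually are. A minimal diagnostic sketch follows. It lists every whitespace character other than the plain ASCII space, with its code point and Unicode name. The helper name `find_special_spaces` and the sample string are hypothetical; the sample only loosely resembles a Douban director/cast line.

```python
import unicodedata


def find_special_spaces(text):
    """Return (index, code point, Unicode name) for every whitespace
    character in text other than the ordinary ASCII space."""
    found = []
    for i, ch in enumerate(text):
        if ch.isspace() and ch != ' ':
            found.append((i, f'U+{ord(ch):04X}', unicodedata.name(ch, 'UNKNOWN')))
    return found


# Hypothetical sample field containing three no-break spaces (U+00A0).
sample = '导演: 某导演\xa0\xa0\xa0主演: 某演员'
for item in find_special_spaces(sample):
    print(item)
```

On scraped HTML this usually turns up U+00A0 (NO-BREAK SPACE, from `&nbsp;`) and sometimes U+3000 (IDEOGRAPHIC SPACE). Knowing the exact characters tells you what to replace.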

```python
import logging

import pandas as pd
import requests
from bs4 import BeautifulSoup

logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s: %(message)s')

index_url = 'https://movie.douban.com/top250?start={}&filter='
header = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"
}
index_page = 2  # number of pages to crawl (25 movies per page)


# Send the request; return the page HTML, or None on failure
def scrape_url(url):
    logging.info(f'Scraping {url}')
    try:
        response = requests.get(url=url, headers=header, timeout=10)
        if response.status_code == 200:
            return response.text
        logging.error(f'Status code {response.status_code}')
    except requests.RequestException:
        logging.error(f'Error while scraping {url}')
    return None


# Build the URL for a page number and fetch it
def page_url(page):
    url = index_url.format(page * 25)
    return scrape_url(url)


# Parse the movie entries on one page
def parse_page(html):
    data = []
    soup = BeautifulSoup(html, 'lxml')
    # Each movie is one <li> in the grid
    li_list = soup.select('.article .grid_view li')
    for li in li_list:
        title = li.select('.hd span')[0].string
        strings = li.select('.bd p')[0].get_text().strip()
        score = li.select('.rating_num')[0].string
        data.append({'title': title, 'strings': strings, 'score': score})
    return data


def save_data(data):
    df = pd.DataFrame(data)
    df.to_csv('./123.csv', index=False, encoding='gbk')


def main():
    data = []
    for page in range(index_page):  # pages 0 .. index_page-1
        html = page_url(page)
        if html:
            data.extend(parse_page(html))
    save_data(data)  # write once, after all pages are collected
    print(data)


if __name__ == '__main__':
    main()
```

This is the error message.
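The error message itself did not survive in this copy of the post. Assuming it is the common `UnicodeEncodeError: 'gbk' codec can't encode character '\xa0'`, the sketch below reproduces it. The GBK codec has no mapping for U+00A0, so writing such text with `encoding='gbk'` fails. The sample string is hypothetical.

```python
# Hypothetical sample resembling one scraped field: CJK text plus U+00A0.
text = '导演: 某导演\xa0\xa0\xa0主演: 某演员'

# GBK has no code for U+00A0 (no-break space), so this raises
# UnicodeEncodeError, the same kind of failure to_csv(encoding='gbk') hits.
try:
    text.encode('gbk')
    gbk_ok = True
except UnicodeEncodeError as exc:
    gbk_ok = False
    print(exc)

# UTF-8 and GB18030 can encode every Unicode character, so both succeed,
# but the no-break spaces are kept, not removed.
utf8_sig_bytes = text.encode('utf-8-sig')  # utf-8-sig prepends a BOM
gb18030_bytes = text.encode('gb18030')

# Replacing the no-break spaces first makes the text GBK-safe.
gbk_bytes = text.replace('\xa0', ' ').encode('gbk')
```

So there are two separate fixes. You can switch to an encoding that can represent U+00A0, or you can remove or replace the character before writing.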

What about this?

```python
import logging

import pandas as pd
import requests
from bs4 import BeautifulSoup

logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s: %(message)s')

index_url = 'https://movie.douban.com/top250?start={}&filter='
header = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"
}
index_page = 2  # number of pages to crawl (25 movies per page)


# Send the request; return the page HTML, or None on failure
def scrape_url(url):
    logging.info(f'Scraping {url}')
    try:
        response = requests.get(url=url, headers=header, timeout=10)
        if response.status_code == 200:
            return response.text
        logging.error(f'Status code {response.status_code}')
    except requests.RequestException:
        logging.error(f'Error while scraping {url}')
    return None


# Build the URL for a page number and fetch it
def page_url(page):
    url = index_url.format(page * 25)
    return scrape_url(url)


# Parse the movie entries on one page
def parse_page(html):
    data = []
    soup = BeautifulSoup(html, 'lxml')
    # Each movie is one <li> in the grid
    li_list = soup.select('.article .grid_view li')
    for li in li_list:
        title = li.select('.hd span')[0].string
        strings = li.select('.bd p')[0].get_text().strip()
        score = li.select('.rating_num')[0].string
        data.append({'title': title, 'strings': strings, 'score': score})
    return data


def save_data(data):
    df = pd.DataFrame(data)
    # utf-8-sig can encode U+00A0; the BOM helps Excel detect UTF-8
    df.to_csv('./123.csv', index=False, encoding='utf-8-sig')


def main():
    data = []
    for page in range(index_page):  # pages 0 .. index_page-1
        html = page_url(page)
        if html:
            data.extend(parse_page(html))
    save_data(data)  # write once, after all pages are collected
    print(data)


if __name__ == '__main__':
    main()
```
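Writing with `utf-8-sig` avoids the encoding error, but the special spaces are still in the CSV. To actually remove them, you can clean each text field before saving. This sketch assumes the special characters are Unicode whitespace such as U+00A0 or U+3000. In Python 3, `\s` in a `str` regex matches both, along with newlines. The helper names `clean_text` and `clean_rows` and the sample row are hypothetical. The standard `csv` module stands in for pandas here only so the example runs on its own.

```python
import csv
import io
import re


def clean_text(text):
    """Collapse every run of Unicode whitespace (including U+00A0 no-break
    spaces, U+3000 ideographic spaces and newlines) into one ASCII space."""
    return re.sub(r'\s+', ' ', text).strip()


def clean_rows(rows):
    """Apply clean_text to every string value in a list of dicts;
    non-string values (e.g. None) are passed through unchanged."""
    return [
        {key: clean_text(value) if isinstance(value, str) else value
         for key, value in row.items()}
        for row in rows
    ]


# Hypothetical row shaped like the dicts that parse_page() builds.
rows = [{'title': '示例电影',
         'strings': '导演: 某导演\xa0\xa0\xa0主演: 某演员\n   1994\xa0/\xa0美国',
         'score': '9.0'}]
cleaned = clean_rows(rows)

# Write to an in-memory CSV to show the result; with pandas the equivalent is
# pd.DataFrame(cleaned).to_csv('./123.csv', index=False, encoding='utf-8-sig').
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=['title', 'strings', 'score'])
writer.writeheader()
writer.writerows(cleaned)
print(buffer.getvalue())
```

Collapsing each run to one ordinary space, rather than deleting it, keeps "director" and "cast" from running together. The cleaned text also contains no U+00A0, so even `encoding='gbk'` no longer fails on it. In the posted code, the same effect can be had by wrapping the `get_text()` result in `parse_page` with `clean_text(...)`.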


(Guangdong ICP filing 粤ICP备18085999号-1 | Guangdong public security filing 44051102000585)
